In-Kwon Lee

Semantic Guidance Tuning for Text-To-Image Diffusion Models

Dec 26, 2023

Abstract: Recent advancements in Text-to-Image (T2I) diffusion models have demonstrated impressive success in generating high-quality images with zero-shot generalization capabilities. Yet, current models struggle to closely adhere to prompt semantics, often misrepresenting or overlooking specific attributes. To address this, we propose a simple, training-free approach that modulates the guidance direction of diffusion models during inference. We first decompose the prompt semantics into a set of concepts, and monitor the guidance trajectory in relation to each concept. Our key observation is that deviations in the model's adherence to prompt semantics are highly correlated with divergence of the guidance from one or more of these concepts. Based on this observation, we devise a technique to steer the guidance direction towards any concept from which the model diverges. Extensive experimentation validates that our method improves the semantic alignment of images generated by diffusion models in response to prompts. Project page is available at: https://korguy.github.io/
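The monitor-and-steer idea described in this abstract can be illustrated with a toy sketch. Everything here is an assumption made for illustration, not the authors' implementation: the function names (`cosine`, `steer_guidance`), the use of cosine similarity as the divergence measure, and the `threshold` and `strength` parameters are all hypothetical. Noise predictions are stood in for by plain lists of floats, and the guidance direction is taken to be the usual classifier-free difference between the conditional and unconditional predictions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def steer_guidance(eps_uncond, eps_prompt, eps_concepts,
                   threshold=0.5, strength=0.5):
    """Toy steering step (hypothetical, for illustration only).

    eps_uncond   -- unconditional noise prediction
    eps_prompt   -- noise prediction conditioned on the full prompt
    eps_concepts -- dict: concept name -> noise prediction for that concept
    Returns the steered guidance direction and the diverged concept names.
    """
    # Guidance direction for the full prompt (classifier-free difference).
    guidance = [p - u for p, u in zip(eps_prompt, eps_uncond)]
    steered = list(guidance)
    diverged = []
    for name, eps_c in eps_concepts.items():
        concept_dir = [c - u for c, u in zip(eps_c, eps_uncond)]
        # Assumed divergence test: low alignment with the concept direction.
        if cosine(guidance, concept_dir) < threshold:
            diverged.append(name)
            # Nudge the guidance towards the neglected concept.
            steered = [s + strength * d for s, d in zip(steered, concept_dir)]
    return steered, diverged
```

In a real sampler the steered direction would replace the plain guidance term at each denoising step; how divergence is actually measured and corrected is specified in the paper, not here.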

Utilizing Task-Generic Motion Prior to Recover Full-Body Motion from Very Sparse Signals

Aug 30, 2023

Abstract: The most popular type of device used to track a user's posture in a virtual reality experience consists of a head-mounted display and two hand-held controllers. However, due to the limited number of tracking sensors (three in total), faithfully recovering the user's full-body pose is challenging, limiting the potential for interactions among simulated user avatars within the virtual world. Therefore, recent studies have attempted to reconstruct full-body poses using neural networks that utilize previously learned human poses or accept a series of past poses over a short period. In this paper, we propose a method that utilizes information from a neural motion prior to improve the accuracy of the reconstructed user motion. Our approach aims to reconstruct the user's full-body poses by predicting the latent representation of the user's overall motion from limited input signals and integrating this information with tracking sensor inputs. This is based on the premise that the ultimate goal of pose reconstruction is to reconstruct the motion, which is a series of poses. Our results show that this integration enables more accurate reconstruction of the user's full-body motion, particularly enhancing the robustness of lower body motion reconstruction from impoverished signals. Web: https://mjsh34.github.io/mp-sspe/

F-RDW: Redirected Walking with Forecasting Future Position

Apr 07, 2023

Abstract: To provide better VR experiences to users, existing predictive methods of Redirected Walking (RDW) exploit future information to reduce the number of reset occurrences. However, such methods often impose a precondition during deployment, either on the virtual environment's layout or on the user's walking direction, which limits their general applicability. To tackle this challenge, we propose a novel mechanism, F-RDW, that is twofold: it (1) forecasts the future information of a user in the virtual space without any assumptions, and (2) fuses this information into the operation of existing RDW methods. The backbone of the first step is an LSTM-based model that ingests the user's spatial and eye-tracking data to predict the user's future position in the virtual space, and the following step feeds those predicted values into existing RDW methods (such as MPCRed, S2C, TAPF, and ARC) while respecting their internal mechanisms in applicable ways. The results of our simulation test and user study demonstrate the significance of future information when using RDW in small physical spaces or complex environments. We show that the proposed mechanism significantly reduces the number of resets and increases the traveled distance between resets, hence augmenting the redirection performance of all RDW methods explored in this work.
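The two evaluation quantities named in this abstract, the number of resets and the traveled distance between resets, can be computed from a logged trajectory roughly as follows. This is a minimal sketch under stated assumptions: the function name `reset_metrics`, the input layout (one 2D position per step plus the step indices at which a reset occurred), and the choice to report the mean path length per reset-terminated segment are all hypothetical and may differ from the definitions used in the paper.

```python
import math


def reset_metrics(positions, reset_steps):
    """Count resets and average the path length walked before each one.

    positions   -- list of (x, y) tuples, one per simulation step
    reset_steps -- step indices (>= 1) at which a reset was triggered
    Returns (number_of_resets, mean_distance_between_resets).
    """
    resets = set(reset_steps)
    segments = []   # path length of each segment that ended in a reset
    walked = 0.0    # path length accumulated since the last reset
    for i in range(1, len(positions)):
        walked += math.dist(positions[i - 1], positions[i])
        if i in resets:
            segments.append(walked)
            walked = 0.0
    num_resets = len(segments)
    # With no resets, report the total distance walked instead.
    mean_dist = sum(segments) / num_resets if num_resets else walked
    return num_resets, mean_dist
```

Under this definition, a controller that performs better yields a smaller first value and a larger second value for the same walking task.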

Multi-Layered Unseen Garments Draping Network

Apr 07, 2023

Abstract: While recent AI-based draping networks have significantly advanced the ability to simulate the appearance of clothes worn by 3D human models, the handling of multi-layered garments remains a challenging task. This paper presents a model for draping multi-layered garments that are unseen during the training process. Our proposed framework consists of three stages: garment embedding, single-layered garment draping, and untangling. The model represents a garment independently of its topological structure by mapping it onto the UV map of a human body model, which allows it to handle previously unseen garments. In the single-layered garment draping phase, the model sequentially drapes all garments in each layer on the body without considering interactions between them. The untangling phase utilizes a GNN-based network to model the interaction between the garments of different layers, enabling the simulation of complex multi-layered clothing. The proposed model demonstrates strong performance on both unseen synthetic and real garment reconstruction data on a diverse range of human body shapes and poses.

Flexible Networks for Learning Physical Dynamics of Deformable Objects

Dec 07, 2021

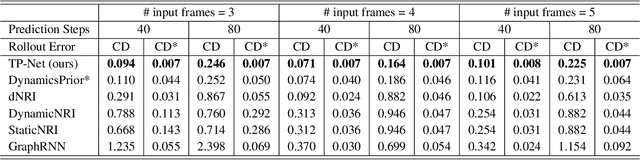

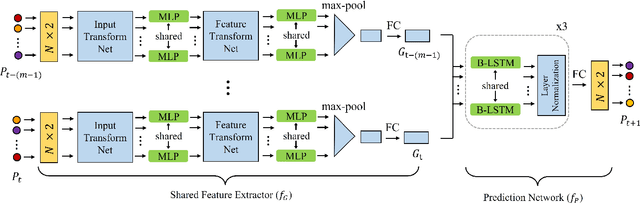

Abstract: Learning the physical dynamics of deformable objects with particle-based representation has been the objective of many computational models in machine learning. While several state-of-the-art models have achieved this objective in simulated environments, most existing models impose a precondition, such that the input is a sequence of ordered point sets - i.e., the order of the points in each point set must be the same across the entire input sequence. This prevents the model from generalizing to real-world data, which is considered to be a sequence of unordered point sets. In this paper, we propose a model named time-wise PointNet (TP-Net) that solves this problem by directly consuming a sequence of unordered point sets to infer the future state of a deformable object with particle-based representation. Our model consists of a shared feature extractor that extracts global features from each input point set in parallel and a prediction network that aggregates and reasons on these features for future prediction. The key concept of our approach is that we use global features rather than local features to achieve invariance to input permutations and ensure the stability and scalability of our model. Experiments demonstrate that our model achieves state-of-the-art performance on both synthetic and real-world datasets, with real-time prediction speed. We provide quantitative and qualitative analysis of why our approach is more effective and efficient than existing approaches.
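The claim that global features give invariance to input permutations can be checked with a small PointNet-style sketch: a shared per-point layer followed by an element-wise maximum over all points. This is an illustrative assumption about the mechanism, not TP-Net itself; the function names, the single linear-plus-ReLU layer, and the fixed weights are hypothetical, and the prediction network is omitted.

```python
import random


def point_features(point, weights):
    """Shared per-point layer: a linear map followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, point)))
            for row in weights]


def global_feature(point_set, weights):
    """Element-wise max over all per-point features.

    Max pooling is a symmetric function, so the result does not
    depend on the order of the points in point_set.
    """
    per_point = [point_features(p, weights) for p in point_set]
    return [max(column) for column in zip(*per_point)]


def encode_sequence(frames, weights):
    """Apply the same extractor to every frame of an unordered sequence."""
    return [global_feature(frame, weights) for frame in frames]


if __name__ == "__main__":
    weights = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]  # hypothetical fixed weights
    frame = [(0.0, 1.0, 2.0), (3.0, -1.0, 0.5), (-2.0, 0.0, 1.0)]
    shuffled = frame[:]
    random.shuffle(shuffled)
    # Both orderings produce the same global feature vector.
    print(global_feature(frame, weights) == global_feature(shuffled, weights))
```

Because each frame is encoded independently, the frames can be processed in parallel, and the points in one frame need not correspond by index to the points in the next.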

Discovering Latent Representations of Relations for Interacting Systems

Nov 10, 2021

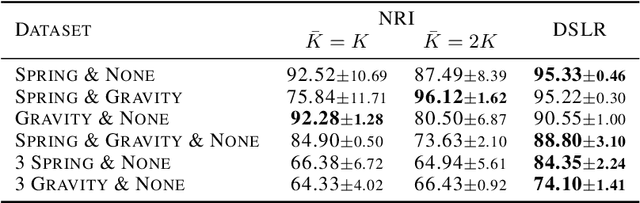

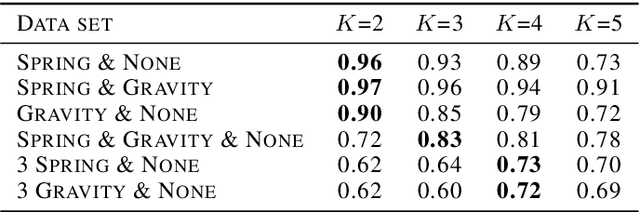

Abstract: Systems whose entities interact with each other are common. In many interacting systems, it is difficult to observe the relations between entities, which are the key information for analyzing the system. In recent years, there has been increasing interest in discovering the relationships between entities using graph neural networks. However, existing approaches are difficult to apply if the number of relations is unknown or if the relations are complex. We propose the DiScovering Latent Relation (DSLR) model, which is flexibly applicable even if the number of relations is unknown or many types of relations exist. The flexibility of our DSLR model comes from the design of our encoder, which represents the relation between entities in a latent space rather than as a discrete variable, and of our decoder, which can handle many types of relations. We performed experiments on synthetic and real-world graph data with various relationships between entities, and compared the qualitative and quantitative results with other approaches. The experiments show that the proposed method is suitable for analyzing dynamic graphs with an unknown number of complex relations.

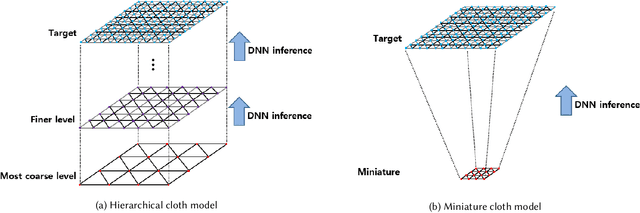

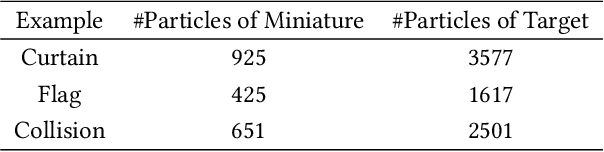

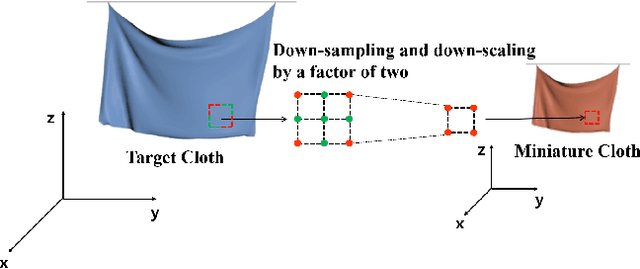

Efficient Cloth Simulation using Miniature Cloth and Upscaling Deep Neural Networks

Jul 09, 2019

Abstract: Cloth simulation requires a fast and stable method for interactively and realistically visualizing fabric materials using computer graphics. We propose an efficient cloth simulation method using miniature cloth simulation and upscaling Deep Neural Networks (DNN). The upscaling DNNs generate the target cloth simulation from the results of physically-based simulations of a miniature cloth that has similar physical properties to those of the target cloth. We have verified the utility of the proposed method through experiments, and the results demonstrate that it is possible to generate fast and stable cloth simulations under various conditions.

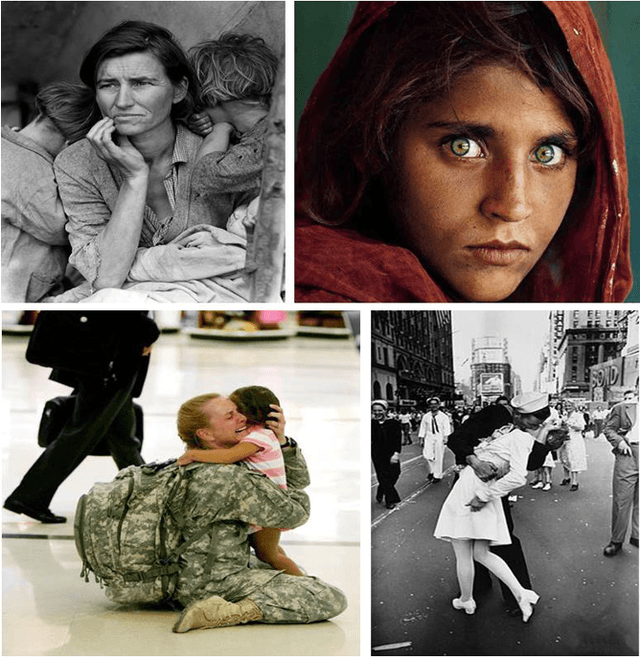

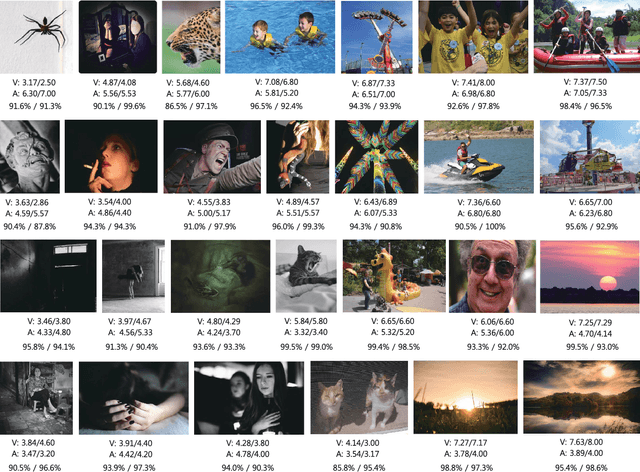

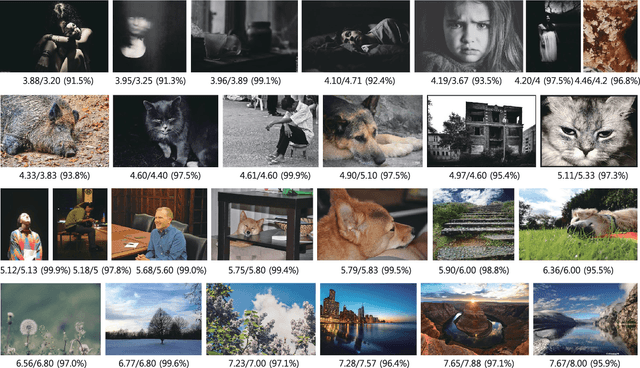

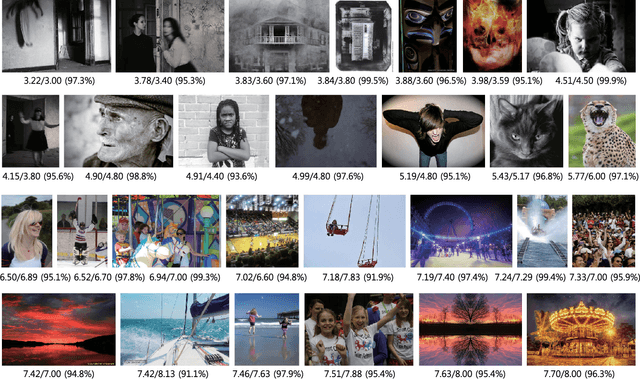

Building Emotional Machines: Recognizing Image Emotions through Deep Neural Networks

Jul 03, 2017

Abstract: An image is a very effective tool for conveying emotions. Many researchers have investigated computing image emotions by using various features extracted from images. In this paper, we focus on two high-level features, the object and the background, and assume that the semantic information of images is a good cue for predicting emotion. An object is one of the most important elements that define an image, and we find through experiments that there is a high correlation between the object and the emotion in images. Even with the same object, there may be a slight difference in emotion due to different backgrounds, and we use the semantic information of the background to improve the prediction performance. By combining the different levels of features, we build an emotion-based feed-forward deep neural network which produces the emotion values of a given image. The output emotion values in our framework are continuous values in the 2-dimensional space (Valence and Arousal), which are more effective in describing emotions than a small number of emotion categories. Experiments confirm the effectiveness of our network in predicting the emotion of images.
