Ilknur Kabul

AutoNLU: Detecting, root-causing, and fixing NLU model errors

Oct 12, 2021

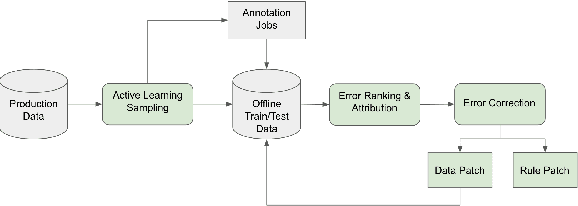

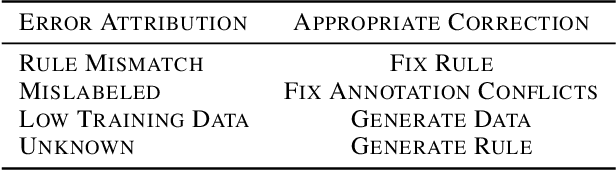

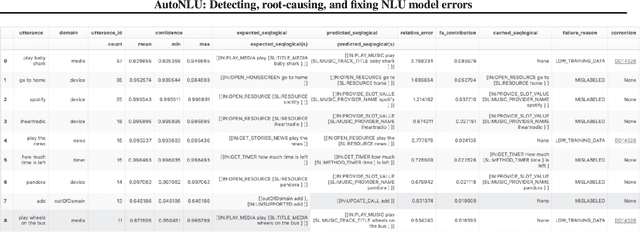

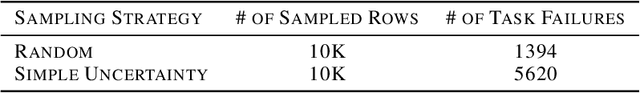

Abstract:Improving the quality of Natural Language Understanding (NLU) models, and more specifically, task-oriented semantic parsing models, in production is a cumbersome task. In this work, we present a system called AutoNLU, which we designed to scale the NLU quality improvement process. It adds automation to three key steps: detection, attribution, and correction of model errors, i.e., bugs. We detected four times more failed tasks than with random sampling, finding that even a simple active learning sampling method on an uncalibrated model is surprisingly effective for this purpose. The AutoNLU tool empowered linguists to fix ten times more semantic parsing bugs than with prior manual processes, auto-correcting 65% of all identified bugs.

Robust Deep Learning with Active Noise Cancellation for Spatial Computing

Nov 16, 2020

Abstract:This paper proposes CANC, a Co-teaching Active Noise Cancellation method, applied in spatial computing to address deep learning trained with extreme noisy labels. Deep learning algorithms have been successful in spatial computing for land or building footprint recognition. However a lot of noise exists in ground truth labels due to how labels are collected in spatial computing and satellite imagery. Existing methods to deal with extreme label noise conduct clean sample selection and do not utilize the remaining samples. Such techniques can be wasteful due to the cost of data retrieval. Our proposed CANC algorithm not only conserves high-cost training samples but also provides active label correction to better improve robust deep learning with extreme noisy labels. We demonstrate the effectiveness of CANC for building footprint recognition for spatial computing.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge