Igor Tchappi

Benchmarking Pre-Trained Time Series Models for Electricity Price Forecasting

Jun 09, 2025

Abstract:Accurate electricity price forecasting (EPF) is crucial for effective decision-making in power trading on the spot market. While recent advances in generative artificial intelligence (GenAI) and pre-trained large language models (LLMs) have inspired the development of numerous time series foundation models (TSFMs) for time series forecasting, their effectiveness in EPF remains uncertain. To address this gap, we benchmark several state-of-the-art pre-trained models (Chronos-Bolt, Chronos-T5, TimesFM, Moirai, Time-MoE, and TimeGPT) against established statistical and machine learning (ML) methods for EPF. Using 2024 day-ahead auction (DAA) electricity prices from Germany, France, the Netherlands, Austria, and Belgium, we generate daily forecasts with a one-day horizon. Chronos-Bolt and Time-MoE emerge as the strongest among the TSFMs, performing on par with traditional models. However, the biseasonal MSTL model, which captures daily and weekly seasonality, stands out for its consistent performance across countries and evaluation metrics, with no TSFM statistically outperforming it.

KG-HTC: Integrating Knowledge Graphs into LLMs for Effective Zero-shot Hierarchical Text Classification

May 08, 2025

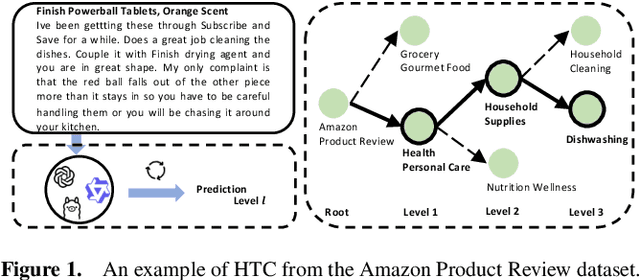

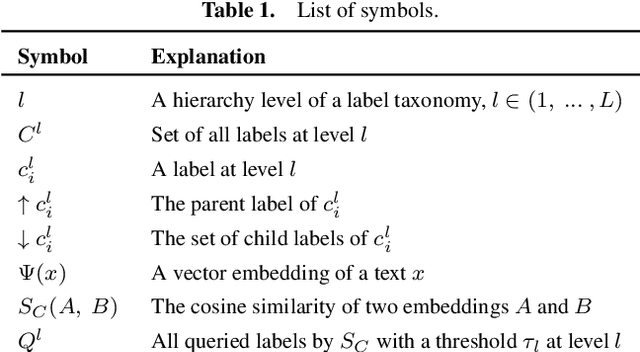

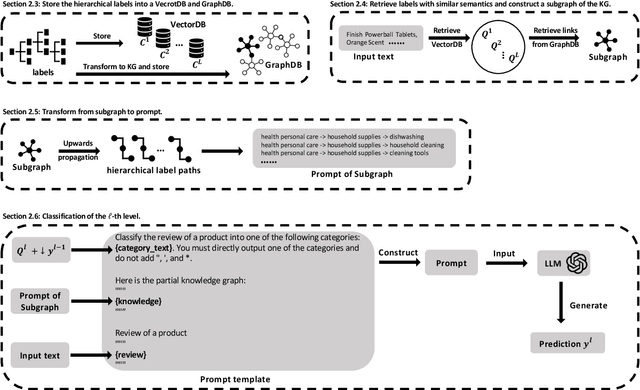

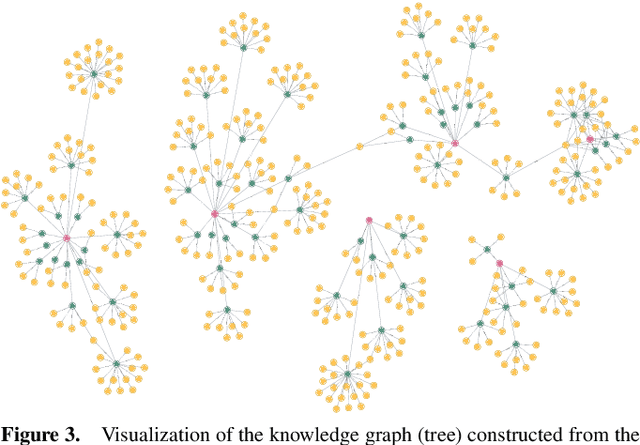

Abstract:Hierarchical Text Classification (HTC) involves assigning documents to labels organized within a taxonomy. Most previous research on HTC has focused on supervised methods. However, in real-world scenarios, employing supervised HTC can be challenging due to a lack of annotated data. Moreover, HTC often faces issues with large label spaces and long-tail distributions. In this work, we present Knowledge Graphs for zero-shot Hierarchical Text Classification (KG-HTC), which aims to address these challenges of HTC in applications by integrating knowledge graphs with Large Language Models (LLMs) to provide structured semantic context during classification. Our method retrieves relevant subgraphs from knowledge graphs related to the input text using a Retrieval-Augmented Generation (RAG) approach. Our KG-HTC can enhance LLMs to understand label semantics at various hierarchy levels. We evaluate KG-HTC on three open-source HTC datasets: WoS, DBpedia, and Amazon. Our experimental results show that KG-HTC significantly outperforms three baselines in the strict zero-shot setting, particularly achieving substantial improvements at deeper levels of the hierarchy. This evaluation demonstrates the effectiveness of incorporating structured knowledge into LLMs to address HTC's challenges in large label spaces and long-tailed label distributions. Our code is available at: https://github.com/QianboZang/KG-HTC.

Towards Effective EU E-Participation: The Development of AskThePublic

Apr 04, 2025

Abstract:E-participation platforms can be an important asset for governments in increasing trust and fostering democratic societies. By engaging non-governmental and private institutions, domain experts, and even the general public, policymakers can make informed and inclusive decisions. Drawing on the Media Richness Theory and applying the Design Science Research method, we explore how a chatbot can be designed to improve the effectiveness of the policy-making process of existing citizen involvement platforms. Leveraging the Have Your Say platform, which solicits feedback on European Commission initiatives and regulations, we create a Large Language Model based chatbot called AskThePublic, providing policymakers, journalists, researchers, and interested citizens with a convenient channel to explore and engage with public input. Results from 11 semi-structured interviews show that participants value the interactive and structured responses as well as the enhanced language capabilities, which increases their likelihood of engaging with AskThePublic over the existing platform. An outlook for future iterations is provided and discussed with regard to the perspectives of the different stakeholders.

Noise Augmented Fine Tuning for Mitigating Hallucinations in Large Language Models

Apr 04, 2025

Abstract:Large language models (LLMs) often produce inaccurate or misleading content, known as hallucinations. To address this challenge, we introduce Noise-Augmented Fine-Tuning (NoiseFiT), a novel framework that leverages adaptive noise injection based on the signal-to-noise ratio (SNR) to enhance model robustness. In particular, NoiseFiT selectively perturbs layers identified as either high-SNR (more robust) or low-SNR (potentially under-regularized) using dynamically scaled Gaussian noise. We further propose a hybrid loss that combines standard cross-entropy, soft cross-entropy, and consistency regularization to ensure stable and accurate outputs under noisy training conditions. Our theoretical analysis shows that adaptive noise injection is both unbiased and variance-preserving, providing strong guarantees for convergence in expectation. Empirical results on multiple test and benchmark datasets demonstrate that NoiseFiT significantly reduces hallucination rates, often improving or matching baseline performance in key tasks. These findings highlight the promise of noise-driven strategies for achieving robust, trustworthy language modeling without incurring prohibitive computational overhead. Given the comprehensive and detailed nature of our experiments, we have publicly released the fine-tuning logs, benchmark evaluation artifacts, and source code online at W&B, Hugging Face, and GitHub, respectively, to foster further research, accessibility, and reproducibility.

CognArtive: Large Language Models for Automating Art Analysis and Decoding Aesthetic Elements

Feb 04, 2025

Abstract:Art, as a universal language, can be interpreted in diverse ways, with artworks embodying profound meanings and nuances. The advent of Large Language Models (LLMs) and the availability of Multimodal Large Language Models (MLLMs) raise the question of how these transformative models can be used to assess and interpret the artistic elements of artworks. While research has been conducted in this domain, to the best of our knowledge, a deep and detailed understanding of the technical and expressive features of artworks using LLMs has not been explored. In this study, we investigate the automation of a formal art analysis framework to rapidly analyze a large number of artworks and examine how their patterns evolve over time. We explore how LLMs can decode artistic expressions, visual elements, composition, and techniques, revealing emerging patterns that develop across periods. Finally, we discuss the strengths and limitations of LLMs in this context, emphasizing their ability to process vast quantities of art-related data and generate insightful interpretations. Due to the exhaustive and granular nature of the results, we have developed interactive data visualizations, available online at https://cognartive.github.io/, to enhance understanding and accessibility.

Towards Explainability for a Civilian UAV Fleet Management using an Agent-based Approach

Sep 22, 2019

Abstract:This paper presents an initial design concept and specification of a civilian Unmanned Aerial Vehicle (UAV) management simulation system that focuses on explainability for the human-in-the-loop control of semi-autonomous UAVs. The goal of the system is to facilitate operator intervention in critical scenarios (e.g., to avoid safety issues or financial risks). Explainability is supported via user-friendly abstractions on Belief-Desire-Intention agents. To evaluate the effectiveness of the system, a human-computer interaction study is proposed.
