Ignacio de Loyola Páez-Ubieta

Transferability of labels between multilens cameras

Jan 20, 2025

Abstract: In this work, a new method for automatically extending Bounding Box (BB) and mask labels across the different channels of multilens cameras is presented. The proposed method combines the well-known phase correlation method with a refinement process. In the first step, images are aligned by localizing the intensity peak obtained in the spatial domain after performing the cross-correlation in the frequency domain. The second step obtains the best possible transformation through an iterative process that maximises the IoU (Intersection over Union) metric. Results show that, with this method, labels can be transferred across the different lenses of a camera with an accuracy over 90% in most cases, with the whole process taking only 65 ms. Once the transformations are obtained, artificial RGB images are generated and labelled, and the labels are then transferred to each of the other lenses. This work will allow this type of camera to be used in fields beyond satellite and medical imagery, and makes it possible to label even objects that are invisible in the visible spectrum.
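The two steps described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes the channels differ only by an integer translation, and the function names (`phase_correlation_shift`, `mask_iou`, `refine_shift`) and the greedy one-pixel search used for the refinement are hypothetical choices made for illustration.

```python
import numpy as np


def phase_correlation_shift(ref, mov):
    """Estimate the integer (dy, dx) shift such that mov ~ ref shifted by (dy, dx)."""
    f_ref = np.fft.fft2(ref)
    f_mov = np.fft.fft2(mov)
    # Normalised cross-power spectrum: keeps only the phase difference.
    cross = f_mov * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12
    # Back in the spatial domain, the peak location encodes the translation.
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around).
    dy, dx = (p if p <= s // 2 else p - s for p, s in zip(peak, corr.shape))
    return int(dy), int(dx)


def mask_iou(a, b):
    """Intersection over Union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union else 0.0


def refine_shift(src_mask, dst_mask, init, max_iters=20):
    """Greedy one-pixel search around `init` that maximises mask IoU.

    Assumption: this hill climb stands in for the refinement step, whose
    details the abstract does not give.
    """
    best = init
    best_iou = mask_iou(np.roll(src_mask, best, axis=(0, 1)), dst_mask)
    for _ in range(max_iters):
        improved = False
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            cand = (best[0] + dy, best[1] + dx)
            iou = mask_iou(np.roll(src_mask, cand, axis=(0, 1)), dst_mask)
            if iou > best_iou:
                best, best_iou, improved = cand, iou, True
        if not improved:
            break
    return best, best_iou
```

In this sketch the coarse shift would come from `phase_correlation_shift` applied to two channel images, and `refine_shift` would then adjust it using a mask labelled in one channel and a reference mask in the other. Real multilens data may also need rotation or scale terms, which this translation-only version ignores.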

QDGset: A Large Scale Grasping Dataset Generated with Quality-Diversity

Oct 03, 2024

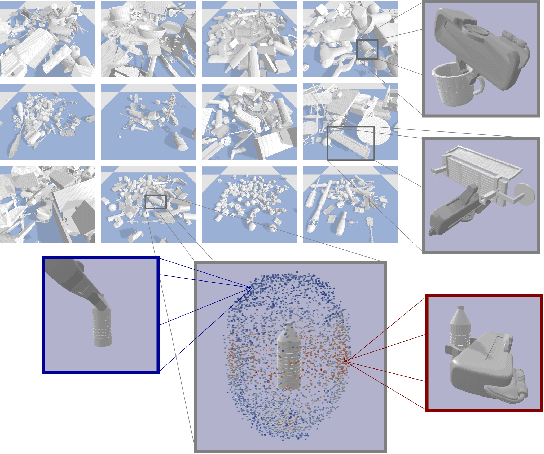

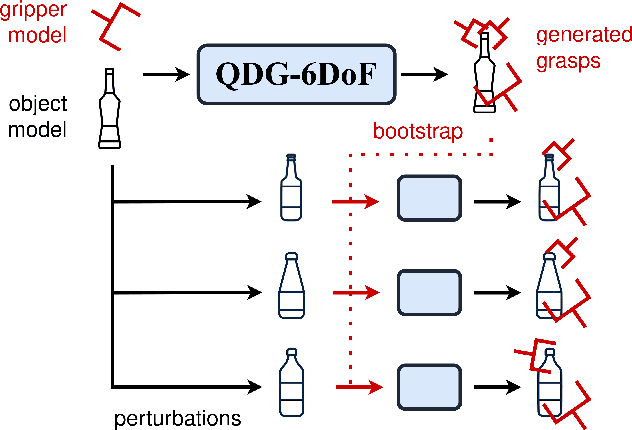

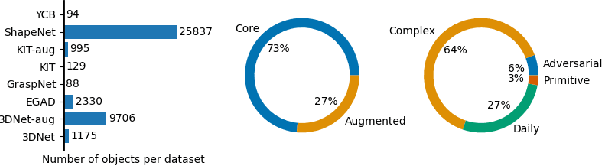

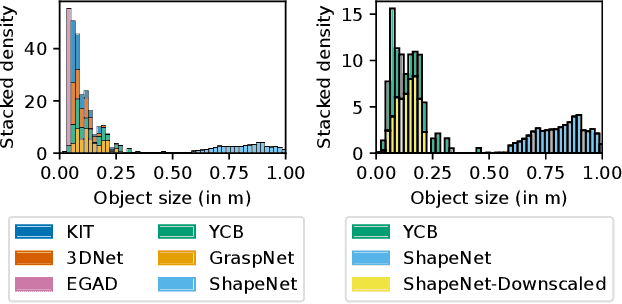

Abstract: Recent advances in AI have led to significant results in robotic learning, but skills like grasping remain only partially solved. Many recent works exploit synthetic grasping datasets to learn to grasp unknown objects. However, those datasets were generated using simple, prior-based grasp sampling methods. Recently, Quality-Diversity (QD) algorithms have been shown to make grasp sampling significantly more efficient. In this work, we extend QDG-6DoF, a QD framework for generating object-centric grasps, to scale up the production of synthetic grasping datasets. We propose a data augmentation method that combines the transformation of object meshes with transfer learning from previous grasping repertoires. The conducted experiments show that this approach reduces the number of required evaluations per discovered robust grasp by up to 20%. We used this approach to generate QDGset, a dataset of 6DoF grasp poses that contains about 3.5 and 4.5 times more grasps and objects, respectively, than the previous state-of-the-art. Our method allows anyone to easily generate data, eventually contributing to a large-scale collaborative dataset of synthetic grasps.

Visual-tactile manipulation to collect household waste in outdoor

Jul 15, 2024

Abstract: This work presents a perception system for robotic manipulation that assists in navigation and in the classification and collection of household waste in outdoor environments. The system is made up of optical tactile sensors, RGBD cameras and a LiDAR. These sensors are integrated on a mobile platform with a robot manipulator and a robotic gripper. Our system is divided into three software modules: two are vision-based and the third is tactile-based. The vision-based modules use CNNs to localize and recognize solid household waste and to estimate grasping points. The tactile-based module, which also uses CNNs and image processing, adjusts the gripper opening to control the grasp from touch data. Our proposal achieves localization errors of around 6%, a recognition accuracy of 98%, and a stable grasp in 91% of the attempts. The combined runtime of the three modules is less than 750 ms.

* in Spanish language

Vision and Tactile Robotic System to Grasp Litter in Outdoor Environments

Jul 11, 2024

Abstract: The accumulation of litter is increasing in many places and is consequently becoming a problem that must be dealt with. In this paper, we present a robotic manipulator system to collect litter in outdoor environments. This system has three functionalities. Firstly, it uses colour images to detect and recognise litter comprising different materials. Secondly, depth data are combined with the pixels of waste objects to compute a 3D location and to segment three-dimensional point clouds of the litter items in the scene. The grasp in 3 Degrees of Freedom (DoFs) is then estimated, for a robot arm with a gripper, from the segmented cloud of each instance of waste. Finally, two tactile-based algorithms are implemented and employed to provide the gripper with a sense of touch. This work uses two low-cost visual-based tactile sensors at the fingertips. One of the algorithms detects contact between the gripper and solid waste from tactile images, while the other detects slippage in order to prevent grasped objects from falling. Our proposal was successfully tested through extensive experimentation with objects varying in size, texture, geometry and material, in different outdoor environments (a tiled pavement, a stone/soil surface, and grass). Our system achieved an average detection and Collection Success Rate (CSR) of 94% in terms of overall performance, and of 80% for collecting litter items at the first attempt.
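The second functionality, combining depth data with the pixels of a detected object to obtain a 3D location, can be sketched as follows. This is an illustration under stated assumptions rather than the paper's code: it assumes a pinhole camera model with known intrinsics (`fx`, `fy`, `cx`, `cy`), a depth image in metres indexed as `depth[v][u]`, and a plain centroid as the 3D location. The function names are hypothetical.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres to camera-frame (x, y, z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def object_centroid(pixels, depth, fx, fy, cx, cy):
    """Mean 3D point of an object's pixels, skipping invalid (zero) depth.

    `pixels` is an iterable of (u, v) coordinates belonging to the object.
    Returns None if no pixel has valid depth.
    """
    points = [
        backproject(u, v, depth[v][u], fx, fy, cx, cy)
        for u, v in pixels
        if depth[v][u] > 0
    ]
    if not points:
        return None
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))
```

The list of back-projected points is also the segmented point cloud of that object, which is the input a grasp estimator would typically work from.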
