Hunsang Lee

Emerging Property of Masked Token for Effective Pre-training

Apr 12, 2024

Abstract: Driven by the success of Masked Language Modeling (MLM), self-supervised learning for computer vision has been invigorated by Masked Image Modeling (MIM), which has played a central role in recent breakthroughs. Despite the achievements of MIM across various downstream tasks, its overall efficiency is occasionally hampered by the lengthy pre-training phase. This paper presents the perspective that optimizing masked tokens is a means of addressing this issue. We first explore the inherent properties that a masked token ought to possess. Among these properties, we focus on articulating and emphasizing the 'data singularity' attribute inherent in masked tokens. Through a comprehensive analysis of the heterogeneity between masked tokens and visible tokens within pre-trained models, we propose a novel approach termed masked token optimization (MTO), specifically designed to improve model efficiency through weight recalibration and the enhancement of the key property of masked tokens. The proposed method is an adaptable solution that integrates seamlessly into any MIM approach that leverages masked tokens. As a result, MTO considerably improves pre-training efficiency, yielding an approximately 50% reduction in the pre-training epochs required to attain the converged performance of recent approaches.

Adaptive confidence thresholding for semi-supervised monocular depth estimation

Sep 27, 2020

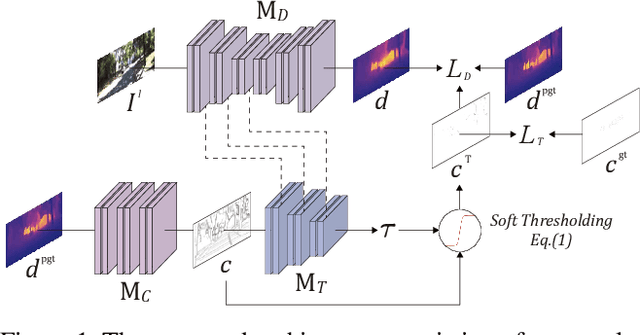

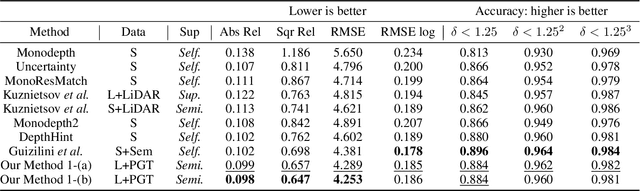

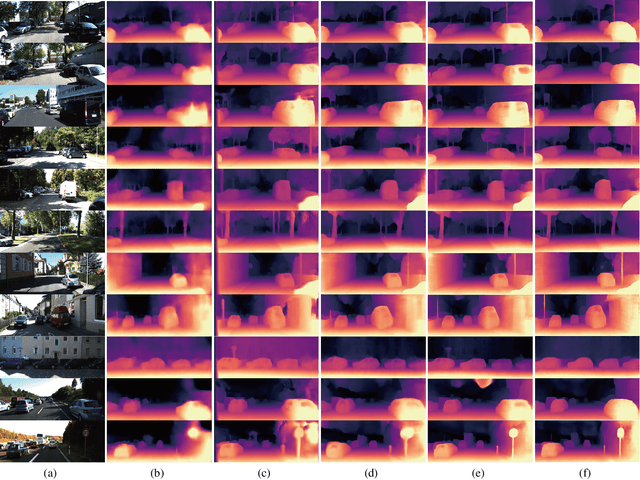

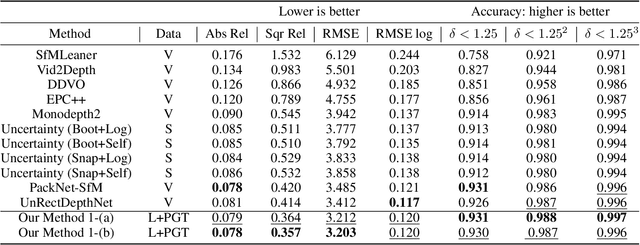

Abstract: Self-supervised monocular depth estimation has become an appealing solution to the lack of ground truth labels, but its reconstruction loss often produces over-smoothed results across object boundaries and cannot handle occlusion explicitly. In this paper, we propose a new approach that leverages pseudo ground truth depth maps of stereo images generated by pretrained stereo matching methods. Our method comprises three subnetworks: a monocular depth network, a confidence network, and a threshold network. The confidence map of the pseudo ground truth depth map is first estimated to mitigate the performance degradation caused by inaccurate pseudo depth maps. To cope with the prediction error of the confidence map itself, we also propose a threshold network that learns the threshold τ in an adaptive manner. The confidence map is thresholded via a differentiable soft-thresholding operator using this truncation boundary τ. The pseudo depth labels filtered by the thresholded confidence map are finally used to supervise the monocular depth network. To apply the proposed method to various training datasets, we introduce a network-wise training strategy that transfers the knowledge learned from one dataset to another. Experimental results demonstrate superior performance to state-of-the-art monocular depth estimation methods. Lastly, we show that the threshold network can also be used to improve the performance of existing confidence estimation approaches.
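The abstract does not give the form of the soft-thresholding operator, so the following is only a minimal sketch of the general idea, assuming a sigmoid-shaped soft threshold. The function names, the `sharpness` parameter, the L1 loss, and the flat lists standing in for 2D maps are all illustrative assumptions, not the authors' implementation.

```python
import math

def soft_threshold(confidence, tau, sharpness=10.0):
    """Smooth stand-in for a hard threshold (assumed sigmoid form).

    Returns values near 0 where confidence < tau and near 1 where
    confidence > tau, while staying differentiable in both inputs.
    `sharpness` is a hypothetical parameter controlling the steepness.
    """
    return [1.0 / (1.0 + math.exp(-sharpness * (c - tau))) for c in confidence]

def masked_l1_loss(pred_depth, pseudo_depth, mask, eps=1e-8):
    """L1 loss against pseudo labels, weighted by the soft mask.

    Pixels with low thresholded confidence contribute little supervision.
    The sum is normalised by the total mask weight.
    """
    weighted = sum(m * abs(p - q) for p, q, m in zip(pred_depth, pseudo_depth, mask))
    return weighted / (sum(mask) + eps)

# Toy example: four "pixels" flattened into lists.
confidence = [0.05, 0.45, 0.55, 0.95]   # output of a confidence network (assumed)
tau = 0.5                               # output of a threshold network (assumed)
mask = soft_threshold(confidence, tau)

pred_depth = [1.0, 2.0, 3.0, 4.0]       # monocular depth prediction
pseudo_depth = [1.5, 2.0, 3.5, 4.0]     # pseudo ground truth from stereo matching
loss = masked_l1_loss(pred_depth, pseudo_depth, mask)
```

In this toy case the error at the first pixel is almost ignored because its confidence falls well below τ, while the error at the third pixel still contributes. In a real training setup the same computation would be written with an automatic-differentiation framework so that gradients can reach the threshold network.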
