Humayun Irshad

Class-specific Anchoring Proposal for 3D Object Recognition in LIDAR and RGB Images

Jul 22, 2019

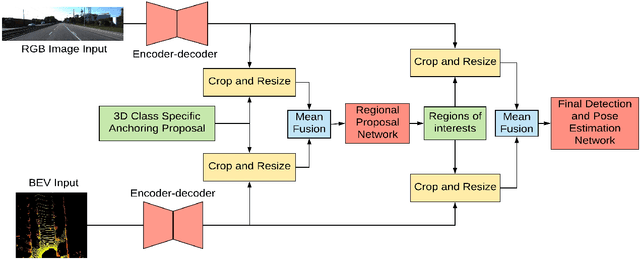

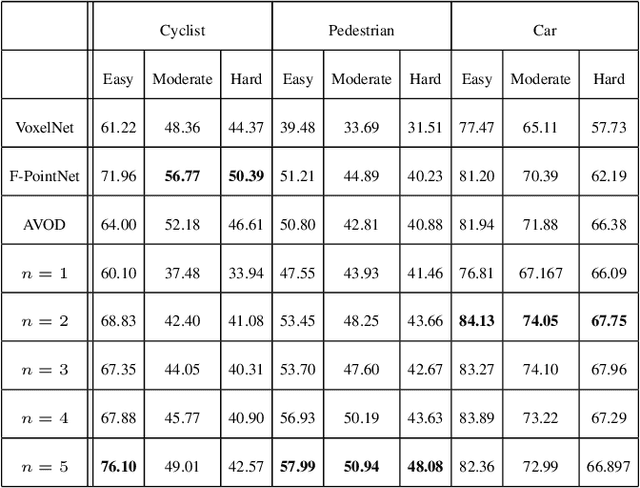

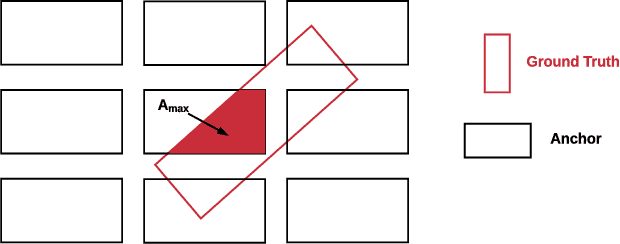

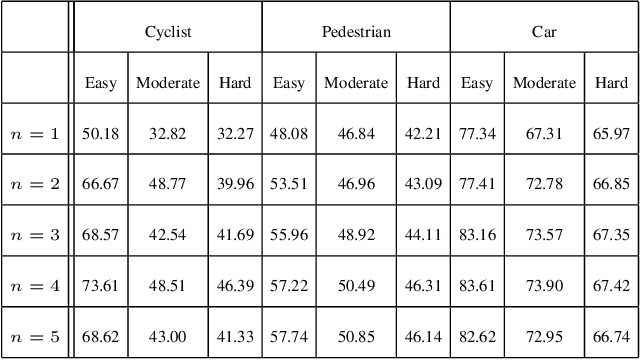

Abstract: Detecting objects in two dimensions is often insufficient for real-life applications in which the surrounding environment must be accurately recognized and oriented in three dimensions (3D), as in autonomous driving. Accurate and efficient 3D object detection is therefore becoming increasingly relevant to a wide range of industrial applications and is attracting growing attention from researchers. Building systems that detect objects in 3D is challenging, however, because it relies on the multi-modal fusion of data from different sources. In this paper, we study the effects of anchoring using the current state-of-the-art 3D object detector and propose a Class-specific Anchoring Proposal (CAP) strategy that clusters anchors by object size and aspect ratio. The proposed anchoring strategy increased detection accuracy by 7.19%, 8.13% and 8.8% on the Easy, Moderate and Hard settings of the pedestrian class, by 2.19%, 2.17% and 1.27% on the Easy, Moderate and Hard settings of the car class, and by 12.1% on the Easy setting of the cyclist class. We also show that clustering in the anchoring process significantly improves the performance of the region proposal network in proposing regions of interest. Finally, we propose the best number of clusters for each object class in the KITTI dataset, which significantly improves the performance of the detection model.
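The idea of deriving anchors per class by clustering object dimensions can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not name the clustering algorithm, so plain k-means is assumed here, and the function names `cluster_anchor_sizes` and `class_specific_anchors`, the `(length, width, height)` layout, and the sample values are all hypothetical.

```python
import numpy as np


def cluster_anchor_sizes(dims, k, iters=50, seed=0):
    """Run plain k-means on rows of (length, width, height); return k centroids.

    Assumption: k-means stands in for whatever clustering the paper uses.
    """
    dims = np.asarray(dims, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise centroids from k distinct training boxes.
    centroids = dims[rng.choice(len(dims), size=k, replace=False)]
    for _ in range(iters):
        # Euclidean distance from every box to every centroid.
        dist = np.linalg.norm(dims[:, None, :] - centroids[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Recompute each centroid; keep the old one if its cluster is empty.
        updated = np.array([
            dims[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return centroids


def class_specific_anchors(boxes_by_class, k_by_class):
    """Cluster each class separately, with its own number of clusters."""
    return {
        cls: cluster_anchor_sizes(dims, k_by_class[cls])
        for cls, dims in boxes_by_class.items()
    }


if __name__ == "__main__":
    # Made-up box dimensions in metres, for illustration only.
    boxes = {
        "car": [[3.9, 1.6, 1.5], [4.1, 1.7, 1.5], [4.6, 1.8, 1.6], [3.5, 1.5, 1.4]],
        "pedestrian": [[0.8, 0.6, 1.7], [0.9, 0.7, 1.8], [0.7, 0.5, 1.6]],
    }
    anchors = class_specific_anchors(boxes, {"car": 2, "pedestrian": 1})
    for cls, sizes in anchors.items():
        print(cls, sizes.round(2))
```

The number of clusters per class is passed in explicitly because the abstract treats it as a per-class quantity to be tuned.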

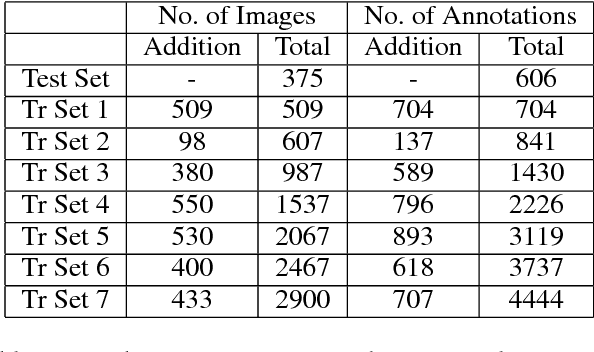

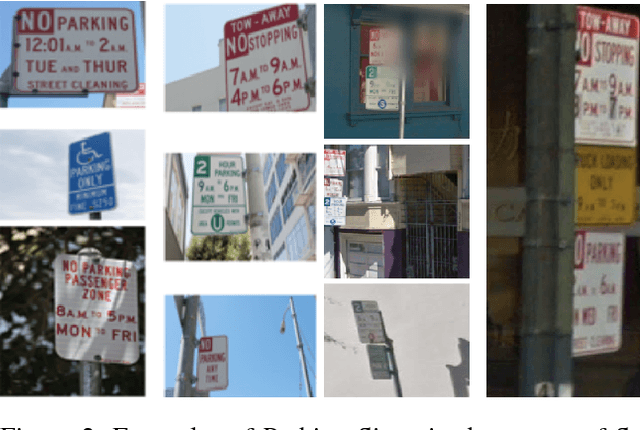

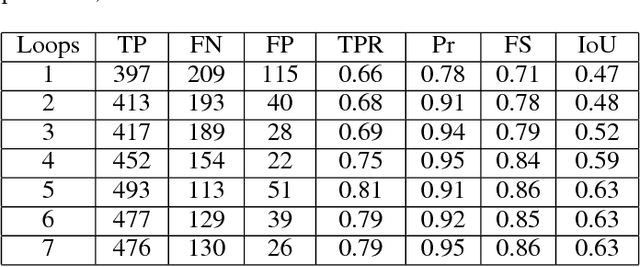

Crowd Sourcing based Active Learning Approach for Parking Sign Recognition

Dec 03, 2018

Abstract: Deep learning models have been used extensively to solve real-world problems in recent years. The performance of such models relies heavily on large amounts of labeled training data. While advances in data collection technology have enabled the acquisition of massive volumes of data, labeling that data remains an expensive and time-consuming task. Active learning techniques are being progressively adopted to accelerate the development of machine learning solutions by allowing models to query the data they learn from. In this paper, we introduce a real-world problem, the recognition of parking signs, and present a framework that combines active learning techniques with a transfer learning approach and crowd-sourcing tools to create and train a machine learning solution to the problem. We discuss how such a framework contributes to building an accurate model quickly and cost-effectively for parking sign recognition, despite the unevenness of the data: objects in street-level images (such as parking signs) vary in shape, color, orientation and scale, and often appear against different types of background.
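As a rough illustration of how a model can "query the data it learns from", the sketch below selects the unlabeled examples whose predicted class distribution has the highest entropy. The abstract does not say which query strategy the framework uses, so entropy-based uncertainty sampling is an assumption, and `select_queries` is a hypothetical name.

```python
import numpy as np


def select_queries(probs, n):
    """Return indices of the n most uncertain pool examples.

    probs: array of shape (num_examples, num_classes) with predicted
    class probabilities for the unlabeled pool.
    Assumption: uncertainty is measured by predictive entropy.
    """
    probs = np.asarray(probs, dtype=float)
    # Clip to avoid log(0) for confident predictions.
    entropy = -np.sum(probs * np.log(np.clip(probs, 1e-12, 1.0)), axis=1)
    # Highest entropy first; stable sort keeps pool order among ties.
    return np.argsort(-entropy, kind="stable")[:n]


if __name__ == "__main__":
    # Made-up predictions for three unlabeled images.
    pool_probs = [[0.9, 0.1], [0.5, 0.5], [0.6, 0.4]]
    print(select_queries(pool_probs, 2))
```

In a loop of this kind, the selected indices would be sent out for labeling (here, to crowd workers), added to the training set, and the model retrained before the next round.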

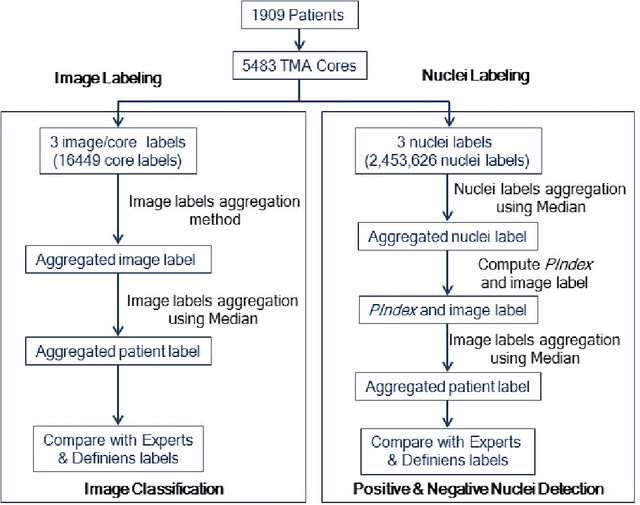

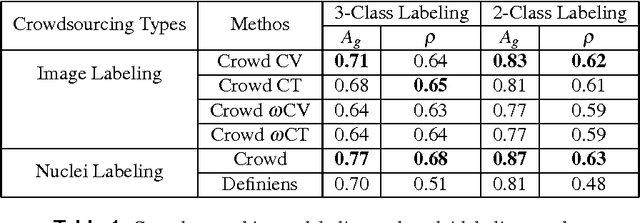

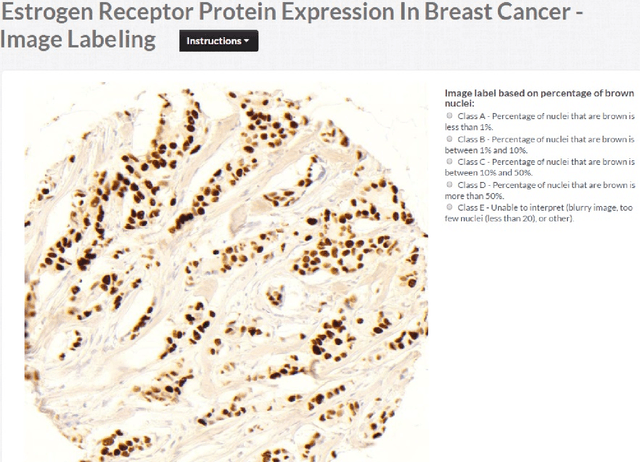

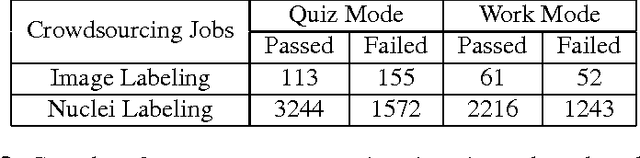

Crowdsourcing scoring of immunohistochemistry images: Evaluating Performance of the Crowd and an Automated Computational Method

Jun 23, 2016

Abstract: The assessment of protein expression in immunohistochemistry (IHC) images provides important diagnostic, prognostic and predictive information for guiding cancer diagnosis and therapy. Manual scoring of IHC images represents a logistical challenge, as the process is labor-intensive and time-consuming. Over the last decade, computational methods have been developed to enable the application of quantitative methods for the analysis and interpretation of protein expression in IHC images. These methods have not yet replaced manual scoring for the assessment of IHC in the majority of diagnostic laboratories and in many large-scale research studies. An alternative approach is crowdsourcing the quantification of IHC images to an undefined crowd. The aim of this study is to quantify IHC images for labeling of ER status with two different crowdsourcing approaches, image labeling and nuclei labeling, and to compare their performance with automated methods. Crowdsourcing-derived scores obtained greater concordance with the pathologist interpretations for both the image labeling and nuclei labeling tasks (83% and 87%) than the automated method did (81%) on 5,483 TMA images from 1,909 breast cancer patients. This analysis shows that crowdsourcing the scoring of protein expression in IHC images is a promising new approach for large-scale cancer molecular pathology studies.
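Concordance with the pathologist can be read as the fraction of images on which two sets of labels agree. The sketch below shows that calculation under stated assumptions: the abstract does not describe how individual crowd judgments are aggregated, so a simple majority vote is assumed, and `majority_vote`, `concordance`, and the sample labels are hypothetical.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def concordance(predicted, reference):
    """Fraction of items on which predicted and reference labels agree."""
    if len(predicted) != len(reference):
        raise ValueError("label lists must have the same length")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


if __name__ == "__main__":
    # Made-up crowd judgments for three images, three workers each.
    crowd = [["pos", "pos", "neg"], ["neg", "neg", "pos"], ["pos", "neg", "neg"]]
    pathologist = ["pos", "neg", "pos"]
    aggregated = [majority_vote(votes) for votes in crowd]
    print(aggregated, concordance(aggregated, pathologist))
```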

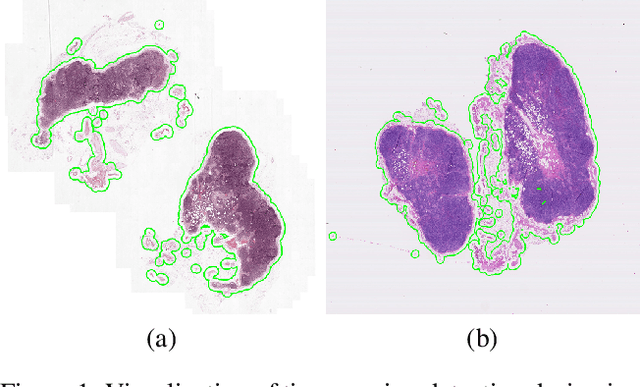

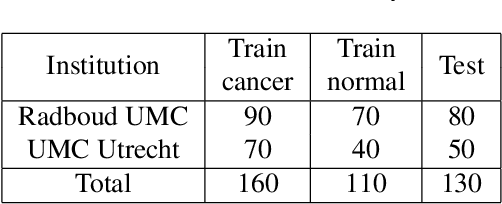

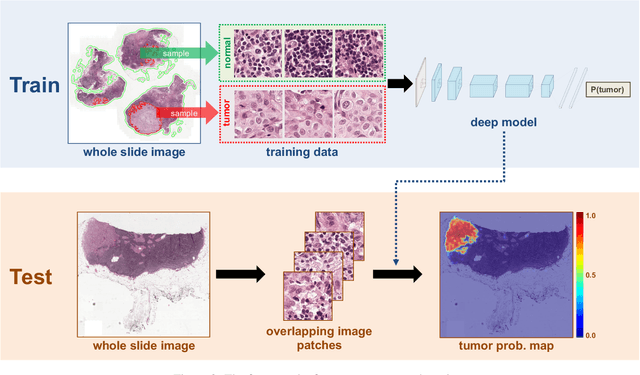

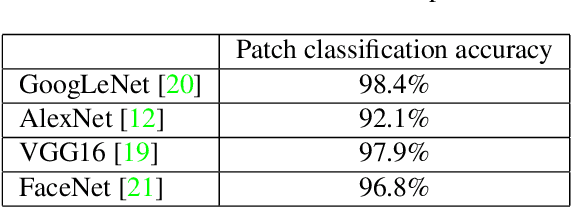

Deep Learning for Identifying Metastatic Breast Cancer

Jun 18, 2016

Abstract: The International Symposium on Biomedical Imaging (ISBI) held a grand challenge to evaluate computational systems for the automated detection of metastatic breast cancer in whole slide images of sentinel lymph node biopsies. Our team won both competitions in the grand challenge, obtaining an area under the receiver operating characteristic curve (AUC) of 0.925 for the task of whole slide image classification and a score of 0.7051 for the tumor localization task. A pathologist independently reviewed the same images, obtaining a whole slide image classification AUC of 0.966 and a tumor localization score of 0.733. Combining our deep learning system's predictions with the human pathologist's diagnoses increased the pathologist's AUC to 0.995, representing an approximately 85 percent reduction in human error rate. These results demonstrate the power of using deep learning to produce significant improvements in the accuracy of pathological diagnoses.
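The "approximately 85 percent" figure is consistent with treating 1 − AUC as the error rate, which is an assumption here since the abstract does not spell out the calculation. Under that reading, the error falls from 1 − 0.966 = 0.034 to 1 − 0.995 = 0.005. A short sketch of the arithmetic, with the hypothetical helper `relative_error_reduction`:

```python
def relative_error_reduction(auc_before, auc_after):
    """Relative drop in error, assuming error rate is defined as 1 - AUC."""
    err_before = 1.0 - auc_before
    err_after = 1.0 - auc_after
    return (err_before - err_after) / err_before


if __name__ == "__main__":
    # AUC values taken from the abstract: pathologist alone vs. combined.
    reduction = relative_error_reduction(0.966, 0.995)
    print(f"{reduction:.1%}")
```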
