Huanlin Xu

Detecting Deep Neural Network Defects with Data Flow Analysis

Sep 30, 2019

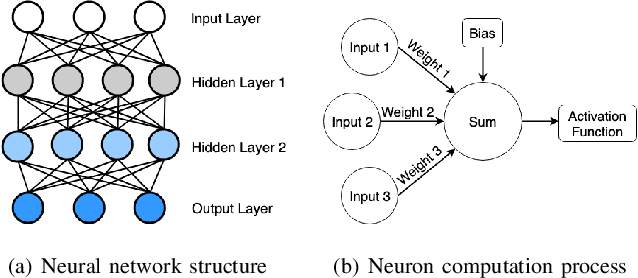

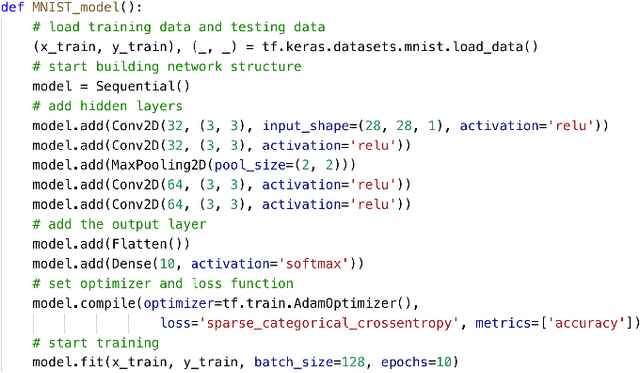

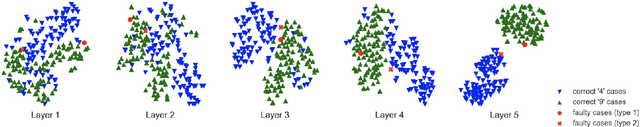

Abstract: Deep neural networks (DNNs) have proven to be promising solutions for many challenging artificial intelligence tasks. However, it is very hard to determine whether the low precision of a DNN model is an inevitable result or is caused by defects. This paper aims to address this challenging problem. We find that the internal data flow footprints of a DNN model can provide insights that help locate the root cause effectively. We develop DeepMorph (DNN Tomography) to analyze the root cause, which can guide a DNN developer in improving the model.

Data Sanity Check for Deep Learning Systems via Learnt Assertions

Sep 28, 2019

Abstract: Reliability is a critical consideration for DL-based systems. But the statistical nature of DL makes it quite vulnerable to invalid inputs, i.e., cases that were not considered in the training phase of a DL model. This paper proposes performing a data sanity check to identify invalid inputs, so as to enhance the reliability of DL-based systems. We design and implement a tool to detect behavior deviation of a DL model when it processes an input case. This tool extracts the data flow footprints and applies an assertion-based validation mechanism. The assertions are built automatically and are specifically tailored for DL model data flow analysis. Our experiments, conducted with real-world scenarios, demonstrate that such an assertion-based data sanity check mechanism is effective in identifying invalid input cases.
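
To make the idea of assertions over data flow footprints concrete, here is a minimal sketch in Python. It is not the tool described in the abstract: the abstract does not say how the assertions are constructed, so this sketch assumes the simplest possible form, a per-unit value range observed on training inputs. The names `footprint`, `learn_assertions`, and `is_valid`, and the toy layers, are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Layer = Callable[[List[float]], List[float]]
Bounds = List[List[Tuple[float, float]]]


def footprint(layers: Sequence[Layer], x: List[float]) -> List[List[float]]:
    """Run x through the layers and record every intermediate output."""
    trace = []
    for layer in layers:
        x = layer(x)
        trace.append(list(x))
    return trace


def learn_assertions(layers: Sequence[Layer],
                     training_inputs: Sequence[List[float]],
                     margin: float = 0.0) -> Bounds:
    """Assumed assertion form: per-layer, per-unit [lo, hi] ranges seen in training."""
    bounds = None
    for x in training_inputs:
        trace = footprint(layers, x)
        if bounds is None:
            bounds = [[(v, v) for v in acts] for acts in trace]
        else:
            bounds = [[(min(lo, v), max(hi, v)) for (lo, hi), v in zip(b, acts)]
                      for b, acts in zip(bounds, trace)]
    if bounds is None:
        raise ValueError("need at least one training input")
    # Widen each range by a tolerance so near-boundary inputs are not rejected.
    return [[(lo - margin, hi + margin) for lo, hi in b] for b in bounds]


def is_valid(layers: Sequence[Layer], bounds: Bounds, x: List[float]) -> bool:
    """An input passes if every recorded activation stays inside its learnt range."""
    trace = footprint(layers, x)
    return all(lo <= v <= hi
               for b, acts in zip(bounds, trace)
               for (lo, hi), v in zip(b, acts))


# Toy two-layer "model" (hypothetical stand-ins for real DNN layers).
def scale(x: List[float]) -> List[float]:
    return [2.0 * v for v in x]


def relu(x: List[float]) -> List[float]:
    return [max(0.0, v) for v in x]


layers = [scale, relu]
train = [[0.0, 1.0], [1.0, 0.5], [0.5, 0.0]]
bounds = learn_assertions(layers, train, margin=0.1)
in_range = is_valid(layers, bounds, [0.8, 0.2])      # resembles training data
out_of_range = is_valid(layers, bounds, [5.0, 0.2])  # far outside training data
```

A range check is only one candidate assertion. In a real framework the footprints would typically be collected through the framework's own layer-output hooks rather than a hand-written loop.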
