Detecting Deep Neural Network Defects with Data Flow Analysis

Sep 30, 2019

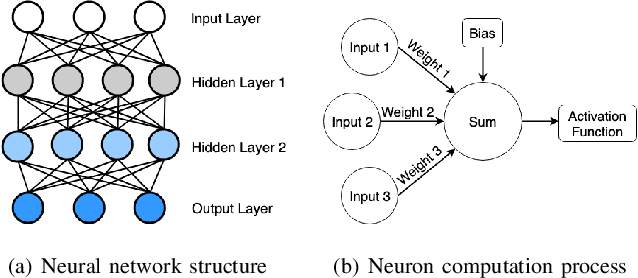

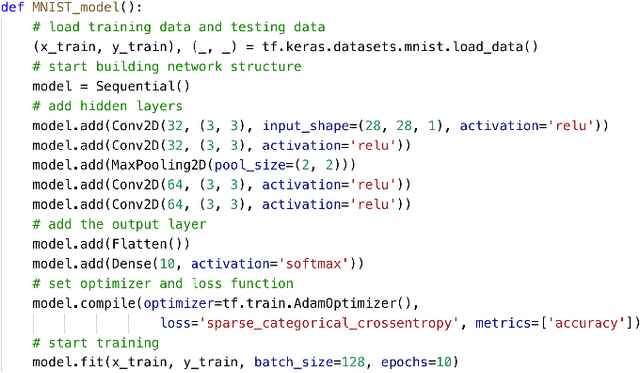

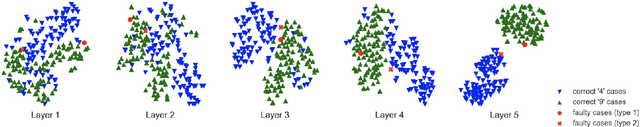

Deep neural networks (DNNs) have been shown to be promising solutions for many challenging artificial intelligence tasks. However, it is very hard to determine whether the low precision of a DNN model is an inevitable result or is caused by defects. This paper aims to address this problem. We find that the internal data flow footprints of a DNN model can provide insights that help locate the root cause effectively. We develop DeepMorph (DNN Tomography) to analyze the root cause, which can guide a DNN developer in improving the model.

* 2 pages
