Hristo S. Paskov

Crosslingual Document Embedding as Reduced-Rank Ridge Regression

Apr 08, 2019

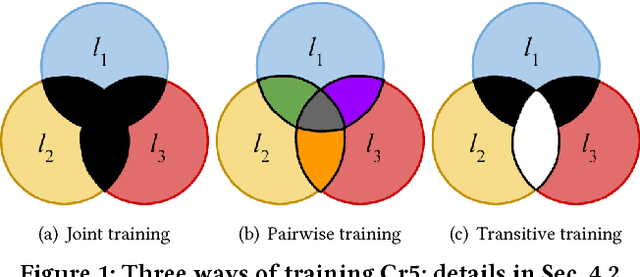

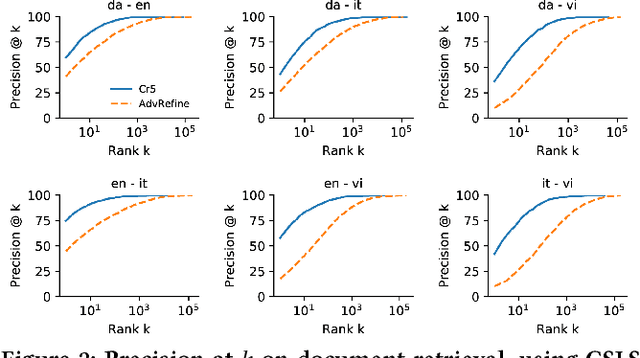

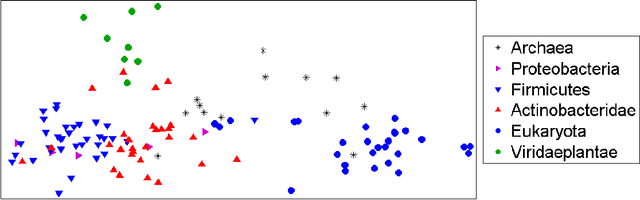

Abstract: There has recently been much interest in extending vector-based word representations to multiple languages, such that words can be compared across languages. In this paper, we shift the focus from words to documents and introduce a method for embedding documents written in any language into a single, language-independent vector space. For training, our approach leverages a multilingual corpus where the same concept is covered in multiple languages (but not necessarily via exact translations), such as Wikipedia. Our method, Cr5 (Crosslingual reduced-rank ridge regression), starts by training a ridge-regression-based classifier that uses language-specific bag-of-words features in order to predict the concept that a given document is about. We show that, when constraining the learned weight matrix to be of low rank, it can be factored to obtain the desired mappings from language-specific bags-of-words to language-independent embeddings. As opposed to most prior methods, which use pretrained monolingual word vectors, postprocess them to make them crosslingual, and finally average word vectors to obtain document vectors, Cr5 is trained end-to-end and is thus natively crosslingual as well as document-level. Moreover, since our algorithm uses the singular value decomposition as its core operation, it is highly scalable. Experiments show that our method achieves state-of-the-art performance on a crosslingual document retrieval task. Finally, although not trained for embedding sentences and words, it also achieves competitive performance on crosslingual sentence and word retrieval tasks.
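The low-rank factoring idea in the abstract above can be illustrated with the textbook closed form for reduced-rank ridge regression. This is a minimal NumPy sketch, not the authors' Cr5 implementation: the function name `reduced_rank_ridge`, the toy data, and the dense single-matrix setup are all assumptions made for illustration. In a crosslingual setting one would presumably stack the per-language vocabularies as column blocks of `X`, so that the rows of `A` split into per-language mappings.

```python
import numpy as np

def reduced_rank_ridge(X, Y, rank, lam):
    """Factor a rank-constrained ridge solution as W_r = A @ B.

    X: (n, d) bag-of-words features, Y: (n, c) concept indicators.
    Returns A (d, rank), mapping features to embeddings, and B (rank, c).
    Hypothetical helper for illustration only.
    """
    d = X.shape[1]
    # Unconstrained ridge solution: (X^T X + lam I)^{-1} X^T Y
    W = np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ Y)
    # Ridge equals least squares on data augmented with sqrt(lam) * I,
    # so the fitted values of the augmented problem are [X; sqrt(lam) I] @ W.
    X_aug = np.vstack([X, np.sqrt(lam) * np.eye(d)])
    fitted = X_aug @ W
    # Project onto the top right singular vectors of the fitted values.
    _, _, Vt = np.linalg.svd(fitted, full_matrices=False)
    V_r = Vt[:rank].T          # (c, rank)
    A = W @ V_r                # features -> low-dimensional embedding
    B = V_r.T                  # embedding -> concept scores
    return A, B

# Toy data (assumed): 20 documents, 6 vocabulary terms, 4 concepts.
rng = np.random.default_rng(0)
X = rng.poisson(1.0, size=(20, 6)).astype(float)
Y = np.eye(4)[rng.integers(0, 4, size=20)]

A, B = reduced_rank_ridge(X, Y, rank=2, lam=1.0)
embeddings = X @ A             # one 2-dimensional vector per document
```

Here `X @ A` plays the role of the document embedding, and the only expensive steps are a linear solve and one singular value decomposition.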

Data Representation and Compression Using Linear-Programming Approximations

May 03, 2016

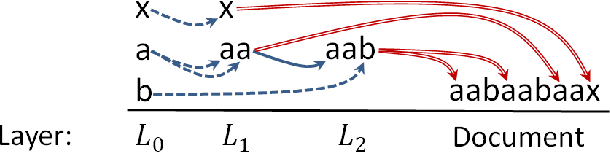

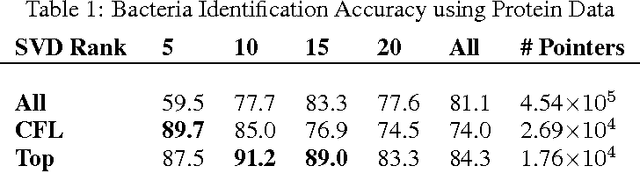

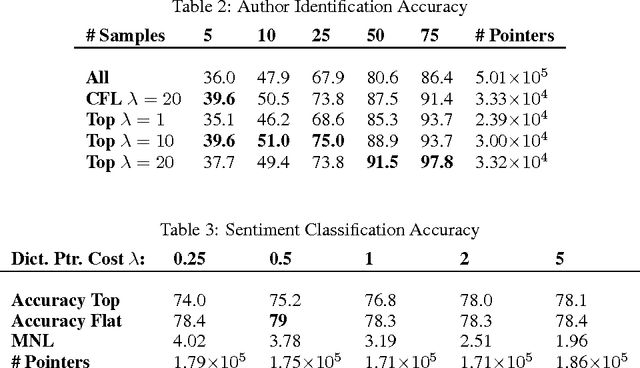

Abstract: We propose 'Dracula', a new framework for unsupervised feature selection from sequential data such as text. Dracula learns a dictionary of $n$-grams that efficiently compresses a given corpus and recursively compresses its own dictionary; in effect, Dracula is a 'deep' extension of Compressive Feature Learning. It requires solving a binary linear program that may be relaxed to a linear program. Both problems exhibit considerable structure, their solution paths are well behaved, and we identify parameters which control the depth and diversity of the dictionary. We also discuss how to derive features from the compressed documents and show that while certain unregularized linear models are invariant to the structure of the compressed dictionary, this structure may be used to regularize learning. Experiments are presented that demonstrate the efficacy of Dracula's features.

Exploiting Social Network Structure for Person-to-Person Sentiment Analysis

Sep 08, 2014

Abstract: Person-to-person evaluations are prevalent in all kinds of discourse and important for establishing reputations, building social bonds, and shaping public opinion. Such evaluations can be analyzed separately using signed social networks and textual sentiment analysis, but this misses the rich interactions between language and social context. To capture such interactions, we develop a model that predicts individual A's opinion of individual B by synthesizing information from the signed social network in which A and B are embedded with sentiment analysis of the evaluative texts relating A to B. We prove that this problem is NP-hard but can be relaxed to an efficiently solvable hinge-loss Markov random field, and we show that this implementation outperforms text-only and network-only versions in two very different datasets involving community-level decision-making: the Wikipedia Requests for Adminship corpus and the Convote U.S. Congressional speech corpus.
