Hossein Arabi

Attention-Enhanced Deep Learning Ensemble for Breast Density Classification in Mammography

Jul 08, 2025

Abstract:Breast density assessment is a crucial component of mammographic interpretation, with high breast density (BI-RADS categories C and D) representing both a significant risk factor for developing breast cancer and a technical challenge for tumor detection. This study proposes an automated deep learning system for robust binary classification of breast density (low: A/B vs. high: C/D) using the VinDr-Mammo dataset. We implemented and compared four advanced convolutional neural networks: ResNet18, ResNet50, EfficientNet-B0, and DenseNet121, each enhanced with channel attention mechanisms. To address the inherent class imbalance, we developed a novel Combined Focal Label Smoothing Loss function that integrates focal loss, label smoothing, and class-balanced weighting. Our preprocessing pipeline incorporated advanced techniques, including contrast-limited adaptive histogram equalization (CLAHE) and comprehensive data augmentation. The individual models were combined through an optimized ensemble voting approach, achieving superior performance (AUC: 0.963, F1-score: 0.952) compared to any single model. This system demonstrates significant potential to standardize density assessments in clinical practice, potentially improving screening efficiency and early cancer detection rates while reducing inter-observer variability among radiologists.
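The abstract names the three ingredients of the Combined Focal Label Smoothing Loss but does not give its formula. The sketch below shows one plausible way to combine them for a single sample; the exact form, the function names, the default `gamma` and `smoothing` values, and the inverse-frequency weighting scheme are all assumptions made for illustration, not the authors' implementation.

```python
import math

def inverse_frequency_weights(class_counts):
    """Hypothetical class weighting: rarer classes get larger weights."""
    total = sum(class_counts)
    num_classes = len(class_counts)
    return [total / (num_classes * n) for n in class_counts]

def combined_focal_label_smoothing_loss(probs, target, class_weights,
                                        gamma=2.0, smoothing=0.1):
    """Loss for one sample (assumed form, not taken from the paper).

    probs: predicted class probabilities (should sum to 1).
    target: index of the true class.
    """
    num_classes = len(probs)
    loss = 0.0
    for k, p in enumerate(probs):
        p = min(max(p, 1e-12), 1.0)  # clamp to avoid log(0)
        # Label smoothing: spread `smoothing` uniformly over all classes.
        q = smoothing / num_classes + ((1.0 - smoothing) if k == target else 0.0)
        # Focal term (1 - p)^gamma down-weights easy, confident predictions.
        loss += -q * (1.0 - p) ** gamma * math.log(p)
    # Class weight of the true class scales the whole sample loss.
    return class_weights[target] * loss
```

With `gamma=0`, `smoothing=0`, and unit weights, this reduces to ordinary cross-entropy, which is a convenient sanity check when experimenting with the hyperparameters.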

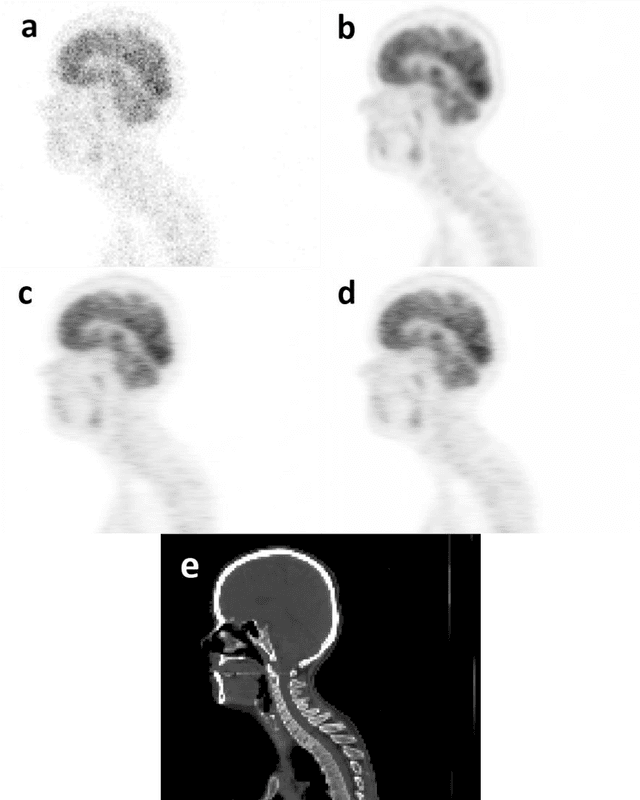

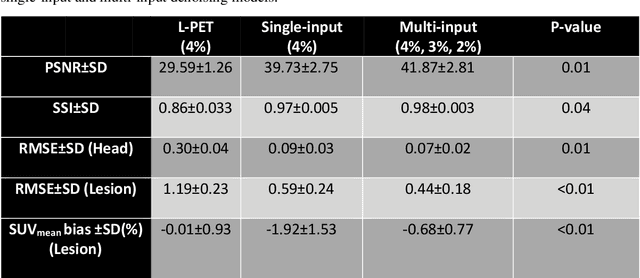

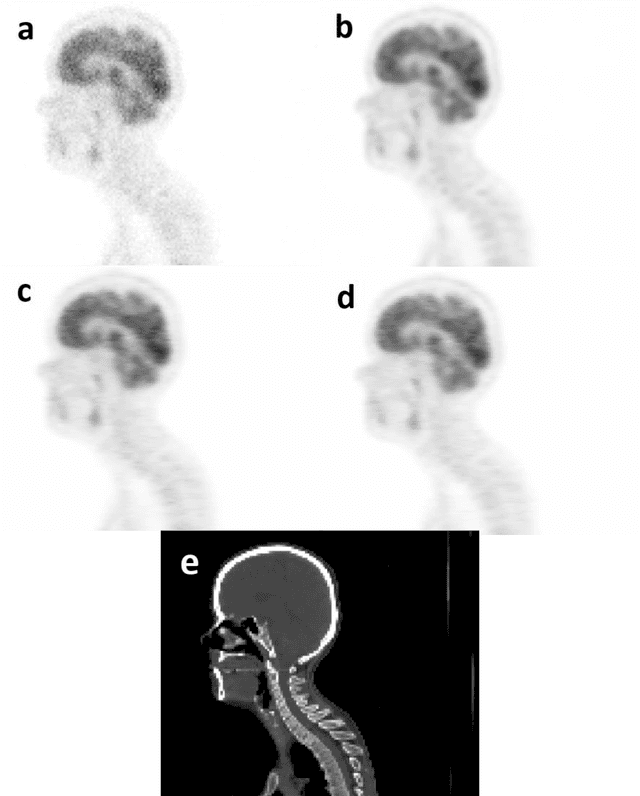

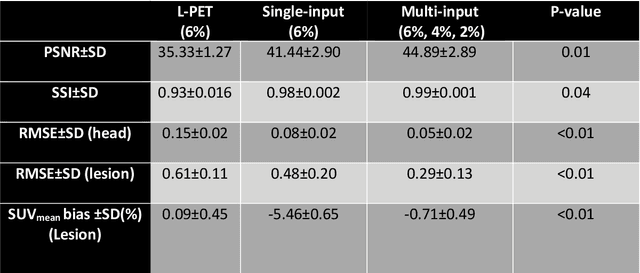

Deep Learning-Based Attenuation and Scatter Correction of Brain 18F-FDG PET Images in the Image Domain

Jun 29, 2022

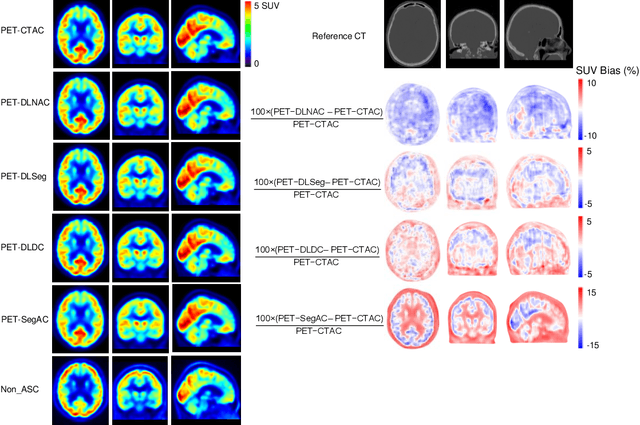

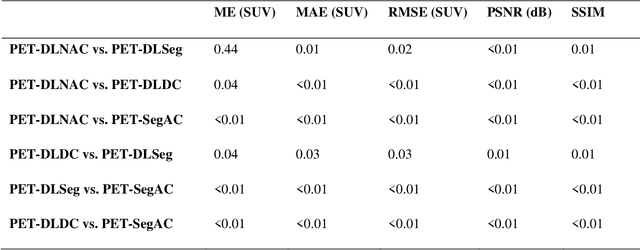

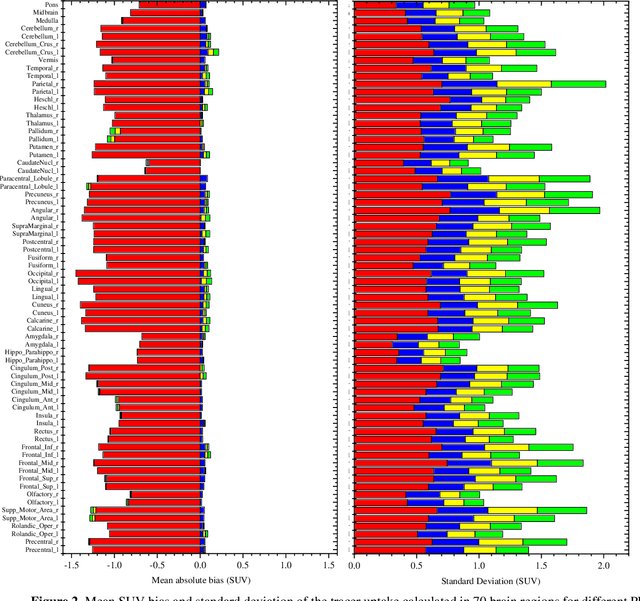

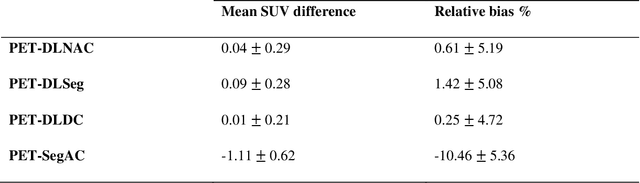

Abstract:Attenuation and scatter correction (AC) is crucial for quantitative Positron Emission Tomography (PET) imaging. Recently, direct application of AC in the image domain using deep learning approaches has been proposed for the hybrid PET/MR and dedicated PET systems that lack accompanying transmission or anatomical imaging. This study set out to investigate deep learning-based AC in the image domain using different input settings.

Joint brain tumor segmentation from multi MR sequences through a deep convolutional neural network

Mar 07, 2022

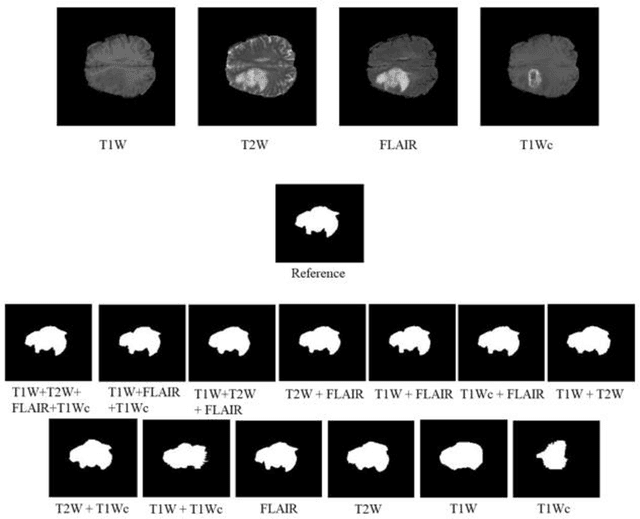

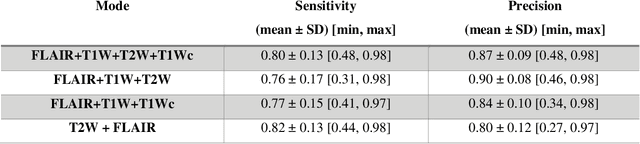

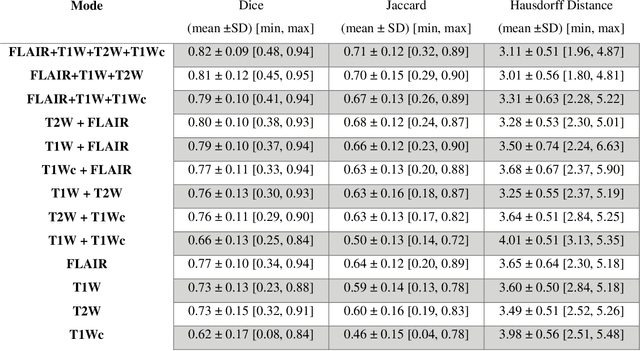

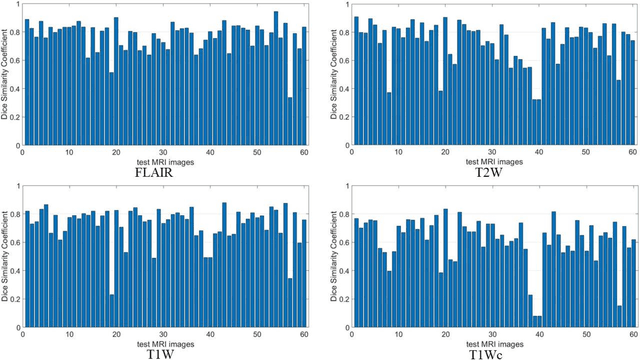

Abstract:Brain tumor segmentation contributes substantially to diagnosis and treatment planning. Manual brain tumor delineation is a time-consuming and tedious task whose outcome varies with the radiologist's skill. Automated brain tumor segmentation is therefore of high importance, as it is not subject to inter- or intra-observer variability. The objective of this study is to automate the delineation of brain tumors from the FLAIR, T1-weighted, T2-weighted, and T1-weighted contrast-enhanced MR sequences through a deep learning approach, with a focus on determining which MR sequence alone, or which combination thereof, leads to the highest accuracy.

A novel shape-based loss function for machine learning-based seminal organ segmentation in medical imaging

Mar 07, 2022

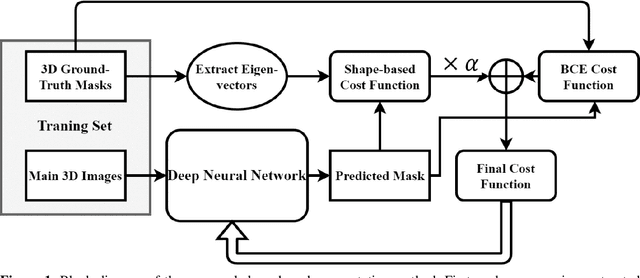

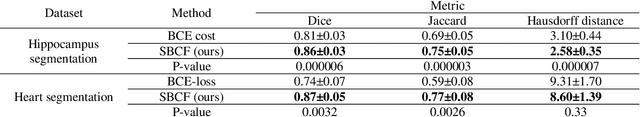

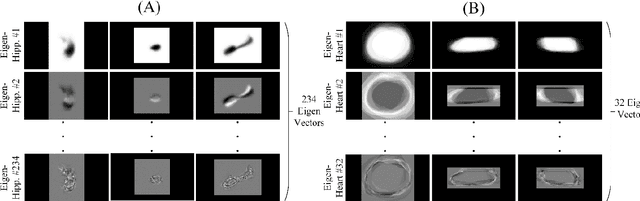

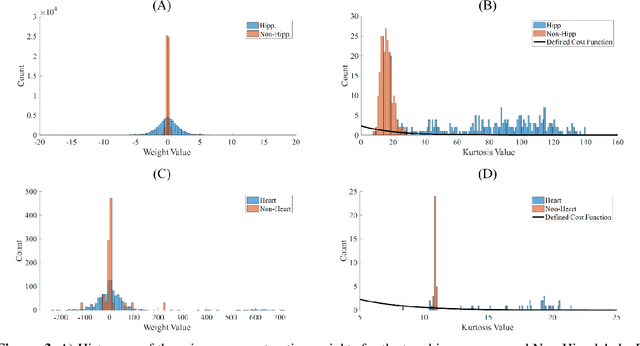

Abstract:Automated medical image segmentation is an essential task to aid/speed up diagnosis and treatment procedures in clinical practices. Deep convolutional neural networks have exhibited promising performance in accurate and automatic seminal segmentation. For segmentation tasks, these methods normally rely on minimizing a cost/loss function that is designed to maximize the overlap between the estimated target and the ground-truth mask delineated by the experts. A simple loss function based on the degrees of overlap (i.e., Dice metric) would not take into account the underlying shape and morphology of the target subject, as well as its realistic/natural variations; therefore, suboptimal segmentation results would be observed in the form of islands of voxels, holes, and unrealistic shapes or deformations. In this light, many studies have been conducted to refine/post-process the segmentation outcome and consider an initial guess as prior knowledge to avoid outliers and/or unrealistic estimations. In this study, a novel shape-based cost function is proposed which encourages/constrains the network to learn/capture the underlying shape features in order to generate a valid/realistic estimation of the target structure. To this end, the Principal Component Analysis (PCA) was performed on a vectorized training dataset to extract eigenvalues and eigenvectors of the target subjects. The key idea was to use the reconstruction weights to discriminate valid outcomes from outliers/erroneous estimations.
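As a rough illustration of the reconstruction-weight idea, the sketch below projects a vectorized mask onto precomputed PCA eigenvectors and checks whether the resulting weights fall within a plausible range. The abstract does not say how the weights are used to separate valid shapes from outliers; the rule of bounding each weight by a multiple of the square root of its eigenvalue is borrowed from classical statistical shape models and is an assumption here, as are the function names and the `max_std` parameter.

```python
import math

def shape_weights(mask_vector, mean_shape, eigenvectors):
    """Project a vectorized mask onto orthonormal PCA eigenvectors."""
    centered = [m - mu for m, mu in zip(mask_vector, mean_shape)]
    return [sum(e_i * c_i for e_i, c_i in zip(e, centered))
            for e in eigenvectors]

def is_plausible_shape(weights, eigenvalues, max_std=3.0):
    """Assumed rule: each weight must lie within max_std standard
    deviations of the training distribution along its eigenvector."""
    return all(abs(w) <= max_std * math.sqrt(lam)
               for w, lam in zip(weights, eigenvalues))

# Toy two-dimensional example with hand-picked (hypothetical) PCA results.
mean_shape = [0.0, 0.0]
eigenvectors = [[1.0, 0.0], [0.0, 1.0]]
eigenvalues = [4.0, 1.0]
weights = shape_weights([2.0, 1.0], mean_shape, eigenvectors)
print(weights, is_plausible_shape(weights, eigenvalues))
```

In a training loop, a penalty based on how far the weights fall outside this range could be added to an overlap-based loss, but that combination is likewise a guess rather than something stated in the abstract.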

A novel unsupervised covid lung lesion segmentation based on the lung tissue identification

Feb 24, 2022

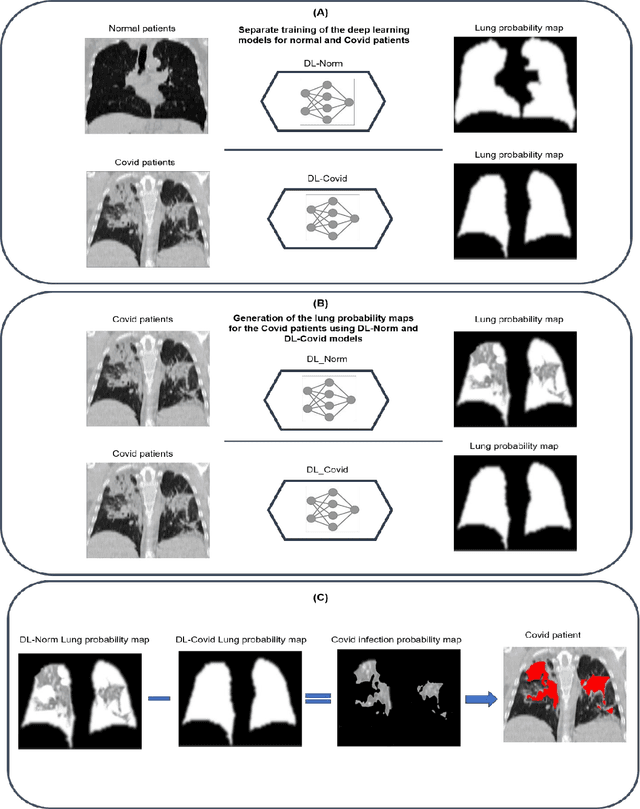

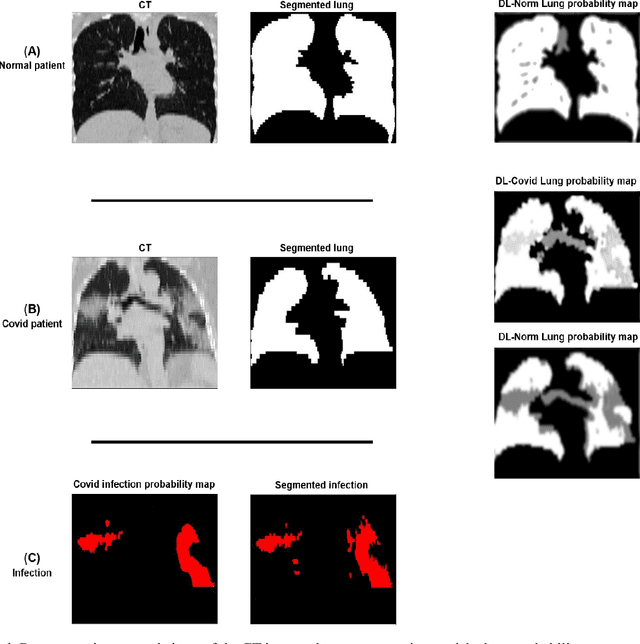

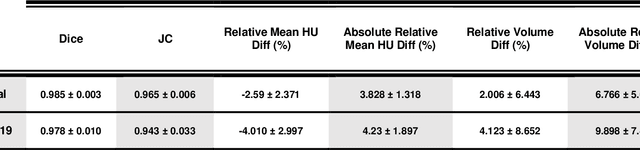

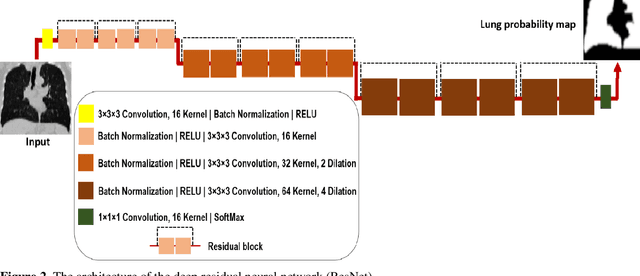

Abstract:This study aimed to evaluate the performance of a novel unsupervised deep learning-based framework for automated infectious lesion segmentation from CT images of Covid patients. In the first step, two residual networks were independently trained to identify the lung tissue for normal and Covid patients in a supervised manner. These two models, referred to as DL-Covid and DL-Norm for Covid-19 and normal patients, respectively, generate the voxel-wise probability maps for lung tissue identification. To detect Covid lesions, the CT image of the Covid patient is processed by the DL-Covid and DL-Norm models to obtain two lung probability maps. Since the DL-Norm model is not familiar with Covid infections within the lung, this model would assign lower probabilities to the lesions than the DL-Covid. Hence, the probability maps of the Covid infections could be generated through the subtraction of the two lung probability maps obtained from the DL-Covid and DL-Norm models. Manual lesion segmentation of 50 Covid-19 CT images was used to assess the accuracy of the unsupervised lesion segmentation approach. Dice coefficients of 0.985 and 0.978 were achieved for the lung segmentation of normal and Covid patients in the external validation dataset, respectively. Quantitative results of infection segmentation by the proposed unsupervised method showed a Dice coefficient and Jaccard index of 0.67 and 0.60, respectively. Quantitative evaluation of the proposed unsupervised approach for Covid-19 infectious lesion segmentation showed relatively satisfactory results. Since this framework does not require any annotated dataset, it could be used to generate very large training samples for the supervised machine learning algorithms dedicated to noisy and/or weakly annotated datasets.
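The subtraction step can be sketched on flattened voxel lists as follows. Clamping negative differences to zero and binarizing at a cutoff of 0.5 are assumptions added for illustration; the abstract only states that the two lung probability maps are subtracted.

```python
def lesion_probability_map(p_covid, p_norm):
    """Voxel-wise difference between the DL-Covid and DL-Norm lung
    probability maps; negative values are clamped to zero (assumption)."""
    return [max(a - b, 0.0) for a, b in zip(p_covid, p_norm)]

def binarize(prob_map, cutoff=0.5):
    """Turn a probability map into a binary mask (cutoff is hypothetical)."""
    return [1 if p >= cutoff else 0 for p in prob_map]

# Three voxels: healthy lung, lesion, background.
p_covid = [0.90, 0.95, 0.10]
p_norm = [0.90, 0.20, 0.05]
print(binarize(lesion_probability_map(p_covid, p_norm)))
```

Only the middle voxel, where DL-Covid is confident and DL-Norm is not, survives the subtraction and thresholding.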

Does prior knowledge in the form of multiple low-dose PET images improve standard-dose PET prediction?

Feb 22, 2022

Abstract:Reducing the injected dose would result in quality degradation and loss of information in PET imaging. To address this issue, deep learning methods have been introduced to predict standard PET images (S-PET) from the corresponding low-dose versions (L-PET). The existing deep learning-based denoising methods solely rely on a single dose level of PET images to predict the S-PET images. In this work, we proposed to exploit the prior knowledge in the form of multiple low-dose levels of PET images (in addition to the target low-dose level) to estimate the S-PET images.

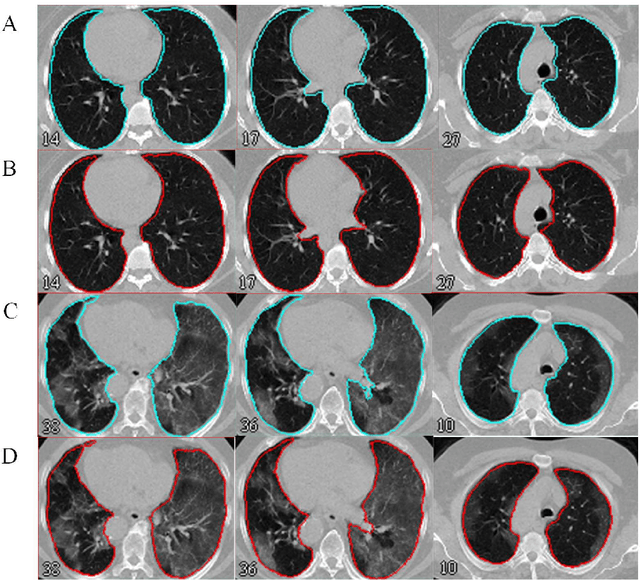

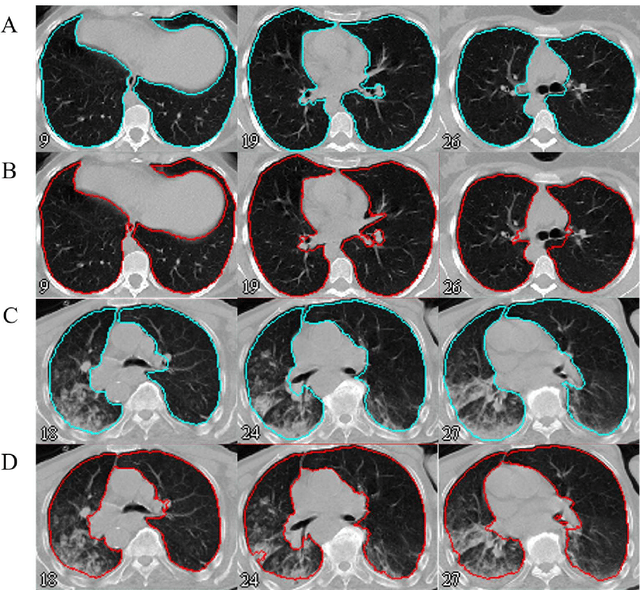

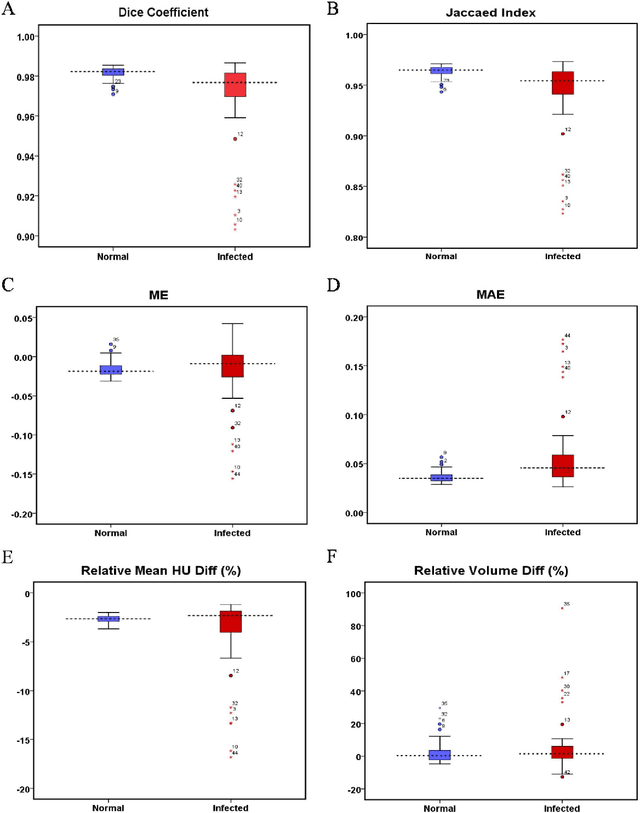

Automated lung segmentation from CT images of normal and COVID-19 pneumonia patients

Apr 05, 2021

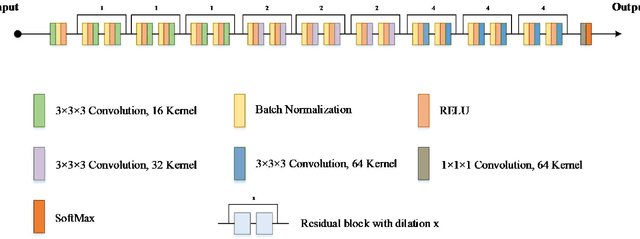

Abstract:Automated semantic image segmentation is an essential step in quantitative image analysis and disease diagnosis. This study investigates the performance of a deep learning-based model for lung segmentation from CT images for normal and COVID-19 patients. Chest CT images and corresponding lung masks of 1200 confirmed COVID-19 cases were used for training a residual neural network. The reference lung masks were generated through semi-automated/manual segmentation of the CT images. The performance of the model was evaluated on two distinct external test datasets including 120 normal and COVID-19 subjects, and the results of these groups were compared to each other. Different evaluation metrics such as dice coefficient (DSC), mean absolute error (MAE), relative mean HU difference, and relative volume difference were calculated to assess the accuracy of the predicted lung masks. The proposed deep learning method achieved DSC of 0.980 and 0.971 for normal and COVID-19 subjects, respectively, demonstrating significant overlap between predicted and reference lung masks. Moreover, MAEs of 0.037 HU and 0.061 HU, relative mean HU difference of -2.679% and -4.403%, and relative volume difference of 2.405% and 5.928% were obtained for normal and COVID-19 subjects, respectively. The comparable performance in lung segmentation of the normal and COVID-19 patients indicates the accuracy of the model for the identification of the lung tissue in the presence of the COVID-19 induced infections (though slightly better performance was observed for normal patients). The promising results achieved by the proposed deep learning-based model demonstrated its reliability in COVID-19 lung segmentation. This prerequisite step would lead to a more efficient and robust pneumonia lesion analysis.
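Two of the reported metrics are simple to state for binary masks. The sketch below computes the Dice coefficient and the relative volume difference on flattened masks; the sign convention (predicted minus reference, as a percentage of the reference) is an assumption, and the reference mask is assumed to be non-empty.

```python
def dice_coefficient(pred, ref):
    """Dice = 2 * |pred AND ref| / (|pred| + |ref|) for binary masks."""
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    return 2.0 * intersection / total if total else 1.0

def relative_volume_difference(pred, ref):
    """Percentage difference in voxel count relative to the reference
    mask (assumed sign convention; reference must be non-empty)."""
    ref_volume = sum(1 for r in ref if r)
    pred_volume = sum(1 for p in pred if p)
    return 100.0 * (pred_volume - ref_volume) / ref_volume

pred = [1, 1, 1, 0]
ref = [1, 1, 0, 0]
print(dice_coefficient(pred, ref))            # 0.8
print(relative_volume_difference(pred, ref))  # 50.0
```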

Deep learning-based noise reduction in low dose SPECT Myocardial Perfusion Imaging: Quantitative assessment and clinical performance

Mar 22, 2021

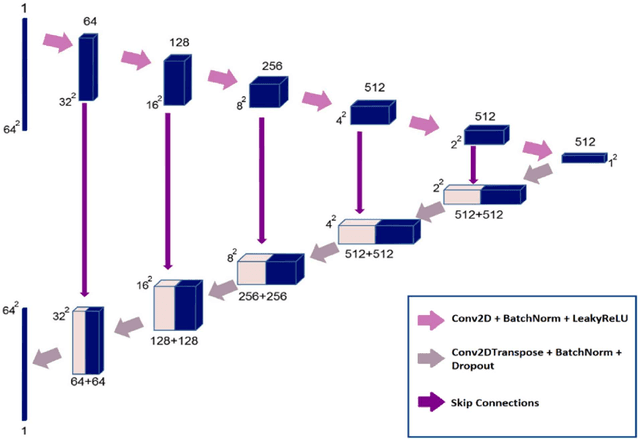

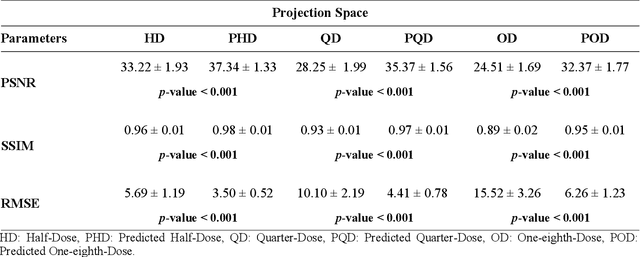

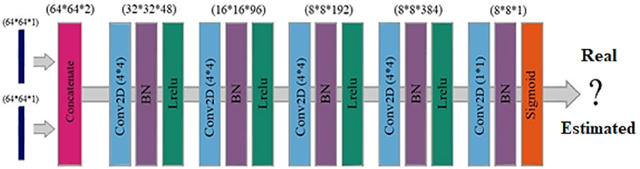

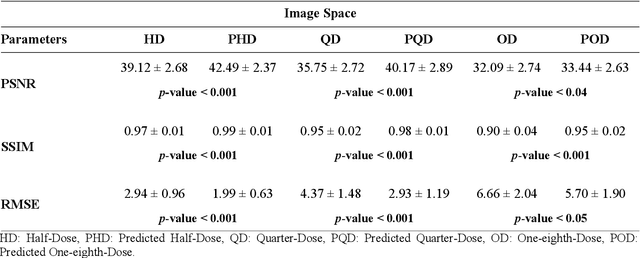

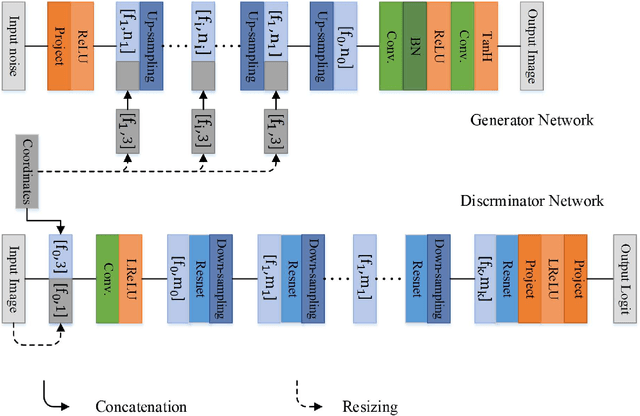

Abstract:Clinical SPECT-MPI images of 345 patients acquired from a dedicated cardiac SPECT in list-mode format were retrospectively employed to predict normal-dose images from low-dose data at the half, quarter, and one-eighth-dose levels. A generative adversarial network was implemented to predict non-gated normal-dose images in the projection space at the different reduced dose levels. Established metrics including the peak signal-to-noise ratio (PSNR), root mean squared error (RMSE), and structural similarity index metric (SSIM), in addition to Pearson correlation coefficient analysis and derived parameters from Cedars-Sinai software, were used to quantitatively assess the quality of the predicted normal-dose images. For clinical evaluation, the quality of the predicted normal-dose images was evaluated by a nuclear medicine specialist using a seven-point (-3 to +3) grading scheme. Among the different reduced dose levels, the highest PSNR (42.49) and SSIM (0.99) and the lowest RMSE (1.99) were obtained at the half-dose level in the reconstructed images. Pearson correlation coefficients were 0.997, 0.994, and 0.987 for the predicted normal-dose images at the half, quarter, and one-eighth-dose levels, respectively. Regarding the normal-dose images as the reference, the Bland-Altman plots for the Cedars-Sinai selected parameters exhibited remarkably less bias and variance in the predicted normal-dose images compared with the low-dose data at all reduced dose levels. Overall, considering the clinical assessment performed by a nuclear medicine specialist, 100%, 80%, and 11% of the predicted normal-dose images were clinically acceptable at the half, quarter, and one-eighth-dose levels, respectively.
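For reference, RMSE and PSNR can be computed from a predicted and a reference image as sketched below on flattened pixel lists. Using the maximum of the reference image as the peak value is an assumption; the abstract does not state which peak definition was used.

```python
import math

def rmse(pred, ref):
    """Root mean squared error between two equally sized images."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))

def psnr(pred, ref, peak=None):
    """Peak signal-to-noise ratio in dB; peak defaults to max(ref)
    (assumed convention)."""
    peak = max(ref) if peak is None else peak
    error = rmse(pred, ref)
    return float("inf") if error == 0 else 20.0 * math.log10(peak / error)

ref = [0.0, 100.0, 100.0, 0.0]
pred = [10.0, 90.0, 110.0, -10.0]
print(rmse(pred, ref))  # 10.0
print(psnr(pred, ref))  # 20.0
```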

Deep learning-based synthetic CT generation from MR images: comparison of generative adversarial and residual neural networks

Mar 02, 2021

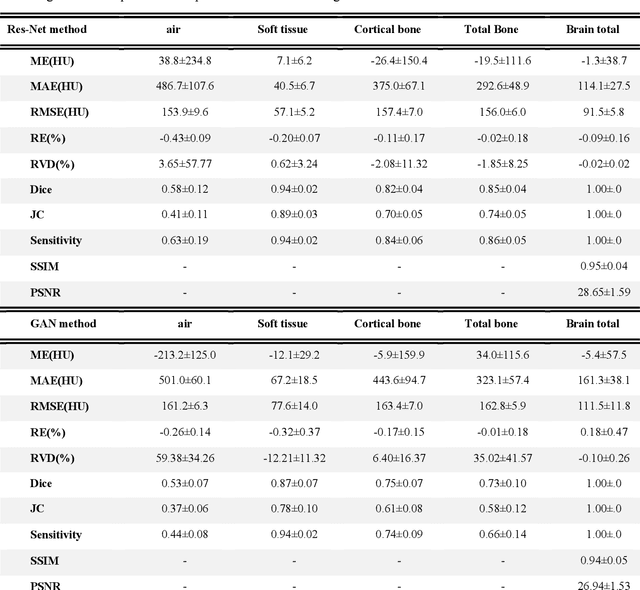

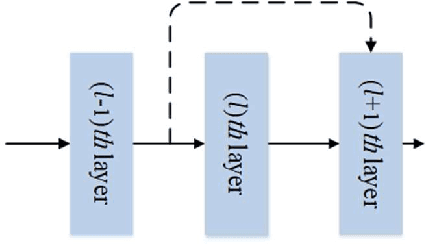

Abstract:Currently, MRI-only radiotherapy (RT) eliminates some of the concerns about using CT images in RT chains such as the registration of MR images to a separate CT, extra dose delivery, and the additional cost of repeated imaging. However, one remaining challenge is that the signal intensities of MRI are not related to the attenuation coefficient of the biological tissue. This work compares the performance of two state-of-the-art deep learning models, a generative adversarial network (GAN) and a residual network (ResNet), for synthetic CT (sCT) generation from MR images. The brain MR and CT images of 86 participants were analyzed. GAN and ResNet models were implemented for the generation of synthetic CTs from the 3D T1-weighted MR images using a six-fold cross-validation scheme. The resulting sCTs were compared, considering the CT images as a reference, using standard metrics such as the mean absolute error (MAE), peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM). Overall, the ResNet model exhibited higher accuracy in relation to the delineation of brain tissues. The ResNet model estimated the CT values for the entire head region with an MAE of 114.1 HU compared to MAE=-10.9 HU obtained from the GAN model. Moreover, both models offered comparable SSIM and PSNR values, although the ResNet method exhibited a slightly superior performance over the GAN method. We compared two state-of-the-art deep learning models for the task of MR-based sCT generation. The ResNet model exhibited superior results, thus demonstrating its potential to be used for the challenge of synthetic CT generation in PET/MR AC and MR-only RT planning.

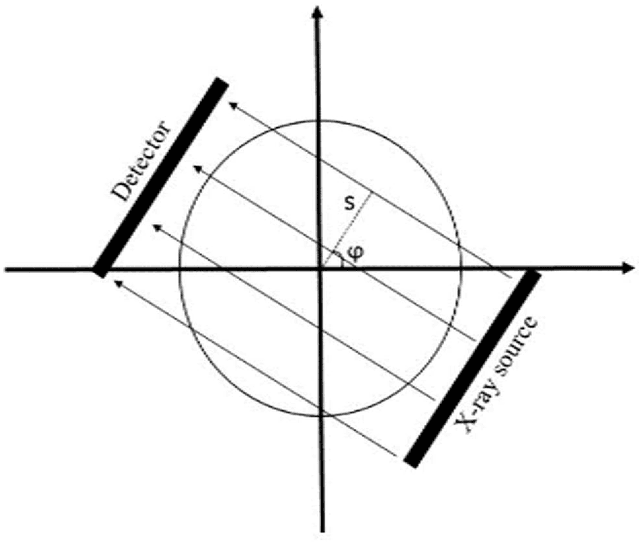

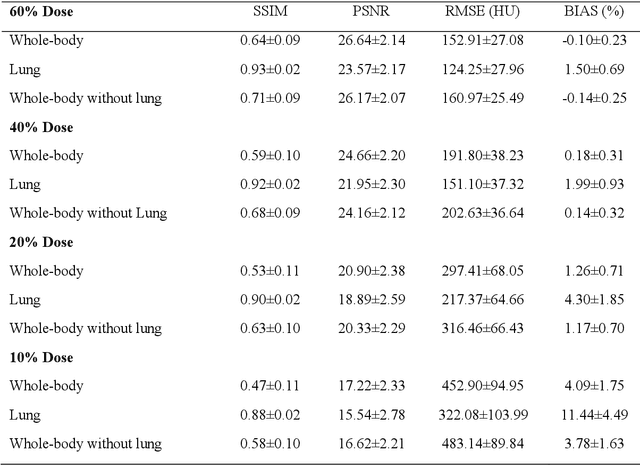

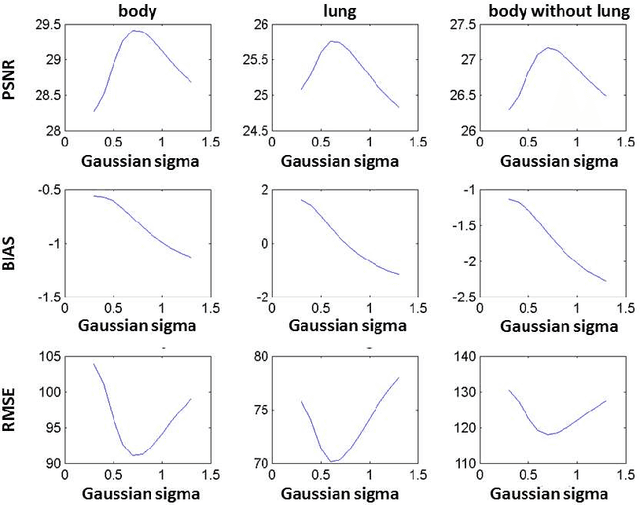

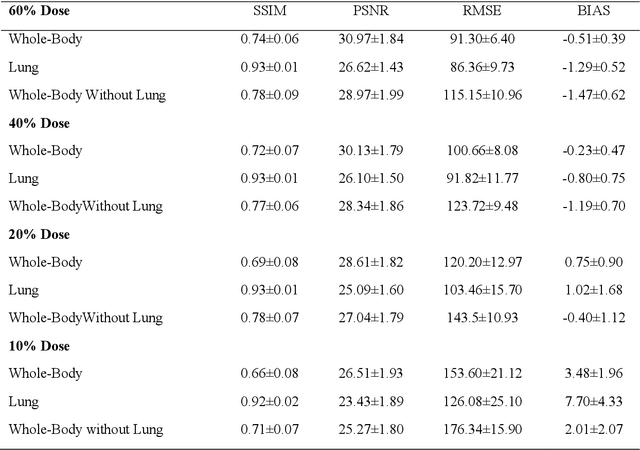

Quantitative analysis of image quality in low-dose CT imaging for Covid-19 patients

Feb 16, 2021

Abstract:We set out to simulate four reduced dose-levels (60%-dose, 40%-dose, 20%-dose, and 10%-dose) of standard CT imaging using Beer-Lambert's law across 49 patients infected with COVID-19. Then, three denoising filters, namely Gaussian, Bilateral, and Median, were applied to the different low-dose CT images, the quality of which was assessed prior to and after the application of the various filters via calculation of peak signal-to-noise ratio (PSNR), root mean square error (RMSE), structural similarity index measure (SSIM), and relative CT-value bias, separately for the lung tissue and whole-body. The quantitative evaluation indicated that 10%-dose CT images have inferior quality (with RMSE=322.1±104.0 HU and bias=11.44±4.49% in the lung) even after the application of the denoising filters. The bilateral filter exhibited superior performance to suppress the noise and recover the underlying signals in low-dose CT images compared to the other denoising techniques. The bilateral filter led to RMSE and bias of 100.21±16.47 HU, -0.21±1.20%, respectively in the lung regions for 20%-dose CT images compared to the Gaussian filter with RMSE=103.46±15.70 HU and bias=1.02±1.68%, median filter with RMSE=129.60±18.09 HU and bias=-6.15±2.24%, and the nonfiltered 20%-dose CT with RMSE=217.37±64.66 HU and bias=4.30±1.85%. In conclusion, the 20%-dose CT imaging followed by the bilateral filtering introduced a reasonable compromise between image quality and patient dose reduction.
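The abstract does not describe the simulation procedure beyond naming Beer-Lambert's law. As a minimal sketch of why reduced dose degrades image quality, the code below computes the expected number of transmitted photons along one ray, N = N0 * f * exp(-mu * L), and the approximate relative noise under Poisson counting statistics. The Poisson assumption, the function names, and all numeric values are illustrative and not taken from the paper.

```python
import math

def expected_counts(incident_photons, dose_fraction, mu_per_cm, path_cm):
    """Beer-Lambert attenuation of a beam whose intensity is scaled
    by dose_fraction (1.0 = standard dose)."""
    return incident_photons * dose_fraction * math.exp(-mu_per_cm * path_cm)

def relative_noise(counts):
    """Approximate relative noise of a Poisson-distributed photon count
    (assumption: pure counting noise, no electronic noise)."""
    return 1.0 / math.sqrt(counts)

# Hypothetical ray: 1e6 incident photons, mu = 0.2 /cm, 10 cm of tissue.
full = expected_counts(1e6, 1.0, 0.2, 10.0)
low = expected_counts(1e6, 0.2, 0.2, 10.0)
print(relative_noise(low) / relative_noise(full))
```

Under these assumptions the noise at the 20%-dose level is higher by a factor of sqrt(5) compared with the standard dose, independent of the attenuation along the ray.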
