Hongwei Zeng

ASA: Activation Steering for Tool-Calling Domain Adaptation

Feb 04, 2026

Abstract: For real-world deployment of general-purpose LLM agents, the core challenge is often not tool use itself but efficient domain adaptation under rapidly evolving toolsets, APIs, and protocols. Repeated LoRA or SFT across domains incurs exponentially growing training and maintenance costs, while prompt- or schema-based methods are brittle under distribution shift and complex interfaces. We propose the Activation Steering Adapter (ASA), a lightweight, inference-time, training-free mechanism that reads routing signals from intermediate activations and uses an ultra-light router to produce adaptive control strengths for precise domain alignment. Across multiple model scales and domains, ASA achieves LoRA-comparable adaptation with substantially lower overhead and strong cross-model transferability. This makes it practical for robust, scalable, and efficient multi-domain tool ecosystems with frequent interface churn.
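The abstract describes ASA only at a high level. The sketch below illustrates the general idea of activation steering with routed strengths. It is not the authors' implementation. It makes three assumptions. First, each domain has a steering vector computed as the difference between mean in-domain and mean baseline activations. Second, a hypothetical linear-plus-sigmoid router maps the current activation to one strength per domain. Third, the steered activation is h + Σ_d α_d·v_d. The abstract says ASA is training-free but does not say how router parameters are obtained, so here they are simply passed in. The names `mean_difference`, `router_strengths`, and `steer` are illustrative.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def mean_difference(domain_acts: Sequence[Vector], baseline_acts: Sequence[Vector]) -> Vector:
    """Assumed recipe: steering vector = mean in-domain activation minus mean baseline activation."""
    dim = len(domain_acts[0])
    mean_d = [sum(a[i] for a in domain_acts) / len(domain_acts) for i in range(dim)]
    mean_b = [sum(a[i] for a in baseline_acts) / len(baseline_acts) for i in range(dim)]
    return [d - b for d, b in zip(mean_d, mean_b)]


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def router_strengths(
    h: Vector,
    weights: Dict[str, Vector],
    bias: Dict[str, float],
    max_strength: float = 1.0,
) -> Dict[str, float]:
    """Hypothetical ultra-light router: one linear score per domain, squashed into [0, max_strength]."""
    strengths = {}
    for domain, w in weights.items():
        score = sum(wi * hi for wi, hi in zip(w, h)) + bias[domain]
        strengths[domain] = max_strength * _sigmoid(score)
    return strengths


def steer(h: Vector, steering_vectors: Dict[str, Vector], strengths: Dict[str, float]) -> Vector:
    """Return h + sum_d strength_d * v_d; domains missing from `strengths` contribute nothing."""
    out = list(h)
    for domain, v in steering_vectors.items():
        alpha = strengths.get(domain, 0.0)
        for i, vi in enumerate(v):
            out[i] += alpha * vi
    return out


if __name__ == "__main__":
    v_sql = mean_difference([[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [2.0, 2.0]])
    print(v_sql)  # [1.0, 2.0]
    h = [1.0, 0.0]
    alphas = router_strengths(h, {"sql": [0.0, 0.0]}, {"sql": 0.0})
    print(steer(h, {"sql": [0.0, 2.0]}, alphas))  # [1.0, 1.0]
```

With a zero-weight, zero-bias router, the sigmoid gives a strength of 0.5. A steering vector `[0.0, 2.0]` therefore shifts the activation `[1.0, 0.0]` to `[1.0, 1.0]`. In a real model, the same addition would be applied to a chosen layer's hidden states, for example from a forward hook.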

Knowledge forest: a novel model to organize knowledge fragments

Dec 14, 2019

Abstract: With the rapid growth of knowledge, there is a steady trend toward knowledge fragmentization. Under fragmentization, knowledge related to a specific topic in a course is scattered across isolated and autonomous knowledge sources. We term the knowledge of one facet of a specific topic a knowledge fragment. Knowledge fragmentization poses two challenges. First, because knowledge is scattered across various sources, users must spend considerable effort searching for knowledge on the topics they are interested in, which leads to information overload. Second, learning dependencies are concealed by the isolation and autonomy of knowledge sources, which causes learning disorientation. Learning dependencies are the precedence relationships between topics in the learning process. To solve the knowledge fragmentization problem, we propose a novel knowledge organization model, the knowledge forest, which consists of facet trees and learning dependencies. Facet trees organize knowledge fragments by facet hyponymy to alleviate information overload. Learning dependencies put otherwise disordered topics in order to cope with learning disorientation. We conduct extensive experiments on three manually constructed datasets from the Data Structure, Data Mining, and Computer Network courses. The results show that the knowledge forest effectively organizes knowledge fragments and alleviates both information overload and learning disorientation.
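As a rough illustration of the two components named in the abstract, the sketch below models a knowledge forest as a set of facet trees plus a prerequisite graph between topics. It then derives a learning order by topological sorting. The class and method names (`FacetNode`, `KnowledgeForest`, `learning_order`) are hypothetical, and so is the choice of Kahn's algorithm with alphabetical tie-breaking. The abstract does not specify how the model is represented or traversed.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class FacetNode:
    """A facet of a topic; children are hyponym (more specific) facets."""
    name: str
    fragments: List[str] = field(default_factory=list)
    children: List["FacetNode"] = field(default_factory=list)

    def add_child(self, name: str) -> "FacetNode":
        child = FacetNode(name)
        self.children.append(child)
        return child

    def all_fragments(self) -> List[str]:
        """Fragments of this facet followed by those of its sub-facets, depth-first."""
        out = list(self.fragments)
        for child in self.children:
            out.extend(child.all_fragments())
        return out


@dataclass
class KnowledgeForest:
    trees: Dict[str, FacetNode] = field(default_factory=dict)  # topic -> root of its facet tree
    prerequisites: Dict[str, Set[str]] = field(default_factory=dict)  # topic -> topics to learn first

    def add_topic(self, topic: str) -> FacetNode:
        self.prerequisites.setdefault(topic, set())
        return self.trees.setdefault(topic, FacetNode(topic))

    def add_dependency(self, before: str, after: str) -> None:
        """Record the learning dependency: `before` should be learned before `after`."""
        self.add_topic(before)
        self.add_topic(after)
        self.prerequisites[after].add(before)

    def learning_order(self) -> List[str]:
        """Topological order of topics (Kahn's algorithm, ties broken alphabetically)."""
        indegree = {t: len(p) for t, p in self.prerequisites.items()}
        dependents: Dict[str, List[str]] = {t: [] for t in self.prerequisites}
        for topic, pres in self.prerequisites.items():
            for pre in pres:
                dependents[pre].append(topic)
        ready = [t for t, d in indegree.items() if d == 0]
        heapq.heapify(ready)
        order: List[str] = []
        while ready:
            topic = heapq.heappop(ready)
            order.append(topic)
            for nxt in dependents[topic]:
                indegree[nxt] -= 1
                if indegree[nxt] == 0:
                    heapq.heappush(ready, nxt)
        if len(order) != len(indegree):
            raise ValueError("learning dependencies contain a cycle")
        return order


if __name__ == "__main__":
    forest = KnowledgeForest()
    forest.add_dependency("Array", "Linked list")
    forest.add_dependency("Linked list", "Stack")
    forest.add_topic("Binary tree")
    stack = forest.add_topic("Stack")
    stack.add_child("definition").fragments.append("A stack is a LIFO list.")
    impl = stack.add_child("implementation")
    impl.add_child("array-based").fragments.append("Push writes to the top index.")
    print(forest.learning_order())  # ['Array', 'Binary tree', 'Linked list', 'Stack']
    print(stack.all_fragments())
```

The facet tree groups a topic's fragments under increasingly specific facets. The dependency graph turns scattered topics into a study sequence and rejects cyclic dependencies.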

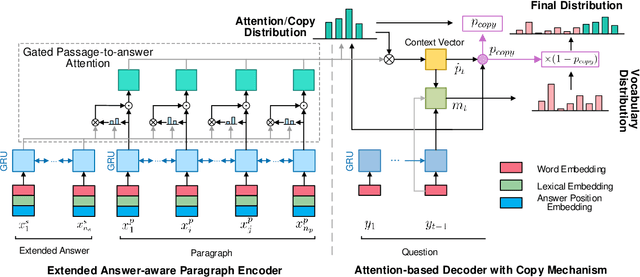

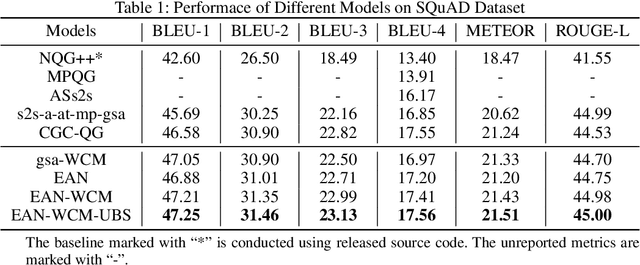

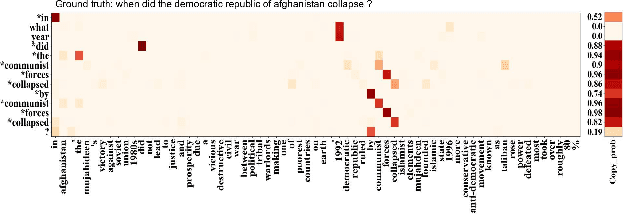

Extended Answer and Uncertainty Aware Neural Question Generation

Nov 19, 2019

Abstract: In this paper, we study automatic question generation, the task of creating questions from text passages in which certain spans of the text serve as the answers. We propose an Extended Answer-aware Network (EAN), which is trained with a Word-based Coverage Mechanism (WCM) and decodes with Uncertainty-aware Beam Search (UBS). The target answer is often represented inadequately. To address this, the EAN encodes the sentence surrounding the target answer to represent it. It then incorporates this extended-answer information into the paragraph representation through gated paragraph-to-answer attention. To reduce undesirable repetition, the WCM penalizes the model during training for repeatedly attending to the same words at different time steps. The UBS seeks a better balance between the model's confidence in copying words from the input paragraph and its confidence in generating words from the vocabulary. Experiments on the SQuAD dataset show that our approach achieves significant performance improvements.
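The abstract does not give the WCM formula. One plausible reading is sketched below under that assumption. It keeps the min-based coverage loss of See et al. (2017, pointer-generator networks), Σ_i min(a_i^t, c_i^t). However, it accumulates coverage per word type rather than per source position. Attending to a second occurrence of an already-covered word is then penalized as well. The function name `word_coverage_penalty` and this exact formulation are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def word_coverage_penalty(
    source_words: Sequence[str], attention_steps: Sequence[Sequence[float]]
) -> float:
    """Hypothetical word-based coverage penalty.

    attention_steps[t][i] is the attention weight on source position i at decoding step t.
    Positions holding the same word share one coverage value. Each step adds
    sum_w min(attention on w at this step, coverage of w from earlier steps).
    """
    coverage: Dict[str, float] = defaultdict(float)
    total = 0.0
    for attn in attention_steps:
        # Aggregate this step's attention by word type.
        per_word: Dict[str, float] = defaultdict(float)
        for word, a in zip(source_words, attn):
            per_word[word] += a
        # Penalize overlap with what earlier steps already attended to.
        total += sum(min(a, coverage[w]) for w, a in per_word.items())
        # Then add this step's attention to the running coverage.
        for w, a in per_word.items():
            coverage[w] += a
    return total


if __name__ == "__main__":
    # Step 2 attends to the *second* "the"; a per-position coverage loss would not penalize this.
    print(word_coverage_penalty(["the", "cat", "the"], [[0.5, 0.5, 0.0], [0.0, 0.0, 1.0]]))  # 0.5
```

Sharing coverage across repeated word types discourages the decoder from producing the same word twice by attending to different copies of it in the paragraph. A per-position coverage loss cannot catch this case.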
