Hogeon Seo

SleePyCo: Automatic Sleep Scoring with Feature Pyramid and Contrastive Learning

Sep 20, 2022

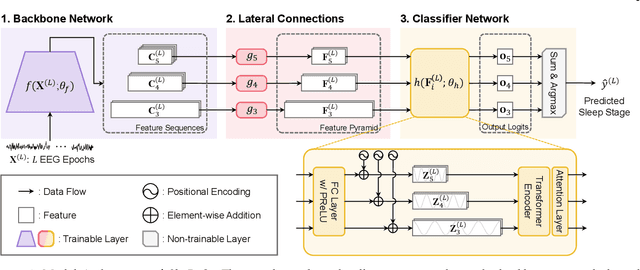

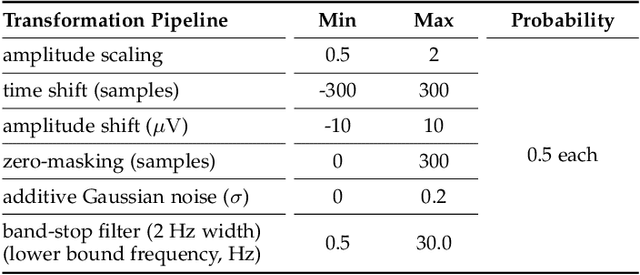

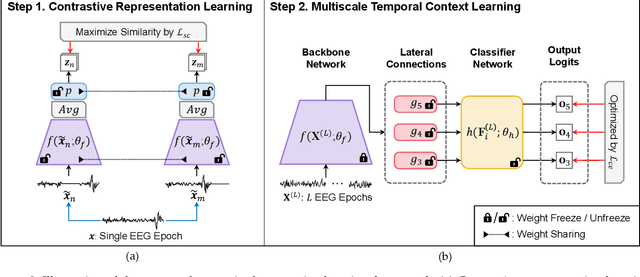

Abstract: Automatic sleep scoring is essential for the diagnosis and treatment of sleep disorders and enables longitudinal sleep tracking in home environments. Learning-based automatic sleep scoring on single-channel electroencephalogram (EEG) is actively studied because obtaining multi-channel signals during sleep is difficult. However, learning representations from raw EEG signals is challenging owing to the following issues: 1) sleep-related EEG patterns occur on different temporal and frequency scales and 2) sleep stages share similar EEG patterns. To address these issues, we propose a deep learning framework named SleePyCo that incorporates 1) a feature pyramid and 2) supervised contrastive learning for automatic sleep scoring. For the feature pyramid, we propose a backbone network named SleePyCo-backbone to consider multiple feature sequences on different temporal and frequency scales. Supervised contrastive learning allows the network to extract class-discriminative features by minimizing the distance between intra-class features and simultaneously maximizing the distance between inter-class features. Comparative analyses on four public datasets demonstrate that SleePyCo consistently outperforms existing frameworks based on single-channel EEG. Extensive ablation experiments show that SleePyCo exhibits enhanced overall performance, with significant improvements in discrimination between the N1 and rapid eye movement (REM) stages.
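The supervised contrastive objective mentioned in the abstract can be illustrated with a small sketch. The abstract does not give the exact loss, so the code below assumes the commonly used supervised contrastive formulation (cosine similarity on L2-normalized features, softmax over all other samples in the batch). The function names, the temperature value of 0.1, and the toy feature vectors are illustrative assumptions, not details taken from SleePyCo.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def supervised_contrastive_loss(features, labels, temperature=0.1):
    """Mean supervised contrastive loss over a batch (assumed standard formulation).

    For each anchor, same-label samples are positives; all other samples in the
    batch form the softmax denominator. Anchors without any positive are skipped.
    """
    z = [l2_normalize(f) for f in features]
    n = len(z)
    total, n_anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue
        # Temperature-scaled similarities between the anchor and every other sample.
        sims = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n)
            if j != i
        }
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        n_anchors += 1
    return total / n_anchors if n_anchors else 0.0


# Toy 2-D features: the first two vectors are close together, as are the last two.
toy_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_clustered = supervised_contrastive_loss(toy_features, ["N1", "N1", "REM", "REM"])
loss_mixed = supervised_contrastive_loss(toy_features, ["N1", "REM", "N1", "REM"])
```

In this toy batch, the loss is lower when the labels agree with the geometric clusters than when same-label features lie far apart, which is the behavior the abstract describes: intra-class features are pulled together and inter-class features pushed apart.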

Intra- and Inter-epoch Temporal Context Network (IITNet) for Automatic Sleep Stage Scoring

Feb 18, 2019

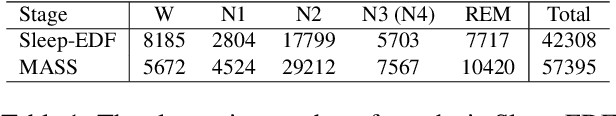

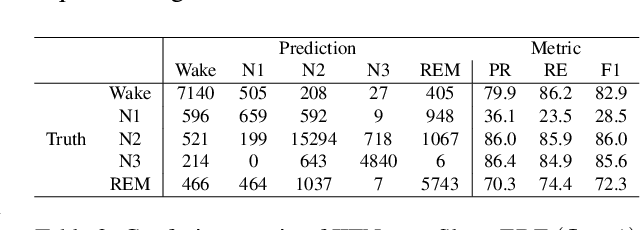

Abstract: This study proposes a novel deep learning model, called IITNet, to learn intra- and inter-epoch temporal contexts from a raw single-channel electroencephalogram (EEG) for automatic sleep stage scoring. When sleep experts identify the sleep stage of a 30-second segment of polysomnography (PSG) data, called an epoch, they examine sleep-related events such as sleep spindles, K-complexes, and frequency components in local segments of the epoch (sub-epochs) and consider the relations between sleep-related events in successive epochs to follow the transition rules. Inspired by this, IITNet learns to encode each sub-epoch into a representative feature via a deep residual network, then captures contextual information in the sequence of representative features via a BiLSTM. Thus, IITNet can extract features at the sub-epoch level and consider temporal context not only between epochs but also within an epoch. IITNet is an end-to-end architecture and does not need any preprocessing, handcrafted feature design, balanced sampling, pre-training, or fine-tuning. Our model was trained and evaluated on the Sleep-EDF and MASS datasets and outperformed other state-of-the-art results on both datasets, with an overall accuracy (ACC) of 84.0% and 86.6%, macro F1-score (MF1) of 77.7 and 80.8, and Cohen's kappa of 0.78 and 0.80 on Sleep-EDF and MASS, respectively.
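For readers unfamiliar with the three metrics reported above, the following is a minimal sketch of how ACC, MF1, and Cohen's kappa are conventionally computed from per-epoch labels. It uses the standard textbook definitions; the function name `scoring_metrics` and the toy label sequences are hypothetical, and the sketch is not the authors' evaluation code. Note that the abstract reports MF1 on a 0 to 100 scale, whereas this sketch returns values in the range 0 to 1.

```python
from collections import Counter


def scoring_metrics(y_true, y_pred):
    """Return (accuracy, macro F1, Cohen's kappa) for two equal-length label lists."""
    n = len(y_true)
    classes = sorted(set(y_true) | set(y_pred))

    # Overall accuracy: fraction of epochs whose predicted stage matches the reference.
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / n

    # Macro F1: unweighted mean of the per-class F1 scores.
    f1_scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denom if denom else 0.0)
    mf1 = sum(f1_scores) / len(f1_scores)

    # Cohen's kappa: observed agreement corrected for chance agreement p_e,
    # where p_e comes from the marginal label frequencies of both sequences.
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in classes) / (n * n)
    kappa = (acc - p_e) / (1 - p_e) if p_e < 1 else 1.0
    return acc, mf1, kappa


# Toy example: six epochs, one N1 epoch misclassified as N2.
reference = ["W", "W", "N1", "N2", "N2", "REM"]
predicted = ["W", "W", "N2", "N2", "N2", "REM"]
acc, mf1, kappa = scoring_metrics(reference, predicted)
```

The toy example shows why MF1 is reported alongside ACC for sleep staging: a single missed N1 epoch barely moves the accuracy but drops the N1 F1-score to zero, which pulls the macro average down noticeably.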
