Hoang-Son Tran

Negative Dependence as a toolbox for machine learning : review and new developments

Feb 11, 2025

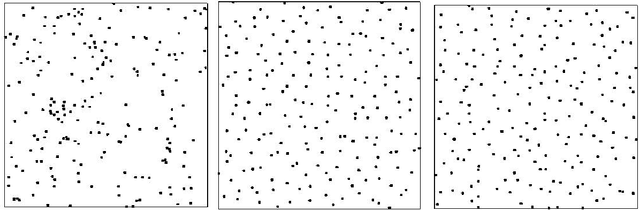

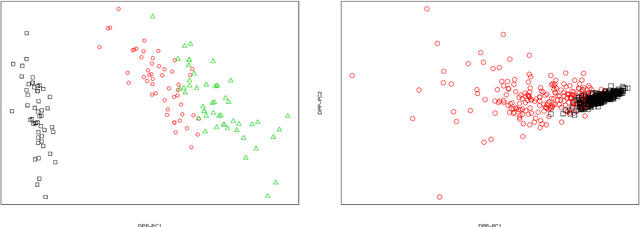

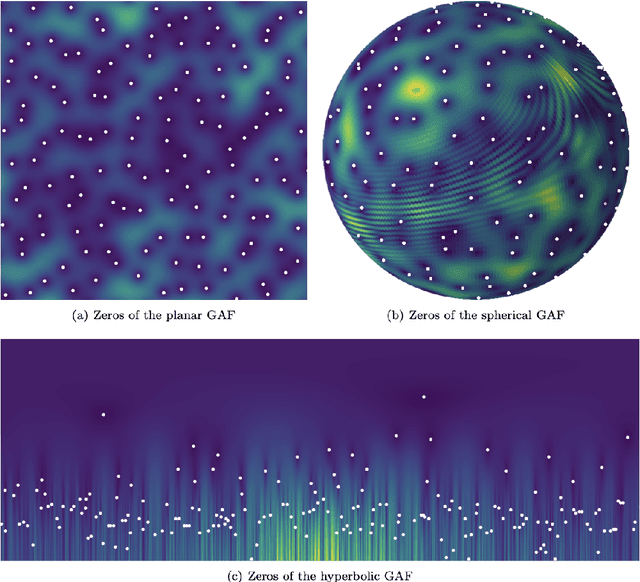

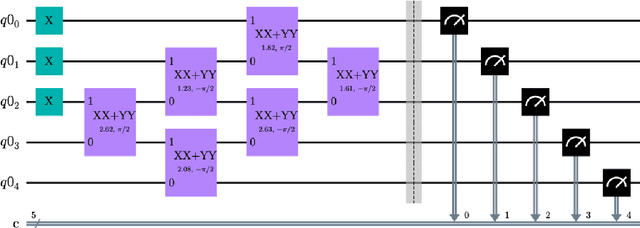

Abstract: Negative dependence is becoming a key driver in advancing learning capabilities beyond the limits of traditional independence. Recent developments support negatively dependent systems as a learning paradigm for a broad range of fundamental machine learning challenges, including optimization, sampling, dimensionality reduction and sparse signal recovery, where they often surpass current methods based on statistical independence. The most popular negatively dependent model has been that of determinantal point processes (DPPs), which have their origins in quantum theory. However, other models, such as perturbed lattice models, strongly Rayleigh measures, and zeros of random functions, have gained salience in various learning applications. In this article, we review this burgeoning field of research as it has developed over the past two decades or so. We also present new results on applications of DPPs to the parsimonious representation of neural networks. Within the limited scope of the article, we mostly focus on aspects of this area to which the authors have contributed in recent years, including applications to Monte Carlo methods, coresets and stochastic gradient descent, stochastic networks, signal processing and connections to quantum computation. However, starting from the basics of negative dependence for the uninitiated reader, extensive references are provided to a broad swath of related developments that could not be covered within our limited scope. While existing works and reviews generally focus on specific negatively dependent models (e.g. DPPs), a notable feature of this article is that it addresses negative dependence as a machine learning methodology as a whole. In this vein, it covers an array of negatively dependent models and their applications well beyond DPPs, thereby putting forward a very general and rather unique perspective.

Small coresets via negative dependence: DPPs, linear statistics, and concentration

Nov 01, 2024

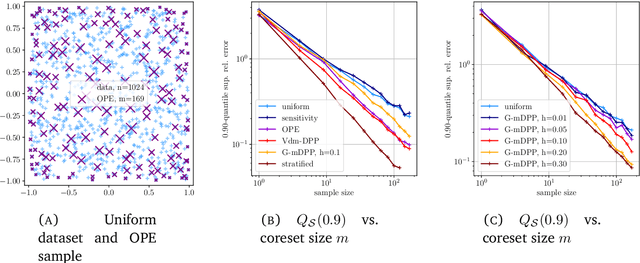

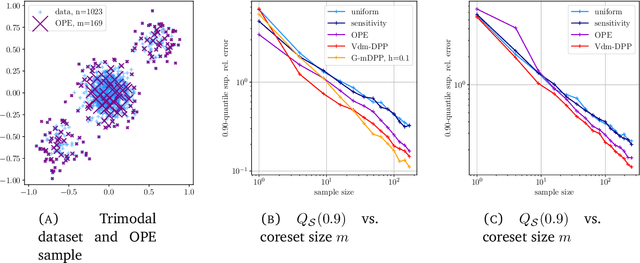

Abstract: Determinantal point processes (DPPs) are random configurations of points with tunable negative dependence. Because sampling is tractable, DPPs are natural candidates for subsampling tasks, such as minibatch selection or coreset construction. A *coreset* is a subset of a (large) training set, such that minimizing an empirical loss averaged over the coreset is a controlled replacement for the intractable minimization of the original empirical loss. Typically, the control takes the form of a guarantee that the average loss over the coreset approximates the total loss uniformly across the parameter space. Recent work has provided significant empirical support in favor of using DPPs to build randomized coresets, coupled with interesting theoretical results that are suggestive but leave some key questions unanswered. In particular, the central question of whether the cardinality of a DPP-based coreset is fundamentally smaller than that of one based on independent sampling remained open. In this paper, we answer this question in the affirmative, demonstrating that *DPPs can provably outperform independently drawn coresets*. In this vein, we contribute a conceptual understanding of coreset loss as a *linear statistic* of the (random) coreset. We leverage this structural observation to connect the coresets problem to a more general problem of concentration phenomena for linear statistics of DPPs, wherein we obtain *effective concentration inequalities that extend well beyond the state of the art*, encompassing general non-projection, even non-symmetric kernels. The latter have recently been shown to be of interest in machine learning beyond coresets, but come with a limited theoretical toolbox, to the extension of which our result contributes. Finally, we are also able to address the coresets problem for vector-valued objective functions, a novelty in the coresets literature.
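To make the phrase "coreset loss as a linear statistic" concrete, here is a minimal sketch under stated assumptions. It uses the independent-sampling baseline that the abstract compares against, not a DPP sampler: each point is kept independently with its own inclusion probability, and kept points are reweighted by the inverse of that probability. The function name `coreset_loss` and its arguments are hypothetical and do not come from the paper. The estimate is a sum of per-point terms over the random subset, which is what makes it a linear statistic.

```python
import random


def coreset_loss(losses, incl_probs, rng):
    """Inverse-probability-weighted estimate of the average loss.

    Assumption: point i is kept independently with probability
    incl_probs[i] (independent baseline, NOT a DPP). Each kept point
    contributes losses[i] / incl_probs[i], so the estimate is a sum of
    per-point terms over the random subset, i.e. a linear statistic.
    """
    n = len(losses)
    total = 0.0
    for loss_i, p_i in zip(losses, incl_probs):
        if rng.random() < p_i:  # keep point i with probability p_i
            total += loss_i / p_i  # reweight so the estimate is unbiased
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    losses = [1.0, 2.0, 3.0, 4.0]  # per-point losses at one fixed parameter
    probs = [0.5] * len(losses)  # uniform inclusion probabilities
    print(coreset_loss(losses, probs, rng))
```

Averaged over many draws, the estimate matches the full-data average loss; the question the abstract addresses is how tightly a single draw concentrates around that average when the subset comes from a DPP rather than from independent sampling.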

Learning Networks from Gaussian Graphical Models and Gaussian Free Fields

Aug 04, 2023

Abstract: We investigate the problem of estimating the structure of a weighted network from repeated measurements of a Gaussian Graphical Model (GGM) on the network. In this vein, we consider GGMs whose covariance structures align with the geometry of the weighted network on which they are based. Such GGMs have been of longstanding interest in statistical physics, and are referred to as the Gaussian Free Field (GFF). In recent years, they have attracted considerable interest in machine learning and theoretical computer science. In this work, we propose a novel estimator for the weighted network (equivalently, its Laplacian) from repeated measurements of a GFF on the network, based on the Fourier analytic properties of the Gaussian distribution. In this pursuit, our approach exploits complex-valued statistics constructed from observed data, which are of interest in their own right. We demonstrate the effectiveness of our estimator with concrete recovery guarantees and bounds on the required sample complexity. In particular, we show that the proposed statistic achieves the parametric rate of estimation for fixed network size. In the setting of networks growing with sample size, we show that for Erdős-Rényi random graphs $G(d,p)$ above the connectivity threshold, network recovery takes place with high probability as soon as the sample size $n$ satisfies $n \gg d^4 \log d \cdot p^{-2}$.
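For orientation, the "Fourier analytic properties of the Gaussian distribution" mentioned above rest on a standard identity, stated here in notation that is an assumption of this note ($\Sigma$ for the covariance, $t$ for the frequency vector) rather than taken from the paper. For a centered Gaussian vector $X \sim N(0, \Sigma)$ in $\mathbb{R}^d$, the characteristic function is

$$
\mathbb{E}\left[ e^{i \langle t, X \rangle} \right] = \exp\left( -\tfrac{1}{2}\, t^\top \Sigma\, t \right), \qquad t \in \mathbb{R}^d .
$$

Given samples $X_1, \dots, X_n$, the empirical average $\frac{1}{n} \sum_{k=1}^{n} e^{i \langle t, X_k \rangle}$ is therefore a complex-valued statistic whose expectation depends on the data only through the quadratic form $t^\top \Sigma\, t$. The abstract does not say how the estimator recovers the Laplacian from such statistics, so this identity should be read as background only.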
