Hesam Mojtahedi

BIM-Discrepancy-Driven Active Sensing for Risk-Aware UAV-UGV Navigation

Nov 18, 2025

Abstract: This paper presents a BIM-discrepancy-driven active sensing framework for cooperative navigation between unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) in dynamic construction environments. Traditional navigation approaches rely on static Building Information Modeling (BIM) priors or limited onboard perception. In contrast, our framework continuously fuses real-time LiDAR data from aerial and ground robots with BIM priors to maintain an evolving 2D occupancy map. We quantify navigation safety through a unified corridor-risk metric integrating occupancy uncertainty, BIM-map discrepancy, and clearance. When risk exceeds safety thresholds, the UAV autonomously re-scans affected regions to reduce uncertainty and enable safe replanning. Validation in PX4-Gazebo simulation with Robotec GPU LiDAR demonstrates that risk-triggered re-scanning reduces mean corridor risk by 58% and map entropy by 43% compared to static BIM navigation, while maintaining clearance margins above 0.4 m. Compared to frontier-based exploration, our approach achieves similar uncertainty reduction in half the mission time. These results demonstrate that integrating BIM priors with risk-adaptive aerial sensing enables scalable, uncertainty-aware autonomy for construction robotics.
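As a rough illustration of how a corridor-risk score could combine the three ingredients named in the abstract (occupancy uncertainty, BIM-map discrepancy, and clearance), here is a minimal Python sketch. The weighted-sum form, the weights, the rescan threshold, and all function names are assumptions made for illustration and are not taken from the paper. Only the 0.4 m clearance figure appears in the abstract, and it is reused here merely as a default.

```python
import math

def cell_entropy(p):
    """Binary Shannon entropy (in bits) of an occupancy probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def corridor_risk(occ_probs, bim_occ, clearances,
                  w_unc=0.4, w_disc=0.4, w_clr=0.2, min_clearance=0.4):
    """Hypothetical risk score in [0, 1] for one corridor.

    occ_probs  : fused occupancy probabilities of the corridor cells
    bim_occ    : BIM prior for the same cells (0 = free, 1 = occupied)
    clearances : distance in metres to the nearest obstacle along the corridor
    The weights and the weighted-sum form are assumptions, not the paper's metric.
    """
    n = len(occ_probs)
    # Mean per-cell entropy: 0 when the map is certain, 1 when every cell is 50/50.
    uncertainty = sum(cell_entropy(p) for p in occ_probs) / n
    # Mean absolute disagreement between the live map and the BIM prior.
    discrepancy = sum(abs(p - b) for p, b in zip(occ_probs, bim_occ)) / n
    # Penalty grows as the tightest point approaches the minimum clearance.
    tightest = max(min(clearances), 1e-6)
    clearance_penalty = min(1.0, min_clearance / tightest)
    return w_unc * uncertainty + w_disc * discrepancy + w_clr * clearance_penalty

def needs_rescan(risk, threshold=0.5):
    """Trigger an aerial re-scan when risk exceeds the (assumed) threshold."""
    return risk > threshold

safe = corridor_risk([0.0, 0.0], [0, 0], [4.0, 4.0])
risky = corridor_risk([0.5, 0.5], [0, 0], [0.4, 1.0])
print(safe, needs_rescan(safe))
print(risky, needs_rescan(risky))
```

In this sketch a corridor that matches the BIM prior and has generous clearance scores near zero, while an uncertain, narrow corridor crosses the threshold and would be queued for re-scanning.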

GLIDE: A Coordinated Aerial-Ground Framework for Search and Rescue in Unknown Environments

Sep 17, 2025

Abstract: We present a cooperative aerial-ground search-and-rescue (SAR) framework that pairs two unmanned aerial vehicles (UAVs) with an unmanned ground vehicle (UGV) to achieve rapid victim localization and obstacle-aware navigation in unknown environments. We dub this framework Guided Long-horizon Integrated Drone Escort (GLIDE), highlighting the UGV's reliance on UAV guidance for long-horizon planning. In our framework, a goal-searching UAV executes real-time onboard victim detection and georeferencing to nominate goals for the ground platform, while a terrain-scouting UAV flies ahead of the UGV's planned route to provide mid-level traversability updates. The UGV fuses aerial cues with local sensing to perform time-efficient A* planning and continuous replanning as information arrives. Additionally, we present a hardware demonstration (using a GEM e6 golf cart as the UGV and two X500 UAVs) to evaluate end-to-end SAR mission performance and include simulation ablations to assess the planning stack in isolation from detection. Empirical results demonstrate that explicit role separation across UAVs, coupled with terrain scouting and guided planning, improves reach time and navigation safety in time-critical SAR missions.
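The planning loop described in the abstract (A* planning with replanning as traversability updates arrive) can be sketched on a toy grid as follows. This is a generic textbook A* with a hypothetical `replan_on_update` helper, written under the assumption of a 4-connected grid with unit step costs. It is not the GLIDE planner, whose map representation and cost function are not given in the abstract.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected grid; grid[r][c] == 0 means traversable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if cost > g[cur]:
            continue  # stale heap entry
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = cost + 1
                if ng < g.get(nxt, float("inf")):
                    g[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable

def replan_on_update(grid, path, blocked_cells, current, goal):
    """Hypothetical helper: apply a scout's obstacle report, replan if needed."""
    for r, c in blocked_cells:
        grid[r][c] = 1
    if any(cell in blocked_cells for cell in path):
        return astar(grid, current, goal)
    return path

grid = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
path = astar(grid, (0, 0), (0, 2))
# A scouting report marks the middle of the direct route as blocked.
new_path = replan_on_update(grid, path, {(0, 1)}, (0, 0), (0, 2))
print(path)
print(new_path)
```

Replanning only when a reported obstacle intersects the current path keeps the common case cheap. A real system would more likely use an incremental planner or richer traversability costs.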

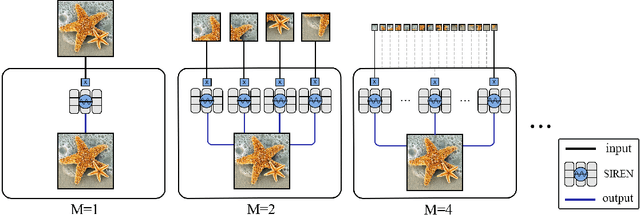

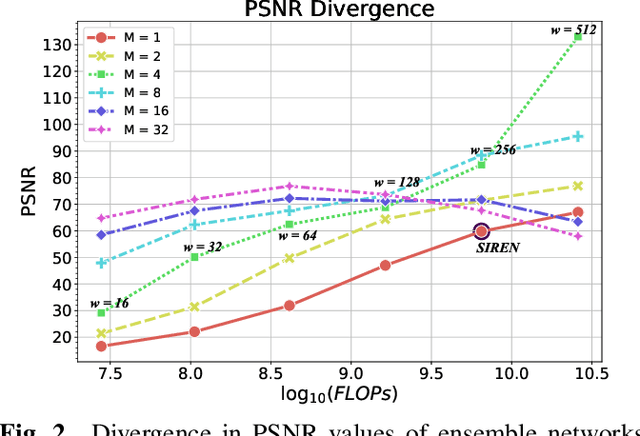

Ensemble Neural Representation Networks

Oct 07, 2021

Abstract: Implicit Neural Representation (INR) has recently attracted considerable attention for storing various types of signals in continuous forms. The existing INR networks require lengthy training processes and high-performance computational resources. In this paper, we propose a novel sub-optimal ensemble architecture for INR that resolves the aforementioned problems. In this architecture, the representation task is divided into several sub-tasks done by independent sub-networks. We show that the performance of the proposed ensemble INR architecture may decrease if the dimensions of sub-networks increase. Hence, it is vital to suggest an optimization algorithm to find the sub-optimal structure of the ensemble network, which is done in this paper. According to the simulation results, the proposed architecture not only has significantly fewer floating-point operations (FLOPs) and less training time, but it also has better performance in terms of Peak Signal to Noise Ratio (PSNR) compared to those of its counterparts.
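For readers unfamiliar with the PSNR figure of merit mentioned in the abstract, the standard definition is PSNR = 10 log10(MAX² / MSE). The short Python sketch below computes it for flat lists of pixel values. The function name and the 8-bit peak value of 255 are assumptions for illustration, and the abstract does not describe the paper's evaluation code.

```python
import math

def psnr(original, reconstructed, max_value=255.0):
    """Peak Signal to Noise Ratio in dB between two equal-length signals."""
    if len(original) != len(reconstructed):
        raise ValueError("signals must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return float("inf")  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)

# Every pixel is off by one grey level, so the MSE is exactly 1.
print(psnr([10, 20, 30, 40], [11, 21, 29, 39]))
```

Higher PSNR means the reconstruction is closer to the original signal.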
