Heoun-taek Lim

Towards a Better Understanding of VR Sickness: Physical Symptom Prediction for VR Contents

Apr 14, 2021

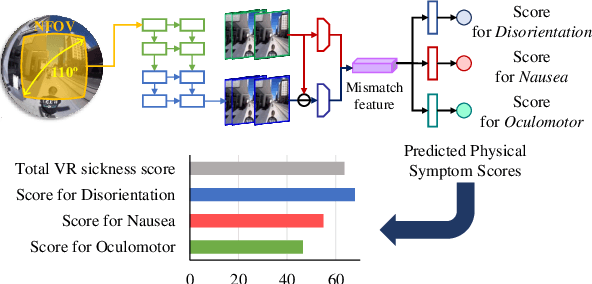

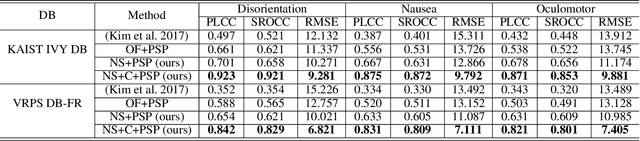

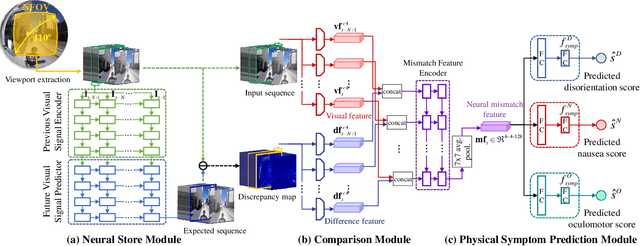

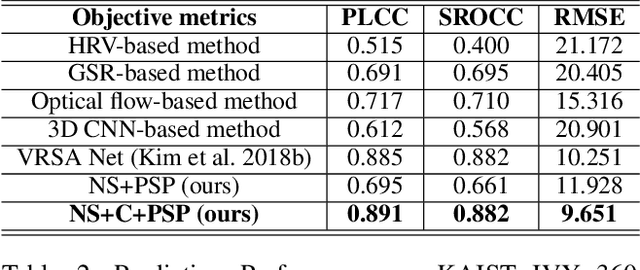

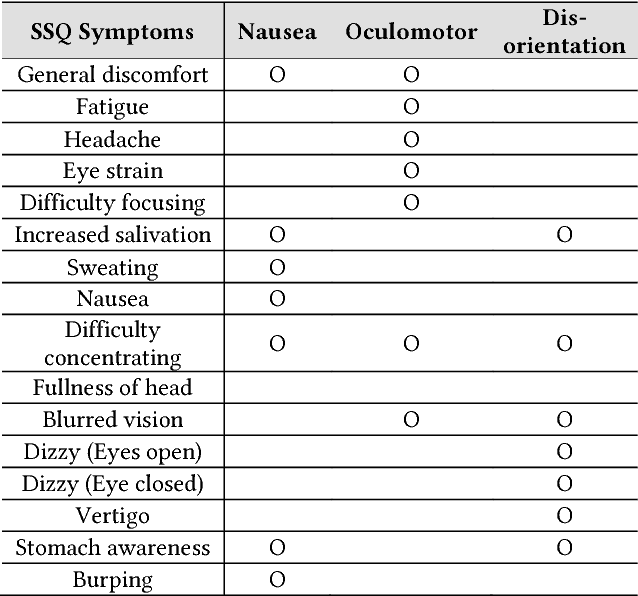

Abstract: We address the black-box issue of VR sickness assessment (VRSA) by evaluating the level of physical symptoms of VR sickness. For VR contents that induce a similar level of VR sickness, the physical symptoms can vary depending on the characteristics of the contents. Most existing VRSA methods have focused on assessing the overall VR sickness score. To better understand VR sickness, it is necessary to predict and report the levels of its major symptoms rather than only the overall degree of VR sickness. In this paper, we predict the degrees of the main physical symptoms affecting the overall degree of VR sickness, namely disorientation, nausea, and oculomotor symptoms. In addition, we introduce a new large-scale dataset for VRSA that includes 360 videos with various frame rates, physiological signals, and subjective scores. On the VRSA benchmark and our newly collected dataset, our approach shows the potential not only to achieve the highest correlation with subjective scores, but also to better understand which symptoms are the main causes of VR sickness.

VR IQA NET: Deep Virtual Reality Image Quality Assessment using Adversarial Learning

Apr 11, 2018

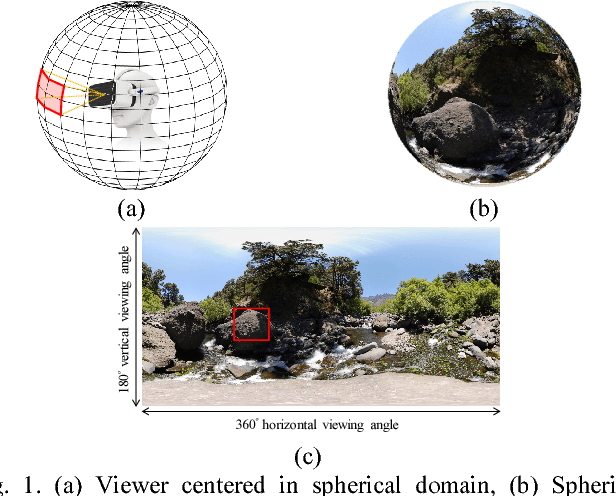

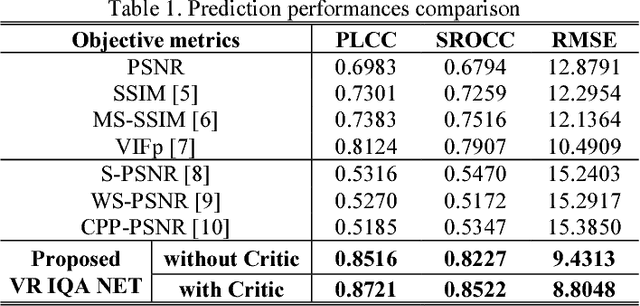

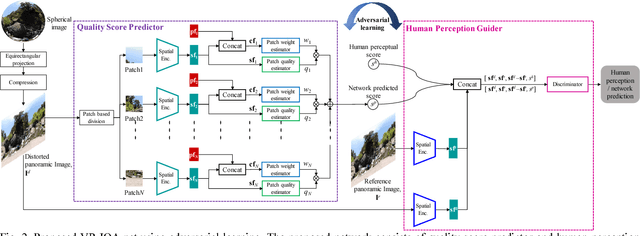

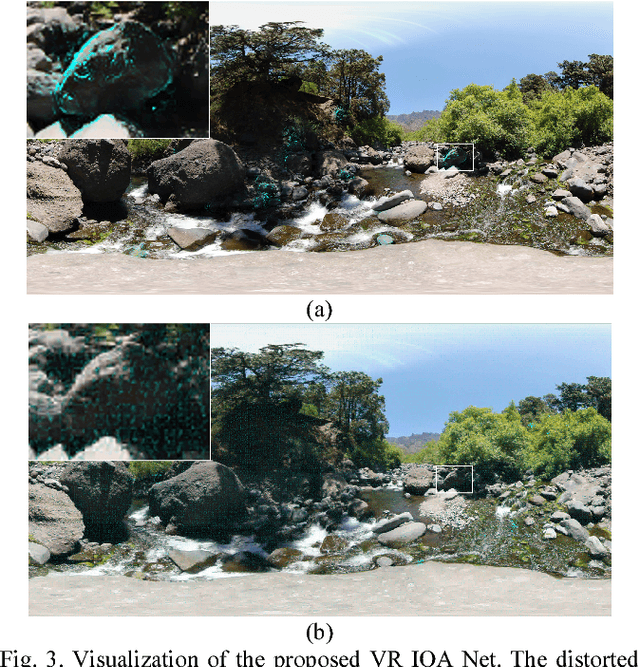

Abstract: In this paper, we propose a novel virtual reality image quality assessment (VR IQA) method with adversarial learning for omnidirectional images. To take into account the characteristics of omnidirectional images, we devise deep networks comprising a novel quality score predictor and a human perception guider. The proposed quality score predictor automatically predicts the quality score of a distorted image using latent spatial and position features. The proposed human perception guider criticizes the predicted quality score of the predictor against the human perceptual score using adversarial learning. For evaluation, we conducted extensive subjective experiments with an omnidirectional image dataset. Experimental results show that the proposed VR IQA metric outperforms 2-D IQA metrics and state-of-the-art VR IQA methods.

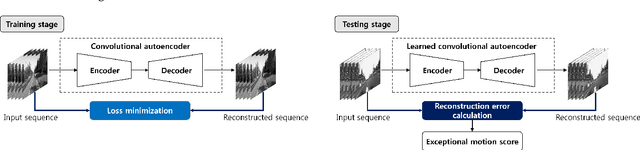

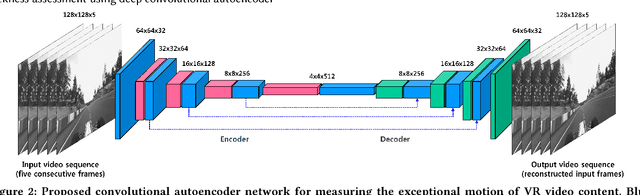

Measurement of exceptional motion in VR video contents for VR sickness assessment using deep convolutional autoencoder

Apr 11, 2018

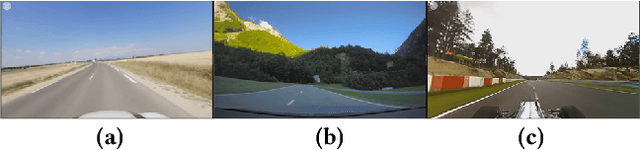

Abstract: This paper proposes a new objective metric of exceptional motion in VR video contents for VR sickness assessment. In a VR environment, VR sickness can be caused by several factors, including mismatched motion, field of view, motion parallax, and viewing angle. Similar to motion sickness, VR sickness can induce many physical symptoms such as general discomfort, headache, stomach awareness, nausea, vomiting, fatigue, and disorientation. To address viewing safety issues in virtual environments, it is of great importance to develop an objective VR sickness assessment method that predicts and analyses the degree of VR sickness induced by VR content. The proposed method takes into account motion information, which is one of the most important factors in determining the overall degree of VR sickness. In this paper, we detect the exceptional motion that is likely to induce VR sickness. Spatio-temporal features of the exceptional motion in the VR video content are encoded using a convolutional autoencoder. To assess VR sickness objectively, the level of exceptional motion in the VR video content is also measured using the convolutional autoencoder. The effectiveness of the proposed method has been evaluated through a subjective assessment experiment using the simulator sickness questionnaire (SSQ) in a VR environment.
