Hengru Xu

From Random to Supervised: A Novel Dropout Mechanism Integrated with Global Information

Oct 10, 2018

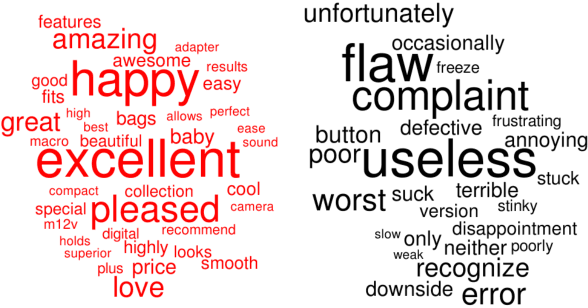

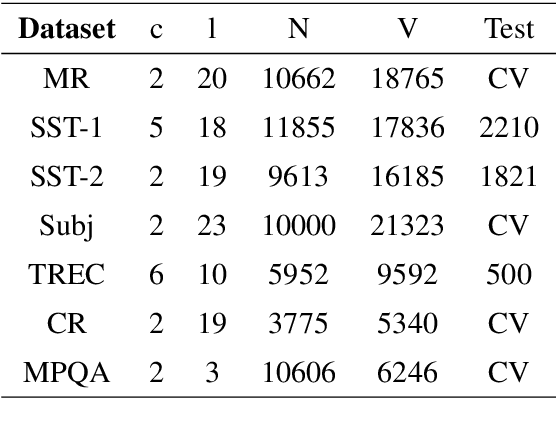

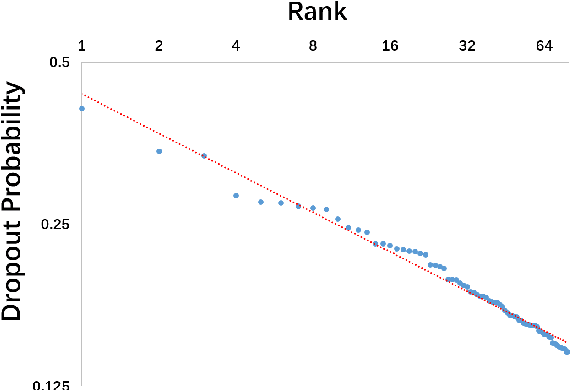

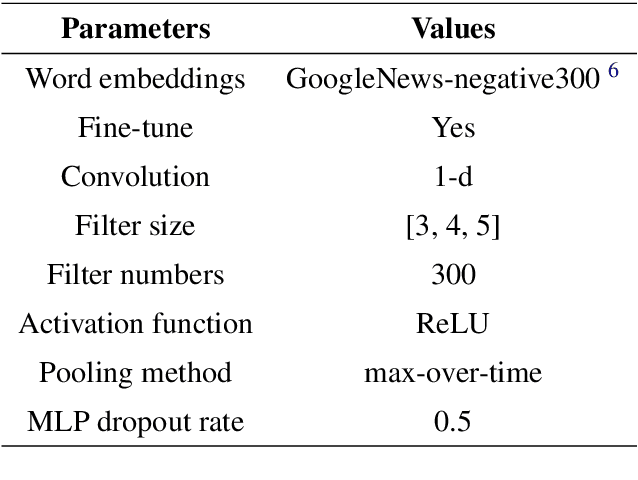

Abstract: Dropout is used to avoid overfitting by randomly dropping units from a neural network during training. Inspired by dropout, this paper presents GI-Dropout, a novel dropout method that integrates global information to improve neural networks for text classification. Unlike traditional dropout, in which units are dropped randomly with the same probability, GI-Dropout uses explicit instructions based on global information about the dataset to guide the training process. With GI-Dropout, the model is expected to pay more attention to inapparent features or patterns. Experiments on seven text classification tasks, including sentiment analysis and topic classification, demonstrate the effectiveness of dropout with global information.
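To make the contrast in the abstract concrete, here is a minimal NumPy sketch of standard dropout with one shared probability next to a variant that takes a separate drop probability per unit. This is an illustration only, not the paper's GI-Dropout: the abstract does not say how global information is turned into drop probabilities, so `drop_probs` is a hypothetical input supplied by the caller, and the function names are assumptions as well.

```python
import numpy as np

def uniform_dropout(x, p, rng):
    """Standard inverted dropout: every unit shares the same drop probability p."""
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

def weighted_dropout(x, drop_probs, rng):
    """Dropout with one drop probability per column (hypothetical variant).

    drop_probs is assumed to come from some dataset-level statistic;
    every entry must be strictly less than 1.
    """
    drop_probs = np.asarray(drop_probs, dtype=float)
    keep = rng.random(x.shape) >= drop_probs  # broadcasts across rows
    # Rescale each column so its expected value is unchanged.
    return x * keep / (1.0 - drop_probs)

rng = np.random.default_rng(0)
x = np.ones((4, 3))
probs = np.array([0.0, 0.5, 0.9])  # illustrative values only
out = weighted_dropout(x, probs, rng)
```

With these illustrative probabilities, the first column is never dropped, while the third is dropped most of the time and scaled up when kept, so the expected activation of each column stays the same.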

Generalize Symbolic Knowledge With Neural Rule Engine

Sep 04, 2018

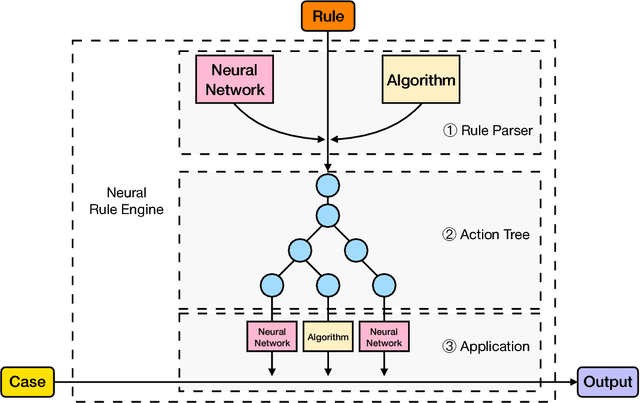

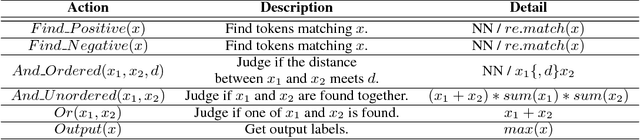

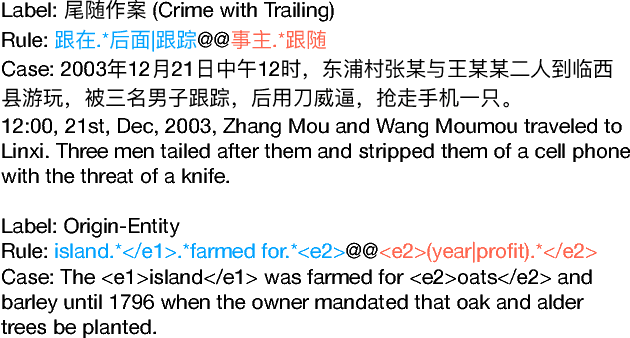

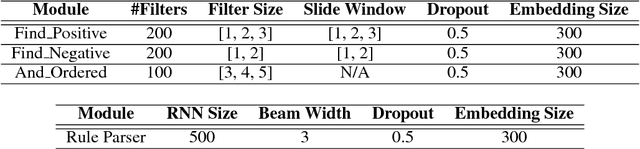

Abstract: Neural-symbolic learning aims to take advantage of both neural networks and symbolic knowledge to build better intelligent systems. As neural networks have come to dominate state-of-the-art results across a wide range of NLP tasks, improving neural models by integrating symbolic knowledge has attracted considerable attention. Unlike existing work, this paper investigates the combination of these two paradigms from the knowledge-driven side. We propose the Neural Rule Engine (NRE), which learns knowledge explicitly from logic rules and then generalizes it implicitly with neural networks. NRE is implemented with neural module networks in which each module represents an action of a logic rule. Experiments show that NRE can greatly improve the generalization ability of logic rules, with a significant increase in recall, while precision remains high.