Helin Dutagaci

Local region-learning modules for point cloud classification

Mar 30, 2023

Abstract: Data organization via forming local regions is an integral part of deep learning networks that process 3D point clouds in a hierarchical manner. At each level, the point cloud is sampled to extract representative points, and these points serve as the centers of local regions. The organization of local regions is of considerable importance since it determines the location and size of the receptive field at a particular layer of feature aggregation. In this paper, we present two local region-learning modules: a Center Shift Module that infers the appropriate shift for each center point, and a Radius Update Module that alters the radius of each local region. The parameters of the modules are learned by optimizing the loss associated with the particular task within an end-to-end network. We present alternatives for these modules through various ways of modeling the interactions of the features and locations of 3D points in the point cloud. We integrated the modules, both independently and together, into the PointNet++ object classification architecture and demonstrated that they contributed to a significant increase in classification accuracy on the ScanObjectNN data set.
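
To make the role of the two modules concrete, the following is a minimal sketch in plain Python of how a per-center shift and a per-region radius change which points fall inside a local region. The shift vector and the radii are hard-coded, hypothetical values; in the paper they are inferred by learned modules whose design is not given in this abstract. The function names `ball_query` and `apply_center_shift` are illustrative assumptions, not the authors' code.

```python
import math

def ball_query(points, center, radius):
    """Return the indices of all points within `radius` of `center`."""
    return [i for i, p in enumerate(points) if math.dist(p, center) <= radius]

def apply_center_shift(center, shift):
    """Move a region center by a shift vector (hypothetical module output)."""
    return tuple(c + s for c, s in zip(center, shift))

# Toy point cloud laid out along the x-axis.
points = [
    (0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0),
    (0.5, 0.0, 0.0),
    (0.6, 0.0, 0.0),
    (1.0, 0.0, 0.0),
]

# Fixed grouping: the sampled point is the center and the radius is constant.
center = points[0]
base_radius = 0.2
before = ball_query(points, center, base_radius)

# Adapted grouping: assumed values standing in for the learned shift and radius.
shift = (0.5, 0.0, 0.0)
new_radius = 0.45
shifted_center = apply_center_shift(center, shift)
after = ball_query(points, shifted_center, new_radius)

print("region before:", before)
print("region after: ", after)
```

Moving the center and enlarging the radius changes the membership of the region, which is what the abstract means by the location and size of the receptive field.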

Using t-distributed stochastic neighbor embedding for visualization and segmentation of 3D point clouds of plants

Feb 07, 2023

Abstract: In this work, the use of t-SNE is proposed to embed 3D point clouds of plants into 2D space for plant characterization. It is demonstrated that t-SNE operates as a practical tool to flatten and visualize a complete 3D plant model in 2D space. The perplexity parameter of t-SNE allows 2D rendering of plant structures at various organizational levels. Aside from the promise of serving as a visualization tool for plant scientists, t-SNE also provides a gateway for processing 3D point clouds of plants using their embedded counterparts in 2D. In this paper, simple methods are proposed to perform semantic segmentation and instance segmentation via grouping the embedded 2D points. The evaluation of these methods on a public 3D plant data set conveys the potential of t-SNE for enabling 2D implementation of various steps involved in automatic 3D phenotyping pipelines.

Assigning Apples to Individual Trees in Dense Orchards using 3D Color Point Clouds

Dec 26, 2020

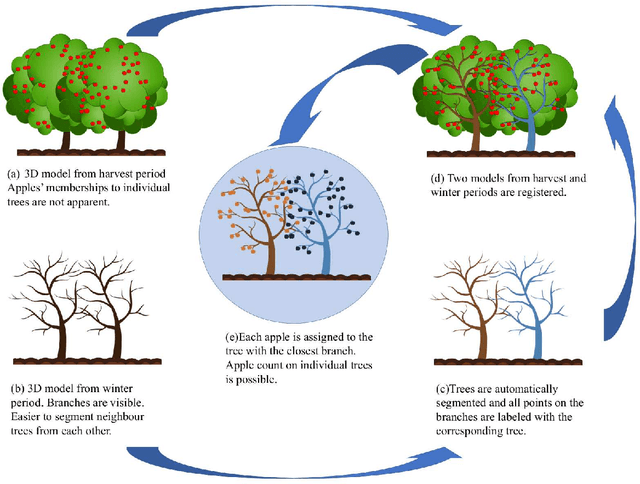

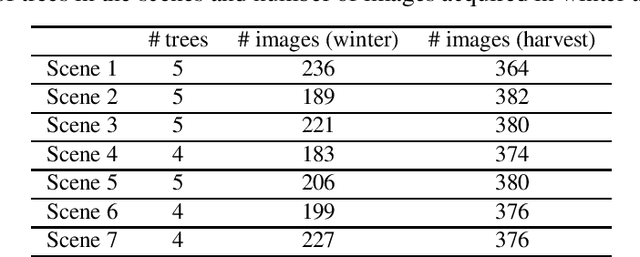

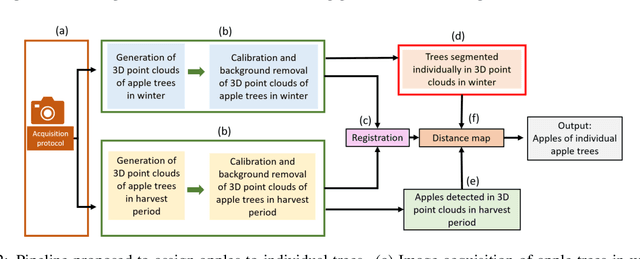

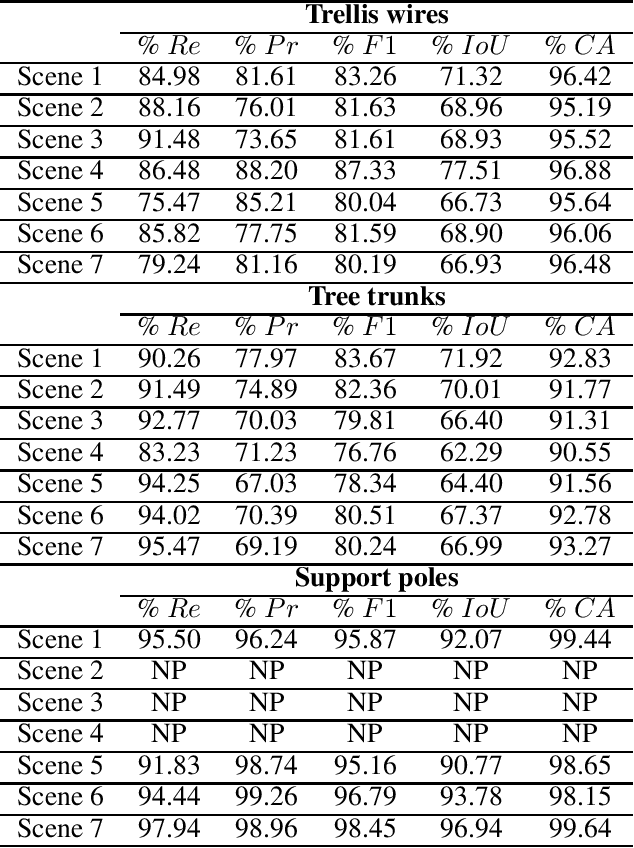

Abstract: We propose a 3D color point cloud processing pipeline to count apples on individual apple trees in trellis-structured orchards. Fruit counting at the tree level requires separating trees, which is challenging in dense orchards. We employ point clouds acquired from the leaf-off orchard in the winter period, when the branch structure is visible, to delineate tree crowns. We localize apples in point clouds acquired in the harvest period. Alignment of the two point clouds enables mapping apple locations to the delineated winter cloud and assigning each apple to its bearing tree. Our apple assignment method achieves an accuracy rate higher than 95%. In addition to presenting a first proof of feasibility, we also provide suggestions for further improving our apple assignment pipeline.

Segmentation of structural parts of rosebush plants with 3D point-based deep learning methods

Dec 21, 2020

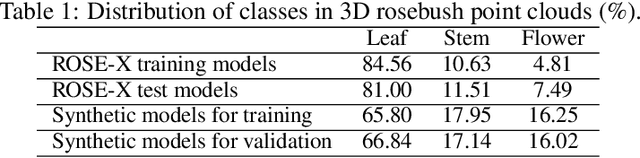

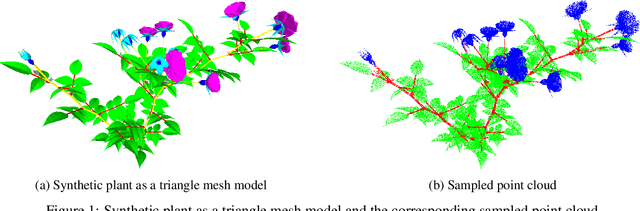

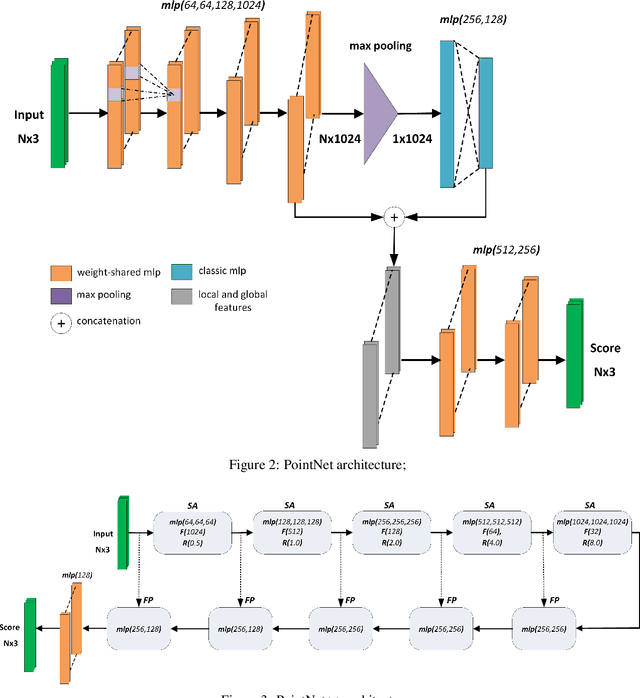

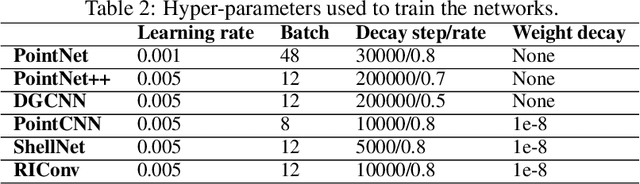

Abstract: Segmentation of structural parts of 3D models of plants is an important step for plant phenotyping, especially for monitoring architectural and morphological traits. This work introduces a benchmark for assessing the performance of 3D point-based deep learning methods on organ segmentation of 3D plant models, specifically rosebush models. Six recent deep learning architectures that segment 3D point clouds into semantic parts were adapted and compared. The methods were tested on the ROSE-X data set, containing fully annotated 3D models of real rosebush plants. The contribution of incorporating synthetic 3D models generated through Lindenmayer systems into training data was also investigated.

Benchmarks, Performance Evaluation and Contests for 3D Shape Retrieval

May 18, 2011

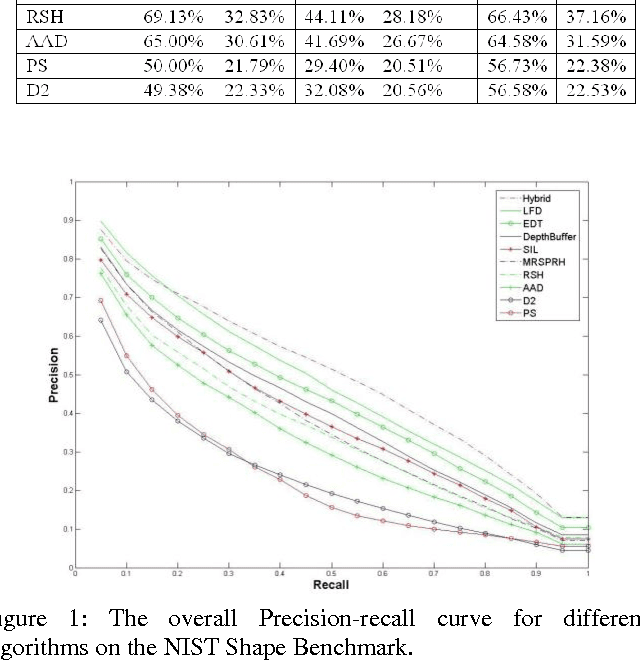

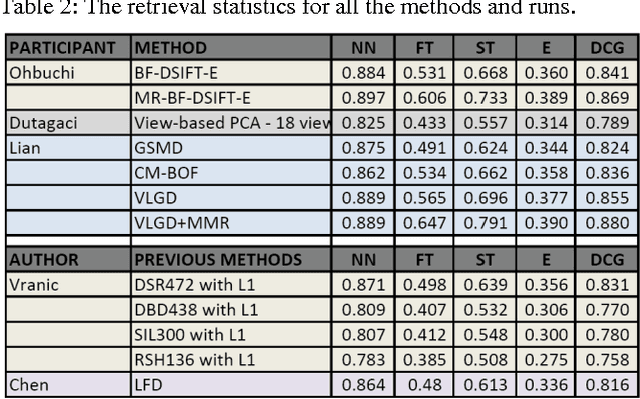

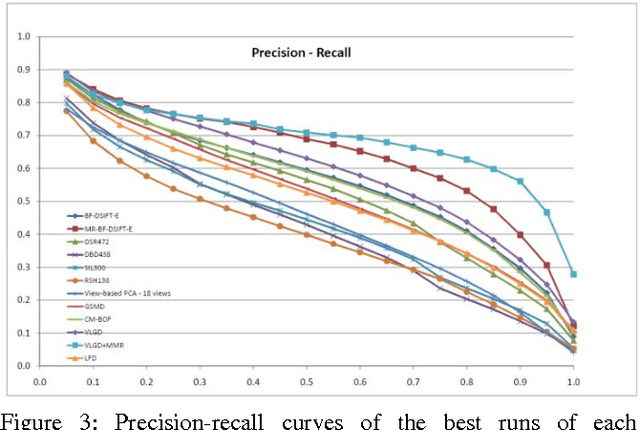

Abstract: Benchmarking of 3D shape retrieval allows developers and researchers to compare the strengths of different algorithms on a standard dataset. Here we describe the procedures and issues involved in developing a benchmark. We then discuss some of the current 3D shape retrieval benchmark efforts of our group and others. We also review the different performance evaluation measures that have been developed and used by researchers in the community. After that, we give an overview of the 3D shape retrieval contest (SHREC) tracks run under the EuroGraphics Workshop on 3D Object Retrieval and give details of the tracks that we organized for SHREC 2010. Finally, we present some of the results based on the different SHREC contest tracks and the NIST shape benchmark.
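
The abstract does not name the evaluation measures it reviews. As an illustration only, the sketch below computes three measures that are commonly reported for 3D shape retrieval benchmarks: nearest neighbor, first tier, and second tier. The function name `retrieval_scores`, the class labels, and the ranked list are hypothetical and are not taken from the paper.

```python
def retrieval_scores(ranked_labels, query_label, class_size):
    """Score one query against its ranked retrieval list.

    ranked_labels: class labels of the retrieved models, best match first,
                   with the query itself excluded.
    class_size:    number of models in the query's class, query included.
    """
    relevant = class_size - 1  # models that should ideally be retrieved
    if relevant <= 0:
        raise ValueError("the query's class needs at least one other member")

    def recall_at(k):
        hits = sum(1 for label in ranked_labels[:k] if label == query_label)
        return hits / relevant

    return {
        # Is the top-ranked model from the query's class?
        "nearest_neighbor": 1.0 if ranked_labels[0] == query_label else 0.0,
        # Recall within the top (class_size - 1) results.
        "first_tier": recall_at(relevant),
        # Recall within the top 2 * (class_size - 1) results.
        "second_tier": recall_at(2 * relevant),
    }

# Hypothetical ranked list for a "chair" query whose class has 4 models.
ranked = ["chair", "table", "chair", "chair", "lamp", "table", "lamp"]
scores = retrieval_scores(ranked, "chair", class_size=4)
print(scores)
```

A benchmark would average these per-query scores over all queries in the dataset.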

View subspaces for indexing and retrieval of 3D models

May 13, 2011

Abstract: View-based indexing schemes for 3D object retrieval are gaining popularity since they provide good retrieval results. These schemes are consistent with the theory that humans recognize objects based on their 2D appearances. The view-based techniques also allow users to search with various queries such as binary images, range images and even 2D sketches. Previous view-based techniques use classical 2D shape descriptors such as Fourier invariants, Zernike moments, Scale Invariant Feature Transform-based local features and 2D Digital Fourier Transform coefficients. These methods describe each object independently of the others. In this work, we explore data-driven subspace models, such as Principal Component Analysis, Independent Component Analysis and Nonnegative Matrix Factorization, to describe the shape information of the views. We treat the depth images obtained from various points of the view sphere as 2D intensity images and train a subspace to extract the inherent structure of the views within a database. We also show the benefit of categorizing shapes according to their eigenvalue spread. Both the shape categorization and data-driven feature set conjectures are tested on the PSB database and compared with competing view-based 3D shape retrieval algorithms.
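
As a rough illustration of the subspace idea, the sketch below fits a Principal Component Analysis basis to a stack of flattened depth images and projects each view onto it to obtain a compact descriptor. It assumes NumPy is available and uses random arrays as a stand-in for real depth images. The image size, the number of components, and the function names are arbitrary assumptions rather than the settings used in the paper.

```python
import numpy as np

def fit_pca_subspace(views, n_components):
    """Fit a PCA basis to views of shape (n_views, height * width)."""
    mean = views.mean(axis=0)
    centered = views - mean
    # The rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]

def project(views, mean, basis):
    """Describe each view by its coordinates in the learned subspace."""
    return (views - mean) @ basis.T

# Synthetic stand-in for depth images rendered from points on the view sphere.
rng = np.random.default_rng(0)
views = rng.random((20, 16 * 16))

mean, basis = fit_pca_subspace(views, n_components=5)
features = project(views, mean, basis)
print(features.shape)
```

Because the basis is trained on all views in the database, each descriptor depends on the collection as a whole, in contrast to the fixed descriptors that the abstract says treat each object independently.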
