Haoxiang Yu

ML Mule: Mobile-Driven Context-Aware Collaborative Learning

Jan 13, 2025

Abstract: Artificial intelligence has been integrated into nearly every aspect of daily life, powering applications from object detection with computer vision to large language models for writing emails and compact models in smart homes. These machine learning models cater to individual users but are often detached from them, as they are typically stored and processed in centralized data centers. This centralized approach raises privacy concerns, incurs high infrastructure costs, and struggles with personalization. Federated and fully decentralized learning methods have been proposed to address these issues, but they still depend on centralized servers or face slow convergence due to communication constraints. To overcome these challenges, we propose ML Mule, an approach that utilizes individual mobile devices as 'Mules' to train and transport model snapshots as they move through physical spaces, sharing these models with the physical 'Spaces' they inhabit. This method implicitly forms affinity groups among devices associated with users who share particular spaces, enabling collaborative model evolution and protecting users' privacy. Our approach addresses several major shortcomings of traditional, federated, and fully decentralized learning systems. The proposed framework represents a new class of machine learning methods that are more robust, distributed, and personalized, bringing the field closer to realizing the original vision of intelligent, adaptive, and genuinely context-aware smart environments. The results show that ML Mule converges faster and achieves higher model accuracy compared to other existing methods.

Thoughtful Things: Building Human-Centric Smart Devices with Small Language Models

May 06, 2024

Abstract: Everyday devices like light bulbs and kitchen appliances are now embedded with so many features and automated behaviors that they have become complicated to actually use. While such "smart" capabilities can better support users' goals, the task of learning the "ins and outs" of different devices is daunting. Voice assistants aim to solve this problem by providing a natural language interface to devices, yet such assistants cannot understand loosely-constrained commands, they lack the ability to reason about and explain devices' behaviors to users, and they rely on connectivity to intrusive cloud infrastructure. Toward addressing these issues, we propose thoughtful things: devices that leverage lightweight, on-device language models to take actions and explain their behaviors in response to unconstrained user commands. We propose an end-to-end framework that leverages formal modeling, automated training data synthesis, and generative language models to create devices that are both capable and thoughtful in the presence of unconstrained user goals and inquiries. Our framework requires no labeled data and can be deployed on-device, with no cloud dependency. We implement two thoughtful things (a lamp and a thermostat) and deploy them on real hardware, evaluating their practical performance.

Cheating off your neighbors: Improving activity recognition through corroboration

May 27, 2023

Abstract: Understanding the complexity of human activities solely through an individual's data can be challenging. However, in many situations, surrounding individuals are likely performing similar activities, while existing human activity recognition approaches focus almost exclusively on individual measurements and largely ignore the context of the activity. Consider two activities: attending a small group meeting and working at an office desk. From solely an individual's perspective, it can be difficult to differentiate between these activities as they may appear very similar, even though they are markedly different. Yet, by observing others nearby, it can be possible to distinguish between these activities. In this paper, we propose an approach to enhance the prediction accuracy of an individual's activities by incorporating insights from surrounding individuals. We have collected a real-world dataset from 20 participants with over 58 hours of data including activities such as attending lectures, having meetings, working in the office, and eating together. Compared to observing a single person in isolation, our proposed approach significantly improves accuracy. We regard this work as a first step in collaborative activity recognition, opening new possibilities for understanding human activity in group settings.

Sasha: creative goal-oriented reasoning in smart homes with large language models

May 16, 2023

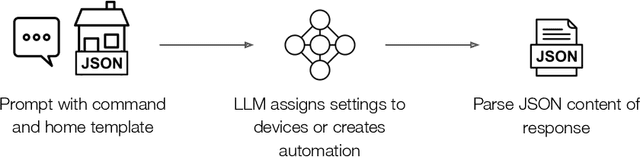

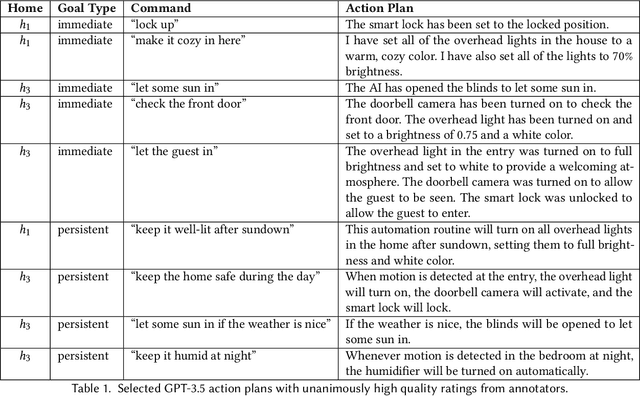

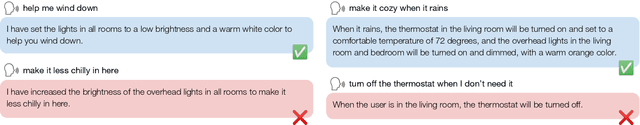

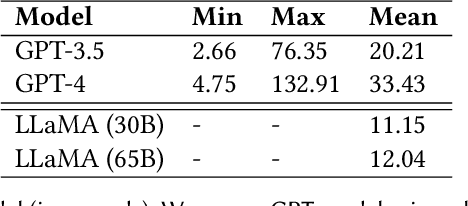

Abstract: Every smart home user interaction has an explicit or implicit goal. Existing home assistants easily achieve explicit goals, e.g., "turn on the light". In more natural communication, however, humans tend to describe implicit goals. We can, for example, ask someone to "make it cozy" rather than describe the specific steps involved. Current systems struggle with this ambiguity since it requires them to relate vague intent to specific devices. We approach this problem of flexibly achieving user goals from the perspective of general-purpose large language models (LLMs) trained on gigantic corpora and adapted to downstream tasks with remarkable flexibility. We explore the use of LLMs for controlling devices and creating automation routines to meet the implicit goals of user commands. In a user-focused study, we find that LLMs can reason creatively to achieve challenging goals, while also revealing gaps that diminish their usefulness. We address these gaps with Sasha: a system for creative, goal-oriented reasoning in smart homes. Sasha responds to commands like "make it cozy" or "help me sleep better" by executing plans to achieve user goals, e.g., setting a mood with available devices, or devising automation routines. We demonstrate Sasha in a real smart home.

iDML: Incentivized Decentralized Machine Learning

Apr 10, 2023

Abstract: With the rise of decentralized and opportunistic approaches to machine learning, end devices are increasingly tasked with training deep learning models on-device using crowd-sourced data that they collect themselves. These approaches are desirable from a resource consumption perspective and also from a privacy preservation perspective. When the devices benefit directly from the trained models, the incentives are implicit - contributing devices' resources are incentivized by the availability of the higher-accuracy model that results from collaboration. However, explicit incentive mechanisms must be provided when end-user devices are asked to contribute their resources (e.g., computation, communication, and data) to a task performed primarily for the benefit of others, e.g., training a model for a task that a neighbor device needs but the device owner is uninterested in. In this project, we propose a novel blockchain-based incentive mechanism for completely decentralized and opportunistic learning architectures. We leverage a smart contract not only for providing explicit incentives to end devices to participate in decentralized learning but also to create a fully decentralized mechanism to inspect and reflect on the behavior of the learning architecture.

"Get ready for a party": Exploring smarter smart spaces with help from large language models

Mar 24, 2023

Abstract: The right response to someone who says "get ready for a party" is deeply influenced by meaning and context. For a smart home assistant (e.g., Google Home), the ideal response might be to survey the available devices in the home and change their state to create a festive atmosphere. Current practical systems cannot service such requests since they require the ability to (1) infer meaning behind an abstract statement and (2) map that inference to a concrete course of action appropriate for the context (e.g., changing the settings of specific devices). In this paper, we leverage the observation that recent task-agnostic large language models (LLMs) like GPT-3 embody a vast amount of cross-domain, sometimes unpredictable contextual knowledge that existing rule-based home assistant systems lack, which can make them powerful tools for inferring user intent and generating appropriate context-dependent responses during smart home interactions. We first explore the feasibility of a system that places an LLM at the center of command inference and action planning, showing that LLMs have the capacity to infer intent behind vague, context-dependent commands like "get ready for a party" and respond with concrete, machine-parseable instructions that can be used to control smart devices. We furthermore demonstrate a proof-of-concept implementation that puts an LLM in control of real devices, showing its ability to infer intent and change device state appropriately with no fine-tuning or task-specific training. Our work hints at the promise of LLM-driven systems for context-awareness in smart environments, motivating future research in this area.
