Haoning Xi

STLLM-DF: A Spatial-Temporal Large Language Model with Diffusion for Enhanced Multi-Mode Traffic System Forecasting

Sep 08, 2024

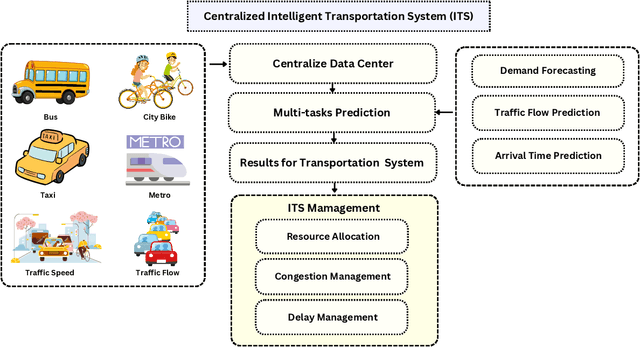

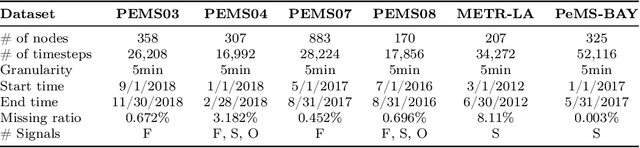

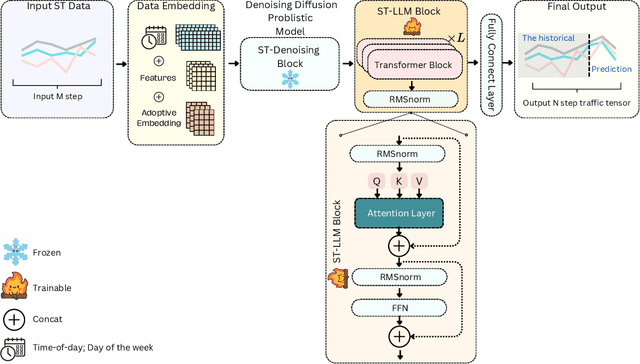

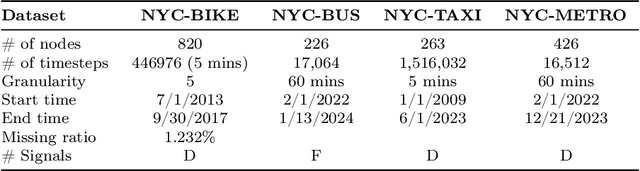

Abstract: The rapid advancement of Intelligent Transportation Systems (ITS) presents challenges, particularly with missing data in multi-modal transportation and the complexity of handling diverse sequential tasks within a centralized framework. To address these issues, we propose the Spatial-Temporal Large Language Model Diffusion (STLLM-DF), a model that combines Denoising Diffusion Probabilistic Models (DDPMs) and Large Language Models (LLMs) to improve multi-task transportation prediction. The DDPM's robust denoising capabilities enable it to recover underlying data patterns from noisy inputs, making it particularly effective in complex transportation systems. Meanwhile, the non-pretrained LLM dynamically adapts to spatial-temporal relationships within multi-modal networks, allowing the system to efficiently manage diverse transportation tasks in both long-term and short-term predictions. Extensive experiments demonstrate that STLLM-DF consistently outperforms existing models, achieving an average reduction of 2.40% in MAE, 4.50% in RMSE, and 1.51% in MAPE. This model advances centralized ITS by enhancing predictive accuracy, robustness, and overall system performance across multiple tasks, paving the way for more effective spatio-temporal traffic forecasting through the integration of frozen transformer language models and diffusion techniques.
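The reductions quoted in the abstract refer to three standard forecasting error metrics. The sketch below shows how MAE, RMSE, and MAPE are commonly computed, together with a relative-reduction helper. It is a minimal illustration, not the authors' evaluation code: the function names, the zero-target handling in `mape`, and the assumption that each "reduction" is measured relative to a baseline's error are all hypothetical, since the abstract does not specify them.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    Assumption: targets with magnitude <= eps are skipped to avoid
    division by zero; the papers listed here may handle this differently.
    """
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if abs(t) > eps]
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)


def relative_reduction(baseline_error, model_error):
    """Percentage by which model_error is lower than baseline_error (assumed definition)."""
    return 100.0 * (baseline_error - model_error) / baseline_error


if __name__ == "__main__":
    # Toy values for illustration only, not results from any paper.
    observed = [10.0, 20.0, 30.0]
    forecast = [12.0, 18.0, 33.0]
    print(mae(observed, forecast), rmse(observed, forecast), mape(observed, forecast))
```

Under this assumed definition, a baseline MAE of 2.50 and a model MAE of 2.44 would correspond to a 2.4% reduction; these numbers are made up to show the arithmetic.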

ST-SSMs: Spatial-Temporal Selective State of Space Model for Traffic Forecasting

Apr 20, 2024

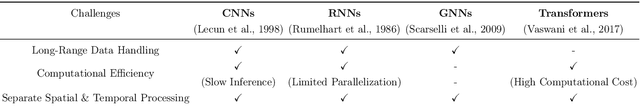

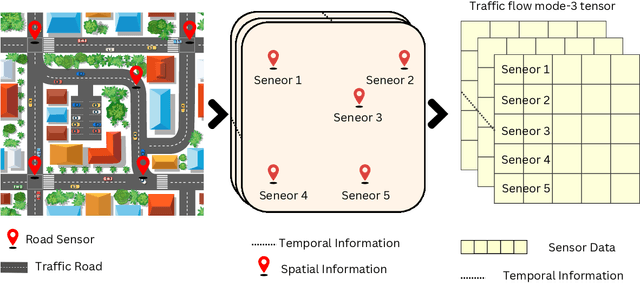

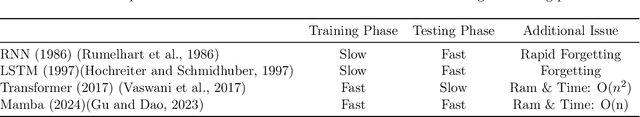

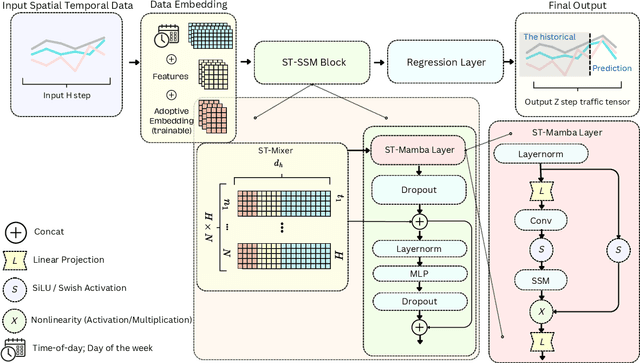

Abstract: Accurate and efficient traffic prediction is crucial for the planning, management, and control of intelligent transportation systems. Most state-of-the-art methods handle both long-term and short-term prediction effectively by employing spatio-temporal neural networks as prediction models, together with transformers to learn global information on prediction objects (e.g., traffic states of road segments). However, these methods often incur a high computational cost to achieve good performance. This paper introduces an approach to traffic flow prediction, the Spatial-Temporal Selective State Space Model (ST-SSMs), featuring the novel ST-Mamba block, which achieves good prediction accuracy at lower computational cost. A comparative analysis highlights the ST-Mamba layer's efficiency, revealing its equivalence to three attention layers, yet with markedly reduced processing time. Through rigorous testing on diverse real-world datasets, the ST-SSMs model demonstrates exceptional improvements in prediction accuracy and computational simplicity, setting new benchmarks in the domain of traffic flow forecasting.
