Hansol Kim

Federated Learning for Face Recognition via Intra-subject Self-supervised Learning

Jul 23, 2024

Abstract:Federated Learning (FL) for face recognition aggregates locally optimized models from individual clients to construct a generalized face recognition model. However, previous studies present two major challenges: insufficient incorporation of self-supervised learning and the necessity for clients to accommodate multiple subjects. To tackle these limitations, we propose FedFS (Federated Learning for personalized Face recognition via intra-subject Self-supervised learning framework), a novel federated learning architecture tailored to train personalized face recognition models without imposing subjects. Our proposed FedFS comprises two crucial components that leverage aggregated features of the local and global models to cooperate with representations of an off-the-shelf model. These components are (1) adaptive soft label construction, utilizing dot product operations to reformat labels within intra-instances, and (2) intra-subject self-supervised learning, employing cosine similarity operations to strengthen robust intra-subject representations. Additionally, we introduce a regularization loss to prevent overfitting and ensure the stability of the optimized model. To assess the effectiveness of FedFS, we conduct comprehensive experiments on the DigiFace-1M and VGGFace datasets, demonstrating superior performance compared to previous methods.

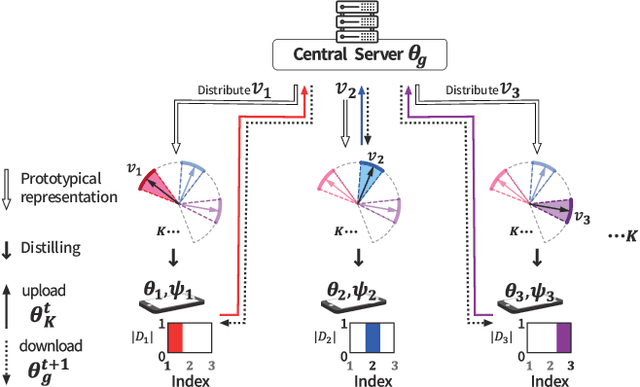

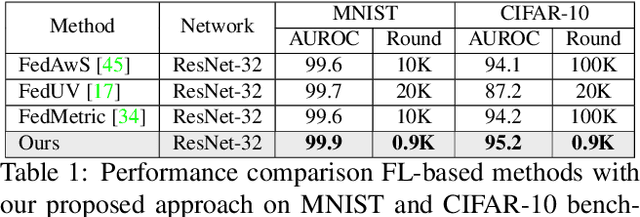

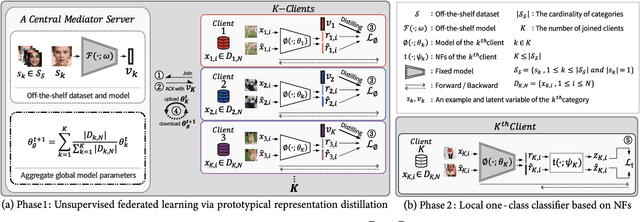

ProtoFL: Unsupervised Federated Learning via Prototypical Distillation

Aug 08, 2023

Abstract:Federated learning (FL) is a promising approach for enhancing data privacy preservation, particularly for authentication systems. However, limited round communications, scarce representation, and scalability pose significant challenges to its deployment, hindering its full potential. In this paper, we propose 'ProtoFL', Prototypical Representation Distillation based unsupervised Federated Learning to enhance the representation power of a global model and reduce round communication costs. Additionally, we introduce a local one-class classifier based on normalizing flows to improve performance with limited data. Our study represents the first investigation of using FL to improve one-class classification performance. We conduct extensive experiments on five widely used benchmarks, namely MNIST, CIFAR-10, CIFAR-100, ImageNet-30, and Keystroke-Dynamics, to demonstrate the superior performance of our proposed framework over previous methods in the literature.

Over-the-Air Consensus for Distributed Vehicle Platooning Control (Extended version)

Nov 11, 2022

Abstract:A distributed control of vehicle platooning is referred to as distributed consensus (DC) since many autonomous vehicles (AVs) reach a consensus to move as one body with the same velocity and inter-distance. For DC control to be stable, other AVs' real-time position information should be inputted to each AV's controller via vehicle-to-vehicle (V2V) communications. On the other hand, too many V2V links should be simultaneously established and frequently retrained, causing frequent packet loss and longer communication latency. We propose a novel DC algorithm called over-the-air consensus (AirCons), a joint communication-and-control design with two key features to overcome the above limitations. First, exploiting a wireless signal's superposition and broadcasting properties renders all AVs' signals to converge to a specific value proportional to participating AVs' average position without individual V2V channel information. Second, the estimated average position is used to control each AV's dynamics instead of each AV's individual position. Through analytic and numerical studies, the effectiveness of the proposed AirCons designed on the state-of-the-art New Radio architecture is verified by showing a $14.22\%$ control gain compared to the benchmark without the average position.
