Hanif Heidari

Neural Network Entropy (NNetEn): EEG Signals and Chaotic Time Series Separation by Entropy Features, Python Package for NNetEn Calculation

Mar 31, 2023

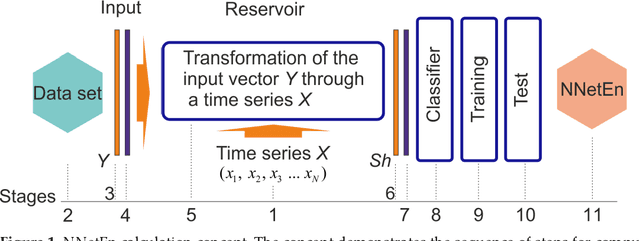

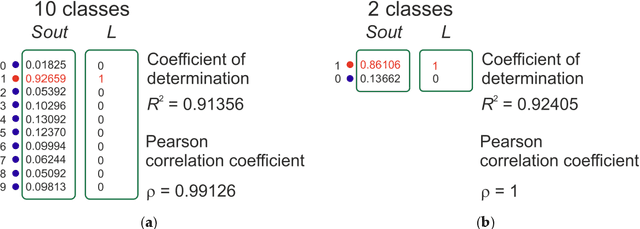

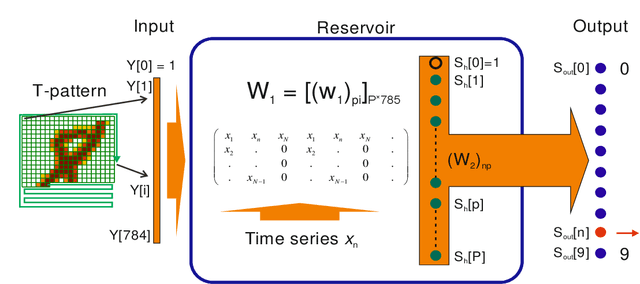

Abstract: Entropy measures are effective features for time series classification problems. Traditional entropy measures, such as Shannon entropy, use a probability distribution function. However, for the effective separation of time series, new entropy estimation methods are required to characterize the chaotic dynamics of the system. Our concept of Neural Network Entropy (NNetEn) is based on the classification of special datasets (MNIST-10 and SARS-CoV-2-RBV1) in relation to the entropy of the time series recorded in the reservoir of the LogNNet neural network. NNetEn estimates the chaotic dynamics of time series in an original way. Based on the NNetEn algorithm, we propose two new classification metrics: R2 Efficiency and Pearson Efficiency. The efficiency of NNetEn is verified on the separation of two chaotic time series of the sine map using analysis of variance (ANOVA). For two time series with close dynamics (r = 1.1918 and r = 1.2243), the F-ratio reaches 124, reflecting the high efficiency of the introduced method in classification problems. The classification of EEG signals from healthy persons and patients with Alzheimer's disease illustrates the practical application of the NNetEn features. Our computations demonstrate a synergistic effect: classification accuracy increases when traditional entropy measures and the NNetEn concept are applied together. An implementation of the algorithms in Python is presented.
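The ANOVA separation test mentioned in the abstract can be illustrated with a minimal sketch. It assumes the sine map has the form x_{k+1} = r * sin(pi * x_k), and it uses a simple placeholder feature (mean absolute step between consecutive points) in place of NNetEn, so the resulting F-ratio is not expected to match the value reported in the paper. The function names `sine_map`, `f_ratio`, `mean_abs_step`, and `segment_features` are hypothetical and are not taken from the authors' package.

```python
import math

def sine_map(r, n, x0=0.1, discard=1000):
    """Return n points of x_{k+1} = r * sin(pi * x_k) (assumed map form),
    after discarding an initial transient."""
    x = x0
    for _ in range(discard):
        x = r * math.sin(math.pi * x)
    series = []
    for _ in range(n):
        x = r * math.sin(math.pi * x)
        series.append(x)
    return series

def f_ratio(groups):
    """One-way ANOVA F-ratio: between-group mean square over within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    if ss_within == 0:
        return float("inf")  # groups have no internal spread
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

def mean_abs_step(segment):
    """Placeholder feature standing in for NNetEn (assumption for illustration)."""
    steps = [abs(b - a) for a, b in zip(segment, segment[1:])]
    return sum(steps) / len(steps)

def segment_features(r, n_segments=20, seg_len=500):
    """Split one long sine-map series into segments and compute one feature per segment."""
    series = sine_map(r, n_segments * seg_len)
    return [mean_abs_step(series[i * seg_len:(i + 1) * seg_len])
            for i in range(n_segments)]

# Two feature groups, one per value of r quoted in the abstract.
groups = [segment_features(1.1918), segment_features(1.2243)]
print("F-ratio for the placeholder feature:", f_ratio(groups))
```

A larger F-ratio means the feature separates the two series more clearly relative to its spread within each series; swapping the placeholder for an entropy feature leaves the ANOVA step unchanged.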

Early heart disease prediction using hybrid quantum classification

Aug 17, 2022

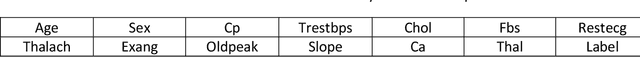

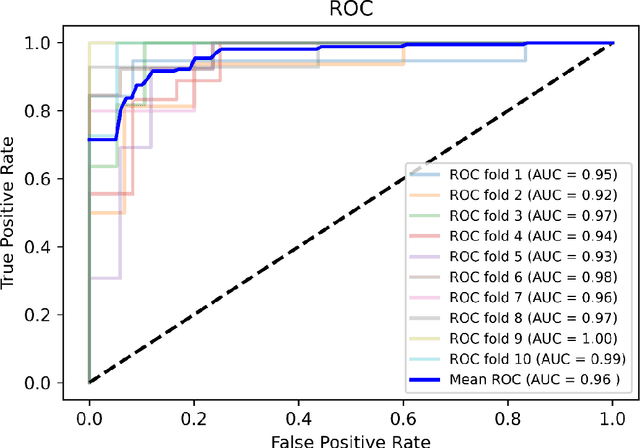

Abstract: Heart disease morbidity and mortality rates are rising significantly, which affects global public health and the world economy. Early prediction of heart disease is crucial for reducing heart morbidity and mortality. This paper proposes two quantum machine learning methods, namely a hybrid quantum neural network and a hybrid random forest quantum neural network, for early detection of heart disease. The methods are applied to the Cleveland and Statlog datasets. The results show that the hybrid quantum neural network and the hybrid random forest quantum neural network are suitable for high-dimensional and low-dimensional problems, respectively. The hybrid quantum neural network is sensitive to outliers, while the hybrid random forest is robust to them. A comparison between different machine learning methods shows that the proposed quantum methods are more appropriate for early heart disease prediction, with areas under the curve of 96.43% and 97.78% obtained for the Cleveland and Statlog datasets, respectively.

Novel techniques for improvement the NNetEn entropy calculation for short and noisy time series

Feb 25, 2022

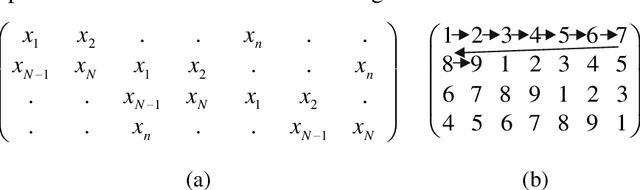

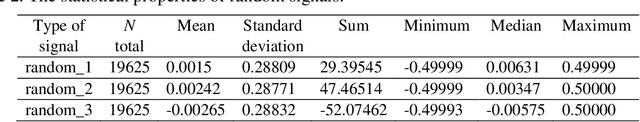

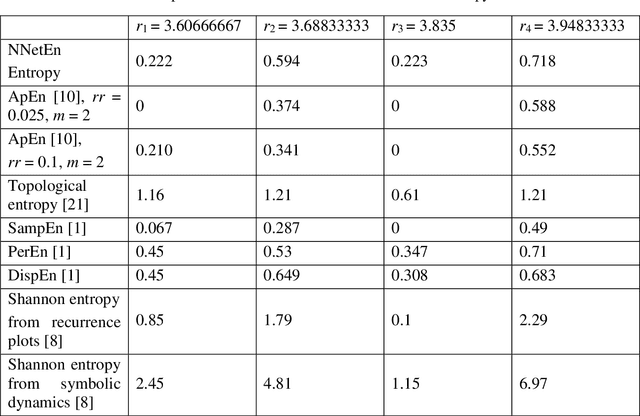

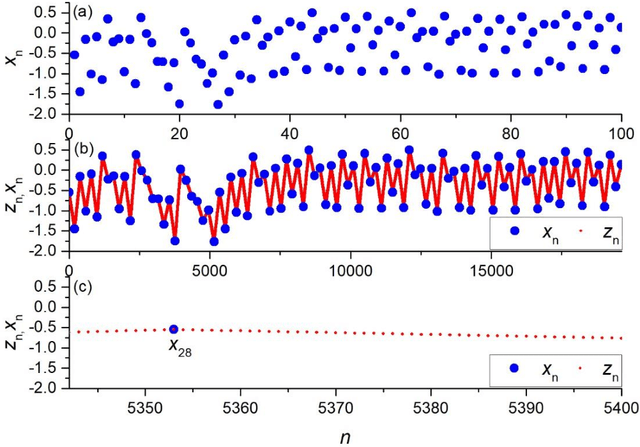

Abstract: Entropy is a fundamental concept of information theory. It is widely used in the analysis of analog and digital signals. Conventional entropy measures have drawbacks, such as sensitivity to the length and amplitude of time series and low robustness to external noise. Recently, the NNetEn entropy measure has been introduced to overcome these problems. The NNetEn entropy uses a modified version of the LogNNet neural network classification model. The algorithm contains a reservoir matrix with N = 19625 elements, which the given time series should fill. Many practical time series have fewer than 19625 elements. Against this background, this paper investigates different duplicating and stretching techniques for filling the matrix to overcome this difficulty. The most successful technique is identified for practical applications. The presence of external noise and bias are other important issues affecting the efficiency of entropy measures. In order to perform a meaningful analysis, three time series with different dynamics (chaotic, periodic, and binary), with varying signal-to-noise ratio (SNR) and offsets, are considered. It is shown that the error in the calculation of the NNetEn entropy does not exceed 10% when the SNR exceeds 30 dB. This opens the possibility of measuring the NNetEn of experimental signals in the presence of noise of various kinds, such as white noise or 1/f noise, without the need for noise filtering.
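To make the filling problem concrete, here is a minimal sketch of two generic ways to expand a short series to N = 19625 elements, plus white noise added at a target SNR. The abstract does not specify the exact duplicating and stretching variants studied, so cyclic repetition and linear interpolation are assumptions chosen for illustration, as is defining signal power on the mean-removed series. All function names are hypothetical.

```python
import math
import random

N_RESERVOIR = 19625  # number of reservoir elements stated in the abstract

def fill_by_duplication(series, n=N_RESERVOIR):
    """Repeat the series cyclically until n elements are filled."""
    return [series[i % len(series)] for i in range(n)]

def fill_by_stretching(series, n=N_RESERVOIR):
    """Stretch the series onto n evenly spaced points by linear interpolation."""
    m = len(series)
    if m == 1 or n == 1:
        return [series[0]] * n
    out = []
    for i in range(n):
        pos = i * (m - 1) / (n - 1)   # fractional index into the original series
        lo = int(pos)
        hi = min(lo + 1, m - 1)
        frac = pos - lo
        out.append(series[lo] * (1 - frac) + series[hi] * frac)
    return out

def add_white_noise(series, snr_db, seed=0):
    """Add Gaussian white noise so that signal power / noise power = 10^(snr_db / 10).
    Signal power is taken on the mean-removed series (an assumption)."""
    rng = random.Random(seed)
    mean = sum(series) / len(series)
    signal_power = sum((v - mean) ** 2 for v in series) / len(series)
    noise_sigma = math.sqrt(signal_power / 10 ** (snr_db / 10))
    return [v + rng.gauss(0.0, noise_sigma) for v in series]

# A short periodic series, noised at 30 dB SNR and expanded both ways.
short = [math.sin(0.1 * i) for i in range(300)]
noisy = add_white_noise(short, snr_db=30)
print(len(fill_by_duplication(noisy)), len(fill_by_stretching(noisy)))
```

Duplication preserves the local point-to-point structure of the series but introduces a discontinuity at each repeat, while stretching preserves the overall shape but smooths neighboring values; which trade-off works best for NNetEn is the question the paper addresses.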

A method for estimating the entropy of time series using artificial neural network

Jul 18, 2021

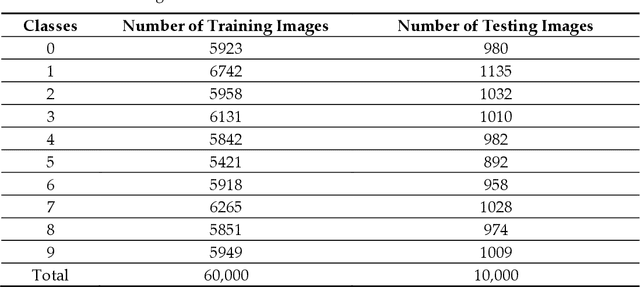

Abstract: Measuring the predictability and complexity of time series is an essential tool in designing and controlling nonlinear systems. There exist different entropy measures in the literature to analyze the predictability and complexity of time series. However, these measures have some drawbacks, especially for short time series. To overcome these difficulties, this paper proposes a new method for estimating the entropy of a time series using the LogNNet 784:25:10 neural network model. The LogNNet reservoir matrix consists of 19625 elements, which are filled with the time series elements. After that, the network is trained on the MNIST-10 dataset and the classification accuracy is calculated. The accuracy is taken as the entropy measure and denoted by NNetEn. A more complex transformation of the input information by the time series in the reservoir leads to higher NNetEn values. Many practical time series have fewer than 19625 elements. Several duplicating and stretching methods are investigated to overcome this difficulty, and the most successful method is identified for practical applications. The number of epochs in the training process of LogNNet is considered as the input parameter. A new time series characteristic, called time series learning inertia, is introduced to investigate the effect of the number of epochs on the efficiency of the neural network. To show the robustness and efficiency of the proposed method, it is applied to several chaotic, periodic, random, binary, and constant time series. NNetEn is compared with some existing entropy measures. The results show that the proposed method is more robust and accurate than existing methods.
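The figure of 19625 elements is consistent with a 25-row reservoir acting on 784 image pixels plus one bias term, since 25 × (784 + 1) = 19625. The sketch below shows that shape arithmetic and a single reservoir pass over one input vector. The row-by-row filling order and the leading bias value of 1 are assumptions for illustration, and the normalization and trained output layer of LogNNet are omitted; `build_reservoir` and `reservoir_transform` are hypothetical names.

```python
N_INPUTS = 784           # MNIST image pixels (28 x 28)
N_HIDDEN = 25            # reservoir rows in the 784:25:10 model
N_COLS = N_INPUTS + 1    # one extra column for an assumed bias term

def build_reservoir(filled_series):
    """Arrange 19625 series elements into a 25 x 785 matrix, row by row (assumed order)."""
    if len(filled_series) != N_HIDDEN * N_COLS:
        raise ValueError("expected %d elements" % (N_HIDDEN * N_COLS))
    return [filled_series[r * N_COLS:(r + 1) * N_COLS] for r in range(N_HIDDEN)]

def reservoir_transform(reservoir, image):
    """Multiply the reservoir matrix by the input vector [1, pixel_1, ..., pixel_784]."""
    y = [1.0] + list(image)
    return [sum(w * v for w, v in zip(row, y)) for row in reservoir]

# Toy usage: a constant "time series" and a uniform gray image.
reservoir = build_reservoir([1.0] * (N_HIDDEN * N_COLS))
hidden = reservoir_transform(reservoir, [0.5] * N_INPUTS)
print(len(reservoir), len(reservoir[0]), len(hidden))
```

In the method described above, the 25 hidden values would then feed a trainable 10-class output layer, and the resulting MNIST-10 classification accuracy is what gets reported as NNetEn.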

An improved LogNNet classifier for IoT application

May 30, 2021

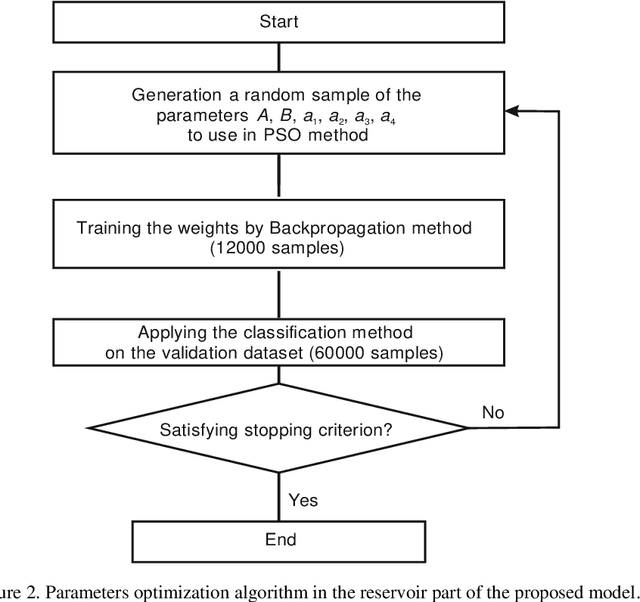

Abstract: Internet of Things devices have limited memory, yet good accuracy is needed. Designing suitable algorithms is therefore vital. This paper proposes a feed-forward LogNNet neural network that uses a semi-linear Henon-type discrete chaotic map to classify the MNIST-10 dataset. The model is composed of a reservoir part and a trainable classifier. The aim of the reservoir part is to transform the inputs so as to maximize the classification accuracy, using a special matrix filling method and a time series generated by the chaotic map. The parameters of the chaotic map are optimized using particle swarm optimization with random immigrants. The results show that the proposed LogNNet/Henon classifier achieves higher accuracy than the original version of LogNNet with comparable RAM savings, and has broad prospects for implementation in IoT devices. In addition, the relation between the entropy and the accuracy of the classification is investigated. It is shown that there exists a direct relation between the value of the entropy and the accuracy of the classification.
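As a rough illustration of how a chaotic map can generate values for a reservoir on the fly instead of storing them, the sketch below iterates the classical Hénon map with its standard parameters a = 1.4 and b = 0.3. This is a stand-in: the abstract refers to a semi-linear Henon-type map whose exact form and optimized parameters are not given here, and `henon_series` is a hypothetical helper.

```python
def henon_series(n, a=1.4, b=0.3, x0=0.0, y0=0.0, discard=100):
    """Return n x-values of the classical Henon map
    x' = 1 - a*x^2 + y, y' = b*x (a stand-in, not the paper's semi-linear variant)."""
    x, y = x0, y0
    for _ in range(discard):             # skip the initial transient
        x, y = 1.0 - a * x * x + y, b * x
    values = []
    for _ in range(n):
        x, y = 1.0 - a * x * x + y, b * x
        values.append(x)
    return values

# Enough values for a 25 x 785 reservoir, regenerated from two parameters and a seed point.
weights = henon_series(25 * 785)
print(len(weights), min(weights), max(weights))
```

Because the whole sequence is reproducible from the map parameters and the initial point, a device only needs to keep those few numbers in memory, which is the kind of RAM saving the abstract refers to.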
