Andrei Velichko

Optimizing Parallel Schemes with Lyapunov Exponents and kNN-LLE Estimation

Jan 20, 2026

Abstract: Inverse parallel schemes remain indispensable tools for computing the roots of nonlinear systems, yet their dynamical behavior can be unexpectedly rich, ranging from strong contraction to oscillatory or chaotic transients depending on the choice of algorithmic parameters and initial states. A unified analytical-data-driven methodology for identifying, measuring, and reducing such instabilities in a family of uni-parametric inverse parallel solvers is presented in this study. On the theoretical side, we derive stability and bifurcation characterizations of the underlying iterative maps, identifying parameter regions associated with periodic or chaotic behavior. On the computational side, we introduce a micro-series pipeline based on kNN-driven estimation of the local largest Lyapunov exponent (LLE), applied to scalar time series derived from solver trajectories. The resulting sliding-window Lyapunov profiles provide fine-grained, real-time diagnostics of contractive or unstable phases and reveal transient behaviors not captured by coarse linearized analysis. Leveraging this correspondence, we introduce a Lyapunov-informed parameter selection strategy that identifies solver settings associated with stable behavior, particularly when the estimated LLE indicates persistent instability. Comprehensive experiments on ensembles of perturbed initial guesses demonstrate close agreement between the theoretical stability diagrams and empirical Lyapunov profiles, and show that the proposed adaptive mechanism significantly improves robustness. The study establishes micro-series Lyapunov analysis as a practical, interpretable tool for constructing self-stabilizing root-finding schemes and opens avenues for extending such diagnostics to higher-dimensional or noise-contaminated problems.
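As a rough illustration of the kNN-based local LLE idea described in this abstract, the sketch below averages the one-step log-divergence between each sample of a scalar series and its nearest neighbours, then repeats that over sliding windows. It is a simplified nearest-neighbour estimator written under our own assumptions, not the paper's micro-series pipeline; the function names `knn_local_lle` and `sliding_lle_profile` and the parameters `k`, `theiler`, `window`, and `step` are hypothetical.

```python
import math


def knn_local_lle(series, k=1, theiler=0):
    """Mean one-step log-divergence between each point and its k nearest neighbours.

    Assumption: a 1-D (no delay embedding) neighbour search, chosen for brevity.
    """
    n = len(series) - 1  # the last sample has no successor to compare against
    logs = []
    for i in range(n):
        # Collect candidate neighbours outside the Theiler window, skipping exact duplicates.
        candidates = []
        for j in range(n):
            if abs(i - j) <= theiler:
                continue
            d0 = abs(series[i] - series[j])
            if d0 > 0.0:
                candidates.append((d0, j))
        candidates.sort()
        for d0, j in candidates[:k]:
            d1 = abs(series[i + 1] - series[j + 1])
            if d1 > 0.0:
                logs.append(math.log(d1 / d0))
    return sum(logs) / len(logs) if logs else float("nan")


def sliding_lle_profile(series, window=100, step=50, k=1):
    """LLE estimate on each sliding window (a hypothetical profile layout)."""
    return [
        knn_local_lle(series[start:start + window], k=k)
        for start in range(0, len(series) - window + 1, step)
    ]


# A contracting iteration x -> x / 2 and a chaotic logistic-map orbit.
contracting = [0.5 ** i for i in range(40)]
x, chaotic = 0.3, []
for _ in range(400):
    chaotic.append(x)
    x = 4.0 * x * (1.0 - x)

lle_contracting = knn_local_lle(contracting, k=2)
lle_chaotic = knn_local_lle(chaotic)
profile = sliding_lle_profile(chaotic)
```

A negative estimate suggests a contractive phase and a positive one suggests local instability; a solver could, in principle, adjust its parameter when the windowed estimate stays positive.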

Recursive Threshold Median Filter and Autoencoder for Salt-and-Pepper Denoising: SSIM analysis of Images and Entropy Maps

Nov 15, 2025

Abstract: This paper studies the removal of salt-and-pepper noise from images using a median filter (MF) and a simple three-layer autoencoder (AE) within a recursive threshold algorithm. The performance of denoising is assessed with two metrics: the standard Structural Similarity Index SSIMImg of restored and clean images, and a newly applied metric SSIMMap, the SSIM of entropy maps of these images computed via 2D Sample Entropy in sliding windows. We show that SSIMMap is more sensitive to blur and local intensity transitions and complements SSIMImg. Experiments on low- and high-resolution grayscale images demonstrate that the recursive threshold MF robustly restores images even under strong noise (50-60%), whereas the simple AE is only capable of restoring images with low levels of noise (<30%). We propose two scalable schemes: (i) 2MF, which uses two MFs with different window sizes and a final thresholding step, effective for highlighting sharp local details at low resolution; and (ii) MFs-AE, which aggregates features from multiple MFs via an AE and is beneficial for restoring the overall scene structure at higher resolution. Owing to its simplicity and computational efficiency, MF remains preferable for deployment on resource-constrained platforms (edge/IoT), whereas AE underperforms without prior denoising. The results also validate the practical value of SSIMMap for objective blur assessment and denoising parameter tuning.
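The abstract does not spell out the recursive threshold algorithm, so the following is only one plausible reading, stated as an assumption: a pixel is replaced by its window median when it differs from that median by more than a threshold, and passes repeat until nothing changes. The names `threshold_median_pass` and `recursive_threshold_median` and the default values are hypothetical.

```python
def threshold_median_pass(img, threshold, radius=1):
    """One pass: replace pixels that deviate from the local median by more than `threshold`."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # read from img, write to a copy
    changed = 0
    for y in range(h):
        for x in range(w):
            # Window is clipped at the image border.
            window = sorted(
                img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
            med = window[len(window) // 2]
            if abs(img[y][x] - med) > threshold:
                out[y][x] = med
                changed += 1
    return out, changed


def recursive_threshold_median(img, threshold=50, radius=1, max_passes=10):
    """Repeat thresholded median passes until no pixel changes (assumed stopping rule)."""
    for _ in range(max_passes):
        img, changed = threshold_median_pass(img, threshold, radius)
        if changed == 0:
            break
    return img


# Flat grey test image with one "salt" and one "pepper" pixel.
clean = [[100] * 5 for _ in range(5)]
noisy = [row[:] for row in clean]
noisy[1][1] = 255
noisy[3][3] = 0
restored = recursive_threshold_median(noisy)
```

Because only outlying pixels are rewritten, unaffected regions keep their original values, which is the usual motivation for thresholded median filtering over a plain median filter.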

Evaluating COVID 19 Feature Contributions to Bitcoin Return Forecasting: Methodology Based on LightGBM and Genetic Optimization

Jul 31, 2025

Abstract: This study proposes a novel methodological framework integrating a LightGBM regression model and genetic algorithm (GA) optimization to systematically evaluate the contribution of COVID-19-related indicators to Bitcoin return prediction. The primary objective was not merely to forecast Bitcoin returns but rather to determine whether including pandemic-related health data significantly enhances prediction accuracy. A comprehensive dataset comprising daily Bitcoin returns and COVID-19 metrics (vaccination rates, hospitalizations, testing statistics) was constructed. Predictive models, trained with and without COVID-19 features, were optimized using GA over 31 independent runs, allowing robust statistical assessment. Performance metrics (R2, RMSE, MAE) were statistically compared through distribution overlaps and Mann-Whitney U tests. Permutation Feature Importance (PFI) analysis quantified individual feature contributions. Results indicate that COVID-19 indicators significantly improved model performance, particularly in capturing extreme market fluctuations (R2 increased by 40%, RMSE decreased by 2%, both highly significant statistically). Among COVID-19 features, vaccination metrics, especially the 75th percentile of fully vaccinated individuals, emerged as dominant predictors. The proposed methodology extends existing financial analytics tools by incorporating public health signals, providing investors and policymakers with refined indicators to navigate market uncertainty during systemic crises.
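To make the statistical comparison step concrete, here is a minimal sketch of a Mann-Whitney U test applied to two sets of per-run scores, such as the 31 runs with and without COVID-19 features. The p-value uses the large-sample normal approximation without tie or continuity correction, which is a simplifying assumption; the function names and the synthetic scores are hypothetical and are not the paper's data.

```python
import math


def mann_whitney_u(a, b):
    """Return (U_a, U_b): counts of pairs where a beats b and vice versa, ties split."""
    u_a = 0.0
    for x in a:
        for y in b:
            if x > y:
                u_a += 1.0
            elif x == y:
                u_a += 0.5
    return u_a, len(a) * len(b) - u_a


def mann_whitney_p(a, b):
    """Two-sided p-value via the normal approximation (no tie or continuity correction)."""
    u_a, _ = mann_whitney_u(a, b)
    n1, n2 = len(a), len(b)
    mean = n1 * n2 / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u_a - mean) / sigma
    return math.erfc(abs(z) / math.sqrt(2.0))


# Synthetic, clearly separated score sets standing in for 31 runs each.
scores_without = [float(i) for i in range(31)]
scores_with = [float(i) for i in range(100, 131)]

u_small = mann_whitney_u([1, 2, 3], [4, 5, 6])
p_separated = mann_whitney_p(scores_without, scores_with)
p_identical = mann_whitney_p([1, 2, 3], [1, 2, 3])
```

In practice a library routine with exact small-sample handling and tie correction would be preferable; the sketch only shows what the statistic measures.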

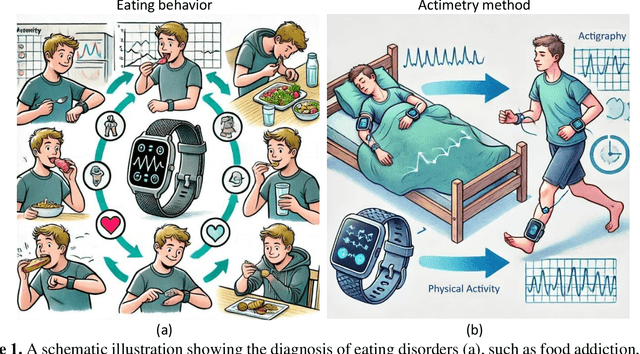

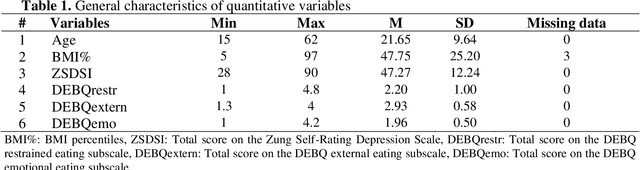

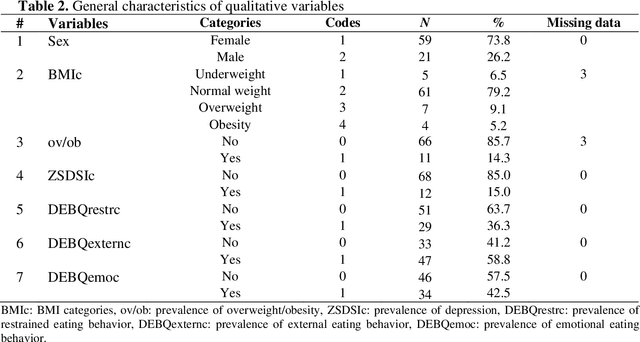

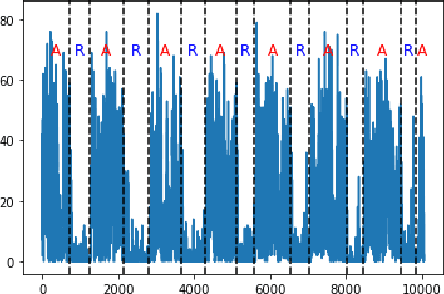

Objective Features Extracted from Motor Activity Time Series for Food Addiction Analysis Using Machine Learning

Aug 31, 2024

Abstract: This study investigates machine learning algorithms to identify objective features for diagnosing food addiction (FA) and assessing confirmed symptoms (SC). Data were collected from 81 participants (mean age: 21.5 years, range: 18-61 years, women: 77.8%) whose FA and SC were measured using the Yale Food Addiction Scale (YFAS). Participants provided demographic and anthropometric data, completed the YFAS, the Zung Self-Rating Depression Scale, and the Dutch Eating Behavior Questionnaire, and wore an actimeter on the non-dominant wrist for a week to record motor activity. Analysis of the actimetric data identified significant statistical and entropy-based features that accurately predicted FA and SC using ML. The Matthews correlation coefficient (MCC) was the primary metric. Activity-related features were more effective for FA prediction (MCC=0.88) than rest-related features (MCC=0.68). For SC, activity segments yielded MCC=0.47, rest segments MCC=0.38, and their combination MCC=0.51. Significant correlations were also found between actimetric features related to FA, emotional, and restrained eating behaviors, supporting the model's validity. Our results support the concept of a human bionic suite composed of IoT devices and ML sensors, which implements health digital assistance with real-time monitoring and analysis of physiological indicators related to FA and SC.
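Since the Matthews correlation coefficient is the primary metric here, a short sketch of its standard binary-classification formula may help when reading the reported values. The function name and the toy labels are illustrative only and are unrelated to the study's data.

```python
import math


def matthews_corrcoef(y_true, y_pred):
    """MCC for binary labels (1 = positive, 0 = negative); returns 0.0 if undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


mcc_perfect = matthews_corrcoef([1, 0, 1, 0], [1, 0, 1, 0])
mcc_inverted = matthews_corrcoef([1, 0, 1, 0], [0, 1, 0, 1])
mcc_partial = matthews_corrcoef([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1])
```

MCC ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction), and unlike plain accuracy it stays informative when the classes are imbalanced.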

Entropy-statistical approach to phase-locking detection of pulse oscillations: application for the analysis of biosignal synchronization

Jun 12, 2024

Abstract: In this study, a new method for analyzing synchronization in oscillator systems is proposed using the example of modeling the dynamics of a circuit of two resistively coupled pulse oscillators. The dynamic characteristic of synchronization is fuzzy entropy (FuzzyEn) calculated from a time series composed of the ratios of the number of pulse periods (subharmonic ratio, SHR) during phase-locking intervals. Low entropy values indicate strong synchronization, whereas high entropy values suggest weak synchronization between the two oscillators. This method effectively visualizes synchronized modes of the circuit using entropy maps of synchronization states. Additionally, a classification of synchronization states is proposed based on the dependencies of FuzzyEn on the length of embedding vectors of SHR time series. An extension of this method for analyzing non-relaxation (non-spike) type signals is illustrated using the example of phase-phase coupling rhythms of the local field potential of the rat hippocampus. The entropy-statistical approach using rational fractions and pulse signal forms makes this method promising for analyzing biosignal synchronization and implementing the algorithm in mobile digital platforms.
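The sketch below shows one common formulation of fuzzy entropy for a short scalar series, to illustrate why a regular sequence yields a low value and an irregular one a higher value. The parameter choices (`m=2`, `r=0.2` times the standard deviation, exponent `n=2`) are conventional defaults assumed here, not values taken from the paper, and the input series are synthetic stand-ins for SHR sequences.

```python
import math
import random
import statistics


def fuzzy_entropy(series, m=2, r=0.2, n=2):
    """FuzzyEn with mean-removed templates and membership exp(-(d**n) / tolerance)."""
    tol = r * statistics.pstdev(series)
    if tol == 0.0:
        return 0.0  # a constant series is treated as perfectly regular
    count = len(series) - m  # same number of templates for dimensions m and m + 1

    def phi(dim):
        vectors = []
        for i in range(count):
            seg = series[i:i + dim]
            mean = sum(seg) / dim
            vectors.append([v - mean for v in seg])
        total = 0.0
        for i in range(count):
            for j in range(count):
                if i != j:
                    # Chebyshev distance between the two templates.
                    d = max(abs(a - b) for a, b in zip(vectors[i], vectors[j]))
                    total += math.exp(-(d ** n) / tol)
        return total / (count * (count - 1))

    return math.log(phi(m)) - math.log(phi(m + 1))


regular = [0.0, 1.0] * 30          # strictly alternating, highly regular
random.seed(0)
irregular = [random.random() for _ in range(60)]

en_regular = fuzzy_entropy(regular)
en_irregular = fuzzy_entropy(irregular)
```

Under the abstract's interpretation, a value near zero on an SHR series would correspond to strong phase-locking, while larger values would point to weak synchronization.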

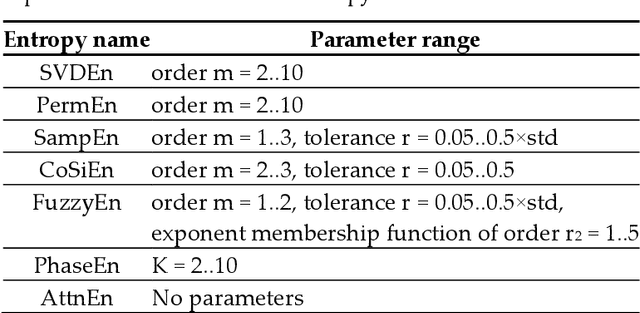

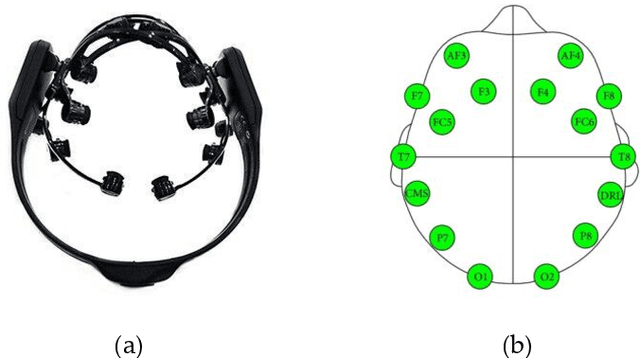

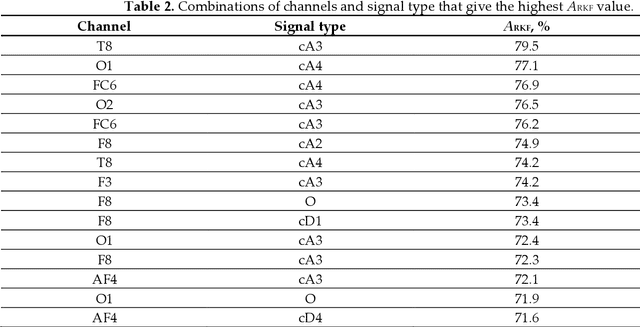

Entropy-based machine learning model for diagnosis and monitoring of Parkinson's Disease in smart IoT environment

Aug 28, 2023

Abstract: The study presents the concept of a computationally efficient machine learning (ML) model for diagnosing and monitoring Parkinson's disease (PD) in an Internet of Things (IoT) environment using rest-state EEG signals (rs-EEG). We computed different types of entropy from EEG signals and found that Fuzzy Entropy performed the best in diagnosing and monitoring PD using rs-EEG. We also investigated different combinations of signal frequency ranges and EEG channels to accurately diagnose PD. Finally, with fewer features (11 features), we achieved a maximum classification accuracy (ARKF) of ~99.9%. The most prominent frequency range of EEG signals has been identified, and we have found that high classification accuracy depends on low-frequency signal components (0-4 Hz). Moreover, the most informative signals were mainly received from the right hemisphere of the head (F8, P8, T8, FC6). Furthermore, because reducing the input data lowers the computational complexity, we assessed the accuracy of PD diagnosis using three different lengths of EEG data (150-1000 samples). As a result, we have achieved a maximum mean accuracy of 99.9% for a sample length (LEEG) of 1000 (~7.8 seconds), 98.2% for an LEEG of 800 (~6.2 seconds), and 79.3% for an LEEG of 150 (~1.2 seconds). By reducing the number of features and segment lengths, the computational cost of classification can be reduced. Lower-performance smart ML sensors can therefore be used in IoT environments to enhance human resilience to PD.

Imagery Tracking of Sun Activity Using 2D Circular Kernel Time Series Transformation, Entropy Measures and Machine Learning Approaches

Jun 14, 2023

Abstract: The sun is highly complex in nature, and its observatory imagery is one of the most important sources of information about solar activity and about space and Earth's weather conditions. NASA's Solar Dynamics Observatory captures approximately 70,000 images of the sun's activity per day, and continuous visual inspection of these solar observatory images is challenging. In this study, we developed a technique for tracking the sun's activity using a 2D circular kernel time series transformation, statistical and entropy measures, and machine learning approaches. The technique involves transforming a section of the solar observatory image into a 1-dimensional time series (1-DTS), while statistical and entropy measures (Approach 1) and direct classification (Approach 2) are used to capture features from the 1-DTS for machine learning classification into 'solar storm' and 'no storm'. We found that the potential accuracy of the model in tracking the activity of the sun is approximately 0.981 for Approach 1 and 0.999 for Approach 2. The stability of the developed approach to rotational transformation of the solar observatory image is evident. When training on the original dataset, the match index (T90) of the distribution of solar storm areas reaches T90 ~ 0.993 for Approach 1 and T90 ~ 0.951 for Approach 2. In addition, when using the extended training base, the match indices increased to T90 ~ 0.994 and T90 ~ 1, respectively. This model consistently classifies areas with swirling magnetic lines associated with solar storms and is robust to image rotation, glare, and optical artifacts.

A Bio-Inspired Chaos Sensor Based on the Perceptron Neural Network: Concept and Application for Computational Neuro-science

Jun 03, 2023

Abstract: The study presents a bio-inspired chaos sensor based on the perceptron neural network. After training, the perceptron-based sensor, which has 50 neurons in the hidden layer and 1 neuron at the output, approximates the fuzzy entropy of short time series with high accuracy, with a determination coefficient R2 ~ 0.9. The Hindmarsh-Rose spike model was used to generate time series of spike intervals and datasets for training and testing the perceptron. The selection of the hyperparameters of the perceptron model and the estimation of the sensor accuracy were performed using the K-block cross-validation method. Even for a hidden layer with 1 neuron, the model approximates the fuzzy entropy with good results, with the metric R2 ~ 0.5-0.8. In a simplified model with 1 neuron and equal weights in the first layer, the principle of approximation is based on the linear transformation of the average value of the time series into the entropy value. The bio-inspired chaos sensor model based on an ensemble of neurons is able to dynamically track the chaotic behavior of a spiked biosystem and transmit this information to other parts of the biosystem for further processing. The study will be useful for specialists in the field of computational neuroscience.

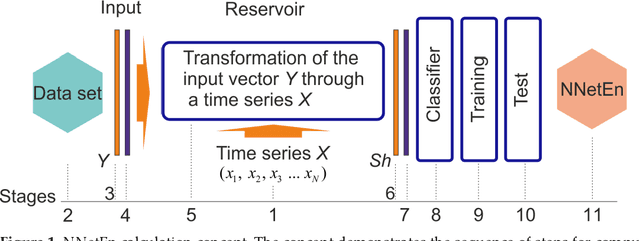

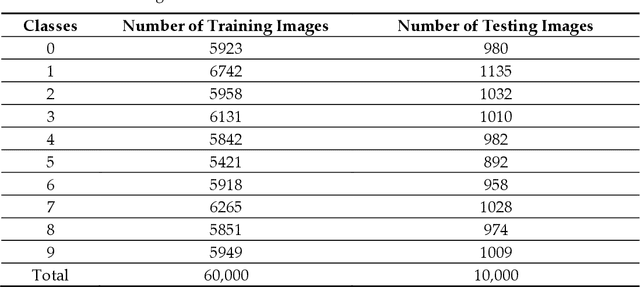

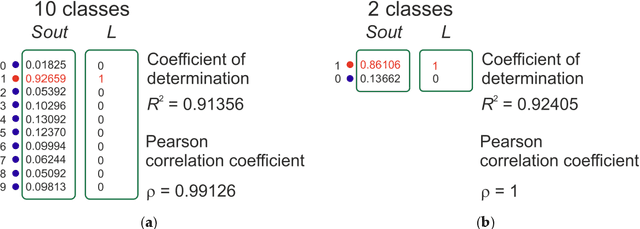

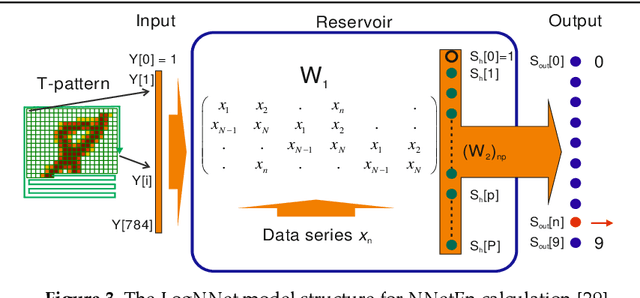

Neural Network Entropy (NNetEn): EEG Signals and Chaotic Time Series Separation by Entropy Features, Python Package for NNetEn Calculation

Mar 31, 2023

Abstract: Entropy measures are effective features for time series classification problems. Traditional entropy measures, such as Shannon entropy, use a probability distribution function. However, for the effective separation of time series, new entropy estimation methods are required to characterize the chaotic dynamics of the system. Our concept of Neural Network Entropy (NNetEn) is based on the classification of special datasets (MNIST-10 and SARS-CoV-2-RBV1) in relation to the entropy of the time series recorded in the reservoir of the LogNNet neural network. NNetEn estimates the chaotic dynamics of time series in an original way. Based on the NNetEn algorithm, we propose two new classification metrics: R2 Efficiency and Pearson Efficiency. The efficiency of NNetEn is verified on the separation of two chaotic time series of sine mapping using dispersion analysis (ANOVA). For two close dynamic time series (r = 1.1918 and r = 1.2243), the F-ratio reached a value of 124, reflecting the high efficiency of the introduced method in classification problems. The EEG signal classification for healthy persons and patients with Alzheimer's disease illustrates the practical application of the NNetEn features. Our computations demonstrate the synergistic effect of increasing classification accuracy when applying traditional entropy measures and the NNetEn concept conjointly. An implementation of the algorithms in Python is presented.

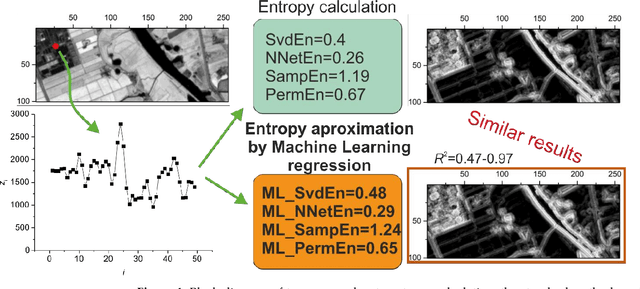

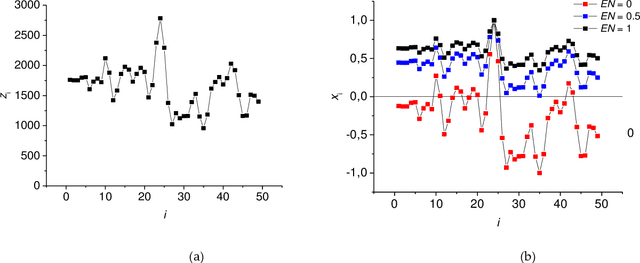

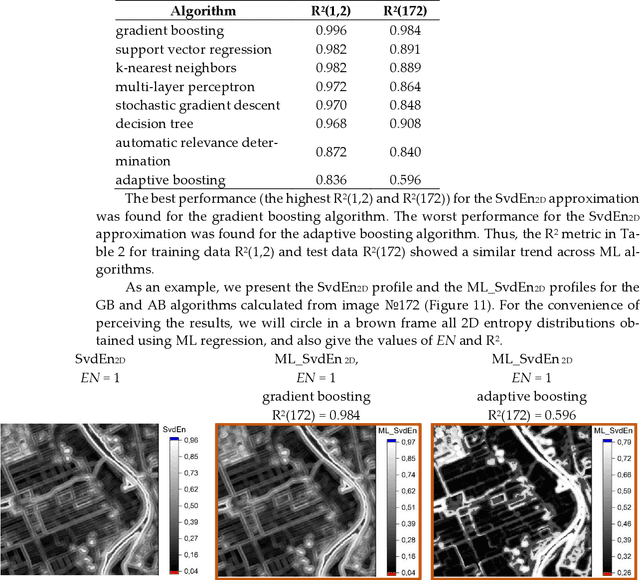

Entropy Approximation by Machine Learning Regression: Application for Irregularity Evaluation of Images in Remote Sensing

Oct 13, 2022

Abstract: Approximation of entropies of various types using machine learning (ML) regression methods is shown for the first time. The ML models presented in this study define the complexity of short time series by approximating dissimilar entropy techniques such as Singular value decomposition entropy (SvdEn), Permutation entropy (PermEn), Sample entropy (SampEn) and Neural Network entropy (NNetEn) and their 2D analogues. A new method for calculating SvdEn2D, PermEn2D and SampEn2D for 2D images was tested using the technique of circular kernels. Training and test datasets on the basis of Sentinel-2 images are presented (2 train images and 198 test images). The results of entropy approximation are demonstrated using the example of calculating the 2D entropy of Sentinel-2 images and R2 metric evaluation. Applicability of the method for short time series with length from N = 5 to N = 113 elements is shown. A tendency for the R2 metric to decrease with an increase in the length of the time series was found. For SvdEn entropy, the regression accuracy is R2 > 0.99 for N = 5 and R2 > 0.82 for N = 113. The best metrics are observed for the ML_SvdEn2D and ML_NNetEn2D models. The results of the study can be used for fundamental research of entropy approximations of various types using ML regression, as well as for accelerating entropy calculations in remote sensing.
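For context on what the regression models are approximating, the sketch below computes permutation entropy (PermEn) directly for a short series using the standard ordinal-pattern definition. It shows only the reference calculation, not the paper's ML approximation or its 2D circular-kernel variant; the function name, the `order` parameter, and the choice of bits as the unit are assumptions made for illustration.

```python
import math
from collections import Counter


def permutation_entropy(series, order=3):
    """Shannon entropy (in bits) of the ordinal patterns of length `order`."""
    patterns = Counter(
        # The pattern is the index order that sorts the window; ties keep index order.
        tuple(sorted(range(order), key=lambda k: series[i + k]))
        for i in range(len(series) - order + 1)
    )
    total = sum(patterns.values())
    return -sum((c / total) * math.log2(c / total) for c in patterns.values())


pe_example = permutation_entropy([4, 7, 9, 10, 6, 11, 3], order=2)
pe_monotone = permutation_entropy([1, 2, 3, 4, 5, 6], order=3)
```

A regression model trained on pairs of short series and their entropy values could then replace this explicit pattern counting at inference time, which appears to be the speed-up the abstract has in mind for remote sensing.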
