Hang Ruan

Weizmann Institute of Science

IPEC: Test-Time Incremental Prototype Enhancement Classifier for Few-Shot Learning

Jan 16, 2026

Abstract: Metric-based few-shot approaches have gained significant popularity due to their relatively straightforward implementation, high interpretability, and computational efficiency. However, they are limited by the batch-independence assumption during testing, which prevents the model from leveraging valuable knowledge accumulated from previous batches. To address this limitation, we propose the Incremental Prototype Enhancement Classifier (IPEC), a novel test-time method that optimizes prototype estimation by leveraging information from previous query samples. IPEC maintains a dynamic auxiliary set by selectively incorporating query samples that are classified with high confidence. To ensure sample quality, we design a robust dual-filtering mechanism that assesses each query sample based on both global prediction confidence and local discriminative ability. By aggregating this auxiliary set with the support set in subsequent tasks, IPEC builds progressively more stable and representative prototypes, effectively reducing its reliance on the initial support set. We ground this approach in a Bayesian interpretation, conceptualizing the support set as a prior and the auxiliary set as a data-driven posterior, which in turn motivates the design of a practical "warm-up and test" two-stage inference protocol. Extensive empirical results validate the superior performance of our proposed method across multiple few-shot classification tasks.
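The auxiliary-set idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the two filters, so the softmax confidence threshold (standing in for the global filter) and the nearest-to-second-nearest distance ratio (standing in for the local filter) are assumptions, as are all function names, parameter names, and default values.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def build_prototypes(support, auxiliary):
    """One prototype per class: mean of support plus accumulated auxiliary samples."""
    return {c: mean_vector(support[c] + auxiliary.get(c, [])) for c in support}

def run_batch(support, auxiliary, queries, conf_threshold=0.9, ratio_threshold=0.5):
    """Classify one batch, then grow `auxiliary` in place with accepted queries.

    Both filters are hypothetical stand-ins for the paper's dual filtering.
    Prototypes are fixed for the whole batch; new samples affect later batches.
    """
    prototypes = build_prototypes(support, auxiliary)
    classes = sorted(prototypes)
    predictions = []
    for q in queries:
        dists = [sq_dist(q, prototypes[c]) for c in classes]
        probs = softmax([-d for d in dists])
        best = min(range(len(classes)), key=dists.__getitem__)
        label = classes[best]
        predictions.append(label)
        # Assumed global filter: softmax confidence of the predicted class.
        confident = probs[best] >= conf_threshold
        # Assumed local filter: nearest prototype must be much closer than the runner-up.
        ranked = sorted(dists)
        separated = len(ranked) < 2 or ranked[0] <= ratio_threshold * ranked[1]
        if confident and separated:
            auxiliary.setdefault(label, []).append(q)
    return predictions
```

Calling `run_batch` on a few batches before the batches that are actually scored loosely mirrors the "warm-up and test" protocol: the warm-up calls only serve to populate `auxiliary`, so later prototypes depend less on the initial support set.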

InfoSculpt: Sculpting the Latent Space for Generalized Category Discovery

Jan 15, 2026

Abstract: Generalized Category Discovery (GCD) aims to classify instances from both known and novel categories within a large-scale unlabeled dataset, a critical yet challenging task for real-world, open-world applications. However, existing methods often rely on pseudo-labeling or two-stage clustering, which lack a principled mechanism to explicitly disentangle essential, category-defining signals from instance-specific noise. In this paper, we address this fundamental limitation by re-framing GCD from an information-theoretic perspective, grounded in the Information Bottleneck (IB) principle. We introduce InfoSculpt, a novel framework that systematically sculpts the representation space by minimizing a dual Conditional Mutual Information (CMI) objective. InfoSculpt uniquely combines a Category-Level CMI on labeled data to learn compact and discriminative representations for known classes, and a complementary Instance-Level CMI on all data to distill invariant features by compressing augmentation-induced noise. These two objectives work synergistically at different scales to produce a disentangled and robust latent space where categorical information is preserved while noisy, instance-specific details are discarded. Extensive experiments on 8 benchmarks validate the effectiveness of our information-theoretic approach.

EfficientFSL: Enhancing Few-Shot Classification via Query-Only Tuning in Vision Transformers

Jan 13, 2026

Abstract: Large models such as Vision Transformers (ViTs) have demonstrated remarkable superiority over smaller architectures like ResNet in few-shot classification, owing to their powerful representational capacity. However, fine-tuning such large models demands extensive GPU memory and prolonged training time, making them impractical for many real-world low-resource scenarios. To bridge this gap, we propose EfficientFSL, a query-only fine-tuning framework tailored specifically for few-shot classification with ViTs, which achieves competitive performance while significantly reducing computational overhead. EfficientFSL fully leverages the knowledge embedded in the pre-trained model and its strong comprehension ability, achieving high classification accuracy with an extremely small number of tunable parameters. Specifically, we introduce a lightweight trainable Forward Block to synthesize task-specific queries that extract informative features from the intermediate representations of the pre-trained model in a query-only manner. We further propose a Combine Block to fuse multi-layer outputs, enhancing the depth and robustness of feature representations. Finally, a Support-Query Attention Block mitigates distribution shift by adjusting prototypes to align with the query set distribution. With minimal trainable parameters, EfficientFSL achieves state-of-the-art performance on four in-domain few-shot datasets and six cross-domain datasets, demonstrating its effectiveness in real-world applications.

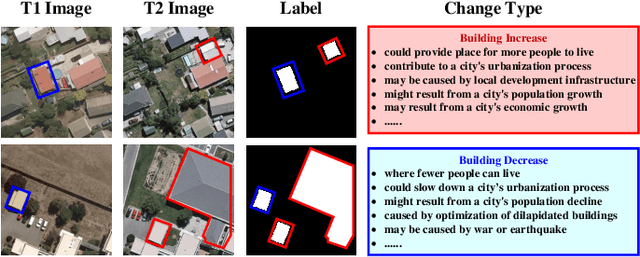

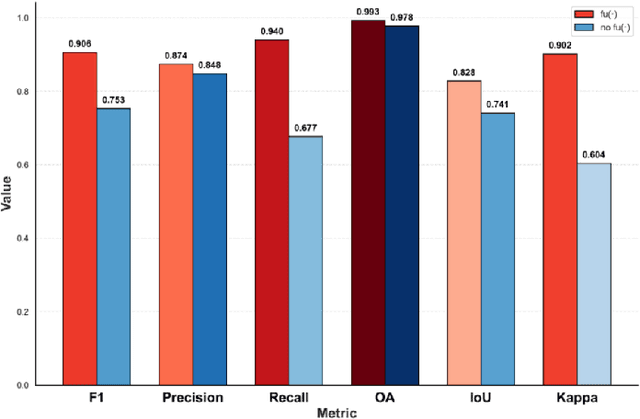

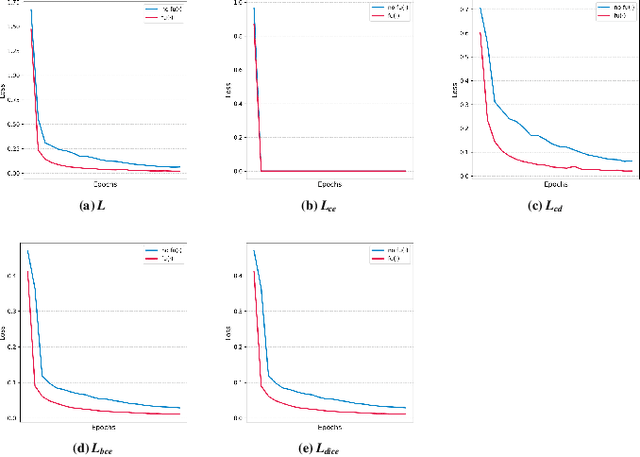

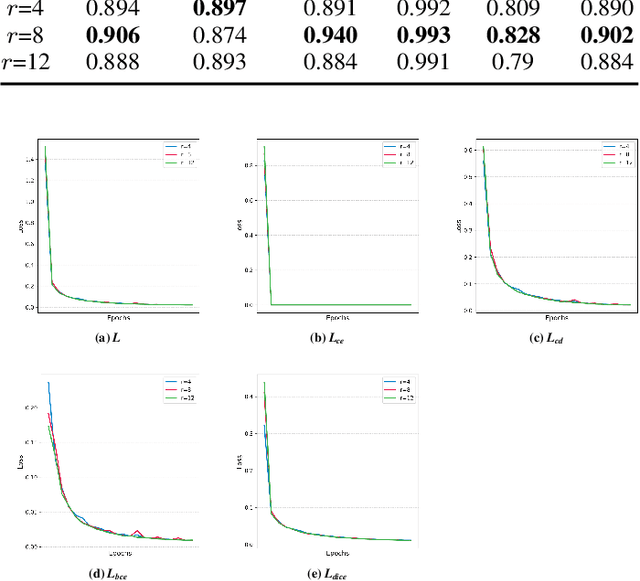

ReasonCD: A Multimodal Reasoning Large Model for Implicit Change-of-Interest Semantic Mining

Dec 22, 2025

Abstract: Remote sensing image change detection is one of the fundamental tasks in remote sensing intelligent interpretation. Its core objective is to identify changes within change regions of interest (CRoI). Current multimodal large models encode rich human semantic knowledge, which is utilized for guidance in tasks such as remote sensing change detection. However, existing methods that use semantic guidance for detecting users' CRoI overly rely on explicit textual descriptions of CRoI, leading to the problem of near-complete performance failure when presented with implicit CRoI textual descriptions. This paper proposes a multimodal reasoning change detection model named ReasonCD, capable of mining users' implicit task intent. The model leverages the powerful reasoning capabilities of pre-trained large language models to mine users' implicit task intents and subsequently obtains different change detection results based on these intents. Experiments on public datasets demonstrate that the model achieves excellent change detection performance, with an F1 score of 92.1% on the BCDD dataset. Furthermore, to validate its superior reasoning functionality, this paper annotates a subset of reasoning data based on the SECOND dataset. Experimental results show that the model not only excels at basic reasoning-based change detection tasks but can also explain the reasoning process to aid human decision-making.

RIS-Assisted Near-Field ISAC for Multi-Target Indication in NLoS Scenarios

Sep 10, 2025

Abstract: Enabling multi-target sensing in near-field integrated sensing and communication (ISAC) systems is a key challenge, particularly when line-of-sight paths are blocked. This paper proposes a beamforming framework that leverages a reconfigurable intelligent surface (RIS) to achieve multi-target indication. Our contribution is the extension of classic beampattern gain and inter-target cross-correlation metrics to the near-field, leveraging both angle and distance information to discriminate between multiple users and targets. We formulate a problem to maximize the worst-case sensing performance by jointly designing the beamforming at the base station and the phase shifts at the RIS, while guaranteeing communication rates. The non-convex problem is solved via an efficient alternating optimization (AO) algorithm that utilizes semidefinite relaxation (SDR). Simulations demonstrate that our RIS-assisted framework enables high-resolution sensing of co-angle targets in blocked scenarios.

Task-Based Quantizer Design for Sensing With Random Signals

Mar 17, 2024

Abstract: In integrated sensing and communication (ISAC) systems, random signaling is used to convey useful information as well as sense the environment. Such randomness poses challenges for various components of sensing signal processing. In this paper, we investigate quantizer design for sensing in ISAC systems. Unlike quantizers for channel estimation in massive multiple-input multiple-output (MIMO) communication systems, sensing in ISAC systems needs to deal with random nonorthogonal transmitted signals rather than a fixed orthogonal pilot. Considering sensing performance and hardware implementation, we focus on task-based hardware-limited quantization with spatial analog combining. We propose two strategies of quantizer optimization, i.e., data-dependent (DD) and data-independent (DI). The former achieves optimized sensing performance with high implementation overhead. To reduce hardware complexity, the latter optimizes the quantizer with respect to the random signal from a stochastic perspective. We derive the optimal quantizers for both strategies and formulate an algorithm based on sample average approximation (SAA) to solve the optimization in the DI strategy. Numerical results show that the optimized quantizers outperform digital-only quantizers in terms of sensing performance. Additionally, the DI strategy, despite its lower computational complexity compared to the DD strategy, achieves near-optimal sensing performance.

Rethinking and Benchmarking Predict-then-Optimize Paradigm for Combinatorial Optimization Problems

Nov 19, 2023

Abstract: Numerous web applications rely on solving combinatorial optimization problems, such as energy cost-aware scheduling, budget allocation in web advertising, and graph matching on social networks. However, many optimization problems involve unknown coefficients, and inaccurate predictions of these coefficients may lead to inferior decisions that cause energy wastage, inefficient resource allocation, inappropriate matching in social networks, etc. This research topic is referred to as "Predict-Then-Optimize (PTO)", which considers the performance of prediction and decision-making in a unified system. A noteworthy recent development is end-to-end methods that directly optimize the ultimate decision quality and claim to yield better results than the traditional two-stage approach. However, the evaluation benchmarks in this field are fragmented and the effectiveness of various models in different scenarios remains unclear, hindering the comprehensive assessment and fast deployment of these methods. To address these issues, we provide a comprehensive categorization of current approaches and integrate existing experimental scenarios to establish a unified benchmark, elucidating the circumstances under which end-to-end training yields improvements, as well as the contexts in which it performs ineffectively. We also introduce and open-source a new dataset for the industrial combinatorial advertising problem for inclusive finance. We hope the rethinking and benchmarking of PTO could facilitate more convenient evaluation and deployment, and inspire further improvements in both academia and industry within this field.
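The gap between prediction accuracy and decision quality that motivates this line of work can be shown on a toy problem. The sketch below is illustrative only and is not taken from the paper's benchmark: the top-k selection problem, the use of regret as the decision-quality measure, and all function names are assumptions chosen for brevity.

```python
def solve_top_k(values, k):
    """Toy combinatorial problem: choose the k items with the largest value."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return sorted(order[:k])

def objective(values, chosen):
    """Total value of the chosen items under the given coefficients."""
    return sum(values[i] for i in chosen)

def regret(true_values, predicted_values, k):
    """Value lost by deciding with predicted instead of true coefficients."""
    decision = solve_top_k(predicted_values, k)
    optimum = solve_top_k(true_values, k)
    return objective(true_values, optimum) - objective(true_values, decision)

def mse(true_values, predicted_values):
    """Mean squared prediction error."""
    n = len(true_values)
    return sum((t - p) ** 2 for t, p in zip(true_values, predicted_values)) / n

true_values = [10.0, 9.0, 1.0, 0.0]
# Large but uniform error: the ranking of items is preserved.
pred_biased = [12.0, 11.0, 3.0, 2.0]
# Small error overall, but the two best items are swapped.
pred_swapped = [9.0, 9.5, 1.0, 0.0]
```

With `k = 1`, `pred_biased` has the larger mean squared error yet incurs no regret, while `pred_swapped` has the smaller error but selects the wrong item. A two-stage pipeline that fits predictions by error alone cannot distinguish these cases, which is the kind of situation end-to-end training on decision quality is intended to address.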
