Hanan Hindy

A Novel Contrastive Loss for Zero-Day Network Intrusion Detection

Jan 14, 2026

Abstract:Machine learning has achieved state-of-the-art results in network intrusion detection; however, its performance significantly degrades when confronted by a new attack class -- a zero-day attack. In simple terms, classical machine learning-based approaches are adept at identifying attack classes on which they have been previously trained, but struggle with those not included in their training data. One approach to addressing this shortcoming is to utilise anomaly detectors which train exclusively on benign data with the goal of generalising to all attack classes -- both known and zero-day. However, this comes at the expense of a prohibitively high false positive rate. This work proposes a novel contrastive loss function which is able to maintain the advantages of other contrastive learning-based approaches (robustness to imbalanced data) but can also generalise to zero-day attacks. Unlike anomaly detectors, this model learns the distributions of benign traffic using both benign and known malign samples, i.e. other well-known attack classes (not including the zero-day class), and consequently, achieves significant performance improvements. The proposed approach is experimentally verified on the Lycos2017 dataset where it achieves an AUROC improvement of .000065 and .060883 over previous models in known and zero-day attack detection, respectively. Finally, the proposed method is extended to open-set recognition achieving OpenAUC improvements of .170883 over existing approaches.

* Published in: IEEE Transactions on Network and Service Management (TNSM), 2026. Official version: https://ieeexplore.ieee.org/document/11340750 Code: https://github.com/jackwilkie/CLOSR
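The abstract above reports its gains as AUROC differences. As a reminder of what that metric measures, here is a minimal sketch of AUROC computed directly from its pairwise definition: the probability that a randomly chosen attack sample receives a higher anomaly score than a randomly chosen benign one. The function name, scores, and labels are illustrative assumptions and are not taken from the paper or its code.

```python
def auroc(scores, labels):
    """AUROC from its pairwise definition.

    scores: anomaly scores (higher means more attack-like).
    labels: 1 for attack, 0 for benign.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one sample of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # attack ranked above benign
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(pos) * len(neg))


# Hypothetical scores for two benign and two attack samples.
scores = [0.1, 0.4, 0.35, 0.8]
labels = [0, 0, 1, 1]
print(auroc(scores, labels))
```

The quadratic loop is only practical for small samples; it is written this way to make the definition visible, not for use on a full dataset.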

TapToTab : Video-Based Guitar Tabs Generation using AI and Audio Analysis

Sep 13, 2024

Abstract:The automation of guitar tablature generation from video inputs holds significant promise for enhancing music education, transcription accuracy, and performance analysis. Existing methods face challenges with consistency and completeness, particularly in detecting fretboards and accurately identifying notes. To address these issues, this paper introduces an advanced approach leveraging deep learning, specifically YOLO models for real-time fretboard detection, and Fourier Transform-based audio analysis for precise note identification. Experimental results demonstrate substantial improvements in detection accuracy and robustness compared to traditional techniques. This paper outlines the development, implementation, and evaluation of these methodologies, aiming to revolutionize guitar instruction by automating the creation of guitar tabs from video recordings.
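The Fourier Transform-based note identification mentioned above can be illustrated with a small sketch: find the strongest frequency bin of a short audio frame, then map that frequency to the nearest equal-tempered note. This is a simplified illustration under stated assumptions (a single pure tone, a naive DFT, A4 tuned to 440 Hz), not the authors' pipeline; all names and parameters are hypothetical.

```python
import cmath
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest bin of a naive DFT."""
    n = len(samples)
    best_bin, best_mag = 1, 0.0
    for k in range(1, n // 2):          # skip DC, stay below Nyquist
        acc = 0j
        for t, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n


def note_name(freq_hz):
    """Map a frequency to the nearest note name, assuming A4 = 440 Hz."""
    midi = round(69 + 12 * math.log2(freq_hz / 440.0))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


# Synthetic test tone: 440 Hz sampled at 4096 Hz (bin spacing of 8 Hz).
sample_rate = 4096
n = 512
tone = [math.sin(2 * math.pi * 440.0 * t / sample_rate) for t in range(n)]

freq = dominant_frequency(tone, sample_rate)
print(freq, note_name(freq))
```

Real guitar audio contains harmonics and overlapping strings, so a practical system would need windowing, a fast FFT, and some form of pitch tracking on top of this peak-picking step.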

An Evaluation of Lightweight Deep Learning Techniques in Medical Imaging for High Precision COVID-19 Diagnostics

May 30, 2023

Abstract:Timely and rapid diagnoses are core to informing on optimum interventions that curb the spread of COVID-19. The use of medical images such as chest X-rays and CTs has been advocated to supplement the Reverse-Transcription Polymerase Chain Reaction (RT-PCR) test, which in turn has stimulated the application of deep learning techniques in the development of automated systems for the detection of infections. Decision support systems relax the challenges inherent to the physical examination of images, which is both time consuming and requires interpretation by highly trained clinicians. A review of relevant reported studies to date shows that most of the deep learning approaches utilised are not amenable to implementation on resource-constrained devices. Given that the rate of infections is increasing, rapid, trusted diagnoses are a central tool in the management of the spread, mandating a need for low-cost and mobile point-of-care detection systems, especially for middle- and low-income nations. The paper presents the development and evaluation of the performance of a lightweight deep learning technique for the detection of COVID-19 using the MobileNetV2 model. Results demonstrate that the performance of the lightweight deep learning model is competitive with respect to heavyweight models but delivers a significant increase in the efficiency of deployment, notably in the lowering of the cost and memory requirements of computing resources.

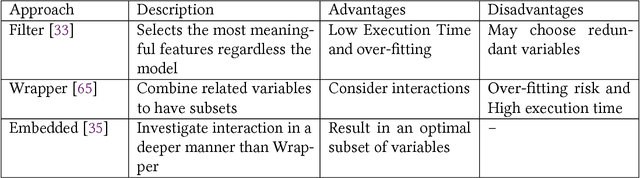

Utilising Flow Aggregation to Classify Benign Imitating Attacks

Mar 06, 2021

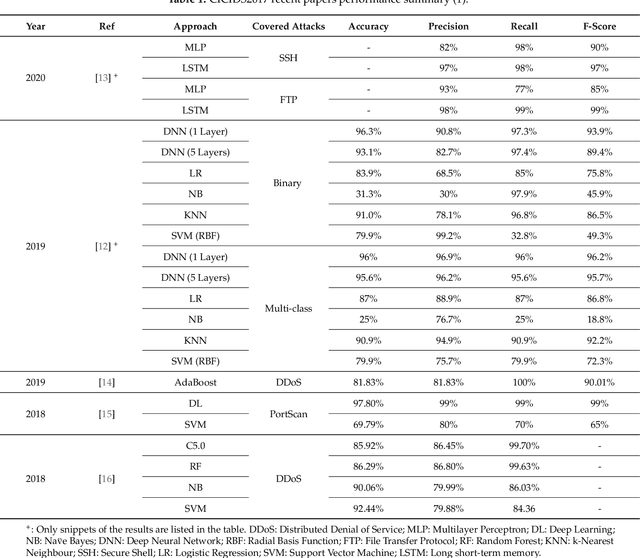

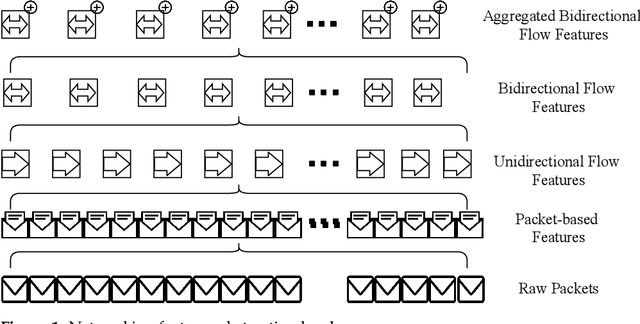

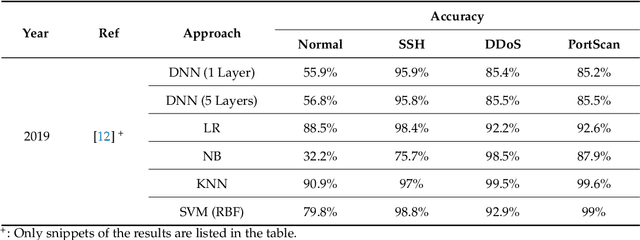

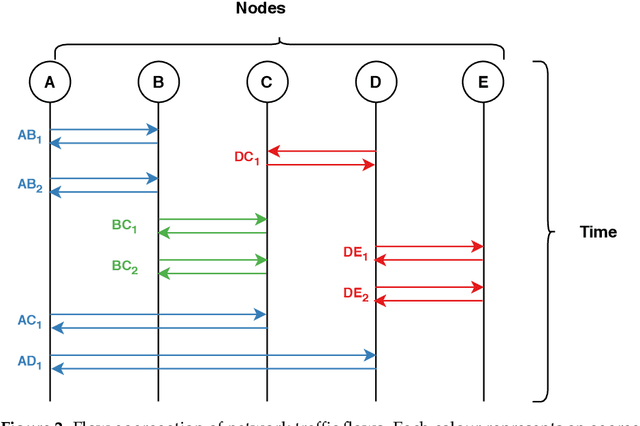

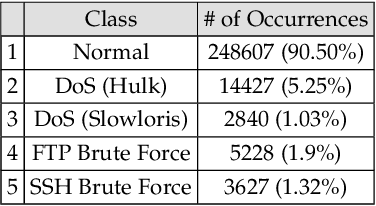

Abstract:Cyber-attacks continue to grow, both in terms of volume and sophistication. This is aided by an increase in available computational power, expanding attack surfaces, and advancements in the human understanding of how to make attacks undetectable. Unsurprisingly, machine learning is utilised to defend against these attacks. In many applications, the choice of features is more important than the choice of model. A range of studies have, with varying degrees of success, attempted to discriminate between benign traffic and well-known cyber-attacks. The features used in these studies are broadly similar and have demonstrated their effectiveness in situations where cyber-attacks do not imitate benign behaviour. To overcome this barrier, in this manuscript, we introduce new features based on a higher level of abstraction of network traffic. Specifically, we perform flow aggregation by grouping flows with similarities. This additional level of feature abstraction benefits from cumulative information, thus qualifying the models to classify cyber-attacks that mimic benign traffic. The performance of the new features is evaluated using the benchmark CICIDS2017 dataset, and the results demonstrate their validity and effectiveness. This novel proposal will improve the detection accuracy of cyber-attacks and also build towards a new direction of feature extraction for complex ones.

* 21 pages, 6 figures
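The flow aggregation idea described in the abstract above, grouping similar flows and deriving cumulative features from each group, can be sketched as follows. The grouping key (source, destination, fixed time window) and the four aggregate features are assumptions chosen for illustration; the abstract does not specify which similarities or features the authors use.

```python
from collections import defaultdict
from statistics import mean


def aggregate_flows(flows, window_s=60):
    """Group flows by (src, dst, time window) and compute group-level features.

    The grouping key and feature set are hypothetical, for illustration only.
    """
    groups = defaultdict(list)
    for f in flows:
        key = (f["src"], f["dst"], int(f["start"] // window_s))
        groups[key].append(f)

    features = {}
    for key, members in groups.items():
        features[key] = {
            "flow_count": len(members),
            "total_bytes": sum(m["bytes"] for m in members),
            "mean_duration": mean(m["duration"] for m in members),
            "distinct_dst_ports": len({m["dst_port"] for m in members}),
        }
    return features


# Hypothetical flow records.
flows = [
    {"src": "10.0.0.5", "dst": "10.0.0.9", "dst_port": 80, "start": 1.0, "duration": 0.5, "bytes": 300},
    {"src": "10.0.0.5", "dst": "10.0.0.9", "dst_port": 80, "start": 20.0, "duration": 1.5, "bytes": 500},
    {"src": "10.0.0.5", "dst": "10.0.0.9", "dst_port": 443, "start": 70.0, "duration": 1.0, "bytes": 200},
    {"src": "10.0.0.7", "dst": "10.0.0.9", "dst_port": 22, "start": 5.0, "duration": 2.0, "bytes": 1000},
]

agg = aggregate_flows(flows)
for key, feats in agg.items():
    print(key, feats)
```

A single flow that looks benign in isolation may stand out once it is seen alongside many near-identical flows in the same window, which is the intuition behind adding group-level features.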

Leveraging Siamese Networks for One-Shot Intrusion Detection Model

Jun 27, 2020

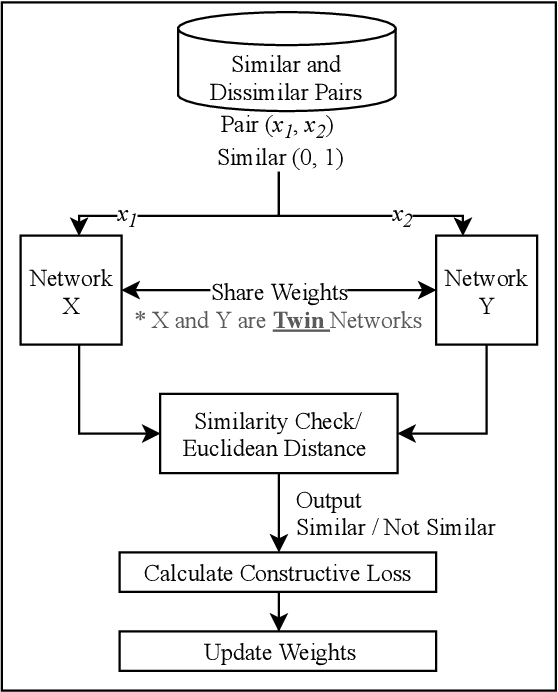

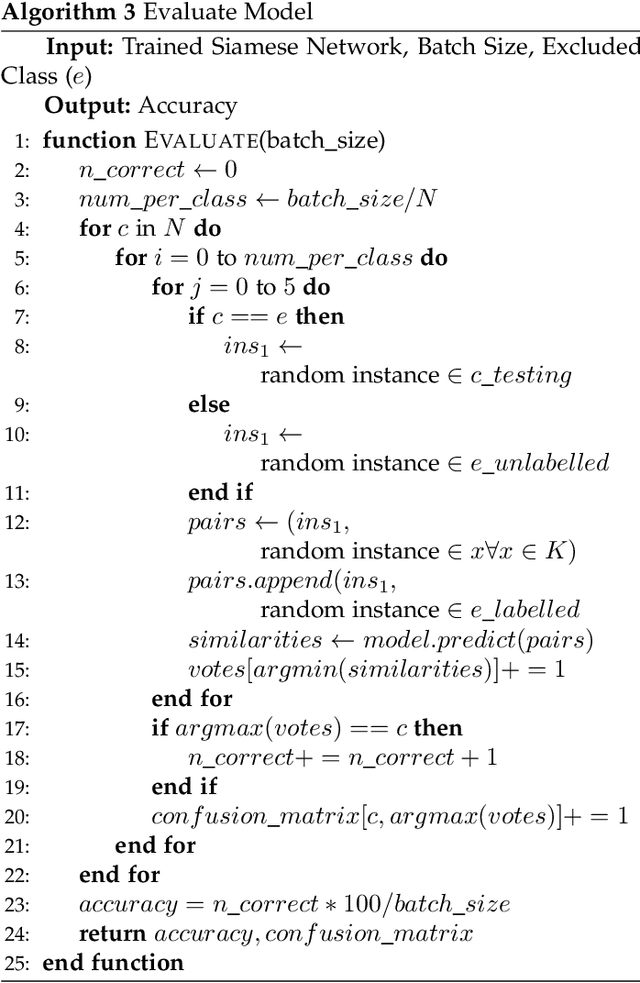

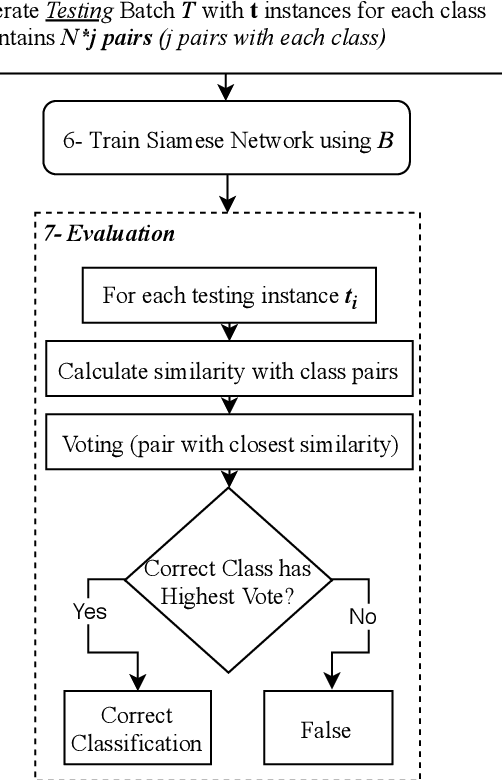

Abstract:The use of supervised Machine Learning (ML) to enhance Intrusion Detection Systems has been the subject of significant research. Supervised ML is based upon learning by example, demanding significant volumes of representative instances for effective training and the need to re-train the model for every unseen cyber-attack class. However, retraining the models in-situ renders the network susceptible to attacks owing to the time-window required to acquire a sufficient volume of data. Although anomaly detection systems provide a coarse-grained defence against unseen attacks, these approaches are significantly less accurate and suffer from high false-positive rates. Here, a complementary approach referred to as 'One-Shot Learning' is detailed, whereby a limited number of examples of a new attack-class is used to identify that attack-class (out of many). The model grants a new cyber-attack classification without retraining. A Siamese Network is trained to differentiate between classes based on pair similarities, rather than features, allowing it to identify new and previously unseen attacks. The performance of a pre-trained model to classify attack-classes based only on one example is evaluated using three datasets. Results confirm the adaptability of the model in classifying unseen attacks and the trade-off between performance and the need for distinctive class representation.

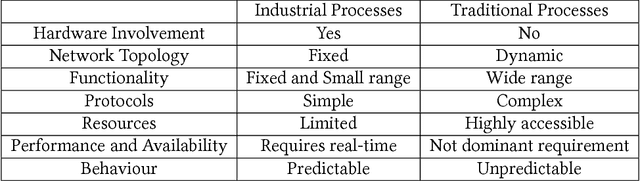
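The one-shot classification step described in the Siamese Network abstract above can be sketched as a nearest-match lookup: a new sample is compared against one labelled example per class, and the most similar class wins, so adding a class only requires adding one example. In this sketch the trained twin network is replaced by a placeholder identity embedding and Euclidean distance, which is an assumption for illustration and not the authors' model; the feature vectors and class names are hypothetical.

```python
import math


def embed(x):
    """Placeholder for the trained Siamese branch (identity, for illustration)."""
    return list(x)


def distance(a, b):
    """Dissimilarity of a pair: Euclidean distance between embeddings."""
    return math.dist(embed(a), embed(b))


def one_shot_classify(sample, support):
    """Return the label whose single support example is closest to the sample."""
    return min(support, key=lambda label: distance(sample, support[label]))


# One hypothetical example per class; "new_attack" is added without retraining.
support = {
    "benign": [0.1, 0.2],
    "dos": [0.9, 0.8],
    "new_attack": [0.2, 0.9],
}

print(one_shot_classify([0.25, 0.85], support))
print(one_shot_classify([0.15, 0.25], support))
```

The quality of the result depends on how representative the single support example is, which matches the trade-off the abstract notes between performance and distinctive class representation.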

Improving SIEM for Critical SCADA Water Infrastructures Using Machine Learning

Mar 06, 2019

Abstract:Network Control Systems (NAC) have been used in many industrial processes. They aim to reduce the human factor burden and efficiently handle the complex process and communication of those systems. Supervisory control and data acquisition (SCADA) systems are used in industrial, infrastructure and facility processes (e.g. manufacturing, fabrication, oil and water pipelines, building ventilation, etc.). Like other Internet of Things (IoT) implementations, SCADA systems are vulnerable to cyber-attacks; therefore, robust anomaly detection is a major requirement. However, building an accurate anomaly detection system is not an easy task, due to the difficulty of differentiating between cyber-attacks and internal system failures (e.g. hardware failures). In this paper, we present a model that detects anomaly events in a water system controlled by SCADA. Six Machine Learning techniques have been used in building and evaluating the model. The model classifies different anomaly events including hardware failures (e.g. sensor failures), sabotage and cyber-attacks (e.g. DoS and Spoofing). Unlike other detection systems, our proposed work focuses on notifying the operator when an anomaly occurs, together with the probability of the event occurring. This additional information helps in accelerating the mitigation process. The model is trained and tested using a real-world dataset.

* 17 pages, 8 figures, 4 tables. In the proceedings of the International Workshop on the Security of Industrial Control Systems and Cyber-Physical Systems (CyberICPS), in conjunction with ESORICS 2018

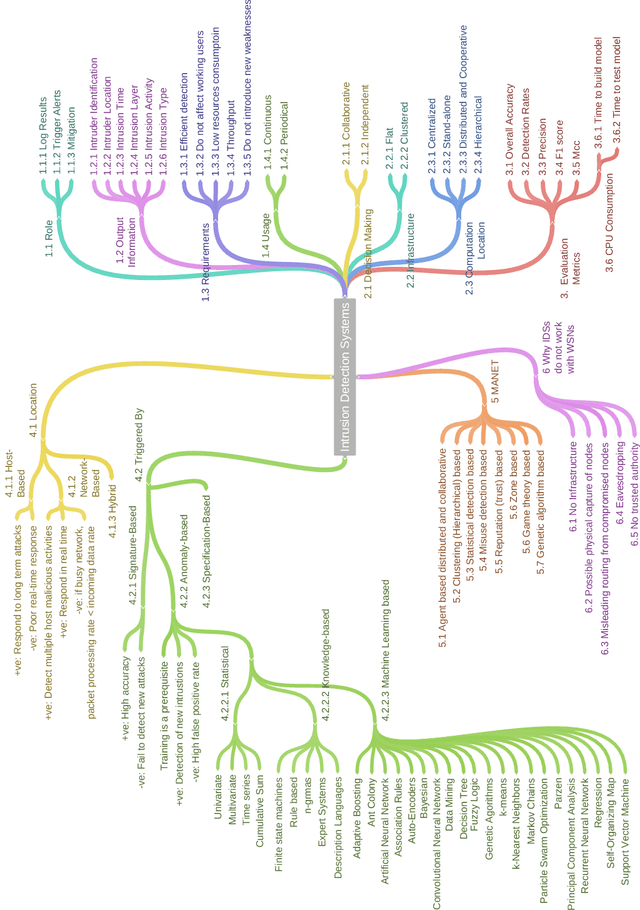

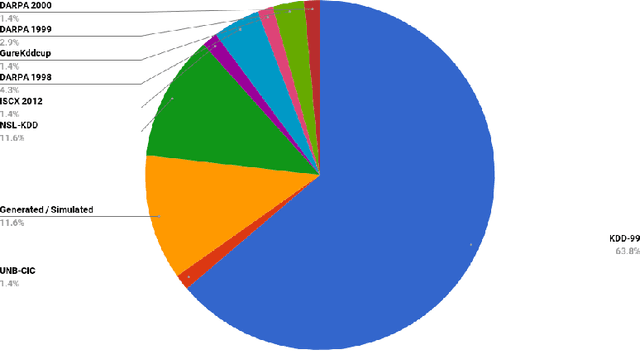

A Taxonomy and Survey of Intrusion Detection System Design Techniques, Network Threats and Datasets

Jun 09, 2018

Abstract:With the world moving towards being increasingly dependent on computers and automation, one of the main challenges in the current decade has been to build secure applications, systems and networks. Alongside these challenges, the number of threats is rising exponentially due to the attack surface increasing through numerous interfaces offered for each service. To alleviate the impact of these threats, researchers have proposed numerous solutions; however, current tools often fail to adapt to ever-changing architectures, associated threats and 0-days. This manuscript aims to provide researchers with a taxonomy and survey of current dataset composition and current Intrusion Detection Systems (IDS) capabilities and assets. These taxonomies and surveys aim to improve both the efficiency of IDS and the creation of datasets to build the next generation IDS as well as to reflect network threats more accurately in future datasets. To this end, this manuscript also provides a taxonomy and survey of network threats and associated tools. The manuscript highlights that current IDS only cover 25% of our threat taxonomy, while current datasets demonstrate a clear lack of real-network threats and attack representation, but rather include a large number of deprecated threats, hence limiting the accuracy of current machine learning IDS. Moreover, the taxonomies are open-sourced to allow public contributions through a GitHub repository.
