Hajar Falahati

ORIGAMI: A Heterogeneous Split Architecture for In-Memory Acceleration of Learning

Jan 09, 2019

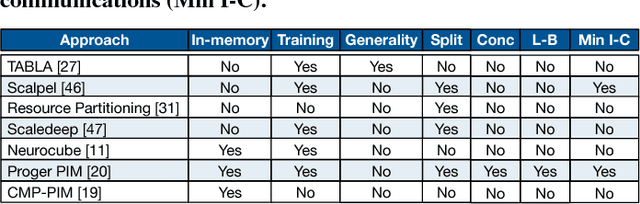

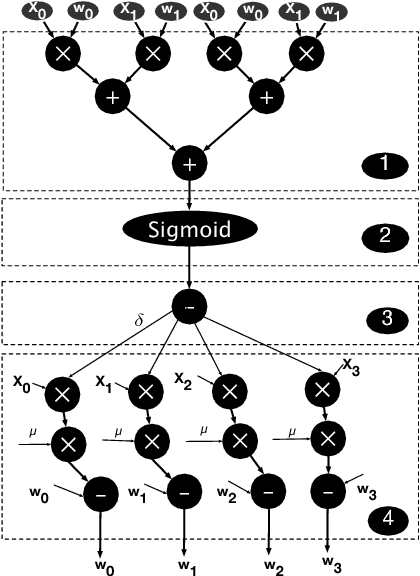

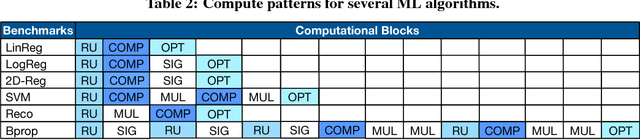

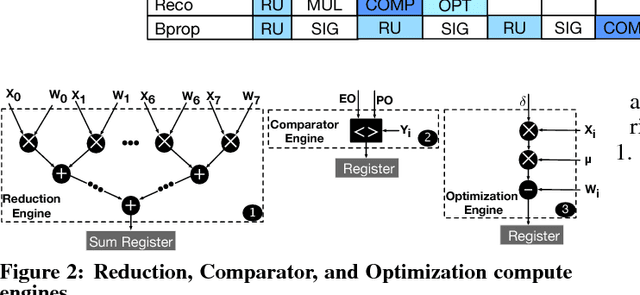

Abstract:Memory bandwidth bottleneck is a major challenges in processing machine learning (ML) algorithms. In-memory acceleration has potential to address this problem; however, it needs to address two challenges. First, in-memory accelerator should be general enough to support a large set of different ML algorithms. Second, it should be efficient enough to utilize bandwidth while meeting limited power and area budgets of logic layer of a 3D-stacked memory. We observe that previous work fails to simultaneously address both challenges. We propose ORIGAMI, a heterogeneous set of in-memory accelerators, to support compute demands of different ML algorithms, and also uses an off-the-shelf compute platform (e.g.,FPGA,GPU,TPU,etc.) to utilize bandwidth without violating strict area and power budgets. ORIGAMI offers a pattern-matching technique to identify similar computation patterns of ML algorithms and extracts a compute engine for each pattern. These compute engines constitute heterogeneous accelerators integrated on logic layer of a 3D-stacked memory. Combination of these compute engines can execute any type of ML algorithms. To utilize available bandwidth without violating area and power budgets of logic layer, ORIGAMI comes with a computation-splitting compiler that divides an ML algorithm between in-memory accelerators and an out-of-the-memory platform in a balanced way and with minimum inter-communications. Combination of pattern matching and split execution offers a new design point for acceleration of ML algorithms. Evaluation results across 12 popular ML algorithms show that ORIGAMI outperforms state-of-the-art accelerator with 3D-stacked memory in terms of performance and energy-delay product (EDP) by 1.5x and 29x (up to 1.6x and 31x), respectively. Furthermore, results are within a 1% margin of an ideal system that has unlimited compute resources on logic layer of a 3D-stacked memory.

GANAX: A Unified MIMD-SIMD Acceleration for Generative Adversarial Networks

May 10, 2018

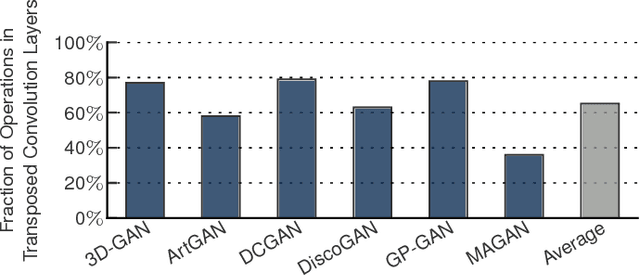

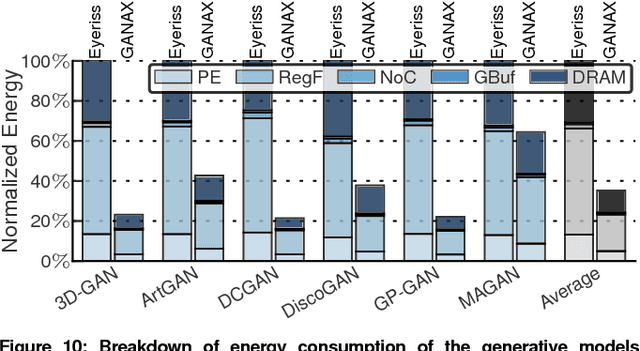

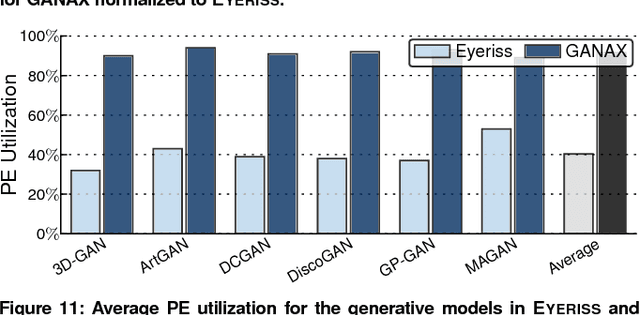

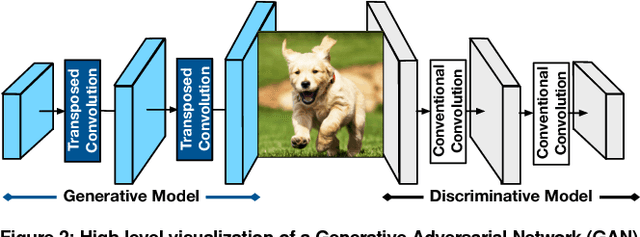

Abstract:Generative Adversarial Networks (GANs) are one of the most recent deep learning models that generate synthetic data from limited genuine datasets. GANs are on the frontier as further extension of deep learning into many domains (e.g., medicine, robotics, content synthesis) requires massive sets of labeled data that is generally either unavailable or prohibitively costly to collect. Although GANs are gaining prominence in various fields, there are no accelerators for these new models. In fact, GANs leverage a new operator, called transposed convolution, that exposes unique challenges for hardware acceleration. This operator first inserts zeros within the multidimensional input, then convolves a kernel over this expanded array to add information to the embedded zeros. Even though there is a convolution stage in this operator, the inserted zeros lead to underutilization of the compute resources when a conventional convolution accelerator is employed. We propose the GANAX architecture to alleviate the sources of inefficiency associated with the acceleration of GANs using conventional convolution accelerators, making the first GAN accelerator design possible. We propose a reorganization of the output computations to allocate compute rows with similar patterns of zeros to adjacent processing engines, which also avoids inconsequential multiply-adds on the zeros. This compulsory adjacency reclaims data reuse across these neighboring processing engines, which had otherwise diminished due to the inserted zeros. The reordering breaks the full SIMD execution model, which is prominent in convolution accelerators. Therefore, we propose a unified MIMD-SIMD design for GANAX that leverages repeated patterns in the computation to create distinct microprograms that execute concurrently in SIMD mode.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge