Haider Raza

Segmentation of Mental Foramen in Orthopantomographs: A Deep Learning Approach

Aug 08, 2024

Abstract:Precise identification and detection of the Mental Foramen are crucial in dentistry, impacting procedures such as impacted tooth removal, cyst surgeries, and implants. Accurately identifying this anatomical feature helps reduce post-surgery issues and improves patient outcomes. Moreover, this study aims to accelerate dental procedures, elevating patient care and healthcare efficiency in dentistry. This research used Deep Learning methods to accurately detect and segment the Mental Foramen from panoramic radiograph images. Two mask types, circular and square, were used during model training. Multiple segmentation models were employed to identify and segment the Mental Foramen, and their effectiveness was evaluated using diverse metrics. An in-house dataset comprising 1000 panoramic radiographs was created for this study. Our experiments demonstrated that the Classical UNet model performed exceptionally well on the test data, achieving a Dice Coefficient of 0.79 and an Intersection over Union (IoU) of 0.67. Moreover, ResUNet++ and UNet Attention models showed competitive performance, with Dice scores of 0.675 and 0.676, and IoU values of 0.683 and 0.671, respectively. We also investigated transfer learning models with varied backbone architectures, finding LinkNet to produce the best outcomes. In conclusion, our research highlights the efficacy of the classical UNet model in accurately identifying and outlining the Mental Foramen in panoramic radiographs. While vital, this task is comparatively simpler than segmenting complex medical datasets such as brain tumours or skin cancer, given their diverse sizes and shapes. This research also holds value in optimizing dental practice, benefiting practitioners and patients.
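The abstract reports Dice and IoU scores and mentions circular and square training masks. The sketch below shows how these two metrics are commonly computed on binary masks. The helper names (`square_mask`, `circular_mask`, `dice_and_iou`), the idea of building masks around a single centre point, and the sizes used are illustrative assumptions, not the authors' pipeline.

```python
import numpy as np


def square_mask(shape, center, half_side):
    """Boolean mask of a square centred on `center` (hypothetical helper)."""
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    return (np.abs(rows - center[0]) <= half_side) & (
        np.abs(cols - center[1]) <= half_side
    )


def circular_mask(shape, center, radius):
    """Boolean mask of a disc centred on `center` (hypothetical helper)."""
    rows, cols = np.ogrid[:shape[0], :shape[1]]
    return (rows - center[0]) ** 2 + (cols - center[1]) ** 2 <= radius ** 2


def dice_and_iou(pred, target, eps=1e-7):
    """Dice coefficient and IoU for two binary masks of the same shape."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    # eps keeps both ratios defined when the two masks are empty.
    dice = (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)
    iou = (intersection + eps) / (union + eps)
    return float(dice), float(iou)


# Assumed example: compare a disc against a square around the same point.
square = square_mask((64, 64), center=(32, 32), half_side=5)
circle = circular_mask((64, 64), center=(32, 32), radius=5)
dice, iou = dice_and_iou(circle, square)
print(f"Dice={dice:.3f}, IoU={iou:.3f}")
```

For a single pair of masks the two scores are tied by IoU = Dice / (2 - Dice), so Dice is never lower than IoU; scores averaged over a test set need not follow that identity exactly.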

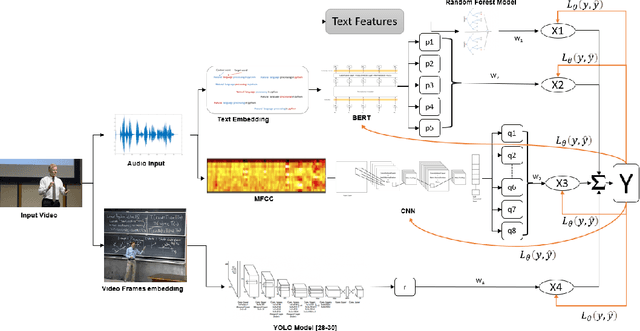

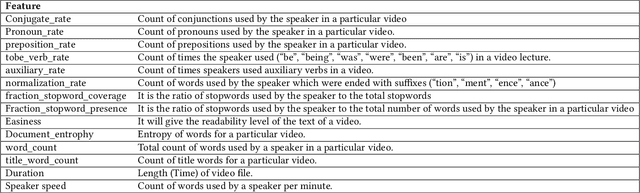

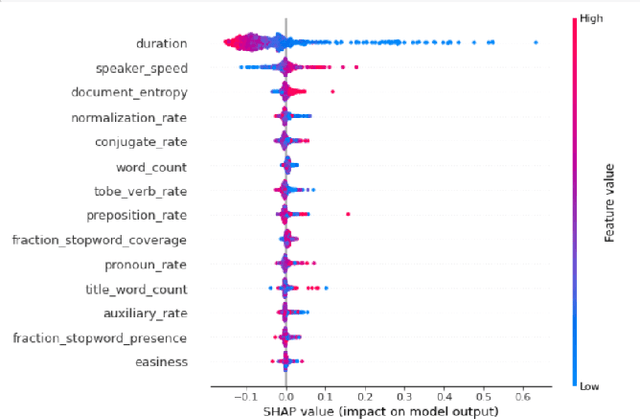

CLUE: Contextualised Unified Explainable Learning of User Engagement in Video Lectures

Jan 14, 2022

Abstract:Predicting contextualised engagement in videos is a long-standing problem that has been popularly attempted by exploiting the number of views or the associated likes using different computational methods. The recent decade has seen a boom in online learning resources, and during the pandemic, there has been an exponential rise of online teaching videos without much quality control. The quality of the content could be improved if the creators could get constructive feedback on their content. Employing an army of domain expert volunteers to provide feedback on the videos might not scale. As a result, there has been a steep rise in developing computational methods to predict a user engagement score that is indicative of some form of possible user engagement, i.e., to what level a user would tend to engage with the content. A drawback of current methods is that they model various features separately, in a cascaded approach that is prone to error propagation. Besides, most of them do not provide crucial explanations of how the creator could improve their content. In this paper, we propose a new unified model, CLUE, for the educational domain, which learns from the features extracted from freely available public online teaching videos and provides explainable feedback on the video along with a user engagement score. Given the complexity of the task, our unified framework employs different pre-trained models working together as an ensemble of classifiers. Our model exploits various multi-modal features to model the complexity of language, context-agnostic information, textual emotion of the delivered content, animation, speaker's pitch and speech emotions. Under a transfer learning setup, the overall model, in the unified space, is fine-tuned for downstream applications.

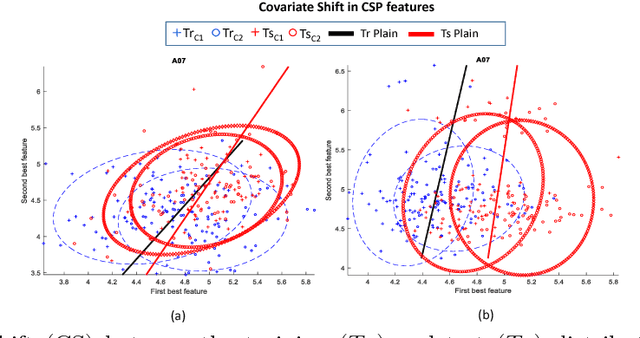

Covariate Shift Estimation based Adaptive Ensemble Learning for Handling Non-Stationarity in Motor Imagery related EEG-based Brain-Computer Interface

May 02, 2018

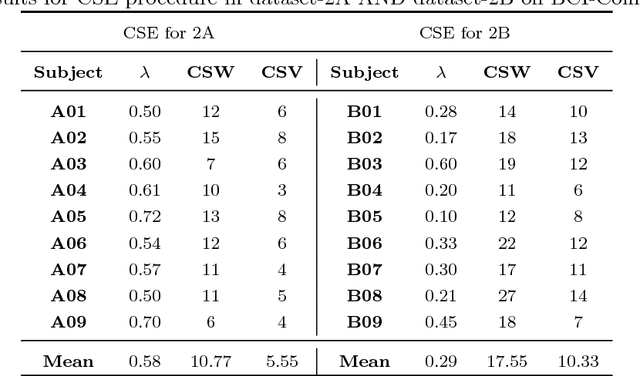

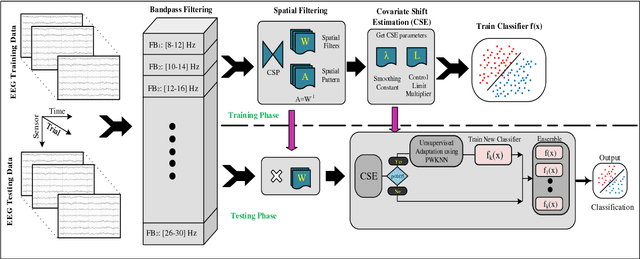

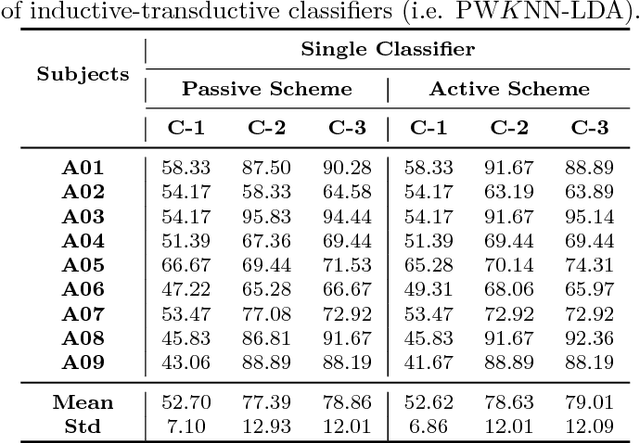

Abstract:The non-stationary nature of electroencephalography (EEG) signals makes an EEG-based brain-computer interface (BCI) a dynamic system, so improving its performance is a challenging task. In addition, it is well known that, due to non-stationarity-based covariate shifts, the input data distributions of EEG-based BCI systems change during inter- and intra-session transitions, which poses great difficulty for the development of online adaptive data-driven systems. Ensemble learning approaches have been used previously to tackle this challenge. However, a passive scheme based implementation leads to poor efficiency and high computational cost. This paper presents a novel integration of covariate shift estimation and unsupervised adaptive ensemble learning (CSE-UAEL) to tackle non-stationarity in motor-imagery (MI) related EEG classification. The proposed method first employs an exponentially weighted moving average model to detect the covariate shifts in the common spatial pattern features extracted from MI related brain responses. Then, a classifier ensemble is created and updated over time to account for changes in the streaming input data distribution, wherein new classifiers are added to the ensemble in accordance with estimated shifts. Furthermore, using two publicly available BCI-related EEG datasets, the proposed method was extensively compared with the state-of-the-art single-classifier based passive scheme, single-classifier based active scheme and ensemble based passive schemes. The experimental results show that the proposed active scheme based ensemble learning algorithm significantly enhances the BCI performance in MI classifications.

* 28 Pages, 3 figures, Neurocomputing
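The covariate shift abstract above describes detecting shifts with an exponentially weighted moving average (EWMA) model. As a rough illustration only, the sketch below applies a textbook EWMA control chart to a one-dimensional feature stream. The function name `ewma_shift_points`, the parameters `lam`, `L` and `n_calib`, and the synthetic data are assumptions for this example; the CSE-UAEL method itself may differ in how it models the features and sets its thresholds.

```python
import numpy as np


def ewma_shift_points(x, lam=0.2, L=3.0, n_calib=50):
    """Return indices where an EWMA control chart flags a shift.

    The first `n_calib` samples are treated as the reference (calibration)
    distribution; the remaining samples are monitored as a stream.
    """
    x = np.asarray(x, dtype=float)
    mu = x[:n_calib].mean()
    sigma = x[:n_calib].std(ddof=1)
    z = mu  # the EWMA statistic starts at the calibration mean
    flagged = []
    for t, value in enumerate(x[n_calib:], start=1):
        z = lam * value + (1.0 - lam) * z
        # Time-varying control limit of the standard EWMA chart.
        width = L * sigma * np.sqrt(
            lam / (2.0 - lam) * (1.0 - (1.0 - lam) ** (2 * t))
        )
        if abs(z - mu) > width:
            flagged.append(n_calib + t - 1)
    return flagged


# Synthetic stream (assumed data): stable values, then a jump in the mean.
calibration = np.tile([-1.0, 1.0], 25)   # 50 reference samples
stable = np.tile([-1.0, 1.0], 10)        # 20 samples, same distribution
shifted = np.full(30, 5.0)               # 30 samples after a mean shift
stream = np.concatenate([calibration, stable, shifted])
shift_indices = ewma_shift_points(stream)
print(shift_indices[:3])
```

In an active adaptation scheme of the kind the abstract outlines, each flagged index would be a natural trigger for adding a new classifier to the ensemble, instead of updating at every step as a passive scheme does.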
