Haichong Zhang

Position: Human-Centric AI Requires a Minimum Viable Level of Human Understanding

Jan 31, 2026

Abstract: AI systems increasingly produce fluent, correct, end-to-end outcomes. Over time, this erodes users' ability to explain, verify, or intervene. We define this divergence as the Capability-Comprehension Gap: a decoupling where assisted performance improves while users' internal models deteriorate. This paper argues that prevailing approaches to transparency, user control, literacy, and governance do not define the foundational understanding humans must retain for oversight under sustained AI delegation. To formalize this, we define the Cognitive Integrity Threshold (CIT) as the minimum comprehension required to preserve oversight, autonomy, and accountable participation under AI assistance. CIT does not require full reasoning reconstruction, nor does it constrain automation. It identifies the threshold beyond which oversight becomes procedural and contestability fails. We operationalize CIT through three functional dimensions: (i) verification capacity, (ii) comprehension-preserving interaction, and (iii) institutional scaffolds for governance. This motivates a design and governance agenda that aligns human-AI interaction with cognitive sustainability in responsibility-critical settings.

Deep Loss Convexification for Learning Iterative Models

Nov 16, 2024

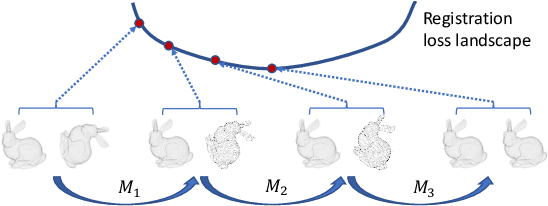

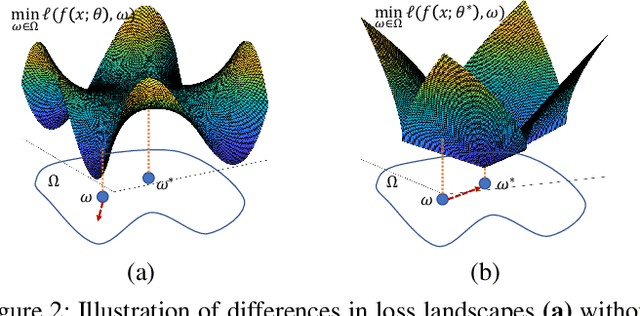

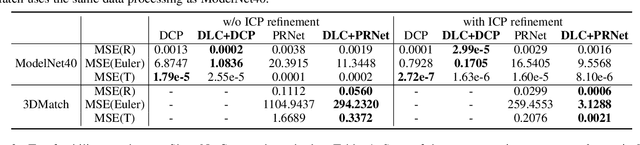

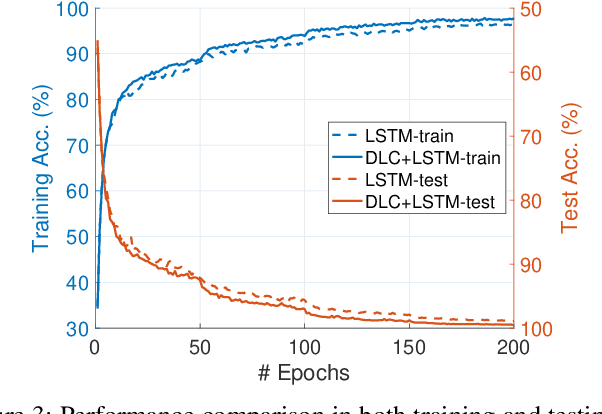

Abstract: Iterative methods such as iterative closest point (ICP) for point cloud registration often get stuck at poor local optima (e.g., saddle points) because the underlying optimization is nonconvex. To address this fundamental challenge, in this paper we propose Deep Loss Convexification (DLC): learning to shape the loss landscape of a deep iterative method, with respect to its predictions at test time, so that it is locally convex-like around each ground truth given the data. This is made possible by the overparametrization of neural networks. To this end, we formulate our learning objective based on adversarial training that manipulates the ground-truth predictions rather than the input data. In particular, we propose using star-convexity, a family of structured nonconvex functions that are unimodal on all lines that pass through a global minimizer, as our geometric constraint for reshaping loss landscapes, leading to (1) extra novel hinge losses appended to the original loss and (2) near-optimal predictions. We demonstrate state-of-the-art performance using DLC with existing network architectures on the tasks of training recurrent neural networks (RNNs), 3D point cloud registration, and multimodal image alignment.
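To make the star-convexity constraint mentioned in the abstract concrete, here is a minimal sketch of how a violation of the standard star-convexity inequality, f(λx* + (1-λ)x) ≤ λf(x*) + (1-λ)f(x) for λ in [0, 1], could be turned into a hinge penalty. This is an illustration only, not the paper's actual loss: the function name `star_convexity_hinge`, the scalar (1-D) setting, and the two toy functions are all assumptions introduced here.

```python
import math


def star_convexity_hinge(f, x, x_star, lam):
    """Hinge penalty for violating star-convexity of f at x (hypothetical helper).

    f is star-convex w.r.t. a global minimizer x_star if, for all x and
    lam in [0, 1]:
        f(lam * x_star + (1 - lam) * x) <= lam * f(x_star) + (1 - lam) * f(x)
    The penalty is zero when the inequality holds and positive otherwise.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    point_on_segment = lam * x_star + (1.0 - lam) * x
    upper_bound = lam * f(x_star) + (1.0 - lam) * f(x)
    return max(0.0, f(point_on_segment) - upper_bound)


def quadratic(x):
    # Convex, hence star-convex around its minimizer at 0.
    return x * x


def bumpy(x):
    # Toy nonconvex function with minimizer at 0; the sine term adds bumps
    # that break the inequality along some segments through 0.
    return x * x + 10.0 * math.sin(x) ** 2


# No penalty for the quadratic; a positive penalty for the bumpy function.
penalty_quadratic = star_convexity_hinge(quadratic, x=3.0, x_star=0.0, lam=0.5)
penalty_bumpy = star_convexity_hinge(bumpy, x=math.pi, x_star=0.0, lam=0.5)
```

In a training setting, a penalty of this kind would presumably be evaluated on a learned loss at sampled points and added to the original objective; how the points and λ are sampled in DLC is not stated in the abstract.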
