Guy Satat

Recent Advances in Imaging Around Corners

Oct 12, 2019

Abstract: Seeing around corners, also known as non-line-of-sight (NLOS) imaging, is a computational method for resolving or recovering objects hidden around corners. Recent advances in imaging around corners have attracted significant interest. This paper reviews the existing types of NLOS imaging techniques and discusses the challenges that must be addressed, especially for applications outside a constrained laboratory environment. Our goal is to introduce this topic to broader research communities and to provide insights that would lead to further developments in this research area.

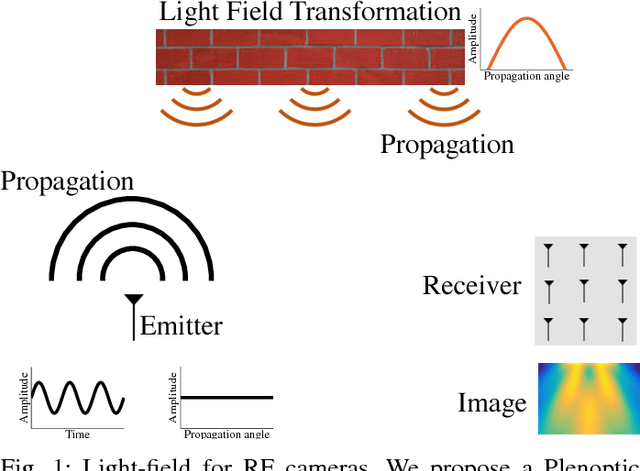

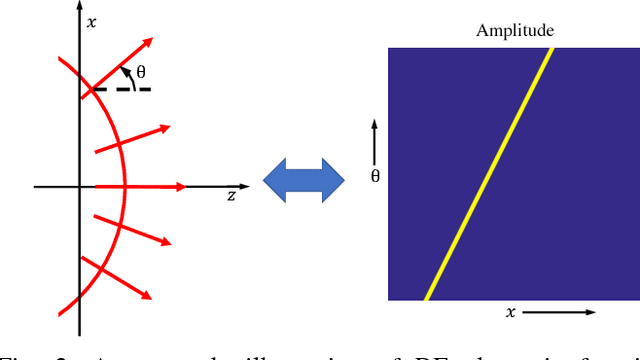

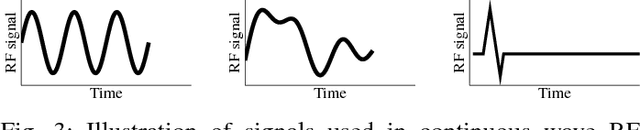

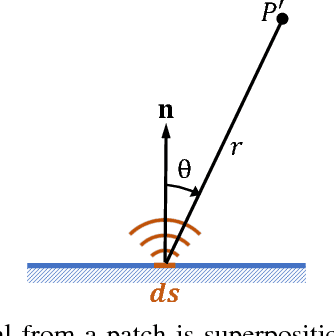

Light-Field for RF

Jan 13, 2019

Abstract: Most computer vision and computational photography systems are based on visible light, which occupies only a small fraction of the electromagnetic (EM) spectrum. In recent years, radio frequency (RF) hardware has become widely available: many cars are equipped with RADAR, and almost every home has a WiFi device. For imaging, the RF spectrum holds many advantages over visible light. In particular, in this regime EM energy interacts with matter differently than it does at visible wavelengths. This property enables novel applications such as privacy-preserving computer vision and imaging through materials that absorb or scatter visible light, such as walls. Here, we extend many concepts from visible-light computational photography to RF cameras. The main limitation of imaging with RF is its large wavelength, which limits the imaging resolution compared with visible light. However, the output of RF cameras is usually processed by computer vision and perception algorithms, which would benefit from multi-modal sensing of the environment and from sensing in situations where visible light systems fail. To bridge the gap between computational photography and RF imaging, we extend the concept of the light field to RF. This work paves the way for novel computational sensing systems with RF.
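The resolution penalty of long wavelengths follows from diffraction: for a circular aperture of diameter D, the Rayleigh criterion gives a smallest resolvable angle of about 1.22 λ/D. The sketch below compares visible light with two common RF bands. It is only an illustration under stated assumptions: the 0.5 m aperture and 5 m range are hypothetical values shared by all three bands, and it ignores synthetic-aperture and other resolution-enhancing techniques.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength for a given frequency."""
    return C / freq_hz


def rayleigh_angle_rad(wavelength: float, aperture: float) -> float:
    """Diffraction-limited angular resolution of a circular aperture (Rayleigh criterion)."""
    return 1.22 * wavelength / aperture


def lateral_resolution_m(wavelength: float, aperture: float, distance: float) -> float:
    """Smallest resolvable separation at a given range (small-angle approximation)."""
    return rayleigh_angle_rad(wavelength, aperture) * distance


APERTURE = 0.5  # hypothetical aperture diameter in m, identical for every band
RANGE = 5.0     # hypothetical distance to the scene in m

bands = {
    "visible (550 nm)": 550e-9,
    "WiFi (2.4 GHz)": wavelength_m(2.4e9),
    "automotive radar (77 GHz)": wavelength_m(77e9),
}

for name, lam in bands.items():
    res = lateral_resolution_m(lam, APERTURE, RANGE)
    print(f"{name:26s} wavelength = {lam:.3e} m, resolution ~ {res:.3e} m")
```

With aperture and range fixed, resolution scales linearly with wavelength, so the 2.4 GHz case comes out roughly 2 × 10^5 times coarser than visible light (on the order of a meter versus micrometers at 5 m). At about 0.3 rad, the small-angle approximation is only rough for the WiFi case.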

Flash Photography for Data-Driven Hidden Scene Recovery

Oct 27, 2018

Abstract: Vehicles, search and rescue personnel, and endoscopes use flashlights to locate, identify, and view objects in their surroundings. Here we show the first steps toward performing all of these tasks around corners with consumer cameras. Recent NLOS imaging techniques that use consumer cameras have not been able to both localize and identify the hidden object. We introduce a method that combines traditional geometric understanding with data-driven techniques. To avoid the burden of gathering a large dataset, we train the data-driven models on rendered samples and use them to computationally recover the hidden scene from real data. The method has three independent operating modes: 1) a regression output that localizes a hidden object in 2D, 2) an identification output that identifies the object type or pose, and 3) a generative network that reconstructs the hidden scene from a new viewpoint. The method localizes 12 cm wide hidden objects in 2D with 1.7 cm accuracy. It also identifies the hidden object class with 87.7% accuracy (compared with 33.3% for random guessing). This paper also analyzes how the information that encodes the occluded object is distributed across the accessible scene. We show that, contrary to previous assumptions, the area that extends beyond the corner is essential for accurate object localization and identification.

Lensless Imaging with Compressive Ultrafast Sensing

Mar 30, 2017

Abstract: Lensless imaging is an important and challenging problem. One notable solution is the single pixel camera, which builds on ideas central to compressive sampling. However, traditional single pixel cameras require many illumination patterns, which results in a long acquisition process. Here we present a method for lensless imaging based on compressive ultrafast sensing. Each sensor acquisition is encoded with a different illumination pattern and produces a time series in which arrival time is a function of the photon's origin in the scene. Currently available hardware with picosecond time resolution can time-tag photons as they arrive at an omnidirectional sensor. This allows lensless imaging with significantly fewer patterns than regular single pixel imaging. To that end, we develop a framework for designing lensless imaging systems that use ultrafast detectors. We provide an algorithm for ideal sensor placement and an algorithm for optimized active illumination patterns. We show that efficient lensless imaging is possible with ultrafast measurement and compressive sensing. This paves the way for novel imaging architectures and for remote sensing in extreme situations where imaging with a lens is not possible.
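Why time tagging reduces the number of patterns can be seen in a toy simulation. This is a hypothetical sketch, not the framework or algorithms from the paper: it assumes a flat 16 × 16 scene, a single omnidirectional sensor at an arbitrary position, 20 ps timing bins, random non-negative illumination patterns, no noise, no 1/r² falloff, and an illumination path length that is the same for every pixel. The helper names `pixel_positions`, `time_bins`, and `forward_matrix` are invented for illustration. Each time bin of each pattern yields one equation involving only the pixels whose light arrives in that bin, so the pattern count is set by the most crowded bin rather than by the total number of pixels. For simplicity it reconstructs with plain least squares instead of the sparsity-based compressive reconstruction the abstract refers to.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum, m/s


def pixel_positions(n_side: int, size_m: float = 1.0) -> np.ndarray:
    """Centers of an n_side x n_side pixel grid on the plane z = 0 (hypothetical scene)."""
    coords = (np.arange(n_side) + 0.5) * size_m / n_side
    xx, yy = np.meshgrid(coords, coords, indexing="ij")
    return np.stack([xx.ravel(), yy.ravel(), np.zeros(n_side * n_side)], axis=1)


def time_bins(pixels: np.ndarray, sensor: np.ndarray, bin_s: float) -> np.ndarray:
    """Arrival-time bin of each pixel's light at the sensor.

    Assumes the illumination path length is equal for all pixels, so only the
    pixel-to-sensor distance changes the arrival time.
    """
    dist = np.linalg.norm(pixels - sensor, axis=1)
    bins = np.floor(dist / (C * bin_s)).astype(int)
    return bins - bins.min()  # re-index so the earliest bin is 0


def forward_matrix(patterns: np.ndarray, bins: np.ndarray) -> np.ndarray:
    """Rows are y[k, b] = sum of patterns[k, i] * x[i] over pixels i in time bin b."""
    n_patterns, n_pixels = patterns.shape
    n_bins = bins.max() + 1
    A = np.zeros((n_patterns, n_bins, n_pixels))
    A[:, bins, np.arange(n_pixels)] = patterns  # pixel i only feeds its own bin
    return A.reshape(n_patterns * n_bins, n_pixels)


def relative_error(estimate: np.ndarray, truth: np.ndarray) -> float:
    return float(np.linalg.norm(estimate - truth) / np.linalg.norm(truth))


rng = np.random.default_rng(0)
pixels = pixel_positions(16)                    # 256 unknown pixel values
sensor = np.array([-0.3, 0.2, 0.5])             # hypothetical sensor position (m)
bins = time_bins(pixels, sensor, bin_s=20e-12)  # assumed 20 ps timing resolution
n_pixels = pixels.shape[0]

# Patterns needed ~ size of the most crowded time bin, plus a small margin.
largest_group = int(np.bincount(bins).max())
n_patterns = largest_group + 2

patterns = rng.random((n_patterns, n_pixels))   # non-negative random illumination
scene = rng.random(n_pixels)                    # unknown reflectance (noise-free toy)

# Time-resolved sensor: one sample per (pattern, time bin).
A_time = forward_matrix(patterns, bins)
x_time, *_ = np.linalg.lstsq(A_time, A_time @ scene, rcond=None)

# Conventional single pixel camera: one time-integrated sample per pattern.
x_conv, *_ = np.linalg.lstsq(patterns, patterns @ scene, rcond=None)

err_time = relative_error(x_time, scene)
err_conv = relative_error(x_conv, scene)
print(f"pixels={n_pixels}, patterns={n_patterns}")
print(f"relative error, time-resolved:   {err_time:.2e}")
print(f"relative error, time-integrated: {err_conv:.2e}")
```

In this toy model, moving `sensor` changes how pixels fall into time bins and therefore how many patterns are needed, which gives some intuition for why sensor placement matters; it is not the placement algorithm described in the paper.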
