Manikanta Kotaru

Adapting Foundation Models for Information Synthesis of Wireless Communication Specifications

Aug 09, 2023

Abstract:Existing approaches to understanding, developing and researching modern wireless communication technologies involve a time-intensive and arduous process of sifting through numerous webpages and technical specification documents, gathering the required information and synthesizing it. This paper presents NextGen Communications Copilot, a conversational artificial intelligence tool for information synthesis of wireless communication specifications. The system builds on recent advancements in foundation models and consists of three key additional components: a domain-specific database, a context extractor, and a feedback mechanism. The system appends concise, query-dependent contextual information extracted from a database of wireless technical specifications to user queries, and incorporates tools for expert feedback and data contributions. When evaluated on a benchmark dataset of queries and reference responses created by subject matter experts, the system produced more relevant and accurate answers, with an average BLEU score and BERTScore F1-measure of 0.37 and 0.79 respectively, compared to the corresponding values of 0.07 and 0.59 achieved by state-of-the-art tools like ChatGPT.

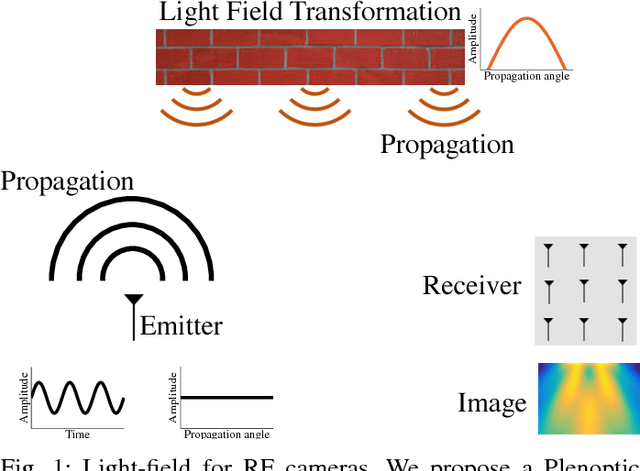

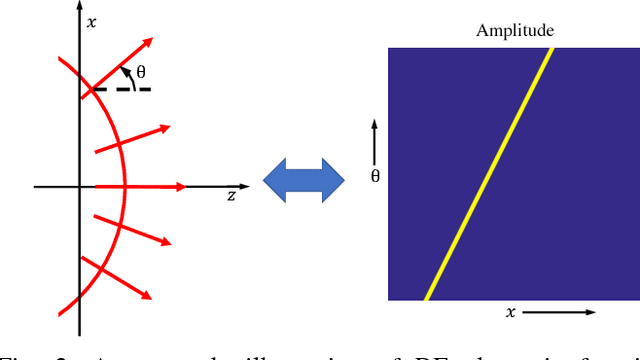

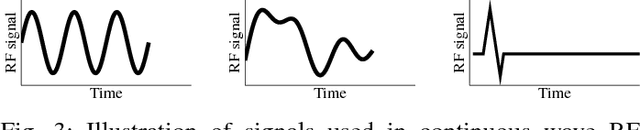

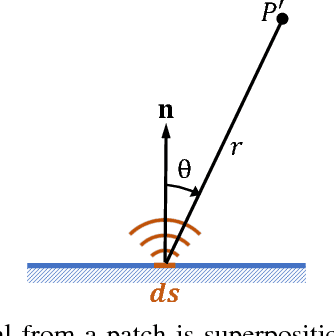

Light-Field for RF

Jan 13, 2019

Abstract:Most computer vision and computational photography systems are based on visible light, which is a small fraction of the electromagnetic (EM) spectrum. In recent years, radio frequency (RF) hardware has become more widely available; for example, many cars are equipped with a RADAR, and almost every home has a WiFi device. In the context of imaging, the RF spectrum holds many advantages compared to visible light systems. In particular, in this regime, EM energy interacts with matter in different ways. This property allows for many novel applications such as privacy-preserving computer vision and imaging through materials, such as walls, that absorb and scatter visible light. Here, we expand many of the concepts in computational photography in visible light to RF cameras. The main limitation of imaging with RF is the large wavelength, which limits the imaging resolution when compared to visible light. However, the output of RF cameras is usually processed by computer vision and perception algorithms, which would benefit from multi-modal sensing of the environment and from sensing in situations in which visible light systems fail. To bridge the gap between computational photography and RF imaging, we expand the concept of light-field to RF. This work paves the way to novel computational sensing systems with RF.

Position Tracking for Virtual Reality Using Commodity WiFi

Jul 12, 2017

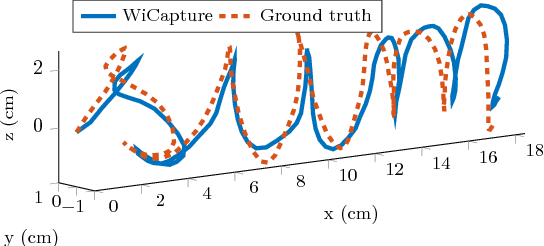

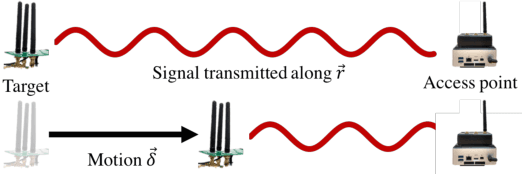

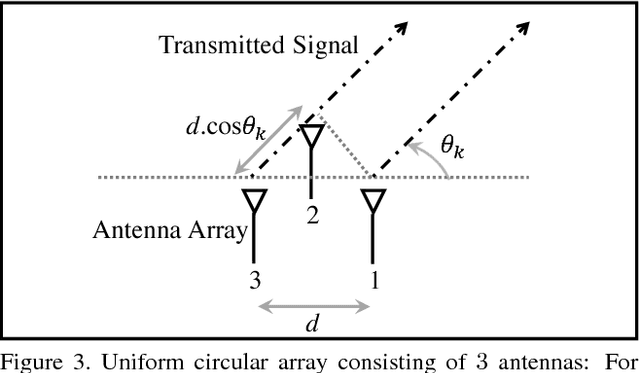

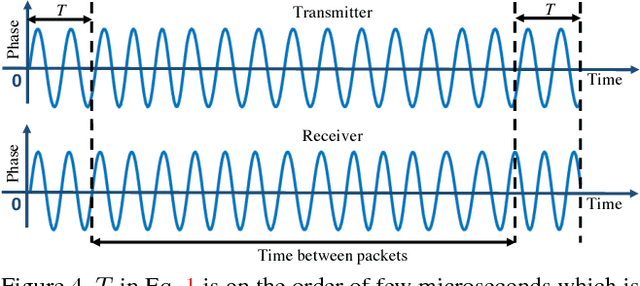

Abstract:Today, experiencing virtual reality (VR) is cumbersome: it either requires dedicated infrastructure like infrared cameras to track the headset and hand-motion controllers (e.g., Oculus Rift, HTC Vive), or provides only 3-DoF (Degrees of Freedom) tracking, which severely limits the user experience (e.g., Samsung Gear). To truly enable VR everywhere, we need position tracking to be available as a ubiquitous service. This paper presents WiCapture, a novel approach which leverages commodity WiFi infrastructure, which is ubiquitous today, for tracking purposes. We prototype WiCapture using off-the-shelf WiFi radios and show that it achieves an accuracy of 0.88 cm compared to sophisticated infrared-based tracking systems like the Oculus, while providing much higher range, resistance to occlusion, ubiquity and ease of deployment.
