Gustavo Plensack

Normalizador Neural de Datas e Endereços

Jul 09, 2020

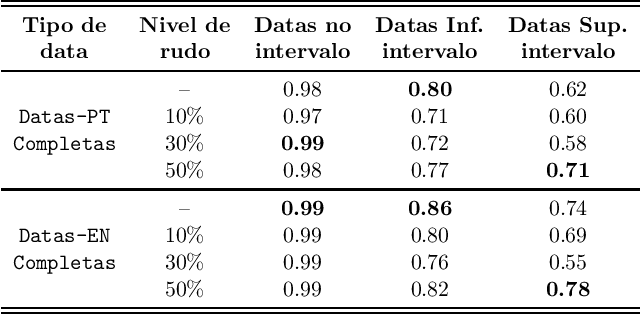

Abstract:Documents of any kind present a wide variety of date and address formats, in some cases dates can be written entirely in full or even have different types of separators. The pattern disorder in addresses is even greater due to the greater possibility of interchanging between streets, neighborhoods, cities and states. In the context of natural language processing, problems of this nature are handled by rigid tools such as ReGex or DateParser, which are efficient as long as the expected input is pre-configured. When these algorithms are given an unexpected format, errors and unwanted outputs happen. To circumvent this challenge, we present a solution with deep neural networks state of art T5 that treats non-preconfigured formats of dates and addresses with accuracy above 90% in some cases. With this model, our proposal brings generalization to the task of normalizing dates and addresses. We also deal with this problem with noisy data that simulates possible errors in the text.

Electricity Theft Detection with self-attention

Feb 14, 2020

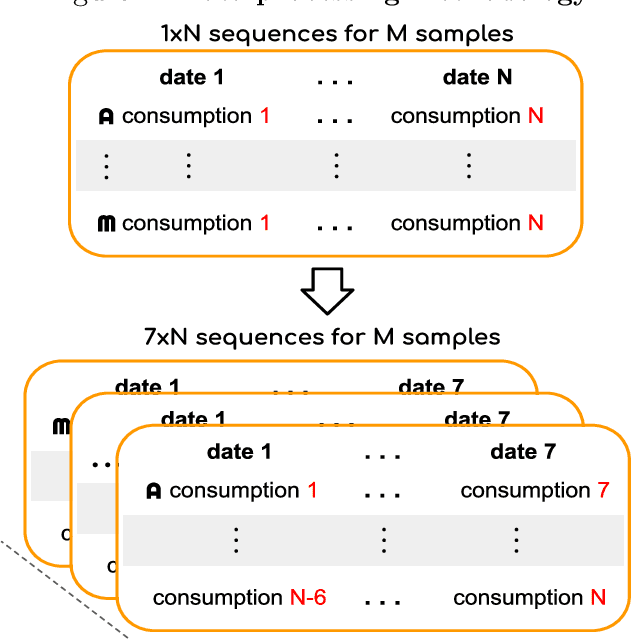

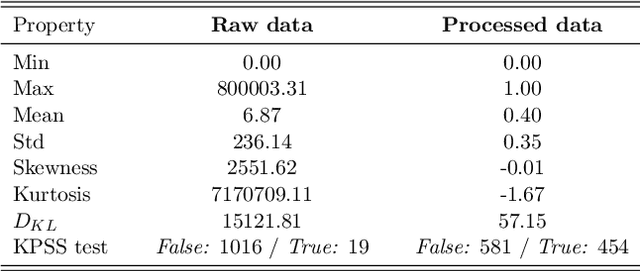

Abstract:In this work we propose a novel self-attention mechanism model to address electricity theft detection on an imbalanced realistic dataset that presents a daily electricity consumption provided by State Grid Corporation of China. Our key contribution is the introduction of a multi-head self-attention mechanism concatenated with dilated convolutions and unified by a convolution of kernel size $1$. Moreover, we introduce a binary input channel (Binary Mask) to identify the position of the missing values, allowing the network to learn how to deal with these values. Our model achieves an AUC of $0.926$ which is an improvement in more than $17\%$ with respect to previous baseline work. The code is available on GitHub at https://github.com/neuralmind-ai/electricity-theft-detection-with-self-attention.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge