Guoda Tian

Adaptive Attention-Based Model for 5G Radio-based Outdoor Localization

Mar 31, 2025

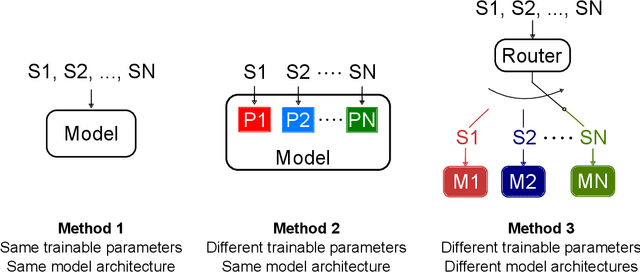

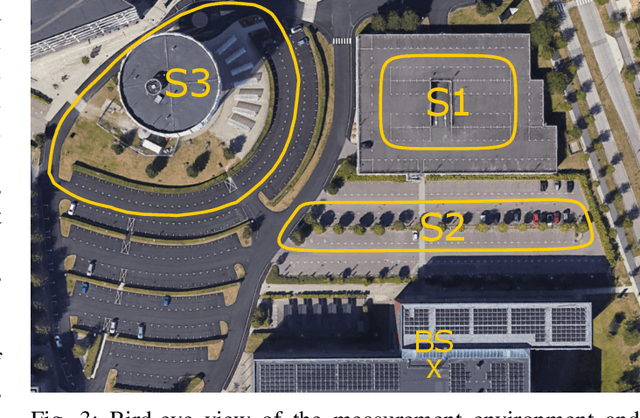

Abstract:Radio-based localization in dynamic environments, such as urban and vehicular settings, requires systems that can efficiently adapt to varying signal conditions and environmental changes. Factors such as multipath interference and obstructions introduce different levels of complexity that affect the accuracy of the localization. Although generalized models offer broad applicability, they often struggle to capture the nuances of specific environments, leading to suboptimal performance in real-world deployments. In contrast, specialized models can be tailored to particular conditions, enabling more precise localization by effectively handling domain-specific variations and noise patterns. However, deploying multiple specialized models requires an efficient mechanism to select the most appropriate one for a given scenario. In this work, we develop an adaptive localization framework that combines shallow attention-based models with a router/switching mechanism based on a single-layer perceptron (SLP). This enables seamless transitions between specialized localization models optimized for different conditions, balancing accuracy, computational efficiency, and robustness to environmental variations. We design three low-complexity localization models tailored for distinct scenarios, optimized for reduced computational complexity, test time, and model size. The router dynamically selects the most suitable model based on real-time input characteristics. The proposed framework is validated using real-world vehicle localization data collected from a massive MIMO base station (BS), demonstrating its ability to seamlessly adapt to diverse deployment conditions while maintaining high localization accuracy.
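
The routing idea in this abstract can be illustrated with a minimal sketch. It assumes the router is a single affine layer followed by a softmax whose arg-max picks one of three specialized models; the class `SLPRouter`, the function `localize`, the toy weights, and the stand-in models are all hypothetical and are not taken from the paper.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D score vector."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

class SLPRouter:
    """Hypothetical single-layer perceptron router: one affine map plus softmax."""

    def __init__(self, weights, bias):
        self.weights = np.asarray(weights, dtype=float)  # shape (n_models, n_features)
        self.bias = np.asarray(bias, dtype=float)        # shape (n_models,)

    def select(self, features):
        """Return the index of the model with the highest routing probability."""
        scores = self.weights @ np.asarray(features, dtype=float) + self.bias
        return int(np.argmax(softmax(scores)))

def localize(features, router, models):
    """Route the input to one specialized model and return (index, position)."""
    idx = router.select(features)
    return idx, models[idx](features)

# Stand-in "specialized models" (assumptions for illustration only).
models = [
    lambda x: np.array([x[0], x[1]]),
    lambda x: np.array([x[0] + 1.0, x[1] + 1.0]),
    lambda x: np.array([0.0, 0.0]),
]

# Toy router weights; a real router would be trained on labeled scenarios.
router = SLPRouter(weights=np.eye(3), bias=np.zeros(3))
idx, position = localize(np.array([0.2, 0.9, 0.1]), router, models)
```

Only the selected model is evaluated per input, which is where a scheme of this kind would save computation compared with running every specialized model.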

Attention-aided Outdoor Localization in Commercial 5G NR Systems

May 15, 2024

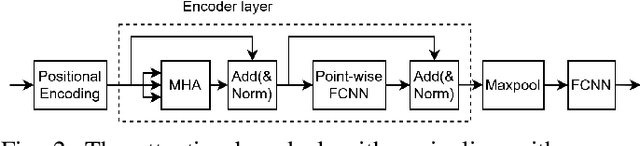

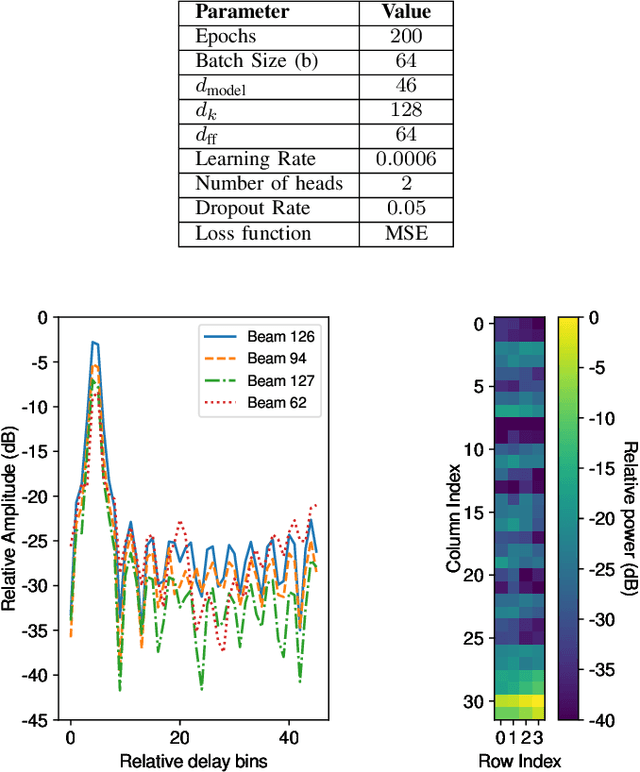

Abstract:The integration of high-precision cellular localization and machine learning (ML) is considered a cornerstone technique in future cellular navigation systems, offering unparalleled accuracy and functionality. This study focuses on localization based on uplink channel measurements in a fifth-generation (5G) new radio (NR) system. An attention-aided ML-based single-snapshot localization pipeline is presented, which consists of several cascaded blocks, namely a signal processing block, an attention-aided block, and an uncertainty estimation block. Specifically, the signal processing block generates an impulse response beam matrix for all beams. The attention-aided block trains on the channel impulse responses using an attention-aided network, which captures the correlation between impulse responses for different beams. The uncertainty estimation block predicts the probability density function of the UE position, thereby also indicating the confidence level of the localization result. Two representative uncertainty estimation techniques, the negative log-likelihood and the regression-by-classification techniques, are applied and compared. Furthermore, for dynamic measurements with multiple snapshots available, we combine the proposed pipeline with a Kalman filter to enhance localization accuracy. To evaluate our approach, we extract channel impulse responses for different beams from a commercial base station. The outdoor measurement campaign covers Line-of-Sight (LoS), Non-Line-of-Sight (NLoS), and a mix of LoS and NLoS scenarios. The results show that sub-meter localization accuracy can be achieved.
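
As a rough illustration of the negative log-likelihood technique named in this abstract, the sketch below assumes the network outputs a mean and a log-variance per coordinate and that the coordinates are modeled as independent Gaussians. That parametrization and the function name `gaussian_nll` are assumptions; the abstract does not specify them.

```python
import numpy as np

def gaussian_nll(target, mean, log_var):
    """Negative log-likelihood of `target` under independent Gaussians.

    Assumed parametrization: one predicted mean and one predicted
    log-variance per coordinate (diagonal covariance).
    """
    target, mean, log_var = (
        np.asarray(a, dtype=float) for a in (target, mean, log_var)
    )
    sq_err = (target - mean) ** 2
    per_dim = 0.5 * (np.log(2.0 * np.pi) + log_var + sq_err / np.exp(log_var))
    return float(per_dim.sum())

# Same 3 m error on the first coordinate, two different confidence levels.
confident = gaussian_nll([3.0, 0.0], [0.0, 0.0], [0.0, 0.0])
uncertain = gaussian_nll([3.0, 0.0], [0.0, 0.0], [2.0, 0.0])
```

With this loss a large error is penalized less when the predicted variance is also large, so the predicted variance can serve as a confidence indicator for the position estimate.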

Indoor Localization Using Radio, Vision and Audio Sensors: Real-Life Data Validation and Discussion

Sep 06, 2023

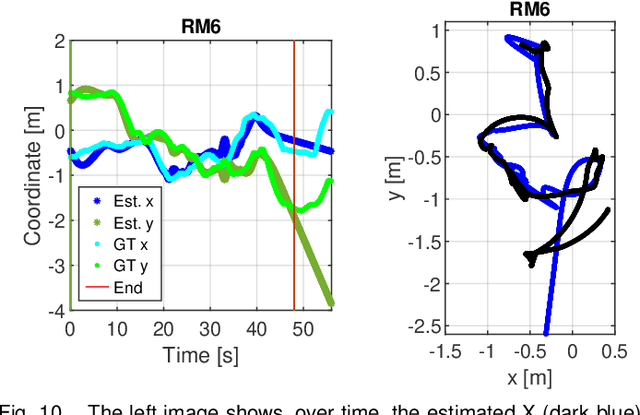

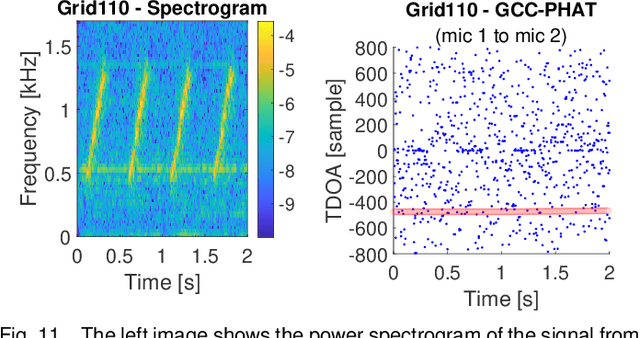

Abstract:This paper investigates indoor localization methods using radio, vision, and audio sensors, respectively, in the same environment. The evaluation is based on state-of-the-art algorithms and uses a real-life dataset. More specifically, we evaluate a machine learning algorithm for radio-based localization with massive MIMO technology, an ORB-SLAM3 algorithm for vision-based localization with an RGB-D camera, and an SFS2 algorithm for audio-based localization with microphone arrays. Aspects including localization accuracy, reliability, calibration requirements, and potential system complexity are discussed to analyze the advantages and limitations of using different sensors for indoor localization tasks. The results can serve as a guideline and basis for further development of robust and high-precision multi-sensory localization systems, e.g., through sensor fusion and context and environment-aware adaptation.

High-Precision Machine-Learning Based Indoor Localization with Massive MIMO System

Mar 07, 2023

Abstract:High-precision cellular-based localization is one of the key technologies for next-generation communication systems. In this paper, we investigate the potential of applying machine learning (ML) to a massive multiple-input multiple-output (MIMO) system to enhance localization accuracy. We analyze a new ML-based localization pipeline that has two parallel fully connected neural networks (FCNN). The first FCNN takes the instantaneous spatial covariance matrix to capture angular information, while the second FCNN takes the channel impulse responses to capture delay information. We fuse the estimated coordinates of these two FCNNs for further accuracy improvement. To test the localization algorithm, we performed an indoor measurement campaign with a massive MIMO testbed at 3.7 GHz. In the measured scenario, the proposed pipeline can achieve centimeter-level accuracy by combining delay and angular information.

The LuViRA Dataset: Measurement Description

Feb 10, 2023

Abstract:We present a dataset to evaluate localization algorithms, which utilizes vision, audio, and radio sensors: the Lund University Vision, Radio, and Audio (LuViRA) Dataset. The dataset includes RGB images, corresponding depth maps, IMU readings, channel response between a massive MIMO channel sounder and a user equipment, audio recorded by 12 microphones, and 0.5 mm accurate 6DoF pose ground truth. We synchronize these sensors to make sure that all data are recorded simultaneously. A camera, speaker, and transmit antenna are placed on top of a slowly moving service robot and 88 trajectories are recorded. Each trajectory includes 20 to 50 seconds of recorded sensor data and ground truth labels. The data from different sensors can be used separately or jointly to conduct localization tasks and a motion capture system is used to verify the results obtained by the localization algorithms. The main aim of this dataset is to enable research on fusing the most commonly used sensors for localization tasks. However, the full dataset or some parts of it can also be used for other research areas such as channel estimation, image classification, etc. Fusing sensor data can lead to increased localization accuracy and reliability, as well as decreased latency and power consumption. The created dataset will be made public at a later date.

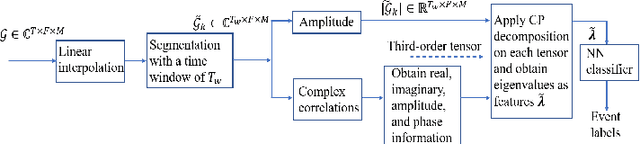

Sensing and Classification Using Massive MIMO: A Tensor Decomposition-Based Approach

Sep 02, 2021

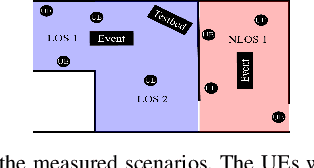

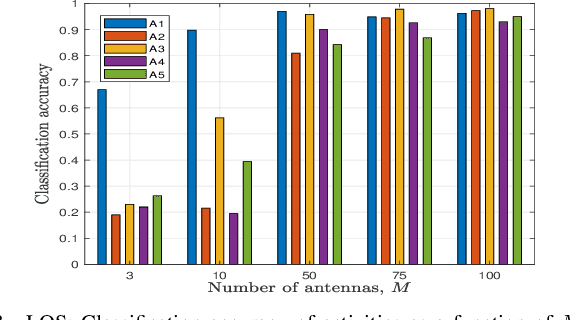

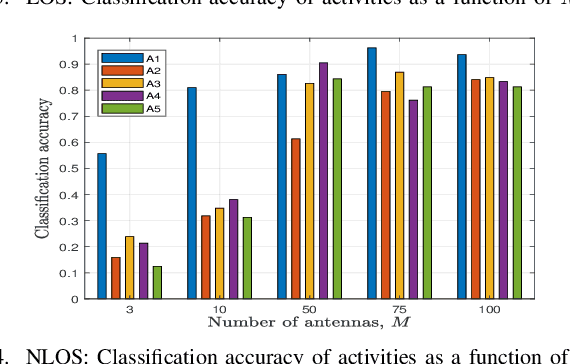

Abstract:Wireless-based activity sensing has gained significant attention due to its wide range of applications. We investigate radio-based multi-class classification of human activities using massive multiple-input multiple-output (MIMO) channel measurements in line-of-sight and non-line-of-sight scenarios. We propose a tensor decomposition-based algorithm to extract features by exploiting the complex correlation characteristics across time, frequency, and space from channel tensors formed from the measurements, followed by a neural network that learns the relationship between the input features and output target labels. Through evaluations of real measurement data, it is demonstrated that the classification accuracy using a massive MIMO array is significantly better than the state of the art, even for a smaller experimental data set.

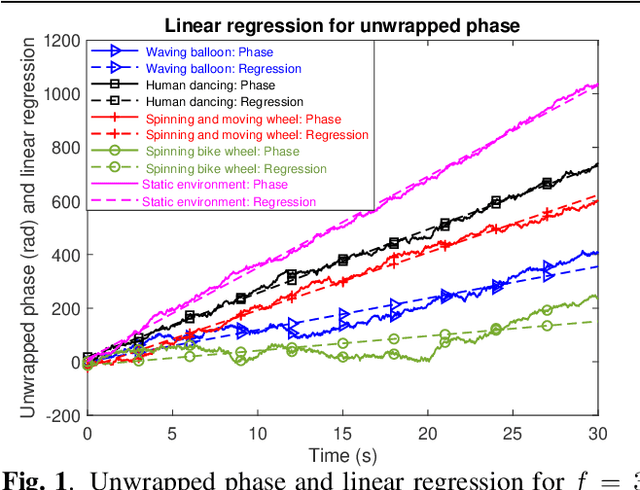

Moving Object Classification with a Sub-6 GHz Massive MIMO Array using Real Data

Feb 09, 2021

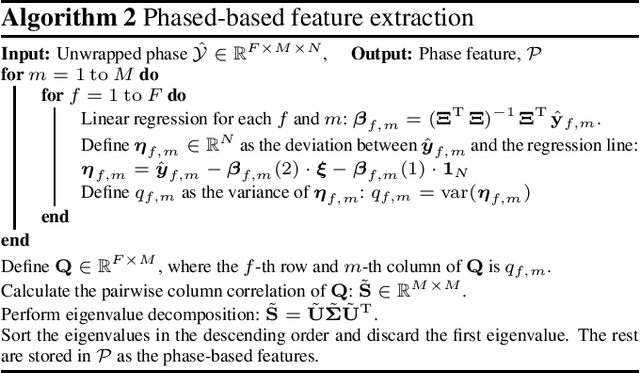

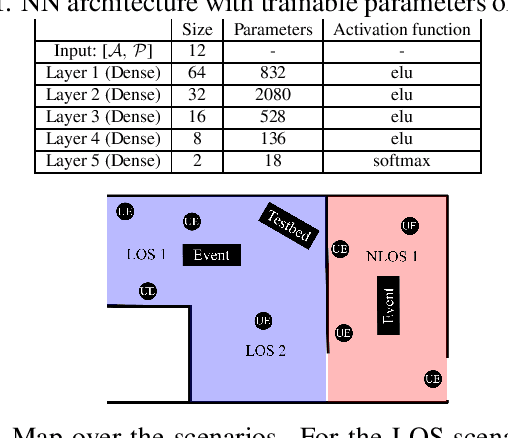

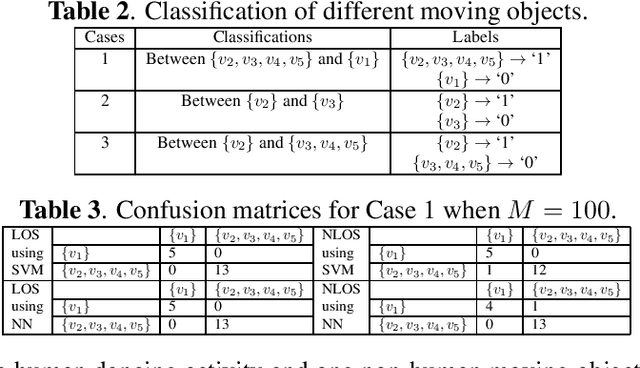

Abstract:Classification between different activities in an indoor environment using wireless signals is an emerging technology for various applications, including intrusion detection, patient care, and smart homes. Researchers have shown different methods to classify activities and their potential benefits by utilizing WiFi signals. In this paper, we analyze classification of moving objects by employing machine learning on real data from a massive multi-input-multi-output (MIMO) system in an indoor environment. We conduct measurements for different activities in both line-of-sight and non-line-of-sight scenarios with a massive MIMO testbed operating at 3.7 GHz. We propose algorithms to exploit amplitude- and phase-based features for the classification task. For the considered setup, we benchmark the classification performance and show that we can achieve up to 98% accuracy using real massive MIMO data, even with a small number of experiments. Furthermore, we demonstrate the performance gain of a massive MIMO system compared with a system that has a limited number of antennas, such as WiFi devices.
