Guoan Li

Varifocal Multiview Images: Capturing and Visual Tasks

Nov 19, 2021

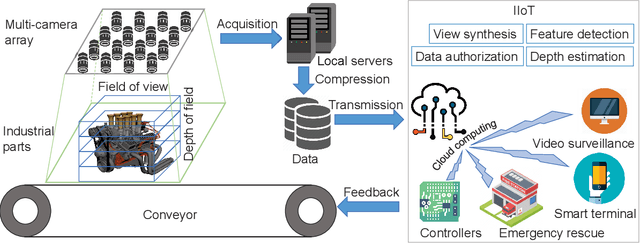

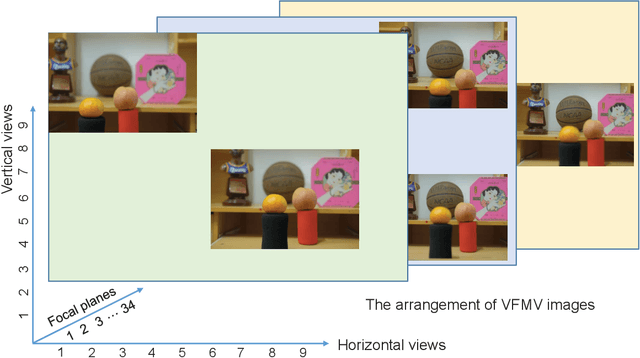

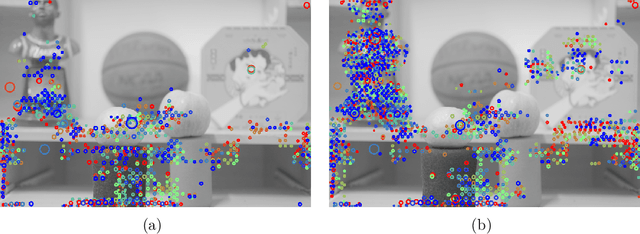

Abstract: Multiview images have a flexible field of view (FoV) but an inflexible depth of field (DoF). To overcome this limitation of multiview images in visual tasks, we present varifocal multiview (VFMV) images with a flexible DoF. VFMV images are captured by focusing a scene at distinct depths with varying focal planes, and each view is focused on a single plane. Therefore, VFMV images contain more information in the focal dimension than multiview images, and can provide a rich representation of a 3D scene by considering both FoV and DoF. These characteristics of VFMV images are useful for visual tasks that require high-quality scene representation. Two experiments are conducted to validate the advantages of VFMV images in 4D light field feature detection and 3D reconstruction. Experimental results show that VFMV images yield more detected light field features and higher reconstruction quality, owing to their informative focus cues. This work demonstrates that VFMV images have definite advantages over multiview images in visual tasks.

Transfer Learning from an Artificial Radiograph-landmark Dataset for Registration of the Anatomic Skull Model to Dual Fluoroscopic X-ray Images

Aug 14, 2021

Abstract: Registration of 3D anatomic structures to their 2D dual fluoroscopic X-ray images is a widely used motion tracking technique. However, deep learning implementation is often impeded by a paucity of medical images and ground truths. In this study, we proposed a transfer learning strategy for 3D-to-2D registration using deep neural networks trained on an artificial dataset. Digitally reconstructed radiographs (DRRs) and radiographic skull landmarks were automatically created from craniocervical CT data of a female subject. They were used to train a residual network (ResNet) for landmark detection and a cycle generative adversarial network (GAN) to eliminate the style difference between DRRs and actual X-rays. Landmarks on the X-rays after GAN style translation were detected by the ResNet and were used in triangulation optimization for 3D-to-2D registration of the skull in actual dual-fluoroscope images (with a non-orthogonal setup, point X-ray sources, image distortions, and partially captured skull regions). The registration accuracy was evaluated in multiple scenarios of craniocervical motion. In walking, learning-based registration of the skull had angular/position errors of 3.9 ± 2.1 deg / 4.6 ± 2.2 mm. However, the accuracy was lower during functional neck activity, because only very small skull regions were imaged on the dual fluoroscopic images at end-range positions. The methodology of strategically augmenting artificial training data can tackle the complicated skull registration scenario, and has the potential to extend to a wide range of registration scenarios.
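
The abstract mentions that detected landmarks feed a triangulation step across the two fluoroscopic views. As a rough illustration only, the sketch below triangulates a single landmark from two point-source (pinhole) views with the standard linear DLT method. This is a stand-in, not the paper's triangulation optimization: the function names, the simple focal-length-only intrinsics, and the 60-degree view separation are all assumptions made for the example, and image distortion is ignored.

```python
import numpy as np


def make_projection(focal, R, t):
    """Build a 3x4 pinhole projection matrix (hypothetical, distortion-free model)."""
    K = np.array([[focal, 0.0, 0.0],
                  [0.0, focal, 0.0],
                  [0.0, 0.0, 1.0]])
    return K @ np.hstack([R, np.asarray(t, dtype=float).reshape(3, 1)])


def project(P, X):
    """Project a 3D point X into image coordinates using projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_landmark(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one 3D point from its two 2D detections."""
    rows = []
    for P, (u, v) in ((P1, uv1), (P2, uv2)):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Two views separated by 60 degrees about the vertical axis (non-orthogonal setup).
angle = np.deg2rad(60.0)
R2 = np.array([[np.cos(angle), 0.0, np.sin(angle)],
               [0.0, 1.0, 0.0],
               [-np.sin(angle), 0.0, np.cos(angle)]])
P1 = make_projection(1000.0, np.eye(3), [0.0, 0.0, 1000.0])
P2 = make_projection(1000.0, R2, [0.0, 0.0, 1000.0])

landmark = np.array([10.0, -20.0, 30.0])  # synthetic ground-truth landmark (mm)
estimate = triangulate_landmark(P1, P2, project(P1, landmark), project(P2, landmark))
```

With noise-free detections the estimate recovers the synthetic landmark up to numerical precision; with real detections, a linear solution like this would typically serve only as an initial guess for a nonlinear refinement over all landmarks.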
