Gunvant Chaudhari

Using Deep Learning with Large Aggregated Datasets for COVID-19 Classification from Cough

Jan 22, 2022

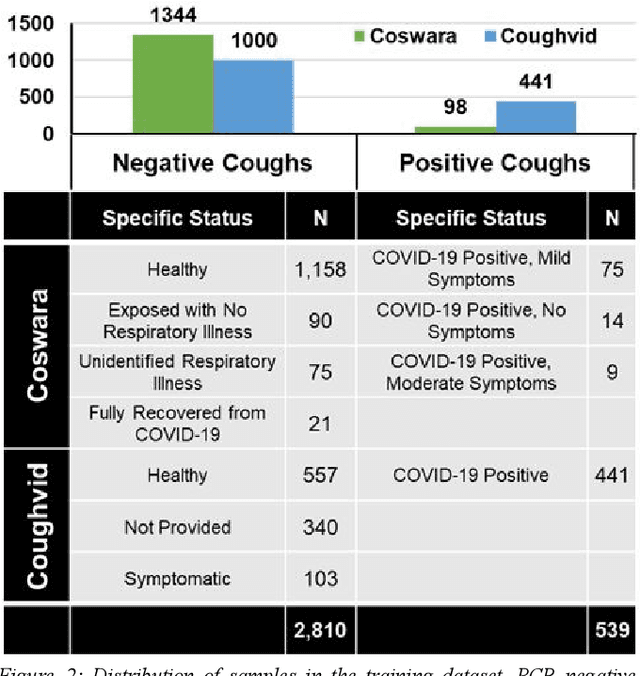

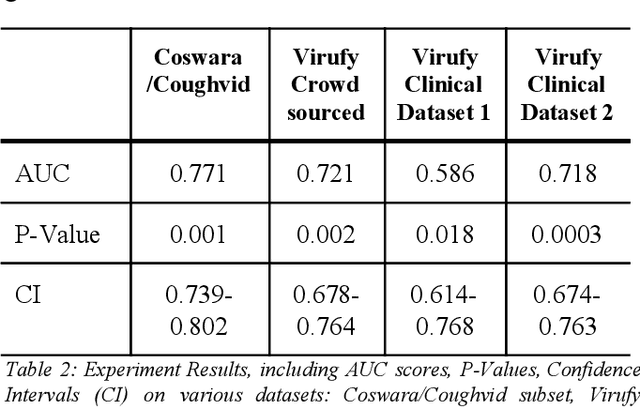

Abstract: The Covid-19 pandemic has been a scourge upon humanity, claiming the lives of more than 5 million people worldwide. Although vaccines are being distributed worldwide, there is an apparent need for affordable screening techniques to serve parts of the world that do not have access to traditional medicine. Artificial Intelligence can provide a solution utilizing cough sounds as the primary screening mode. This paper presents multiple models that have achieved relatively respectable performance on the largest evaluation dataset currently presented in academic literature. Moreover, we also show that performance increases with training data size, showing the need for the worldwide collection of data to help combat the Covid-19 pandemic with non-traditional means.

Virufy: A Multi-Branch Deep Learning Network for Automated Detection of COVID-19

Mar 16, 2021

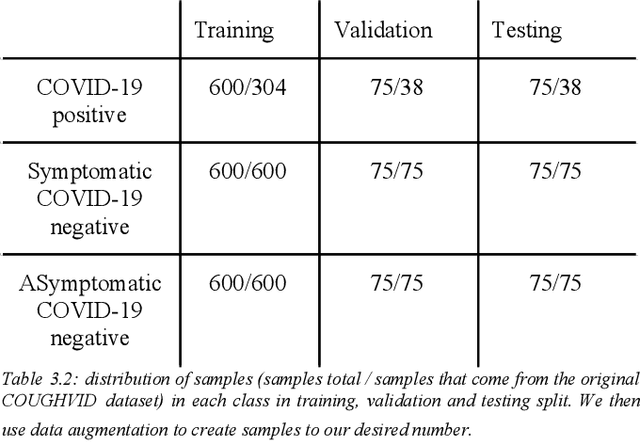

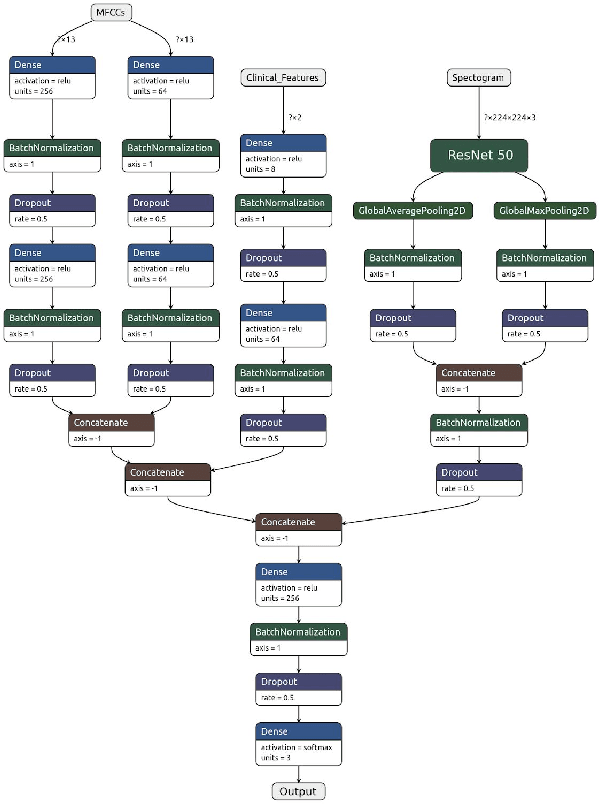

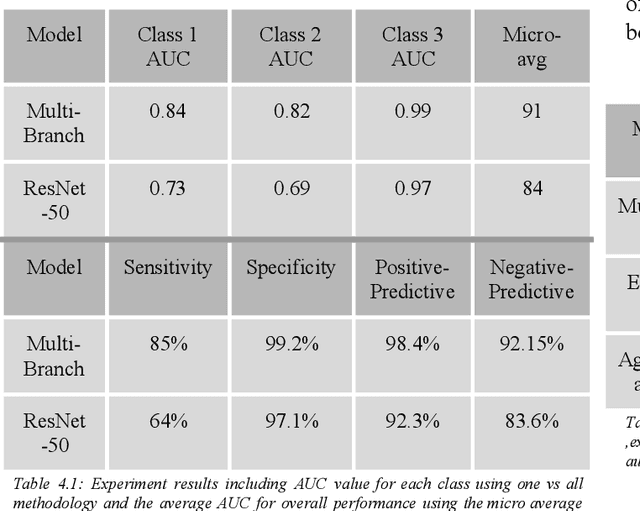

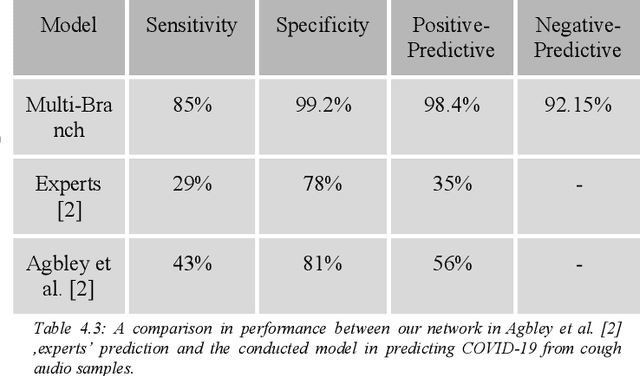

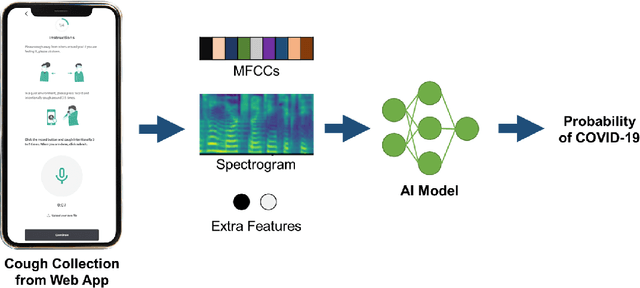

Abstract: Fast and affordable solutions for COVID-19 testing are necessary to contain the spread of the global pandemic and help relieve the burden on medical facilities. Currently, limited testing locations and expensive equipment pose difficulties for individuals trying to be tested, especially in low-resource settings. Researchers have successfully presented models for detecting COVID-19 infection status using audio samples recorded in clinical settings [5, 15], suggesting that audio-based Artificial Intelligence models can be used to identify COVID-19. Such models have the potential to be deployed on smartphones for fast, widespread, and low-resource testing. However, while previous studies have trained models on cleaned audio samples collected mainly from clinical settings, audio samples collected from average smartphones may yield suboptimal-quality data that differs from the clean data that models were trained on. This discrepancy may add a bias that affects COVID-19 status predictions. To tackle this issue, we propose a multi-branch deep learning network that is trained and tested on crowdsourced data where most of the data has not been manually processed and cleaned. Furthermore, the model achieves state-of-the-art results for the COUGHVID dataset [16]. After breaking down results for each category, we have shown an AUC of 0.99 for audio samples with COVID-19 positive labels.

Virufy: Global Applicability of Crowdsourced and Clinical Datasets for AI Detection of COVID-19 from Cough

Dec 25, 2020

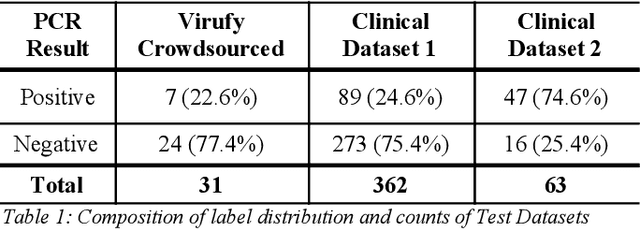

Abstract: Rapid and affordable methods of testing for COVID-19 infections are essential to reduce infection rates and prevent medical facilities from becoming overwhelmed. Current approaches to detecting COVID-19 require in-person testing with expensive kits that are not always easily accessible. This study demonstrates that crowdsourced cough audio samples recorded and acquired on smartphones from around the world can be used to develop an AI-based method that accurately predicts COVID-19 infection with an ROC-AUC of 77.1% (75.2%-78.3%). Furthermore, we show that our method is able to generalize to crowdsourced audio samples from Latin America and clinical samples from South Asia, without further training using the specific samples from those regions. As more crowdsourced data is collected, further development can be implemented using various respiratory audio samples to create a cough analysis-based machine learning (ML) solution for COVID-19 detection that can likely generalize globally to all demographic groups in both clinical and non-clinical settings.
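For readers unfamiliar with how a figure such as "ROC-AUC of 77.1% (75.2%-78.3%)" is typically produced, the following is a minimal sketch, not the authors' method. It assumes the interval is a percentile bootstrap over the evaluation set, which the abstract does not state. The function names `roc_auc` and `bootstrap_auc_ci` and the toy labels and scores are hypothetical.

```python
import random


def roc_auc(labels, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs in which the
    positive sample receives the higher score; ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for ROC-AUC (an assumed procedure,
    not necessarily the one used in the paper)."""
    if len(set(labels)) < 2:
        raise ValueError("labels must contain both classes")
    rng = random.Random(seed)
    n = len(labels)
    aucs = []
    while len(aucs) < n_resamples:
        # Resample the evaluation set with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:
            continue  # skip resamples that lost one of the two classes
        aucs.append(roc_auc(ys, [scores[i] for i in idx]))
    aucs.sort()
    lower = aucs[int((alpha / 2) * n_resamples)]
    upper = aucs[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]
    return lower, upper


# Toy data for illustration only: 1 = COVID-19 positive, 0 = negative.
toy_labels = [0, 0, 1, 1, 0, 1, 0, 1]
toy_scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.70, 0.55, 0.60]
point_estimate = roc_auc(toy_labels, toy_scores)
ci_low, ci_high = bootstrap_auc_ci(toy_labels, toy_scores, n_resamples=500)
print(f"ROC-AUC = {point_estimate:.3f} (95% CI {ci_low:.3f}-{ci_high:.3f})")
```

The pairwise formulation is quadratic in the number of samples, which is fine for a sketch; a rank-based computation would be preferable on a large evaluation set.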

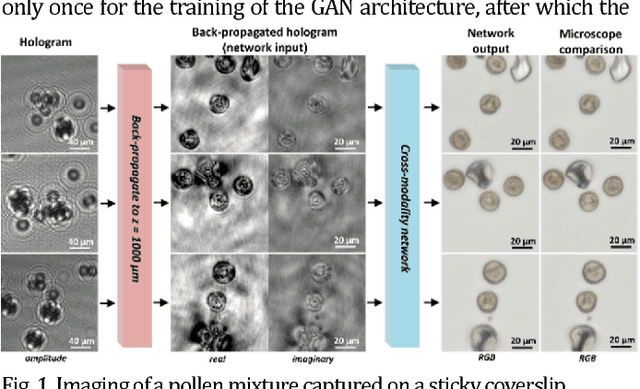

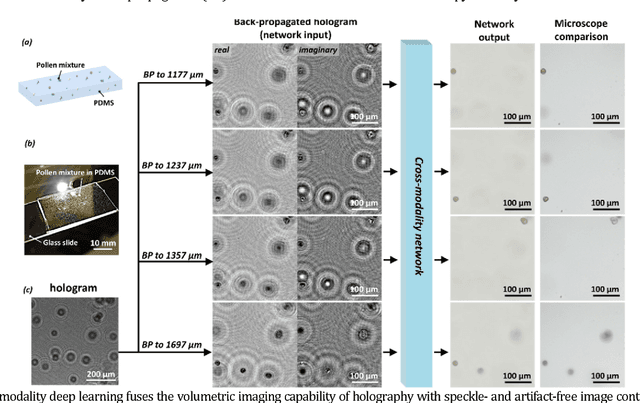

Cross-modality deep learning brings bright-field microscopy contrast to holography

Nov 17, 2018

Abstract: Deep learning brings bright-field microscopy contrast to holographic images of a sample volume, bridging the volumetric imaging capability of holography with the speckle- and artifact-free image contrast of bright-field incoherent microscopy.