Gunel Jahangirova

Real Faults in Deep Learning Fault Benchmarks: How Real Are They?

Dec 20, 2024

Abstract:As the adoption of Deep Learning (DL) systems continues to rise, an increasing number of approaches are being proposed to test these systems, localise faults within them, and repair those faults. The best attestation of effectiveness for such techniques is an evaluation that showcases their capability to detect, localise and fix real faults. To facilitate these evaluations, the research community has collected multiple benchmarks of real faults in DL systems. In this work, we perform a manual analysis of 490 faults from five different benchmarks and identify that 314 of them are eligible for our study. Our investigation focuses specifically on how well the bugs correspond to the sources they were extracted from, which fault types are represented, and whether the bugs are reproducible. Our findings indicate that only 18.5% of the faults satisfy our realism conditions. Our attempts to reproduce these faults were successful only in 52% of cases.

An Empirical Study of Fault Localisation Techniques for Deep Learning

Dec 17, 2024Abstract:With the increased popularity of Deep Neural Networks (DNNs), increases also the need for tools to assist developers in the DNN implementation, testing and debugging process. Several approaches have been proposed that automatically analyse and localise potential faults in DNNs under test. In this work, we evaluate and compare existing state-of-the-art fault localisation techniques, which operate based on both dynamic and static analysis of the DNN. The evaluation is performed on a benchmark consisting of both real faults obtained from bug reporting platforms and faulty models produced by a mutation tool. Our findings indicate that the usage of a single, specific ground truth (e.g., the human defined one) for the evaluation of DNN fault localisation tools results in pretty low performance (maximum average recall of 0.31 and precision of 0.23). However, such figures increase when considering alternative, equivalent patches that exist for a given faulty DNN. Results indicate that \dfd is the most effective tool, achieving an average recall of 0.61 and precision of 0.41 on our benchmark.

DeepMetis: Augmenting a Deep Learning Test Set to Increase its Mutation Score

Sep 15, 2021

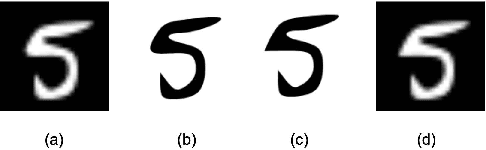

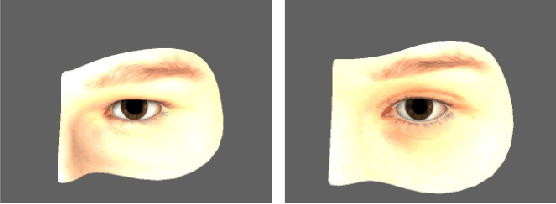

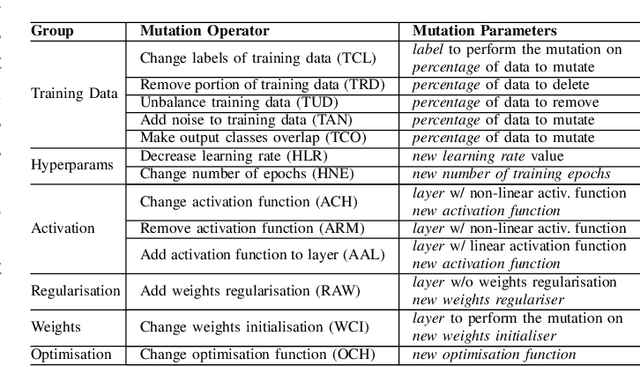

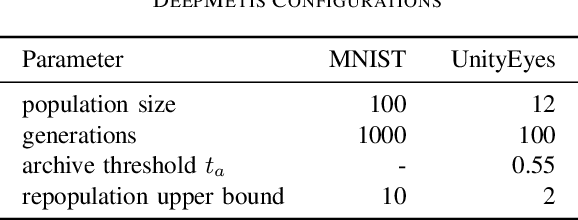

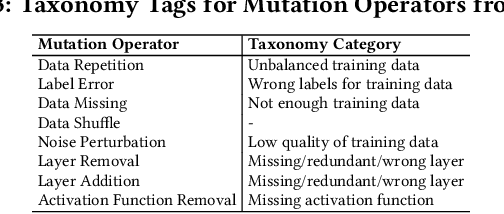

Abstract:Deep Learning (DL) components are routinely integrated into software systems that need to perform complex tasks such as image or natural language processing. The adequacy of the test data used to test such systems can be assessed by their ability to expose artificially injected faults (mutations) that simulate real DL faults. In this paper, we describe an approach to automatically generate new test inputs that can be used to augment the existing test set so that its capability to detect DL mutations increases. Our tool DeepMetis implements a search based input generation strategy. To account for the non-determinism of the training and the mutation processes, our fitness function involves multiple instances of the DL model under test. Experimental results show that \tool is effective at augmenting the given test set, increasing its capability to detect mutants by 63% on average. A leave-one-out experiment shows that the augmented test set is capable of exposing unseen mutants, which simulate the occurrence of yet undetected faults.

Taxonomy of Real Faults in Deep Learning Systems

Nov 07, 2019

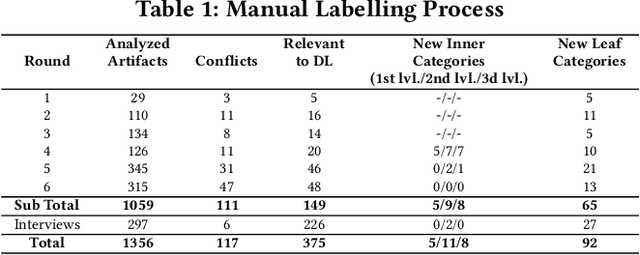

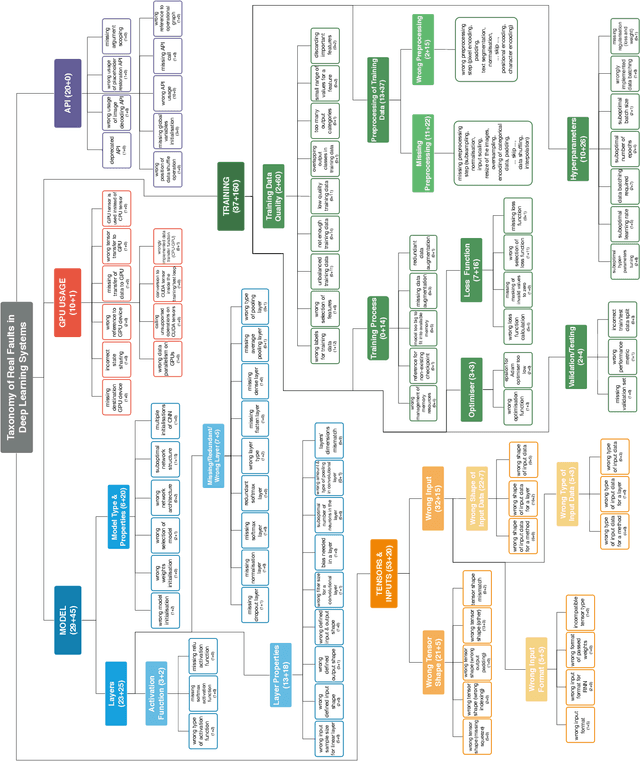

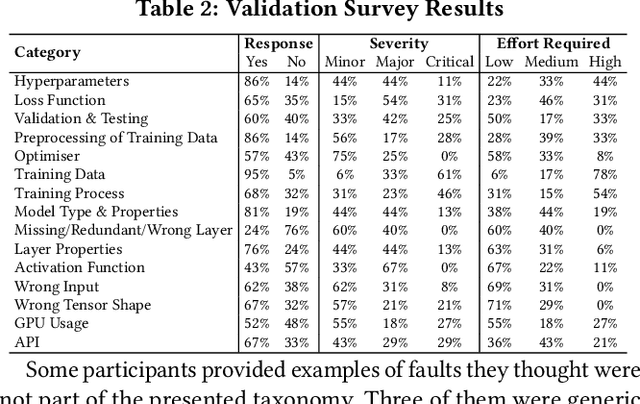

Abstract:The growing application of deep neural networks in safety-critical domains makes the analysis of faults that occur in such systems of enormous importance. In this paper we introduce a large taxonomy of faults in deep learning (DL) systems. We have manually analysed 1059 artefacts gathered from GitHub commits and issues of projects that use the most popular DL frameworks (TensorFlow, Keras and PyTorch) and from related Stack Overflow posts. Structured interviews with 20 researchers and practitioners describing the problems they have encountered in their experience have enriched our taxonomy with a variety of additional faults that did not emerge from the other two sources. Our final taxonomy was validated with a survey involving an additional set of 21 developers, confirming that almost all fault categories (13/15) were experienced by at least 50% of the survey participants.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge