Guihui Li

MSGFusion: Multimodal Scene Graph-Guided Infrared and Visible Image Fusion

Sep 16, 2025

Abstract: Infrared and visible image fusion has garnered considerable attention owing to the strong complementarity of these two modalities in complex, harsh environments. While deep learning-based fusion methods have made remarkable advances in feature extraction, alignment, fusion, and reconstruction, they still depend largely on low-level visual cues, such as texture and contrast, and struggle to capture the high-level semantic information embedded in images. Recent attempts to incorporate text as a source of semantic guidance have relied on unstructured descriptions that neither explicitly model entities, attributes, and relationships nor provide spatial localization, thereby limiting fine-grained fusion performance. To overcome these challenges, we introduce MSGFusion, a multimodal scene graph-guided fusion framework for infrared and visible imagery. By deeply coupling structured scene graphs derived from text and vision, MSGFusion explicitly represents entities, attributes, and spatial relations, and then synchronously refines high-level semantics and low-level details through successive modules for scene graph representation, hierarchical aggregation, and graph-driven fusion. Extensive experiments on multiple public benchmarks show that MSGFusion significantly outperforms state-of-the-art approaches, particularly in detail preservation and structural clarity, and delivers superior semantic consistency and generalizability in downstream tasks such as low-light object detection, semantic segmentation, and medical image fusion.

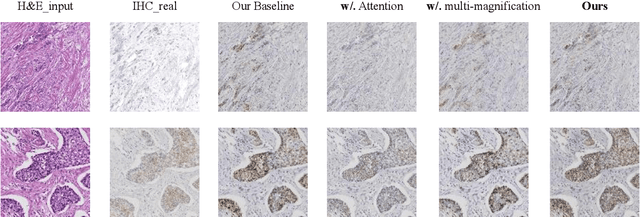

Advancing H&E-to-IHC Stain Translation in Breast Cancer: A Multi-Magnification and Attention-Based Approach

Aug 04, 2024

Abstract: Breast cancer presents a significant healthcare challenge globally, demanding precise diagnostics and effective treatment strategies, where histopathological examination of Hematoxylin and Eosin (H&E) stained tissue sections plays a central role. Despite its importance, evaluating specific biomarkers like Human Epidermal Growth Factor Receptor 2 (HER2) for personalized treatment remains constrained by the resource-intensive nature of Immunohistochemistry (IHC). Recent strides in deep learning, particularly in image-to-image translation, offer promise in synthesizing IHC-HER2 slides from H&E stained slides. However, existing methodologies encounter challenges, including managing multiple magnifications in pathology images and insufficient focus on crucial information during translation. To address these issues, we propose a novel model integrating attention mechanisms and multi-magnification information processing. Our model employs a multi-magnification processing strategy to extract and utilize information from various magnifications within pathology images, facilitating robust image translation. Additionally, an attention module within the generative network prioritizes critical information for image distribution translation while minimizing less pertinent details. Rigorous testing on a publicly available breast cancer dataset demonstrates superior performance compared to existing methods, establishing our model as a state-of-the-art solution in advancing pathology image translation from H&E to IHC staining.
