Advancing H&E-to-IHC Stain Translation in Breast Cancer: A Multi-Magnification and Attention-Based Approach


Aug 04, 2024

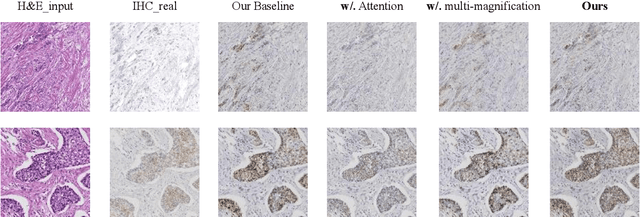

Breast cancer presents a significant healthcare challenge globally, demanding precise diagnostics and effective treatment strategies, in which histopathological examination of Hematoxylin and Eosin (H&E)-stained tissue sections plays a central role. Despite its importance, evaluating specific biomarkers such as Human Epidermal Growth Factor Receptor 2 (HER2) for personalized treatment remains constrained by the resource-intensive nature of Immunohistochemistry (IHC). Recent advances in deep learning, particularly in image-to-image translation, offer promise for synthesizing IHC-HER2 slides from H&E-stained slides. However, existing methods struggle to handle the multiple magnifications present in pathology images and do not focus sufficiently on crucial information during translation. To address these issues, we propose a novel model that integrates attention mechanisms with multi-magnification information processing. The model uses a multi-magnification processing strategy to extract and combine information from several magnifications of a pathology image, supporting robust image translation. In addition, an attention module within the generative network prioritizes the information most critical for translating between the image distributions while suppressing less pertinent details. Testing on a publicly available breast cancer dataset demonstrates superior performance compared to existing methods, establishing our model as a state-of-the-art solution for pathology image translation from H&E to IHC staining.
