Guangxin Jiang

LLMs for Supply Chain Management

May 24, 2025

Abstract: The development of large language models (LLMs) has provided new tools for research in supply chain management (SCM). In this paper, we introduce a retrieval-augmented generation (RAG) framework that dynamically integrates external knowledge into the inference process, and develop a domain-specialized SCM LLM, which demonstrates expert-level competence by passing standardized SCM examinations and beer game tests. We further use LLMs to conduct horizontal and vertical supply chain games in order to analyze competition and cooperation within supply chains. Our experiments show that RAG significantly improves performance on SCM tasks. Moreover, game-theoretic analysis reveals that the LLM can reproduce insights from the classical SCM literature, while also uncovering novel behaviors and offering fresh perspectives on phenomena such as the bullwhip effect. This paper opens the door to exploring cooperation and competition in complex supply chain networks through the lens of LLMs.

PT-Tuning: Bridging the Gap between Time Series Masked Reconstruction and Forecasting via Prompt Token Tuning

Nov 07, 2023

Abstract: Self-supervised learning has recently been actively studied in the time series domain, especially for masked reconstruction. Most of these methods follow the "Pre-training + Fine-tuning" paradigm, in which a new decoder replaces the pre-trained decoder to fit a specific downstream task, leading to inconsistency between upstream and downstream tasks. In this paper, we first point out that the unification of task objectives and adaptation to task difficulty are critical for bridging the gap between time series masked reconstruction and forecasting. By retaining the pre-trained mask token during the fine-tuning stage, the forecasting task can be treated as a special case of masked reconstruction, where the future values are masked and reconstructed based on history values. This guarantees the consistency of task objectives, but a gap in task difficulty remains, because masked reconstruction can utilize contextual information while forecasting can only use historical information to reconstruct. To further mitigate the existing gap, we propose a simple yet effective prompt token tuning (PT-Tuning) paradigm, in which all pre-trained parameters are frozen and only a few trainable prompt tokens are added to the extended mask tokens in an element-wise manner. Extensive experiments on real-world datasets demonstrate the superiority of our proposed paradigm, with state-of-the-art performance compared to representation learning and end-to-end supervised forecasting methods.
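To make the idea of "forecasting as masked reconstruction" concrete, the following is a minimal sketch, not the paper's implementation. It assumes token embeddings are plain lists of floats, and the function name `build_forecast_input` and its arguments are hypothetical. The history tokens are kept as they are, and each future step is represented by the frozen mask token plus a per-step prompt token, added element-wise.

```python
from typing import List

Vector = List[float]


def build_forecast_input(
    history: List[Vector],
    mask_token: Vector,
    prompt_tokens: List[Vector],
) -> List[Vector]:
    """Return history tokens followed by one (mask + prompt) token per future step.

    Assumption: the mask token is frozen (pre-trained) and only the prompt
    tokens would be trained; training itself is not shown here.
    """
    dim = len(mask_token)
    future: List[Vector] = []
    for prompt in prompt_tokens:
        if len(prompt) != dim:
            raise ValueError("prompt token and mask token must have the same size")
        # Element-wise addition of the trainable prompt to the frozen mask token.
        future.append([m + p for m, p in zip(mask_token, prompt)])
    # Copy history tokens so the caller's data is not mutated later.
    return [list(tok) for tok in history] + future


# Two observed steps, two steps to forecast.
sequence = build_forecast_input(
    history=[[1.0, 2.0], [3.0, 4.0]],
    mask_token=[0.5, -0.5],
    prompt_tokens=[[0.0, 0.0], [0.25, 0.25]],
)
```

In this sketch the resulting sequence would be passed to the frozen pre-trained model, which reconstructs the masked (future) positions; how the paper actually extends and injects the tokens is not specified in the abstract.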

An Intelligent End-to-End Neural Architecture Search Framework for Electricity Forecasting Model Development

Mar 25, 2022

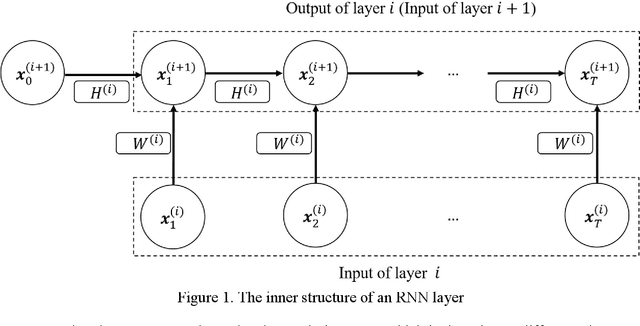

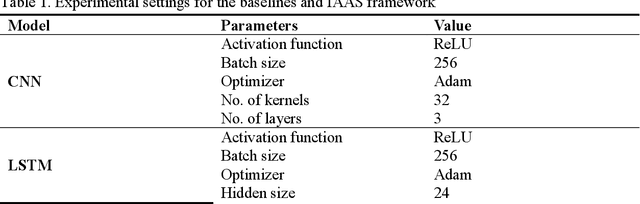

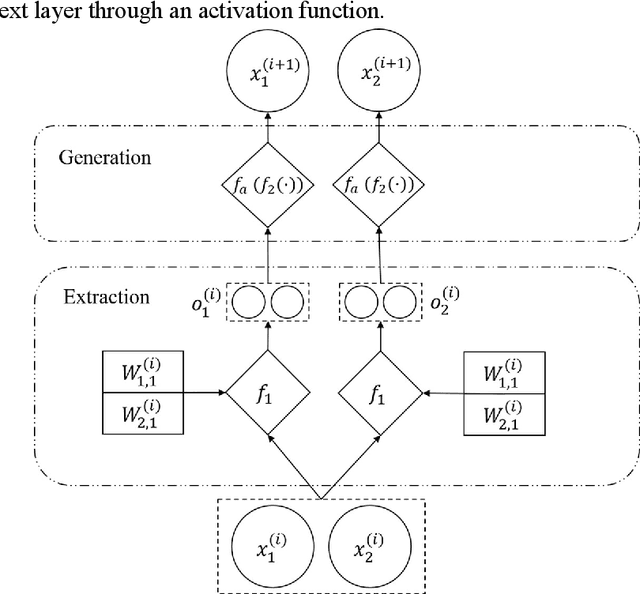

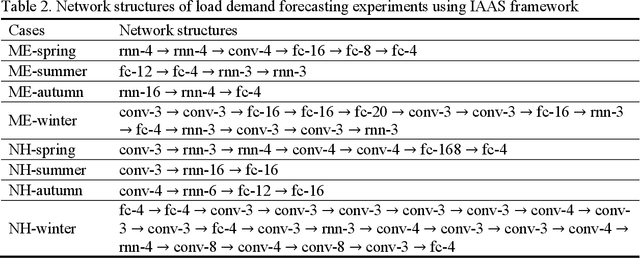

Abstract: Recent years have witnessed exponential growth in the development of deep learning (DL) models for time-series electricity forecasting in power systems. However, most of the proposed models are designed based on the designers' own knowledge and experience, without elaborating on the suitability of the proposed neural architectures. Moreover, these models cannot self-adjust to dynamically changing data patterns due to the inflexible design of their structures. Even though several recent studies have considered applying the neural architecture search (NAS) technique to obtain a network with an optimized structure in the electricity forecasting sector, their training process is quite time-consuming, computationally expensive, and not intelligent, indicating that the application of NAS in electricity forecasting is still in its infancy. In this study, we propose an intelligent automated architecture search (IAAS) framework for the development of time-series electricity forecasting models. The proposed framework contains two primary components: a network function-preserving transformation operation and reinforcement learning (RL)-based network transformation control. In the first component, we introduce a theoretical function-preserving transformation of recurrent neural networks (RNNs) for capturing the hidden temporal patterns within time-series data. In the second component, we develop three RL-based transformation actors and a net pool to intelligently and effectively search for a high-quality neural architecture. After conducting comprehensive experiments on two publicly available electricity load datasets and two wind power datasets, we demonstrate that the proposed IAAS framework significantly outperforms ten existing models or methods in terms of forecasting accuracy and stability.
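As an illustration of what "function-preserving transformation" means in general, here is a minimal sketch under stated assumptions. It does not reproduce the paper's RNN transformation; instead it shows the simpler, well-known widening of a feed-forward ReLU layer (in the style of Net2Net), where a hidden unit is duplicated and its outgoing weights are halved so the network output stays the same. All function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows = output units) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def forward(w1: Matrix, w2: Matrix, x: Vector) -> Vector:
    """Two-layer network without biases: y = W2 * relu(W1 * x)."""
    hidden = [max(0.0, h) for h in matvec(w1, x)]
    return matvec(w2, hidden)


def widen(w1: Matrix, w2: Matrix, unit: int) -> Tuple[Matrix, Matrix]:
    """Duplicate hidden unit `unit` and split its outgoing weights in half."""
    # The new hidden unit gets the same incoming weights as the copied one.
    new_w1 = [row[:] for row in w1] + [w1[unit][:]]
    new_w2 = []
    for row in w2:
        half = row[unit] / 2.0
        new_row = row[:]
        new_row[unit] = half   # original unit keeps half of the weight
        new_row.append(half)   # the duplicate carries the other half
        new_w2.append(new_row)
    return new_w1, new_w2


w1 = [[1.0, -2.0], [0.5, 0.5]]
w2 = [[2.0, 4.0]]
x = [3.0, 1.0]

wide_w1, wide_w2 = widen(w1, w2, unit=1)
before = forward(w1, w2, x)
after = forward(wide_w1, wide_w2, x)
```

Here `before` and `after` are equal even though the widened network has three hidden units instead of two, which is the property that lets an architecture search grow a trained network without discarding what it has already learned.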
