Greg J. Bishop-Hurley

Multi-modal Sensor Data Fusion for In-situ Classification of Animal Behavior Using Accelerometry and GNSS Data

Jun 24, 2022

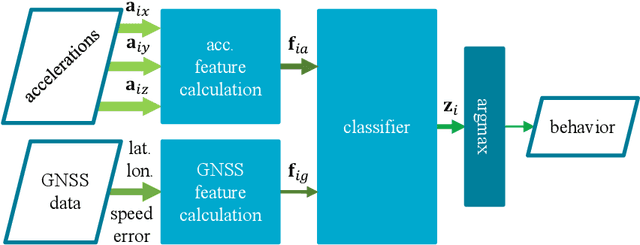

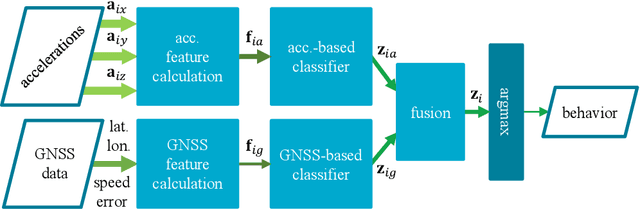

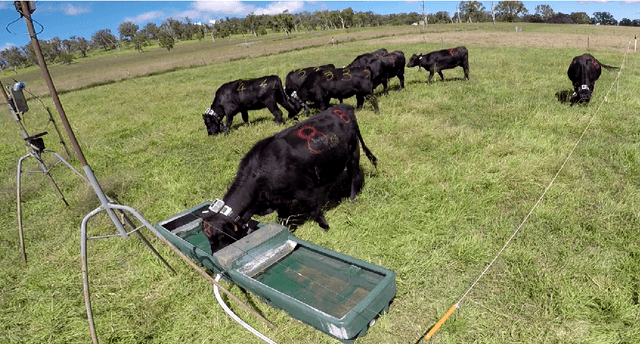

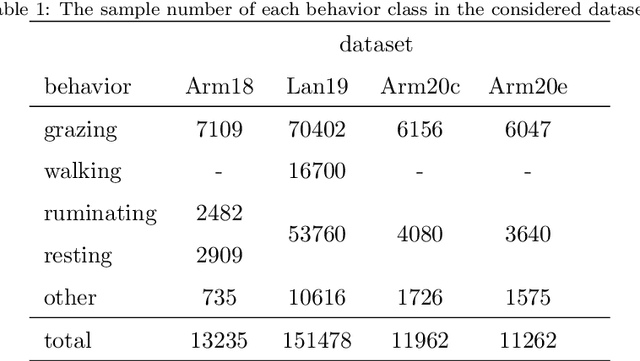

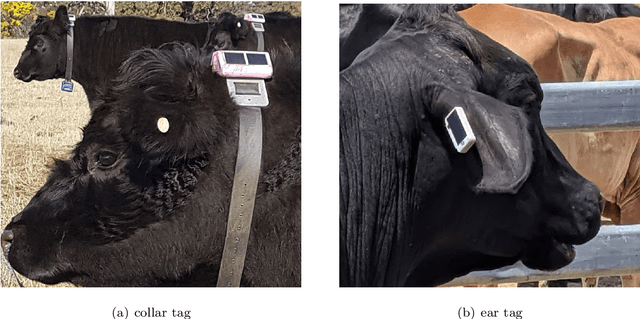

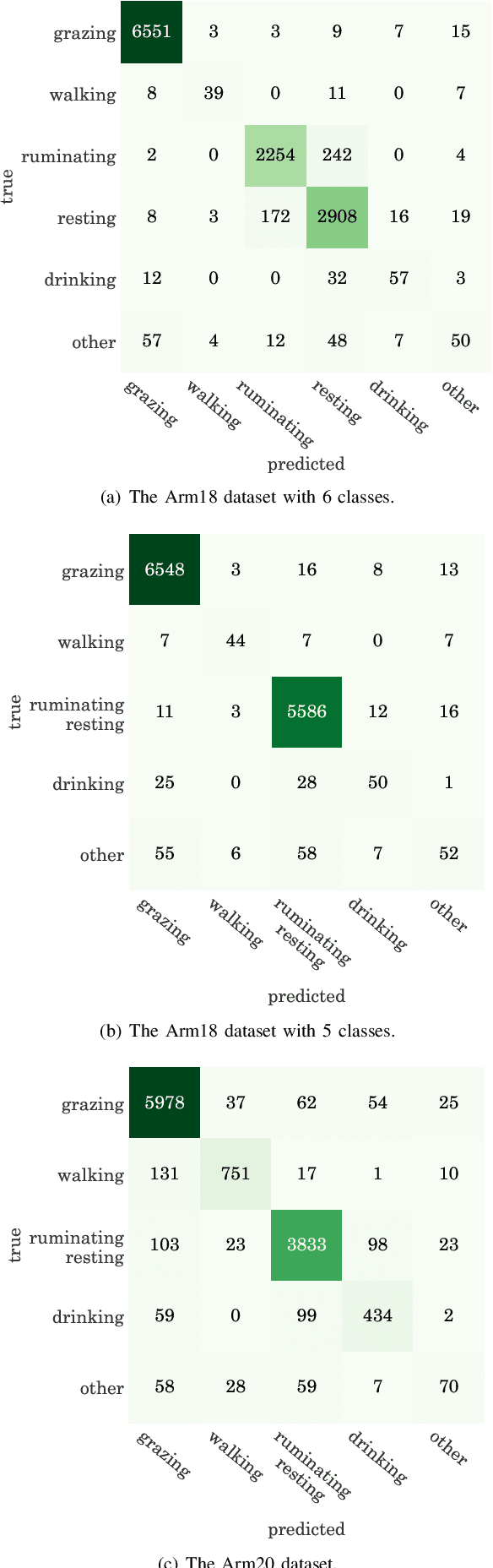

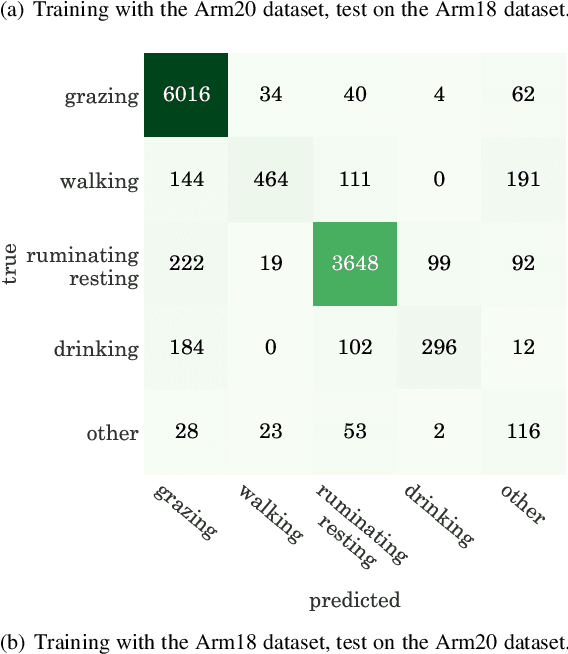

Abstract: We examine the use of data from multiple sensing modes, i.e., accelerometry and global navigation satellite system (GNSS), for classifying animal behavior. We extract three new features from the GNSS data, namely, the distance from the water point, median speed, and median estimated horizontal position error. We consider two approaches for combining the information available from the accelerometry and GNSS data. The first approach is based on concatenating the features extracted from the data of both sensors and feeding the concatenated feature vector into a multi-layer perceptron (MLP) classifier. The second approach is based on fusing the posterior probabilities predicted by two MLP classifiers, each taking the features extracted from the data of one sensor as input. We evaluate the performance of the developed multi-modal animal behavior classification algorithms using two real-world datasets collected via smart cattle collar and ear tags. The leave-one-animal-out cross-validation results show that both approaches improve the classification performance appreciably compared with using the data from only one sensing mode, in particular, for the infrequent but important behaviors of walking and drinking. The algorithms developed based on both approaches require rather small computational and memory resources and are hence suitable for implementation on the embedded systems of our collar and ear tags. However, the multi-modal animal behavior classification algorithm based on posterior probability fusion is preferable to the one based on feature concatenation as it delivers better classification accuracy, has lower computational and memory complexity, is more robust to sensor data failure, and enjoys better modularity.
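The abstract does not state how the GNSS features are computed per window or which rule fuses the two posteriors, so the following is only a minimal sketch under stated assumptions. It assumes a hypothetical window layout of `(lat, lon, speed, hpe)` tuples, takes the distance feature as the median haversine distance to the water point, and fuses posteriors with a normalized product. The function names and the fallback to a single classifier when one sensor is unavailable are illustrative, not the authors' method.

```python
import math
from statistics import median

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def gnss_features(fixes, water_point):
    """Three GNSS features for one window.

    fixes: list of (lat, lon, speed, hpe) tuples (hypothetical layout).
    water_point: (lat, lon) of the water point.
    """
    w_lat, w_lon = water_point
    dists = [haversine_m(lat, lon, w_lat, w_lon) for lat, lon, _, _ in fixes]
    return {
        # Assumption: distance is summarized by the median over the window.
        "distance_to_water_m": median(dists),
        "median_speed": median(s for _, _, s, _ in fixes),
        "median_hpe": median(h for _, _, _, h in fixes),
    }


def fuse_posteriors(p_accel, p_gnss):
    """Fuse two class-posterior vectors with a normalized product (assumed rule).

    If one vector is None (sensor data unavailable), return the other unchanged.
    """
    if p_accel is None and p_gnss is None:
        raise ValueError("at least one posterior vector is required")
    if p_gnss is None:
        return list(p_accel)
    if p_accel is None:
        return list(p_gnss)
    prod = [a * g for a, g in zip(p_accel, p_gnss)]
    total = sum(prod)
    if total == 0.0:
        # Classifiers fully disagree: fall back to the arithmetic mean.
        return [(a + g) / 2 for a, g in zip(p_accel, p_gnss)]
    return [x / total for x in prod]
```

Because each classifier only ever sees its own sensor's features, a fusion step of this kind can skip a missing sensor without retraining, which is one way to read the robustness and modularity claims.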

Animal Behavior Classification via Accelerometry Data and Recurrent Neural Networks

Nov 24, 2021

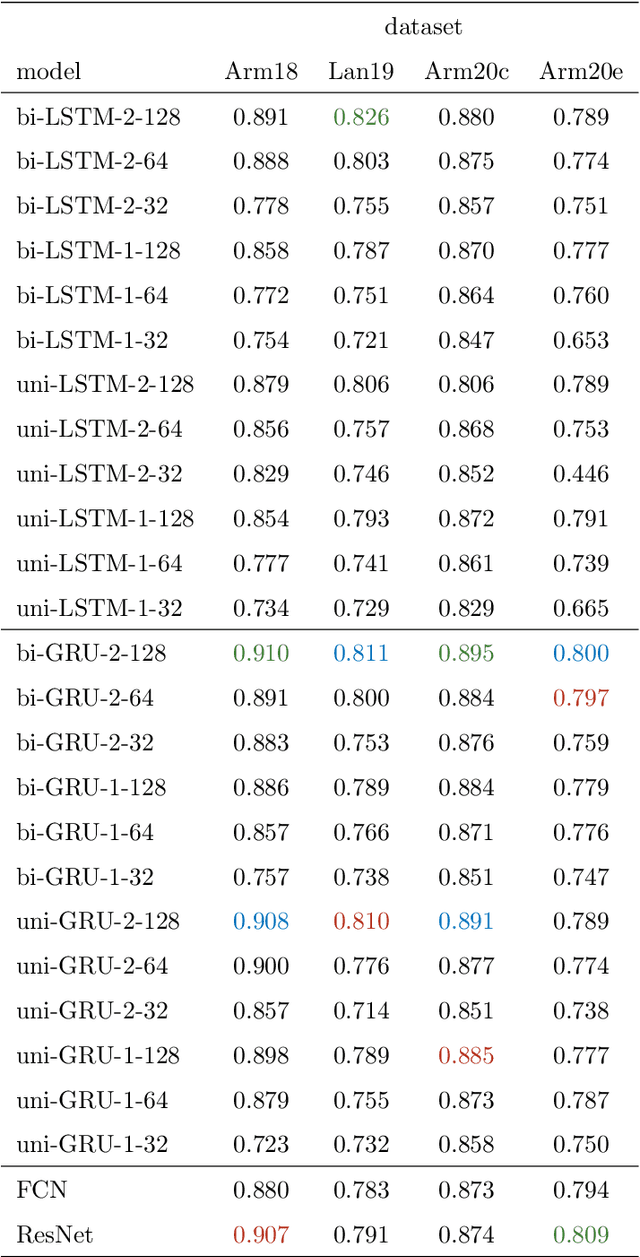

Abstract: We study the classification of animal behavior using accelerometry data through various recurrent neural network (RNN) models. We evaluate the classification performance and complexity of the considered models, which feature long short-term memory (LSTM) or gated recurrent unit (GRU) architectures with varying depths and widths, using four datasets acquired from cattle via collar or ear tags. We also include two state-of-the-art convolutional neural network (CNN)-based time-series classification models in the evaluations. The results show that the RNN-based models can achieve similar or higher classification accuracy compared with the CNN-based models while having lower computational and memory requirements. We also observe that the models with GRU architecture generally outperform the ones with LSTM architecture in terms of classification accuracy despite being less complex. A single-layer uni-directional GRU model with 64 hidden units appears to offer a good balance between accuracy and complexity, making it suitable for implementation on edge/embedded devices.
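To make the complexity comparison concrete, the sketch below counts the trainable parameters of a single recurrent layer using the textbook formulations: a GRU has three gate blocks and an LSTM has four, each with an input weight matrix, a recurrent weight matrix, and one bias vector. The input size of 3 (one value per accelerometer axis) is an assumption, and the counts exclude any output classification layer.

```python
def gate_block_params(input_size, hidden_size):
    """Parameters of one gate: input weights, recurrent weights, one bias vector."""
    return hidden_size * (input_size + hidden_size) + hidden_size


def gru_params(input_size, hidden_size):
    """Single-layer uni-directional GRU: update, reset, and candidate blocks."""
    return 3 * gate_block_params(input_size, hidden_size)


def lstm_params(input_size, hidden_size):
    """Single-layer uni-directional LSTM: input, forget, output, and cell blocks."""
    return 4 * gate_block_params(input_size, hidden_size)


# Assumed input size of 3 (tri-axial accelerometry) with 64 hidden units.
# Some frameworks keep two bias vectors per gate, which gives slightly larger counts.
print(gru_params(3, 64))   # 13056
print(lstm_params(3, 64))  # 17408
```

Under these assumptions the GRU layer needs three quarters of the parameters of an LSTM layer of the same width, which is consistent with the abstract's description of GRU models as less complex.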

Animal Behavior Classification via Deep Learning on Embedded Systems

Nov 24, 2021

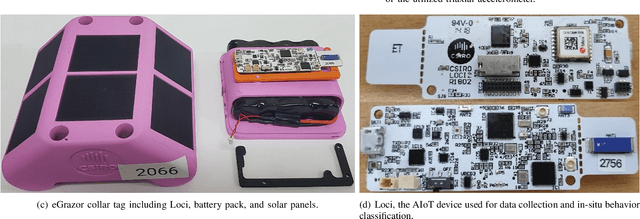

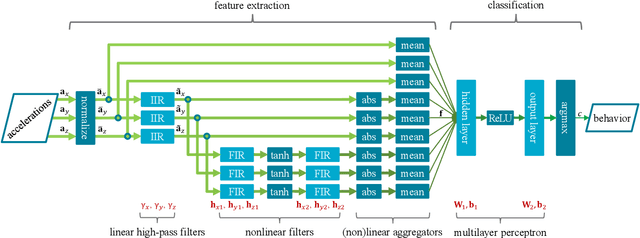

Abstract: We develop an end-to-end deep-neural-network-based algorithm for classifying animal behavior using accelerometry data on the embedded system of an artificial intelligence of things (AIoT) device installed in a wearable collar tag. The proposed algorithm jointly performs feature extraction and classification utilizing a set of infinite-impulse-response (IIR) and finite-impulse-response (FIR) filters together with a multilayer perceptron. The utilized IIR and FIR filters can be viewed as specific types of recurrent and convolutional neural network layers, respectively. We evaluate the performance of the proposed algorithm via two real-world datasets collected from grazing cattle. The results show that the proposed algorithm offers good intra- and inter-dataset classification accuracy and outperforms its closest contenders including two state-of-the-art convolutional-neural-network-based time-series classification algorithms, which are significantly more complex. We implement the proposed algorithm on the embedded system of the collar tag's AIoT device to perform in-situ classification of animal behavior. We achieve real-time in-situ behavior inference from accelerometry data without imposing any strain on the available computational, memory, or energy resources of the embedded system.
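The correspondence between filters and network layers can be illustrated with a small sketch. An FIR filter computes each output from a fixed window of past inputs, which is the operation of a causal 1-D convolutional layer, while an IIR filter feeds its previous output back in, which is a linear recurrence. The abstract does not give the filter orders or coefficients, so the first-order IIR form and all function and parameter names below are assumptions chosen for illustration.

```python
def fir_filter(x, b):
    """FIR filter y[n] = sum_k b[k] * x[n - k], with zero initial conditions.

    Equivalent to a causal 1-D convolution with kernel b.
    """
    y = []
    for n in range(len(x)):
        acc = 0
        for k, coeff in enumerate(b):
            if n - k >= 0:
                acc += coeff * x[n - k]
        y.append(acc)
    return y


def iir_first_order(x, a, b):
    """First-order IIR filter y[n] = b * x[n] + a * y[n - 1], with y[-1] = 0.

    The dependence on the previous output makes this a linear recurrent
    layer with a one-dimensional hidden state.
    """
    y = []
    prev = 0.0
    for sample in x:
        prev = b * sample + a * prev
        y.append(prev)
    return y


print(fir_filter([1, 2, 3], [1, 1]))              # [1, 3, 5]
print(iir_first_order([1.0, 0.0, 0.0], 0.5, 1.0))  # [1.0, 0.5, 0.25]
```

If the coefficients `a` and `b` are treated as trainable weights, both filters can in principle be learned jointly with the downstream multilayer perceptron, which is how an end-to-end feature extractor and classifier of this kind might be trained.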
