Greg Fields

Trojan Cleansing with Neural Collapse

Nov 19, 2024

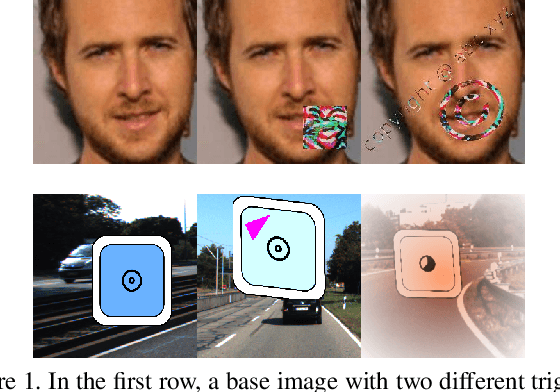

Abstract: Trojan attacks are sophisticated training-time attacks on neural networks that embed backdoor triggers, forcing the network to produce a specific output on any input that includes the trigger. With the increasing relevance of deep networks that are too large to train with personal resources and that are trained on data too large to thoroughly audit, these training-time attacks pose a significant risk. In this work, we connect trojan attacks to Neural Collapse, a phenomenon wherein the final feature representations of over-parameterized neural networks converge to a simple geometric structure. We provide experimental evidence that trojan attacks disrupt this convergence for a variety of datasets and architectures. We then use this disruption to design a lightweight, broadly generalizable mechanism for cleansing trojan attacks from a wide variety of network architectures and experimentally demonstrate its efficacy.
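To make the "simple geometric structure" concrete: under Neural Collapse, the globally centered class means of the final-layer features are commonly described as approaching a simplex equiangular tight frame, in which every pair of class means has cosine similarity -1/(K-1) for K classes. The sketch below measures how far a set of features is from that geometry. It is an illustration only, not the measurement or cleansing procedure used in the paper; the function name `etf_deviation` and its inputs are hypothetical.

```python
import numpy as np

def etf_deviation(features, labels):
    """Mean absolute gap between pairwise class-mean cosines and -1/(K-1).

    features: (N, d) array of final-layer feature vectors (assumed input).
    labels:   (N,) array of integer class labels (assumed input).
    A value near 0 is consistent with simplex-ETF geometry.
    """
    classes = np.unique(labels)
    k = len(classes)
    # Global mean of all features (equals the mean of class means when classes are balanced).
    global_mean = features.mean(axis=0)
    # One mean vector per class, centered on the global mean.
    means = np.stack([features[labels == c].mean(axis=0) for c in classes])
    centered = means - global_mean
    # Normalize so that the Gram matrix holds cosine similarities.
    unit = centered / np.linalg.norm(centered, axis=1, keepdims=True)
    cosines = unit @ unit.T
    off_diagonal = cosines[~np.eye(k, dtype=bool)]
    return float(np.abs(off_diagonal + 1.0 / (k - 1)).mean())
```

One hypothetical use is to compute this value on the penultimate-layer features of a benign model and of a suspect model and compare the two. Whether the gap is large enough to be meaningful depends on the dataset and architecture.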

Trojan Signatures in DNN Weights

Sep 07, 2021

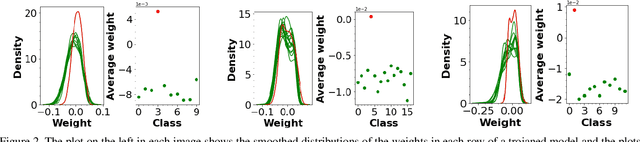

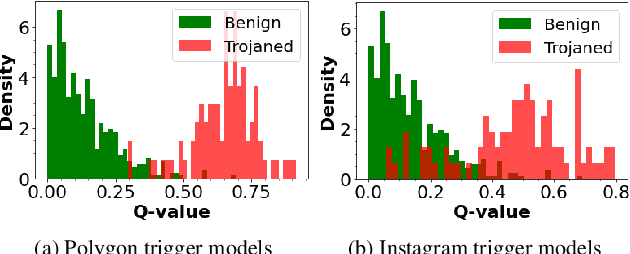

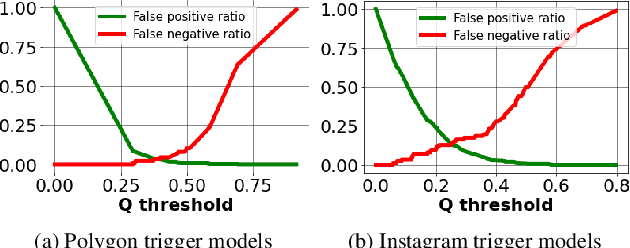

Abstract: Deep neural networks have been shown to be vulnerable to backdoor, or trojan, attacks, in which an adversary embeds a trigger in the network at training time such that the model correctly classifies all standard inputs but generates a targeted, incorrect classification on any input that contains the trigger. In this paper, we present the first ultra-lightweight and highly effective trojan detection method that does not require access to the training/test data, does not involve any expensive computations, and makes no assumptions about the nature of the trojan trigger. Our approach focuses on analysis of the weights of the final, linear layer of the network. We empirically demonstrate several characteristics of these weights that occur frequently in trojaned networks, but not in benign networks. In particular, we show that the distribution of the weights associated with the trojan target class is clearly distinguishable from the weights associated with other classes. Using this, we demonstrate the effectiveness of our proposed detection method against state-of-the-art attacks across a variety of architectures, datasets, and trigger types.
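The idea of comparing per-class weights in the final linear layer can be sketched as follows. The abstract does not specify which statistic the authors use, so this example substitutes a simple one as an assumption: the mean weight of each class row, scored by its distance from the median in units of the median absolute deviation. The function name `class_weight_outlier_scores` is hypothetical.

```python
import numpy as np

def class_weight_outlier_scores(final_weights):
    """Robust outlier score for each class row of a final linear layer.

    final_weights: (num_classes, num_features) weight matrix (assumed layout).
    Returns one non-negative score per class; a larger score means the row's
    mean weight sits further from the other classes.
    """
    # Summarize each class by the mean of its weight row (an assumed statistic).
    row_means = final_weights.mean(axis=1)
    median = np.median(row_means)
    # Median absolute deviation gives a spread estimate that one outlier cannot inflate.
    mad = np.median(np.abs(row_means - median))
    return np.abs(row_means - median) / (mad + 1e-12)

# Synthetic example: ten classes, with class 3 shifted to mimic a distinct distribution.
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(10, 512))
weights[3] += 0.1
scores = class_weight_outlier_scores(weights)
suspect_class = int(np.argmax(scores))
```

Like the approach the abstract describes, this needs only the weight matrix and no training or test data. Any threshold applied to the scores would be a further assumption that has to be calibrated on known-benign models.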
