Grégoire Jauvion

IMT

PlumeCityNet: Multi-Resolution Air Quality Forecasting

Oct 14, 2021

Abstract: This paper presents an engine able to jointly forecast the concentrations of the main pollutants harming people's health: nitrogen dioxide (NO2), ozone (O3) and particulate matter (PM2.5 and PM10, the particles whose diameters are below 2.5 µm and 10 µm respectively). The engine is fed with measurements from air quality monitoring stations, weather forecasts, physical model outputs and traffic estimates to produce forecasts up to 24 hours ahead. The forecasts are produced at several spatial resolutions, from a few dozen meters to dozens of kilometers, fitting several use cases that need air quality data. We introduce the Scale-Unit block, which seamlessly integrates all inputs available at a given resolution and returns forecasts at the same resolution. The engine is based on a U-Net architecture built from several of these blocks, giving it the ability to process inputs and output predictions at different resolutions. We have implemented and evaluated the engine on the largest cities in Europe and the United States, and it clearly outperforms other prediction methods. In particular, the out-of-sample accuracy remains high, meaning that the engine can be used in cities that are not included in the training dataset. A valuable advantage of the engine is that it does not need much computing power: the forecasts can be built in a few minutes on a standard CPU. Thus, they can be updated very frequently, as soon as new measurements from air quality monitoring stations are available (generally every hour), which is not the case for the physical models traditionally used for air quality forecasting.

High-Resolution Air Quality Prediction Using Low-Cost Sensors

Jun 22, 2020

Abstract: The use of low-cost sensors in air quality monitoring networks is still a much-debated topic among practitioners: they are much cheaper than the traditional air quality monitoring stations set up by public authorities (a few hundred dollars compared to a few tens of thousands of dollars), at the cost of lower accuracy and robustness. This paper presents a case study of using low-cost sensor measurements in an air quality prediction engine. The engine jointly predicts PM2.5 and PM10 concentrations in the United States at a very high resolution, in the range of a few dozen meters. It is fed with the measurements provided by official air quality monitoring stations, the measurements provided by a network of more than 4000 low-cost sensors across the country, and traffic estimates. We show that the use of low-cost sensor measurements improves the engine's accuracy very significantly. In particular, we derive a strong link between the density of low-cost sensors and the accuracy of the predictions: the more low-cost sensors there are in an area, the more accurate the predictions. As an illustration, in areas with the highest density of low-cost sensors, the low-cost sensor measurements bring a 25% and 15% improvement in the accuracy of PM2.5 and PM10 predictions respectively. Another strong conclusion is that in some areas with a high density of low-cost sensors, the engine performs better when fed with low-cost sensor measurements only than when fed with official monitoring station measurements only: this suggests that an air quality monitoring network composed of low-cost sensors is effective in monitoring air quality. This is a very important result, as such a monitoring network is much cheaper to set up.

PlumeNet: Large-Scale Air Quality Forecasting Using A Convolutional LSTM Network

Jun 14, 2020

Abstract: This paper presents an engine able to jointly forecast the concentrations of the main pollutants harming people's health: nitrogen dioxide (NO2), ozone (O3) and particulate matter (PM2.5 and PM10, the particles whose diameters are below 2.5 µm and 10 µm respectively). The forecasts are performed on a regular grid (the results presented in the paper are produced with a 0.5° resolution grid over Europe and the United States) with a neural network whose architecture includes convolutional LSTM blocks. The engine is fed with the most recent measurements available from air quality monitoring stations, weather forecasts, and air quality physical and chemical model (AQPCM) outputs. The engine can be used to produce air quality forecasts with long time horizons, and the experiments presented in this paper show that the 4-day forecasts beat simple benchmarks very significantly. A valuable advantage of the engine is that it does not need much computing power: the forecasts can be built in a few minutes on a standard GPU. Thus, they can be updated very frequently, as soon as new air quality measurements are available (generally every hour), which is not the case for the AQPCMs traditionally used for air quality forecasting. The engine described in this paper relies on the same principles as a prediction engine deployed and used by Plume Labs in several products that provide air quality data to individuals and businesses.

Real-Time Optimization Of Web Publisher RTB Revenues

Jun 12, 2020

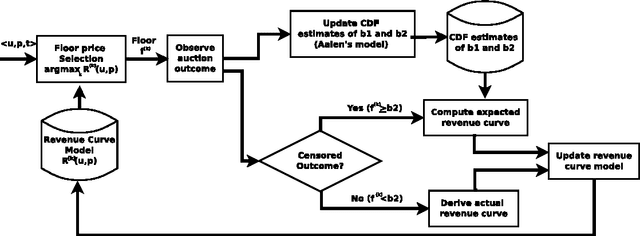

Abstract: This paper describes an engine to optimize web publisher revenues from second-price auctions. These auctions are widely used to sell online ad spaces in a mechanism called real-time bidding (RTB). Optimization within these auctions is crucial for web publishers, because setting appropriate reserve prices can significantly increase revenue. We consider a practical real-world setting where the only information available before an auction occurs consists of a user identifier and an ad placement identifier. The real-world challenges we had to tackle consist mainly of tracking the dependencies on both the user and the placement in a highly non-stationary environment, and of dealing with censored bid observations. These challenges led us to make the following design choices: (i) we adopted a relatively simple non-parametric regression model of auction revenue based on an incremental time-weighted matrix factorization, which implicitly builds adaptive user and placement profiles; (ii) we jointly used a non-parametric model to estimate the distribution of the first and second bids when they are censored, based on an online extension of Aalen's additive model. Our engine is a component of a deployed system handling hundreds of web publishers across the world, serving billions of ads a day to hundreds of millions of visitors. The engine is able to predict, for each auction, an optimal reserve price in approximately one millisecond, and yields a significant revenue increase for the web publishers.
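To illustrate why the reserve price matters in the second-price auctions mentioned in this abstract, here is a minimal sketch. It is not the engine described in the paper: it assumes both top bids are fully observed (no censoring), ignores user and placement context, and simply picks the best reserve on past auctions by grid search. The function names `auction_revenue` and `best_reserve` are hypothetical.

```python
def auction_revenue(first_bid, second_bid, reserve):
    """Publisher revenue for one second-price auction with a reserve price.

    Assumption: the impression is unsold (revenue 0) when the highest bid is
    below the reserve; otherwise the winner pays the larger of the second bid
    and the reserve.
    """
    if first_bid < reserve:
        return 0.0
    return max(second_bid, reserve)


def best_reserve(bid_pairs, candidates):
    """Return the candidate reserve that maximizes total revenue on past auctions.

    bid_pairs: iterable of (first_bid, second_bid) tuples, assumed fully observed.
    candidates: iterable of reserve prices to try.
    """
    pairs = list(bid_pairs)

    def total_revenue(reserve):
        return sum(auction_revenue(b1, b2, reserve) for b1, b2 in pairs)

    return max(candidates, key=total_revenue)


# Toy history of three auctions: (highest bid, second-highest bid).
history = [(5.0, 1.0), (4.0, 2.0), (3.0, 1.0)]
chosen = best_reserve(history, candidates=[0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
print(chosen)
```

On this toy history, a reserve set too low leaves money on the table (the winner pays only the second bid), while a reserve set too high leaves impressions unsold, which is the trade-off a reserve price optimizer has to resolve.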

Optimal Allocation of Real-Time-Bidding and Direct Campaigns

Jun 12, 2020

Abstract: In this paper, we consider the problem of optimizing the revenue a web publisher gets through real-time bidding (i.e. from ads sold in real-time auctions) and direct campaigns (i.e. from ads sold through contracts agreed in advance). We consider a setting where the publisher is able to bid in the real-time bidding auction for each impression. If it wins the auction, it chooses a direct campaign to deliver and displays the corresponding ad. This paper presents an algorithm to build an optimal strategy for the publisher to deliver its direct campaigns while maximizing its real-time bidding revenue. The optimal strategy gives a formula to determine the publisher's bid, as well as a way to choose the direct campaign to deliver if the publisher wins the auction, depending on the impression characteristics. The optimal strategy can be estimated from past auction data. The algorithm scales with the number of campaigns and the size of the dataset. This is a very important feature, as in practice a publisher may have thousands of active direct campaigns at the same time and would like to estimate an optimal strategy on billions of auctions. The algorithm is a key component of a system which is being developed, and which will be deployed on thousands of web publishers worldwide, helping them to efficiently serve billions of ads a day to hundreds of millions of visitors.

DeepPlume: Very High Resolution Real-Time Air Quality Mapping

Feb 14, 2020

Abstract: This paper presents an engine able to jointly predict the real-time concentrations of the main pollutants harming people's health: nitrogen dioxide (NO2), ozone (O3) and particulate matter (PM2.5 and PM10, the particles whose sizes are below 2.5 µm and 10 µm respectively). The engine covers a large part of the world and is fed with real-time measurements from official stations, atmospheric model forecasts, land cover data, road networks and traffic estimates to produce predictions at a very high resolution, in the range of a few dozen meters. This resolution makes the engine suited to very innovative applications such as street-level air quality mapping or air-quality-adjusted routing. Plume Labs has deployed a similar prediction engine to build several products that provide air quality data to individuals and businesses. For the sake of clarity and reproducibility, the engine presented here has been built specifically for this paper and differs quite significantly from the one used in Plume Labs' products. A major difference is in the data sources feeding the engine: in particular, this prediction engine does not include mobile sensor measurements.

Optimization of a SSP's Header Bidding Strategy using Thompson Sampling

Jul 09, 2018

Abstract: Over the last decade, digital media (web or app publishers) generalized the use of real-time ad auctions to sell their ad spaces. Multiple auction platforms, also called Supply-Side Platforms (SSPs), were created. Because of this multiplicity, publishers started to create competition between SSPs. In this setting, there are two successive auctions: a second-price auction in each SSP and a secondary, first-price auction, called the header bidding auction, between SSPs. In this paper, we consider an SSP competing with other SSPs for ad spaces. The SSP acts as an intermediary between an advertiser wanting to buy ad spaces and a web publisher wanting to sell its ad spaces, and needs to define a bidding strategy to be able to deliver as many ads as possible to the advertisers while spending as little as possible. The revenue optimization of this SSP can be written as a contextual bandit problem, where the context consists of the information available about the ad opportunity, such as properties of the internet user or of the ad placement. Using classical multi-armed bandit strategies (such as the original versions of UCB and EXP3) is inefficient in this setting and yields a low convergence speed, as the arms are highly correlated. In this paper we design and evaluate a version of the Thompson Sampling algorithm that easily takes this correlation into account. We combine this Bayesian algorithm with a particle filter, which makes it possible to handle non-stationarity by sequentially estimating the distribution of the highest bid to beat in order to win an auction. We apply this methodology to two real auction datasets, and show that it significantly outperforms more classical approaches. The strategy defined in this paper is being developed to be deployed on thousands of publishers worldwide.
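The idea of Thompson Sampling over a shared model of the highest bid to beat, so that all candidate bids (arms) are updated together, can be sketched as follows. This is a simplified illustration, not the paper's algorithm: it replaces the particle filter with a stationary conjugate Gamma-exponential model, assumes the highest competing bid is observed after every auction (no censoring), ignores context, and uses an assumed payoff of `(value - bid)` when the auction is won. The names `ThompsonBidder` and `simulate` are hypothetical.

```python
import math
import random


class ThompsonBidder:
    """Thompson Sampling for first-price bidding with one shared parameter.

    Assumption: the highest competing bid is Exponential(rate), with a
    Gamma(shape, rate) prior on that rate. Because every candidate bid depends
    on the same parameter, one observation informs all arms at once.
    """

    def __init__(self, value, bid_grid, prior_shape=1.0, prior_rate=1.0, rng=None):
        self.value = value                # assumed value of winning one impression
        self.bid_grid = list(bid_grid)    # candidate bids (the arms)
        self.shape = prior_shape          # Gamma posterior shape
        self.rate = prior_rate            # Gamma posterior rate
        self.rng = rng or random.Random(0)

    def choose_bid(self):
        # Draw one plausible rate from the posterior (gammavariate takes a scale).
        sampled_rate = self.rng.gammavariate(self.shape, 1.0 / self.rate)

        def expected_payoff(bid):
            win_probability = 1.0 - math.exp(-sampled_rate * bid)
            return (self.value - bid) * win_probability

        # Act greedily with respect to the sampled model.
        return max(self.bid_grid, key=expected_payoff)

    def update(self, highest_competing_bid):
        # Conjugate update for one exponential observation.
        self.shape += 1.0
        self.rate += highest_competing_bid


def simulate(n_rounds=2000, true_rate=2.0, seed=42):
    """Run the bidder against synthetic competing bids and return it."""
    environment = random.Random(seed + 1)
    grid = [i / 20 for i in range(21)]  # bids from 0.0 to 1.0
    bidder = ThompsonBidder(value=1.0, bid_grid=grid, rng=random.Random(seed))
    for _ in range(n_rounds):
        bidder.choose_bid()
        bidder.update(environment.expovariate(true_rate))
    return bidder


bidder = simulate()
print("posterior mean rate:", bidder.shape / bidder.rate)
print("next bid:", bidder.choose_bid())
```

Sampling a single parameter and then optimizing over the whole bid grid is what lets correlated arms share information; an independent-arms bandit would have to learn each bid level's win rate separately.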
