Giulio Pagnotta

Do You Trust Your Model? Emerging Malware Threats in the Deep Learning Ecosystem

Mar 06, 2024

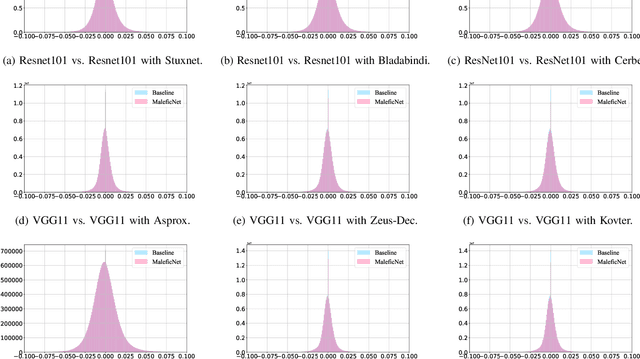

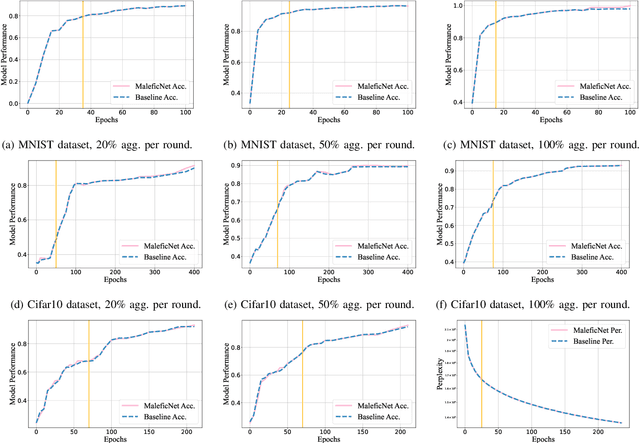

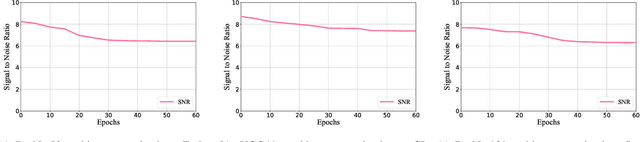

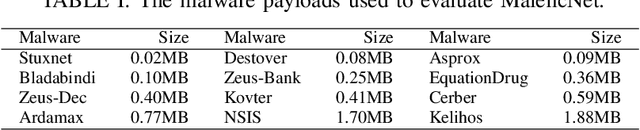

Abstract: Training high-quality deep learning models is a challenging task due to computational and technical requirements. A growing number of individuals, institutions, and companies rely on pre-trained, third-party models made available in public repositories. These models are often used directly or integrated into product pipelines with no particular precautions, since they are effectively just data in tensor form and considered safe. In this paper, we raise awareness of a new machine learning supply chain threat targeting neural networks. We introduce MaleficNet 2.0, a novel technique to embed self-extracting, self-executing malware in neural networks. MaleficNet 2.0 uses spread-spectrum channel coding combined with error correction techniques to inject malicious payloads in the parameters of deep neural networks. The MaleficNet 2.0 injection technique is stealthy, does not degrade the performance of the model, and is robust against removal techniques. We design our approach to work both in traditional and distributed learning settings such as Federated Learning, and demonstrate that it is effective even when a reduced number of bits is used for the model parameters. Finally, we implement a proof-of-concept self-extracting neural network malware using MaleficNet 2.0, demonstrating the practicality of the attack against a widely adopted machine learning framework. Our aim with this work is to raise awareness of these new, dangerous attacks both in the research community and industry, and we hope to encourage further research in mitigation techniques against such threats.

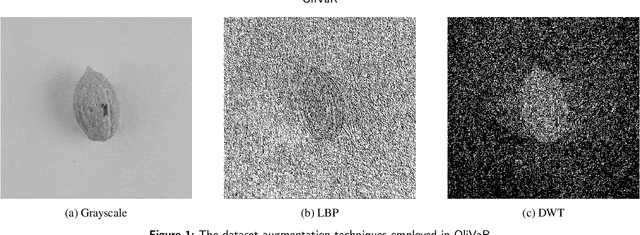

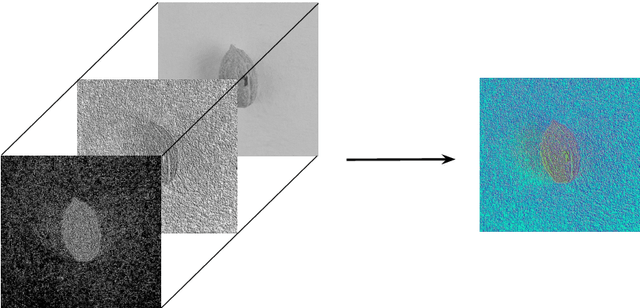

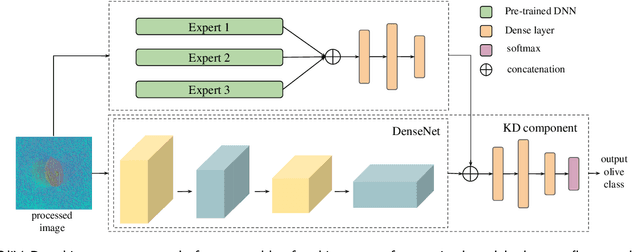

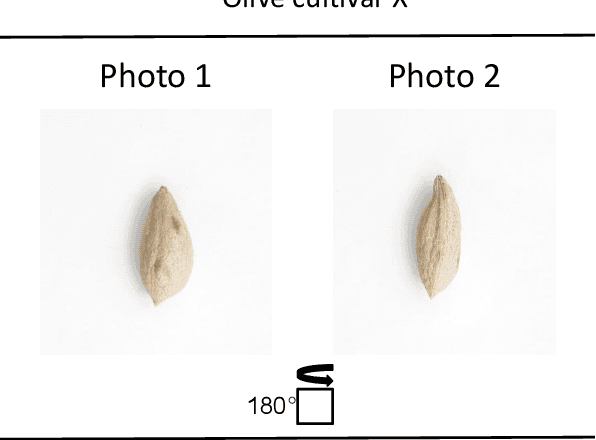

OliVaR: Improving Olive Variety Recognition using Deep Neural Networks

Mar 01, 2023

Abstract: The easy and accurate identification of varieties is fundamental in agriculture, especially in the olive sector, where more than 1200 olive varieties are currently known worldwide. Varietal misidentification leads to many potential problems for all the actors in the sector: farmers and nursery workers may establish the wrong variety, leading to its maladaptation in the field; olive oil and table olive producers may label and sell a non-authentic product; consumers may be misled; and breeders may commit errors during targeted crossings between different varieties. To date, the standard for varietal identification and certification consists of two methods: morphological classification and genetic analysis. The morphological classification consists of the visual pairwise comparison of different organs of the olive tree, where the most important organ is considered to be the endocarp. In contrast, different methods for genetic classification exist (RAPDs, SSR, and SNP). Both classification methods present advantages and disadvantages. Visual morphological classification requires highly specialized personnel and is prone to human error. Genetic identification methods are more accurate but incur a high cost and are difficult to implement. This paper introduces OliVaR, a novel approach to olive varietal identification. OliVaR uses a teacher-student deep learning architecture to learn the defining characteristics of the endocarp of each specific olive variety and perform classification. We construct what is, to the best of our knowledge, the largest olive variety dataset to date, comprising image data for 131 varieties from the Mediterranean basin. We thoroughly test OliVaR on this dataset and show that it correctly predicts olive varieties with over 86% accuracy.

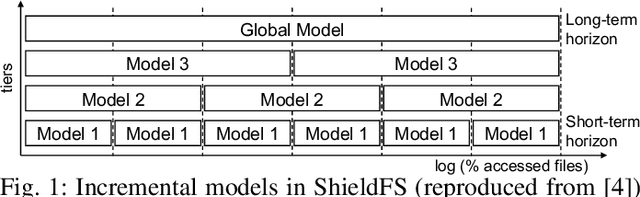

Minerva: A File-Based Ransomware Detector

Jan 26, 2023

Abstract: Ransomware is a rapidly evolving type of malware designed to encrypt user files on a device, making them inaccessible in order to exact a ransom. Ransomware attacks have resulted in billions of dollars in damages in recent years and are expected to cause hundreds of billions more in the next decade. With current state-of-the-art process-based detectors being heavily susceptible to evasion attacks, no comprehensive solution to this problem is available today. This paper presents Minerva, a new approach to ransomware detection. Unlike current methods focused on identifying ransomware based on process-level behavioral modeling, Minerva detects ransomware by building behavioral profiles of files based on all the operations they receive in a time window. Minerva addresses some of the critical challenges associated with process-based approaches, specifically their vulnerability to complex evasion attacks. Our evaluation of Minerva demonstrates its effectiveness in detecting ransomware attacks, including those that are able to bypass existing defenses. Our results show that Minerva identifies ransomware activity with an average accuracy of 99.45% and an average recall of 99.66%, with 99.97% of ransomware detected within 1 second.

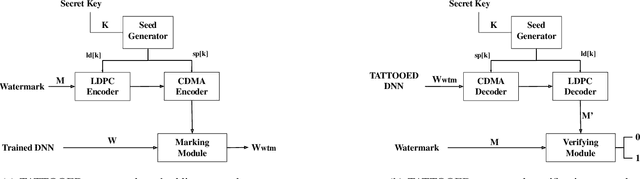

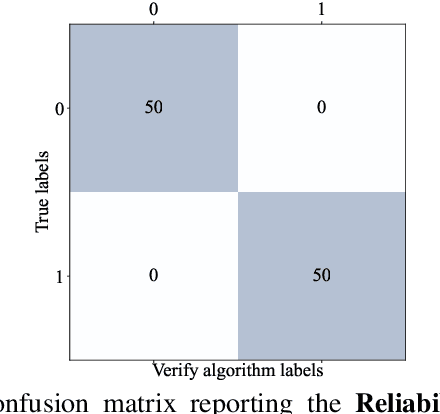

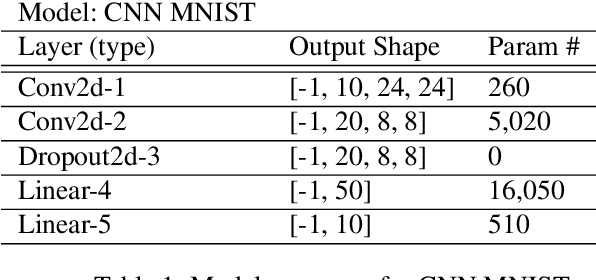

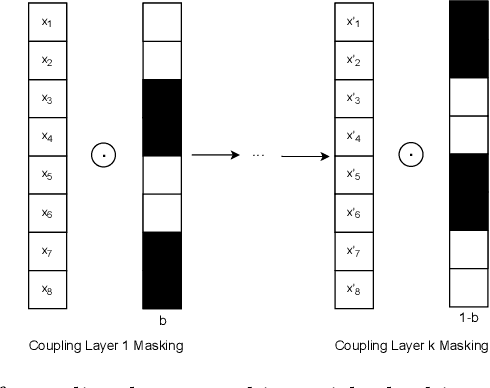

TATTOOED: A Robust Deep Neural Network Watermarking Scheme based on Spread-Spectrum Channel Coding

Feb 22, 2022

Abstract: The proliferation of deep learning applications in several areas has led to the rapid adoption of such solutions by an ever-growing number of institutions and companies. These entities' deep neural network (DNN) models are often trained on proprietary data. They require powerful computational resources, with the resulting DNN models being incorporated in the company's work pipeline or provided as a service. Being trained on proprietary information, these models provide a competitive edge for the owner company. At the same time, these models can be attractive to competitors (or malicious entities), which can employ state-of-the-art security attacks to obtain and use these models for their benefit. As these attacks are hard to prevent, it becomes imperative to have mechanisms that enable an affected entity to verify the ownership of its DNN with high confidence. This paper presents TATTOOED, a robust and efficient DNN watermarking technique based on spread-spectrum channel coding. TATTOOED has a negligible effect on the performance of the DNN model and is robust against several state-of-the-art mechanisms used to remove watermarks from DNNs. Our results show that TATTOOED is robust to such removal techniques even in extreme scenarios. For example, if removal techniques such as fine-tuning and parameter pruning change as much as 99% of the model parameters, the TATTOOED watermark is still present in full in the DNN model and ensures ownership verification.
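
The general idea behind spread-spectrum embedding can be illustrated with a minimal sketch. This is a generic CDMA-style scheme, not TATTOOED's published algorithm: the function names, the key, the gain `gamma`, and the random vector standing in for flattened DNN parameters are all assumptions made for illustration, and error correction is omitted.

```python
import numpy as np

def spreading_codes(key, n_bits, n_params):
    """Derive one pseudo-random +/-1 code per payload bit from a secret key."""
    rng = np.random.default_rng(key)
    return rng.choice([-1.0, 1.0], size=(n_bits, n_params))

def embed(weights, bits, key, gamma=0.002):
    """Spread every bit over all parameters and add the sum to the weights."""
    symbols = 2.0 * np.asarray(bits) - 1.0            # map {0, 1} -> {-1, +1}
    codes = spreading_codes(key, len(bits), weights.size)
    return weights + gamma * (symbols @ codes)

def extract(weights, n_bits, key):
    """Recover each bit from the sign of its code's correlation with the weights."""
    codes = spreading_codes(key, n_bits, weights.size)
    return (codes @ weights > 0).astype(int)

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=100_000)         # hypothetical flattened parameters
watermark = rng.integers(0, 2, size=16)               # hypothetical 16-bit mark
marked = embed(weights, watermark, key=1234)

# Crude stand-in for a removal attempt: perturb every single parameter.
perturbed = marked + rng.normal(0.0, 0.01, size=marked.size)
recovered = extract(perturbed, len(watermark), key=1234)
print(np.array_equal(recovered, watermark))
```

Because each bit is carried by a correlation over all parameters, changing many individual parameters by small amounts leaves the sign of that correlation intact in this toy setting; `gamma` trades off how visible the mark is against how reliably it can be read back.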

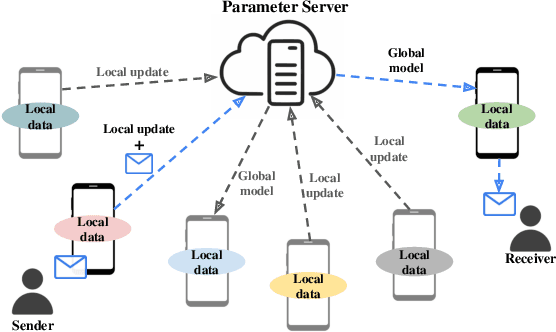

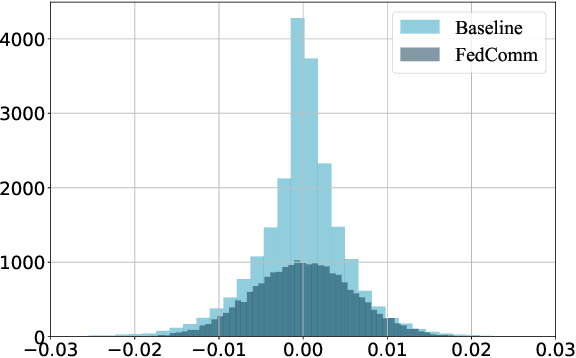

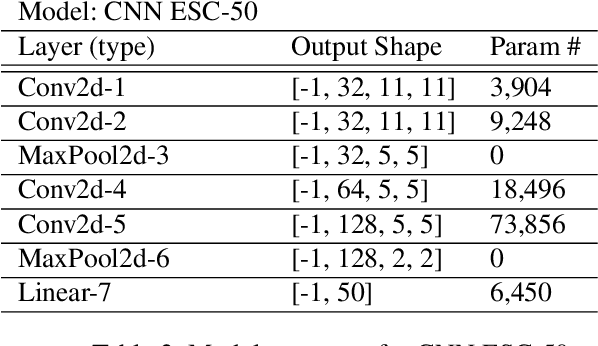

FedComm: Federated Learning as a Medium for Covert Communication

Jan 21, 2022

Abstract: Proposed as a solution to mitigate the privacy implications related to the adoption of deep learning solutions, Federated Learning (FL) enables large numbers of participants to successfully train deep neural networks without having to reveal the actual private training data. To date, a substantial amount of research has investigated the security and privacy properties of FL, resulting in a plethora of innovative attack and defense strategies. This paper thoroughly investigates the communication capabilities of an FL scheme. In particular, we show that a party involved in the FL learning process can use FL as a covert communication medium to send an arbitrary message. We introduce FedComm, a novel covert-communication technique that enables robust sharing and transfer of targeted payloads within the FL framework. Our extensive theoretical and empirical evaluations show that FedComm provides a stealthy communication channel, with minimal disruptions to the training process. Our experiments show that FedComm allowed us to successfully deliver 100% of a payload on the order of kilobits before the FL procedure converges. Our evaluation also shows that FedComm is independent of the application domain and the neural network architecture used by the underlying FL scheme.

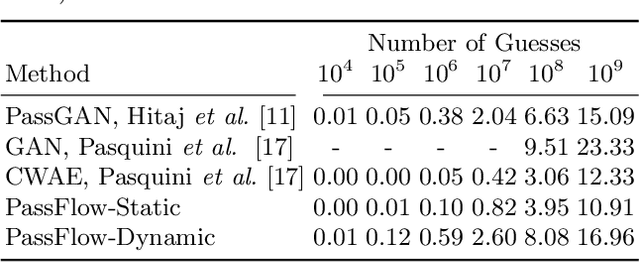

PassFlow: Guessing Passwords with Generative Flows

May 13, 2021

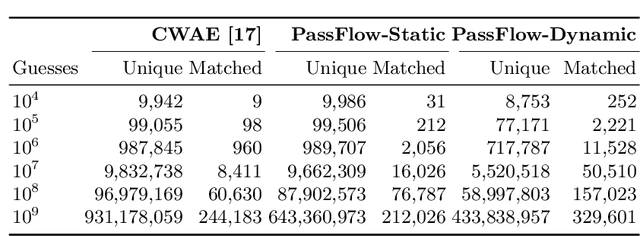

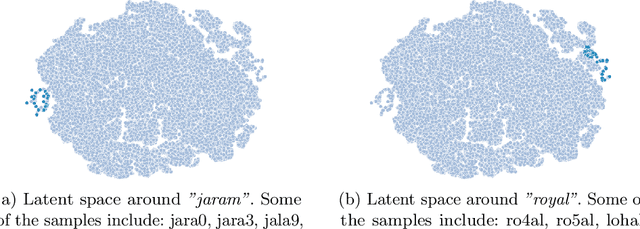

Abstract: Recent advances in generative machine learning models have rekindled research interest in the area of password guessing. Data-driven password guessing approaches based on GANs, language models and deep latent variable models show impressive generalization performance and offer compelling properties for the task of password guessing. In this paper, we propose a flow-based generative model approach to password guessing. Flow-based models allow for precise log-likelihood computation and optimization, which enables exact latent variable inference. Additionally, flow-based models provide meaningful latent space representation, which enables operations such as exploration of specific subspaces of the latent space and interpolation. We demonstrate the applicability of generative flows to the context of password guessing, departing from previous applications of flow networks which are mainly limited to the continuous space of image generation. We show that the above-mentioned properties allow flow-based models to outperform deep latent variable model approaches and remain competitive with state-of-the-art GANs in the password guessing task, while using a training set that is orders of magnitude smaller than that of previous art. Furthermore, a qualitative analysis of the generated samples shows that flow-based networks are able to accurately model the original password distribution, with even non-matched samples closely resembling human-like passwords.
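
The two properties named above, exact log-likelihood and exact latent inference, follow from invertibility and the change-of-variables formula. The sketch below shows them on the simplest possible flow, a single element-wise affine map over continuous toy vectors. It is not the PassFlow architecture; the class name, parameters, and inputs are assumptions chosen only to make the mechanics visible.

```python
import numpy as np

class AffineFlow:
    """Invertible map z = (x - shift) / scale with a standard normal prior on z."""

    def __init__(self, shift, scale):
        self.shift = np.asarray(shift, dtype=float)
        self.scale = np.asarray(scale, dtype=float)   # entries must be non-zero

    def forward(self, x):
        """Data -> latent (exact inference), plus log|det dz/dx|."""
        z = (x - self.shift) / self.scale
        log_det = -np.sum(np.log(np.abs(self.scale)))
        return z, log_det

    def inverse(self, z):
        """Latent -> data (sampling direction)."""
        return z * self.scale + self.shift

    def log_prob(self, x):
        """Exact log-likelihood: log p_z(f(x)) + log|det df/dx|."""
        z, log_det = self.forward(x)
        log_prior = -0.5 * np.sum(z ** 2 + np.log(2.0 * np.pi), axis=-1)
        return log_prior + log_det

flow = AffineFlow(shift=[1.0, -2.0], scale=[0.5, 3.0])
x_a = np.array([1.2, -1.0])
x_b = np.array([0.4, 2.5])

z_a, _ = flow.forward(x_a)
z_b, _ = flow.forward(x_b)
midpoint = flow.inverse(0.5 * (z_a + z_b))            # interpolate in latent space
print(flow.log_prob(x_a), midpoint)
```

With an affine map the latent midpoint decodes to the data-space midpoint; a deeper, non-linear flow keeps the same `forward`/`inverse`/`log_prob` interface, but latent interpolation then traces a curved path through data space.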

Reliable Detection of Compressed and Encrypted Data

Mar 31, 2021

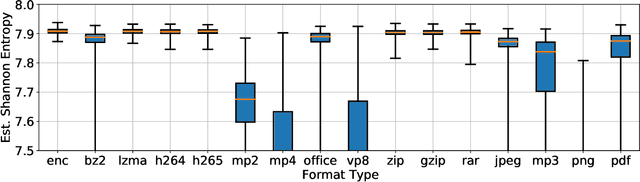

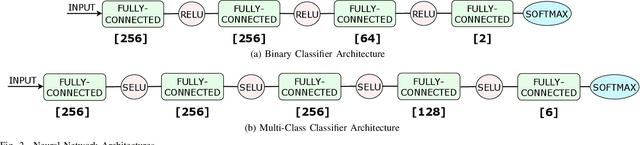

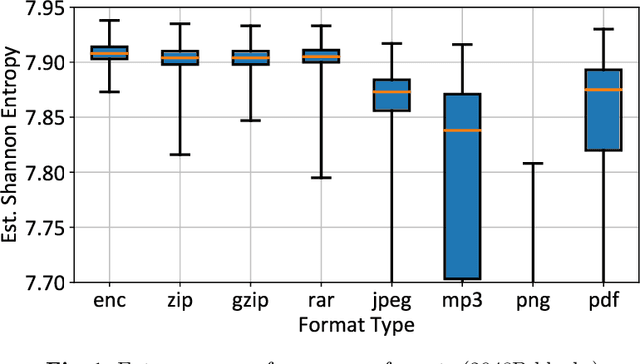

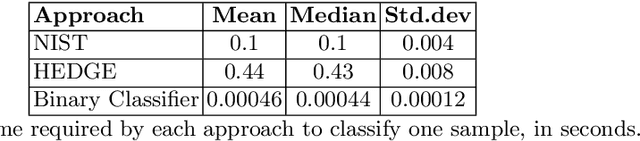

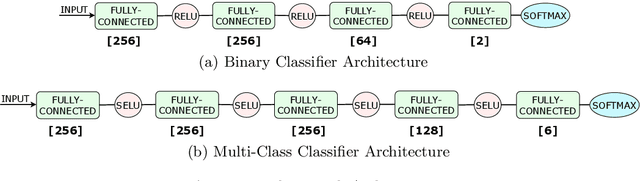

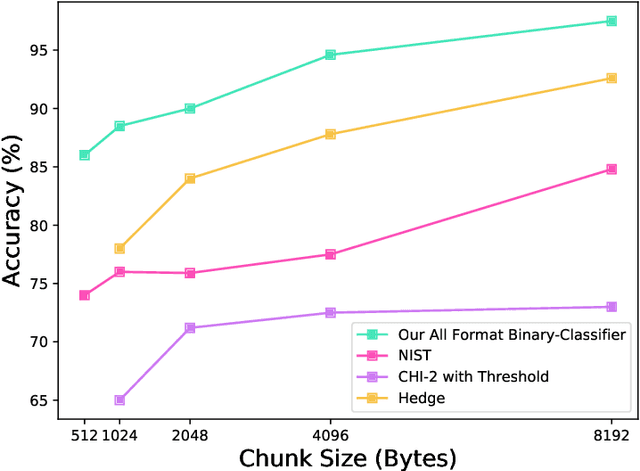

Abstract: Several cybersecurity domains, such as ransomware detection, forensics and data analysis, require methods to reliably identify encrypted data fragments. Typically, current approaches employ statistics derived from byte-level distribution, such as entropy estimation, to identify encrypted fragments. However, modern content types use compression techniques which alter data distribution, pushing it closer to the uniform distribution. The result is that current approaches exhibit unreliable encryption detection performance when compressed data appears in the dataset. Furthermore, proposed approaches are typically evaluated over few data types and fragment sizes, making it hard to assess their practical applicability. This paper compares existing statistical tests on a large, standardized dataset and shows that current approaches consistently fail to distinguish encrypted and compressed data on both small and large fragment sizes. We address these shortcomings and design EnCoD, a learning-based classifier which can reliably distinguish compressed and encrypted data. We evaluate EnCoD on a dataset of 16 different file types and fragment sizes ranging from 512B to 8KB. Our results highlight that EnCoD outperforms current approaches by a wide margin, with accuracy ranging from ~82% for 512B fragments up to ~92% for 8KB data fragments. Moreover, EnCoD can pinpoint the exact format of a given data fragment, rather than performing only binary classification like previous approaches.

Evaluating the Robustness of Geometry-Aware Instance-Reweighted Adversarial Training

Mar 05, 2021

Abstract: In this technical report, we evaluate the adversarial robustness of a very recent method called "Geometry-aware Instance-reweighted Adversarial Training" (GAIRAT) [7]. GAIRAT reports state-of-the-art results on defenses to adversarial attacks on the CIFAR-10 dataset. In fact, we find that a network trained with this method, while showing an improvement over regular adversarial training (AT), is biasing the model towards certain samples by re-scaling the loss. Indeed, this leads the model to be susceptible to attacks that scale the logits. The original model shows an accuracy of 59% under AutoAttack when trained with additional data with pseudo-labels. We provide an analysis that shows the opposite. In particular, we craft a PGD attack multiplying the logits by a positive scalar that decreases the GAIRAT accuracy from 55% to 44%, when trained solely on CIFAR-10. In this report, we rigorously evaluate the model and provide insights into the reasons behind the vulnerability of GAIRAT to this adversarial attack. The code to reproduce our evaluation is made available at https://github.com/giuxhub/GAIRAT-LSA
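
To make concrete where the positive scalar enters a PGD attack, here is a minimal NumPy sketch on a toy linear softmax classifier. It is not the evaluation code from the repository above: the model, the scalar `alpha`, the step size, and the perturbation budget are all assumed values chosen for illustration.

```python
import numpy as np

def scaled_ce_loss_and_grad(W, b, x, y, alpha):
    """Cross-entropy on logits multiplied by alpha, and its gradient w.r.t. x."""
    z = alpha * (W @ x + b)
    shifted = z - z.max()                              # numerically stable softmax
    p = np.exp(shifted) / np.exp(shifted).sum()
    loss = np.log(np.exp(shifted).sum()) + z.max() - z[y]
    onehot = np.zeros_like(p)
    onehot[y] = 1.0
    return loss, alpha * (W.T @ (p - onehot))

def pgd_logit_scaling(W, b, x0, y, eps=8 / 255, step=2 / 255, iters=10, alpha=10.0):
    """L-infinity PGD that maximizes the loss computed on alpha-scaled logits."""
    x = x0.copy()
    for _ in range(iters):
        _, grad = scaled_ce_loss_and_grad(W, b, x, y, alpha)
        x = x + step * np.sign(grad)                   # signed gradient ascent step
        x = np.clip(x, x0 - eps, x0 + eps)             # project back into the eps-ball
        x = np.clip(x, 0.0, 1.0)                       # stay in the valid input range
    return x

rng = np.random.default_rng(0)
W, b = rng.normal(size=(10, 32)), rng.normal(size=10)  # hypothetical toy classifier
x0 = rng.uniform(0.0, 1.0, size=32)
y = int(np.argmax(W @ x0 + b))                         # the toy model's clean prediction
x_adv = pgd_logit_scaling(W, b, x0, y)

loss_clean, _ = scaled_ce_loss_and_grad(W, b, x0, y, alpha=10.0)
loss_adv, _ = scaled_ce_loss_and_grad(W, b, x_adv, y, alpha=10.0)
print(loss_clean, loss_adv)
```

The only difference from a standard PGD loop is the factor `alpha` applied to the logits before the softmax, which rescales the loss surface the attacker follows without changing the model's predictions.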

EnCoD: Distinguishing Compressed and Encrypted File Fragments

Oct 15, 2020

Abstract: Reliable identification of encrypted file fragments is a requirement for several security applications, including ransomware detection, digital forensics, and traffic analysis. A popular approach consists of estimating high entropy as a proxy for randomness. However, many modern content types (e.g., office documents and media files) are highly compressed for storage and transmission efficiency. Compression algorithms also output high-entropy data, thus reducing the accuracy of entropy-based encryption detectors. Over the years, a variety of approaches have been proposed to distinguish encrypted file fragments from high-entropy compressed fragments. However, these approaches are typically only evaluated over a few select data types and fragment sizes, which makes a fair assessment of their practical applicability impossible. This paper aims to close this gap by comparing existing statistical tests on a large, standardized dataset. Our results show that current approaches cannot reliably tell apart encryption and compression, even for large fragment sizes. To address this issue, we design EnCoD, a learning-based classifier which can reliably distinguish compressed and encrypted data, starting with fragments as small as 512 bytes. We evaluate EnCoD against current approaches over a large dataset of different data types, showing that it outperforms the current state of the art for most considered fragment sizes and data types.
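
The entropy-based baseline the abstract refers to can be sketched in a few lines. This is the generic byte-histogram Shannon entropy, not EnCoD itself; the sample inputs are assumptions, with `os.urandom` standing in for ciphertext and a zlib stream standing in for a compressed file fragment.

```python
import math
import os
import zlib
from collections import Counter

def byte_entropy(fragment: bytes) -> float:
    """Shannon entropy of the byte histogram, in bits per byte (0.0 to 8.0)."""
    if not fragment:
        return 0.0
    total = len(fragment)
    return -sum((n / total) * math.log2(n / total) for n in Counter(fragment).values())

text = b" ".join(str(i).encode() for i in range(50_000))   # compressible sample input
compressed = zlib.compress(text, 9)[:8192]                 # stand-in compressed fragment
random_like = os.urandom(8192)                             # stand-in for ciphertext

for name, frag in [("plain text", text[:8192]),
                   ("compressed", compressed),
                   ("random", random_like)]:
    print(f"{name:>10}: {byte_entropy(frag):.3f} bits/byte")
```

Running this on real data typically shows both the compressed and the random fragment close to the 8 bits/byte ceiling, which is why a single entropy threshold struggles to separate them.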

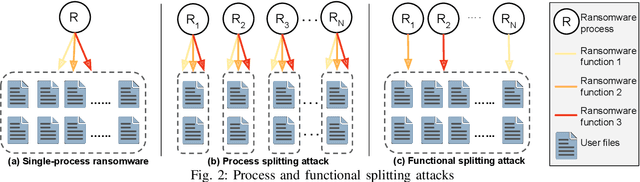

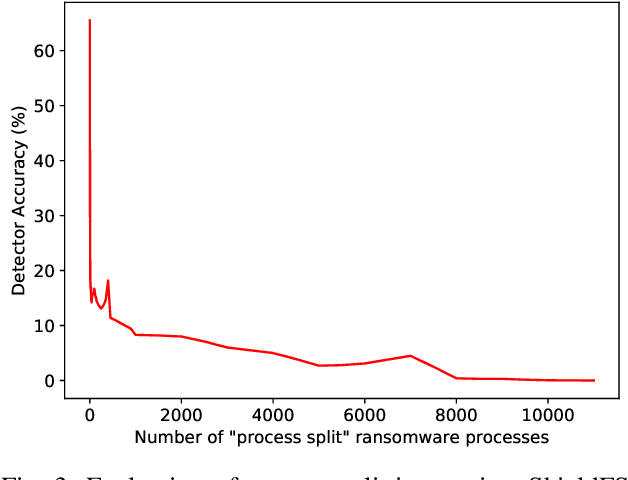

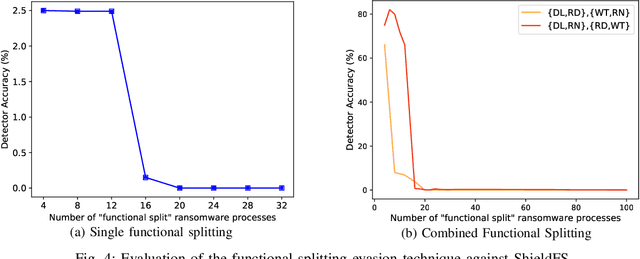

The Naked Sun: Malicious Cooperation Between Benign-Looking Processes

Nov 06, 2019

Abstract: Recent progress in machine learning has generated promising results in behavioral malware detection. Behavioral modeling identifies malicious processes via features derived from their runtime behavior. Behavioral features hold great promise as they are intrinsically related to the functioning of each malware, and are therefore considered difficult to evade. Indeed, while a significant body of results exists on evasion of static malware features, evasion of dynamic features has seen limited work. This paper thoroughly examines the robustness of behavioral malware detectors to evasion, focusing particularly on anti-ransomware evasion. We choose ransomware as its behavior tends to differ significantly from that of benign processes, making it a low-hanging fruit for behavioral detection (and a difficult candidate for evasion). Our analysis identifies a set of novel attacks that distribute the overall malware workload across a small set of cooperating processes to avoid the generation of significant behavioral features. Our most effective attack decreases the accuracy of a state-of-the-art classifier from 98.6% to 0% using only 18 cooperating processes. Furthermore, we show our attacks to be effective against commercial ransomware detectors even in a black-box setting.
