Giovanni Saponaro

Beyond the Self: Using Grounded Affordances to Interpret and Describe Others' Actions

Feb 26, 2019

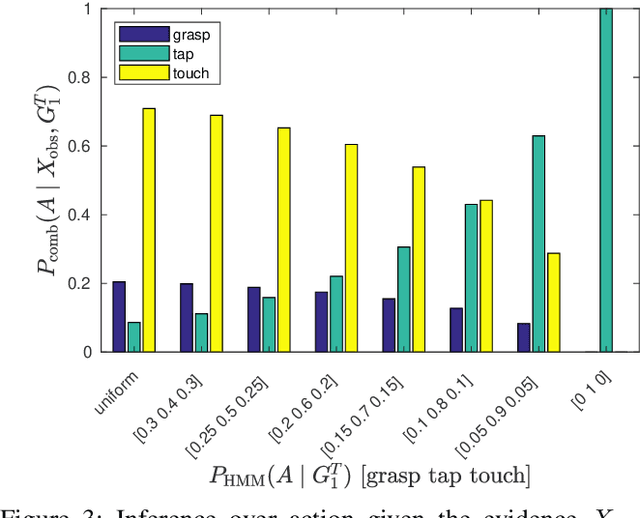

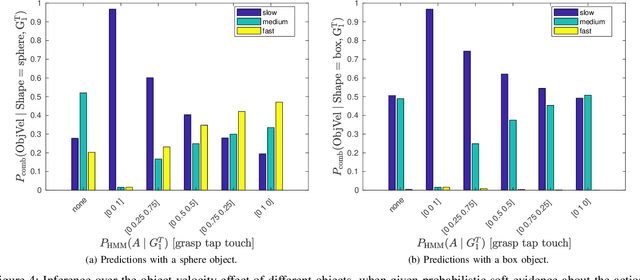

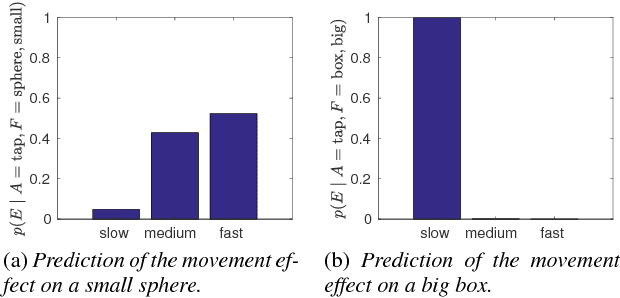

Abstract: We propose a developmental approach that allows a robot to interpret and describe the actions of human agents by reusing previous experience. The robot first learns the association between words and object affordances by manipulating the objects in its environment. It then uses this information to learn a mapping between its own actions and those performed by a human in a shared environment. Finally, it fuses the information from these two models to interpret and describe human actions in light of its own experience. In our experiments, we show that the model can be used flexibly to do inference on different aspects of the scene. We can predict the effects of an action on the basis of object properties. We can revise the belief that a certain action occurred, given the observed effects of the human action. In the manner of early action recognition, we can anticipate the effects when the action has only been partially observed. By estimating the probability of words given the evidence and feeding them into a pre-defined grammar, we can generate relevant descriptions of the scene. We believe that this is a step towards providing robots with the fundamental skills to engage in social collaboration with humans.
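
The three kinds of query listed above (predicting effects, revising the belief about the action, and estimating word probabilities) can be illustrated with a toy discrete Bayesian network. This is a minimal sketch under stated assumptions, not the authors' model: the variables (`ACTIONS`, `SHAPES`, `EFFECTS`), every probability table, the verbs, and the one-sentence template standing in for the grammar are all hypothetical.

```python
# Toy discrete model; all variables and numbers are hypothetical.
ACTIONS = ["grasp", "tap", "touch"]

# Hypothetical uniform prior over actions.
P_ACTION = {a: 1 / len(ACTIONS) for a in ACTIONS}

# Hypothetical CPT P(effect | action, shape).
P_EFFECT = {
    ("grasp", "sphere"): {"moves": 0.9, "still": 0.1},
    ("grasp", "box"): {"moves": 0.8, "still": 0.2},
    ("tap", "sphere"): {"moves": 0.95, "still": 0.05},
    ("tap", "box"): {"moves": 0.4, "still": 0.6},
    ("touch", "sphere"): {"moves": 0.2, "still": 0.8},
    ("touch", "box"): {"moves": 0.05, "still": 0.95},
}

# Hypothetical P(word | action): verbs the robot associates with its actions.
P_WORD = {
    "grasp": {"picks up": 0.9, "pushes": 0.1},
    "tap": {"picks up": 0.1, "pushes": 0.9},
    "touch": {"touches": 0.9, "pushes": 0.1},
}


def predict_effect(action, shape):
    """Forward query: P(effect | action, shape), read from the CPT."""
    return dict(P_EFFECT[(action, shape)])


def posterior_action(shape, effect):
    """Backward query via Bayes' rule: P(action | shape, effect)."""
    unnorm = {a: P_ACTION[a] * P_EFFECT[(a, shape)][effect] for a in ACTIONS}
    z = sum(unnorm.values())
    return {a: p / z for a, p in unnorm.items()}


def word_distribution(shape, effect):
    """P(word | shape, effect) = sum over a of P(word | a) * P(a | shape, effect)."""
    dist = {}
    for a, pa in posterior_action(shape, effect).items():
        for w, pw in P_WORD[a].items():
            dist[w] = dist.get(w, 0.0) + pw * pa
    return dist


def describe(shape, effect):
    """Fill a fixed template (a stand-in for a real grammar) with the most probable verb."""
    dist = word_distribution(shape, effect)
    verb = max(dist, key=dist.get)
    return f"The human {verb} the {shape}."


if __name__ == "__main__":
    print(predict_effect("tap", "box"))
    print(posterior_action("sphere", "moves"))
    print(describe("sphere", "moves"))
```

With these made-up tables, observing a sphere that moves makes `tap` the most probable action, and `describe("sphere", "moves")` returns "The human pushes the sphere." Swapping in learned tables would leave the query code unchanged.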

Learning at the Ends: From Hand to Tool Affordances in Humanoid Robots

Apr 09, 2018

Abstract: One of the open challenges in designing robots that operate successfully in the unpredictable human environment is enabling them to predict what actions they can perform on objects and what the effects of those actions will be, i.e., the ability to perceive object affordances. Since modeling all possible world interactions is infeasible, learning from experience is required, which in turn requires collecting a large amount of experience (i.e., training data). Typically, a manipulative robot operates on external objects by using its own hands (or similar end-effectors), but in some cases the use of tools may be desirable. Nevertheless, it is reasonable to assume that while a robot can collect many sensorimotor experiences using its own hands, this cannot happen for all possible human-made tools. Therefore, in this paper we investigate the developmental transition from hand to tool affordances: which sensorimotor skills that a robot has acquired with its bare hands can be employed for tool use? By employing a visual and motor imagination mechanism to represent different hand postures compactly, we propose a probabilistic model to learn hand affordances, and we show how this model can generalize to estimate the affordances of previously unseen tools, ultimately supporting planning, decision-making and tool selection tasks in humanoid robots. We present experimental results with the iCub humanoid robot, and we publicly release the collected sensorimotor data in the form of a hand posture affordances dataset.
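
The hand-to-tool idea above can be sketched as estimating a conditional probability table from hand trials and then querying it with a tool described in the same feature space. This is a sketch under heavy assumptions, not the paper's model: it assumes hand postures and tools are both summarized by one hypothetical discrete descriptor (`"short"` / `"long"`), uses add-alpha (Laplace) smoothing as a swapped-in estimator, and the training triples and tool names are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical discretized effect values (e.g. binned object displacement).
EFFECTS = ("no_move", "small_move", "large_move")


def fit_cpt(samples, alpha=1.0):
    """Estimate P(effect | action, descriptor) from (action, descriptor, effect) triples.

    Add-alpha smoothing keeps effects never seen in training at non-zero probability.
    """
    counts = defaultdict(Counter)
    for action, descriptor, effect in samples:
        counts[(action, descriptor)][effect] += 1
    cpt = {}
    for key, c in counts.items():
        total = sum(c.values()) + alpha * len(EFFECTS)
        cpt[key] = {e: (c[e] + alpha) / total for e in EFFECTS}
    return cpt


def query(cpt, action, descriptor):
    """Return P(effect | action, descriptor); uniform if the pair was never observed."""
    return cpt.get((action, descriptor), {e: 1 / len(EFFECTS) for e in EFFECTS})


def select_tool(cpt, action, tools, desired_effect):
    """Pick the tool whose (hypothetical) descriptor makes the desired effect most likely."""
    return max(tools, key=lambda t: query(cpt, action, tools[t])[desired_effect])


# Hypothetical bare-hand trials: a "long" posture (straight fingers) vs a "short" one (fist).
HAND_SAMPLES = (
    [("draw", "long", "large_move")] * 6
    + [("draw", "long", "small_move")] * 2
    + [("draw", "short", "no_move")] * 5
    + [("draw", "short", "small_move")] * 3
)

if __name__ == "__main__":
    cpt = fit_cpt(HAND_SAMPLES)
    tools = {"rake": "long", "short stick": "short"}  # hypothetical unseen tools
    print(query(cpt, "draw", tools["rake"]))
    print(select_tool(cpt, "draw", tools, "large_move"))
```

Here the rake is never used in training; it is only mapped onto the descriptor learned from hand postures, so `select_tool` prefers it for a large pulling effect. The quality of such a transfer depends entirely on how well the shared descriptor captures what matters for the effect.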

Interactive Robot Learning of Gestures, Language and Affordances

Nov 24, 2017

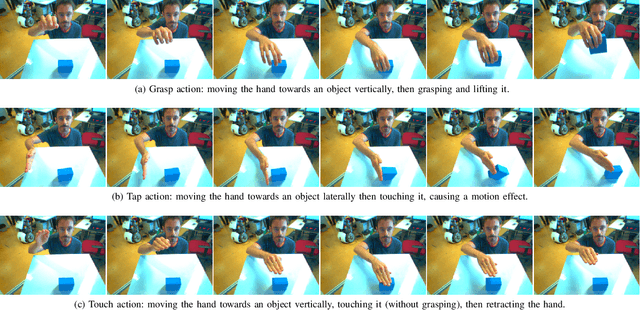

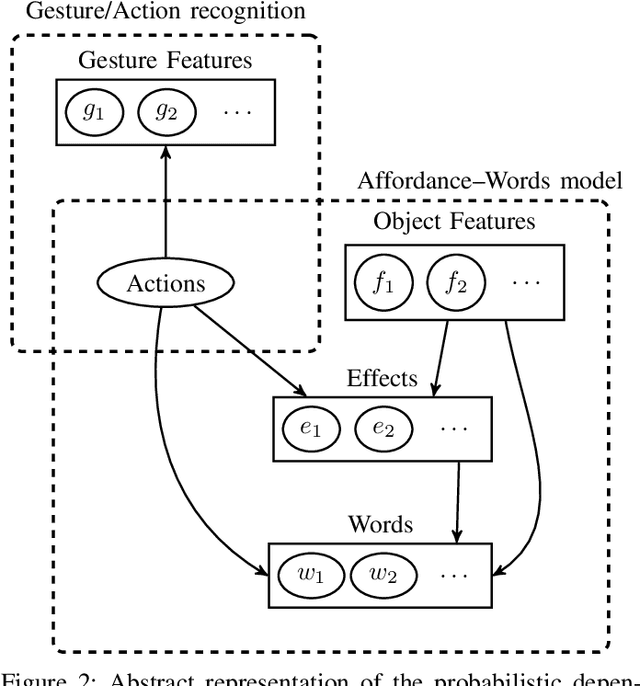

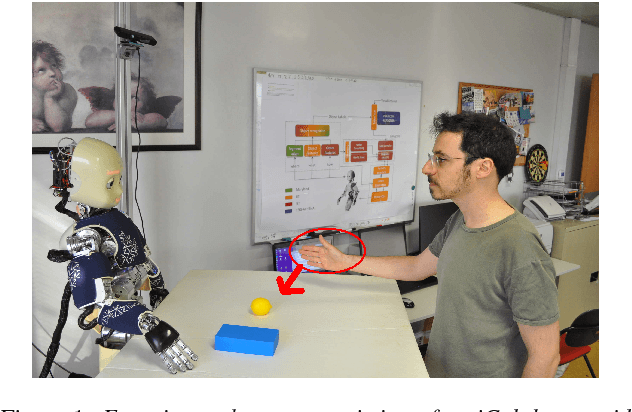

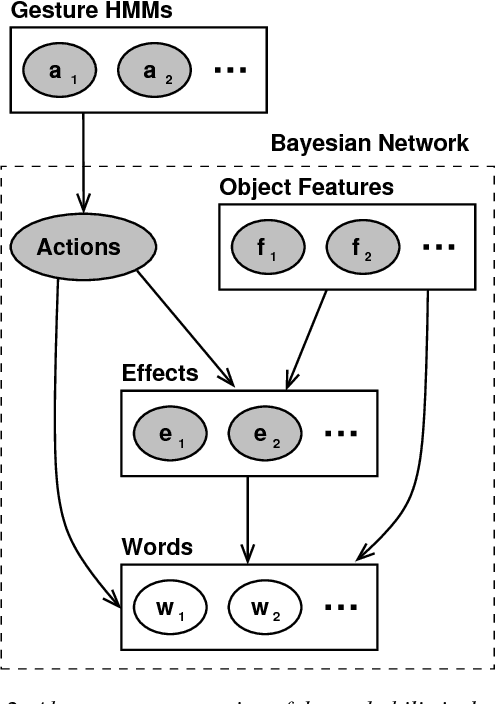

Abstract: A growing field in robotics and Artificial Intelligence (AI) research is human-robot collaboration, whose target is to enable effective teamwork between humans and robots. However, in many situations human teams are still superior to human-robot teams, primarily because human teams can easily agree on a common goal with language, and the individual members observe each other effectively, leveraging their shared motor repertoire and sensorimotor resources. This paper shows that for cognitive robots it is possible, and indeed fruitful, to combine knowledge acquired from interacting with elements of the environment (affordance exploration) with the probabilistic observation of another agent's actions. We propose a model that unites (i) learning robot affordances and word descriptions with (ii) statistical recognition of human gestures with vision sensors. We discuss theoretical motivations and possible implementations, and we show initial results which highlight that, after having acquired knowledge of its surrounding environment, a humanoid robot can generalize this knowledge to the case when it observes another agent (human partner) performing the same motor actions previously executed during training.

* Code available at https://github.com/gsaponaro/glu-gestures
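
One simple way to connect a gesture recognizer to a robot-learned affordance model is to treat the recognizer's posterior over actions as soft evidence on the action variable. The sketch below is illustrative only and is not taken from the linked repository. It assumes that the effect is independent of the visual gesture given the action, that the recognizer yields one likelihood per action (e.g. one HMM per action, an assumption here), and that the table `P_EFFECT` and all numbers are hypothetical.

```python
ACTIONS = ("grasp", "tap", "touch")
EFFECTS = ("moves", "still")

# Hypothetical robot-learned CPT P(effect | action) for one fixed object.
P_EFFECT = {
    "grasp": {"moves": 0.8, "still": 0.2},
    "tap": {"moves": 0.6, "still": 0.4},
    "touch": {"moves": 0.1, "still": 0.9},
}


def normalize(scores):
    """Rescale non-negative scores so they sum to one."""
    z = sum(scores.values())
    return {k: v / z for k, v in scores.items()}


def gesture_posterior(likelihoods, prior=None):
    """P(action | gesture) from per-action gesture likelihoods and an action prior."""
    prior = prior or {a: 1 / len(ACTIONS) for a in ACTIONS}
    return normalize({a: prior[a] * likelihoods[a] for a in ACTIONS})


def predict_effect(action_post):
    """Soft evidence: P(effect | gesture) = sum over a of P(effect | a) * P(a | gesture)."""
    return {e: sum(P_EFFECT[a][e] * action_post[a] for a in ACTIONS) for e in EFFECTS}


def revise_action(action_post, effect):
    """P(action | gesture, effect), proportional to P(effect | action) * P(action | gesture)."""
    return normalize({a: action_post[a] * P_EFFECT[a][effect] for a in ACTIONS})


if __name__ == "__main__":
    # Hypothetical likelihoods for a partially observed gesture (still ambiguous).
    post = gesture_posterior({"grasp": 0.5, "tap": 0.4, "touch": 0.1})
    print(predict_effect(post))          # anticipate the effect before the action ends
    print(revise_action(post, "still"))  # revise the action belief once the effect is seen
```

With these numbers the ambiguous gesture favors `grasp`, but observing that the object stays still shifts the belief towards `tap`. Early in a gesture the likelihoods are flatter, so the predicted effect distribution is less peaked; as more of the gesture is observed, it sharpens.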
