Giorgio Nanfa

SNN under Attack: are Spiking Deep Belief Networks vulnerable to Adversarial Examples?

Feb 04, 2019

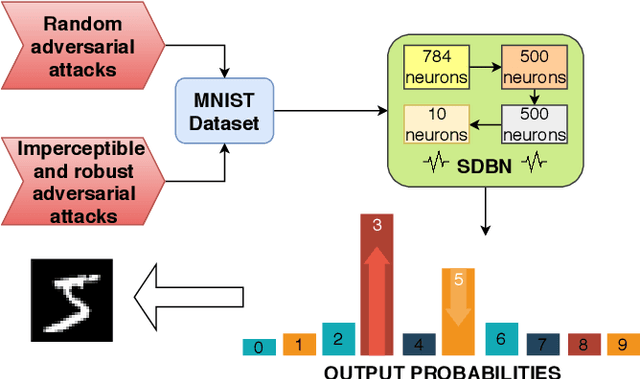

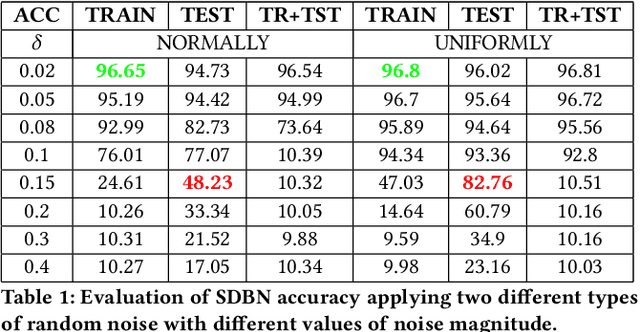

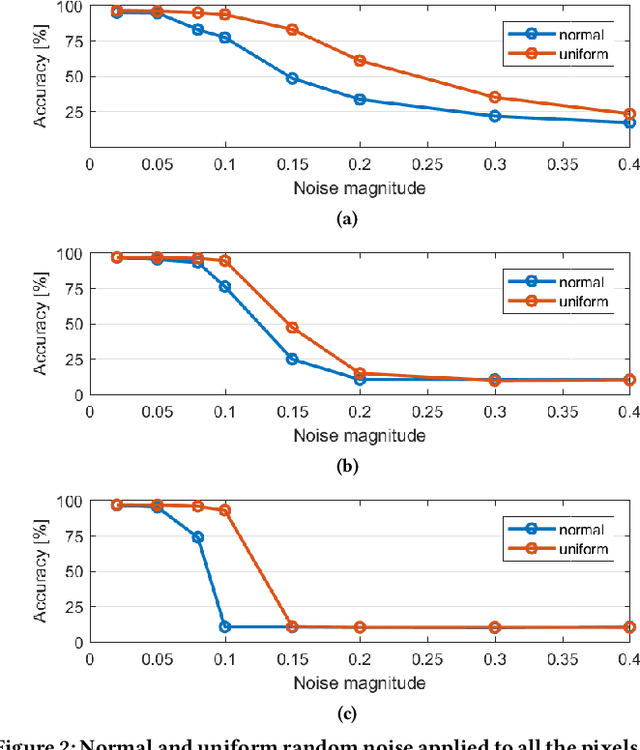

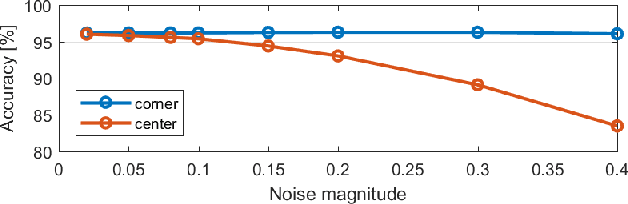

Abstract: Recently, many adversarial examples have emerged for Deep Neural Networks (DNNs), causing misclassifications. However, in-depth work still needs to be performed to demonstrate such attacks and security vulnerabilities for spiking neural networks (SNNs), i.e., the third generation of NNs. This paper aims to address two fundamental questions: "Are SNNs vulnerable to adversarial attacks as well?" and "If yes, to what extent?" Using a Spiking Deep Belief Network (SDBN) for MNIST database classification, we show that the SNN accuracy decreases in accordance with the noise magnitude in data-poisoning random attacks applied to the test images. Moreover, the generalization capabilities of SDBNs increase when noise is applied to the training images. We develop a novel black-box attack methodology to automatically generate imperceptible and robust adversarial examples through a greedy algorithm, which is the first of its kind for SNNs.
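The random-noise experiment described in the abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the synthetic 16-pixel "images", the mean-intensity classifier `predict`, and the helper names are hypothetical stand-ins, not the paper's SDBN, its MNIST setup, or its noise model. The sketch only shows the general shape of the measurement: add uniform noise of a given magnitude to the test inputs and record the resulting accuracy.

```python
import random

def make_data(rng, n_per_class=100, dim=16):
    """Synthetic two-class 'images' (assumption): dark class 0, bright class 1."""
    data = []
    for label, level in ((0, 0.25), (1, 0.75)):
        for _ in range(n_per_class):
            image = [level + rng.uniform(-0.05, 0.05) for _ in range(dim)]
            data.append((image, label))
    return data

def predict(image):
    """Toy stand-in classifier (not an SNN): threshold on mean intensity."""
    return int(sum(image) / len(image) > 0.5)

def add_noise(image, eps, rng):
    """Add uniform noise in [-eps, eps] to every pixel, clipped to [0, 1]."""
    return [min(1.0, max(0.0, p + rng.uniform(-eps, eps))) for p in image]

def accuracy_under_noise(data, eps, rng):
    """Fraction of noisy test images that are still classified correctly."""
    correct = sum(predict(add_noise(img, eps, rng)) == label for img, label in data)
    return correct / len(data)

rng = random.Random(0)
test_set = make_data(rng)
results = {eps: accuracy_under_noise(test_set, eps, rng) for eps in (0.0, 0.1, 0.5, 2.0)}
for eps, acc in results.items():
    print(f"noise magnitude {eps:.1f}: accuracy {acc:.2f}")
```

With this toy setup the clean test set is classified perfectly, and accuracy drops once the noise magnitude is large enough to swamp the gap between the two classes.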

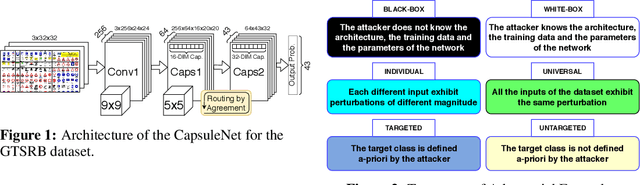

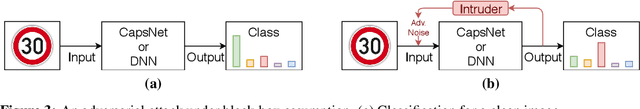

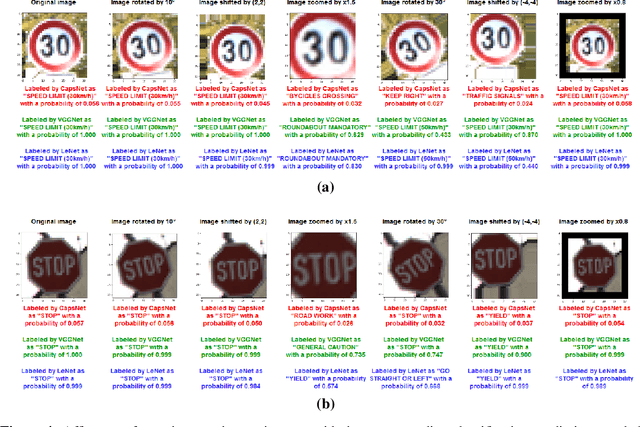

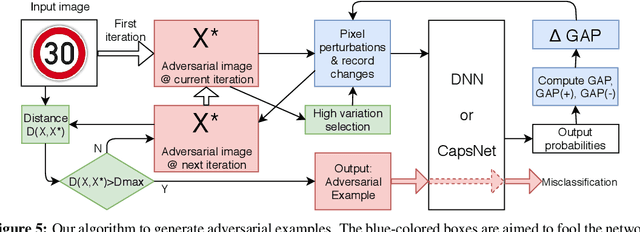

CapsAttacks: Robust and Imperceptible Adversarial Attacks on Capsule Networks

Jan 28, 2019

Abstract: Capsule Networks envision an innovative point of view about the representation of objects in the brain and preserve the hierarchical spatial relationships between them. This type of network exhibits huge potential for several Machine Learning tasks, such as image classification, while outperforming Convolutional Neural Networks (CNNs). A large body of work has explored adversarial examples for CNNs, but their efficacy against Capsule Networks is not well explored. In our work, we study the vulnerabilities of Capsule Networks to adversarial attacks. These perturbations, added to the test inputs, are small and imperceptible to humans, but fool the network into mispredicting. We propose a greedy algorithm to automatically generate targeted imperceptible adversarial examples in a black-box attack scenario. We show that this kind of attack, when applied to the German Traffic Sign Recognition Benchmark (GTSRB), misleads Capsule Networks. Moreover, we apply the same kind of adversarial attack to a 9-layer CNN and analyze the outcome, comparing it with the Capsule Networks to study their differences and commonalities.
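As a rough illustration of what a greedy, targeted, black-box attack can look like, here is a minimal sketch. It is not the algorithm from the paper: the toy logistic model behind `query_model`, the function `greedy_targeted_attack`, and the parameters `step`, `max_delta`, and `max_iters` are all hypothetical choices. The sketch assumes only that the attacker can query output probabilities, and it caps the per-pixel change as a crude proxy for imperceptibility.

```python
import math

# Hypothetical toy model; the attack below never reads these directly.
WEIGHTS = [1.0, -1.0, 2.0, -2.0, 0.5, -0.5, 1.5, -1.5]
BIAS = -0.25

def query_model(image):
    """Black box: returns class probabilities [p0, p1] and nothing else."""
    logit = sum(w * p for w, p in zip(WEIGHTS, image)) + BIAS
    p1 = 1.0 / (1.0 + math.exp(-logit))
    return [1.0 - p1, p1]

def greedy_targeted_attack(image, target, step=0.05, max_delta=0.1, max_iters=50):
    """Each iteration applies the single-pixel change that most raises the
    target-class probability, keeping every pixel within max_delta of the original."""
    original = list(image)
    adv = list(image)
    for _ in range(max_iters):
        probs = query_model(adv)
        if probs.index(max(probs)) == target:
            return adv, True
        best_gain, best_candidate = 0.0, None
        for i in range(len(adv)):
            for change in (step, -step):
                value = min(1.0, max(0.0, adv[i] + change))
                # Skip changes that would exceed the per-pixel budget.
                if abs(value - original[i]) > max_delta + 1e-12:
                    continue
                candidate = adv[:i] + [value] + adv[i + 1:]
                gain = query_model(candidate)[target] - probs[target]
                if gain > best_gain:
                    best_gain, best_candidate = gain, candidate
        if best_candidate is None:  # no admissible change helps any further
            return adv, False
        adv = best_candidate
    probs = query_model(adv)
    return adv, probs.index(max(probs)) == target

clean = [0.5] * 8
adv, success = greedy_targeted_attack(clean, target=1)
print("success:", success)
print("largest pixel change:", max(abs(a - c) for a, c in zip(adv, clean)))
```

The greedy choice costs two model queries per pixel per iteration, which is manageable for a toy input but grows quickly for real images; practical attacks usually restrict the search to a subset of candidate pixels.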
