Gerardo Hermosillo Valadez

Fast Multi-Organ Fine Segmentation in CT Images with Hierarchical Sparse Sampling and Residual Transformer

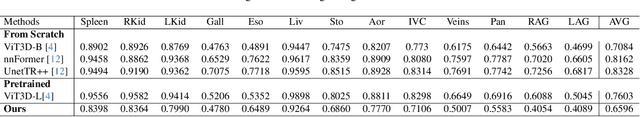

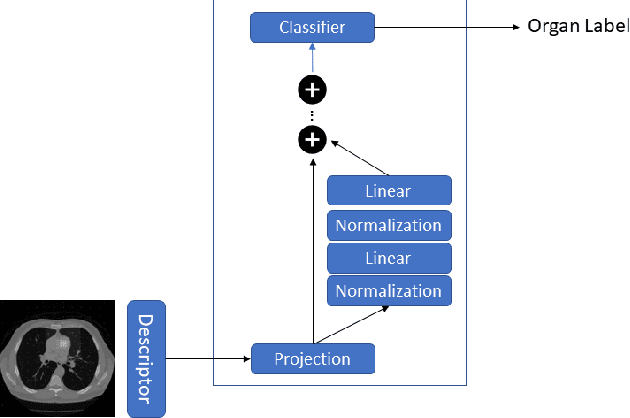

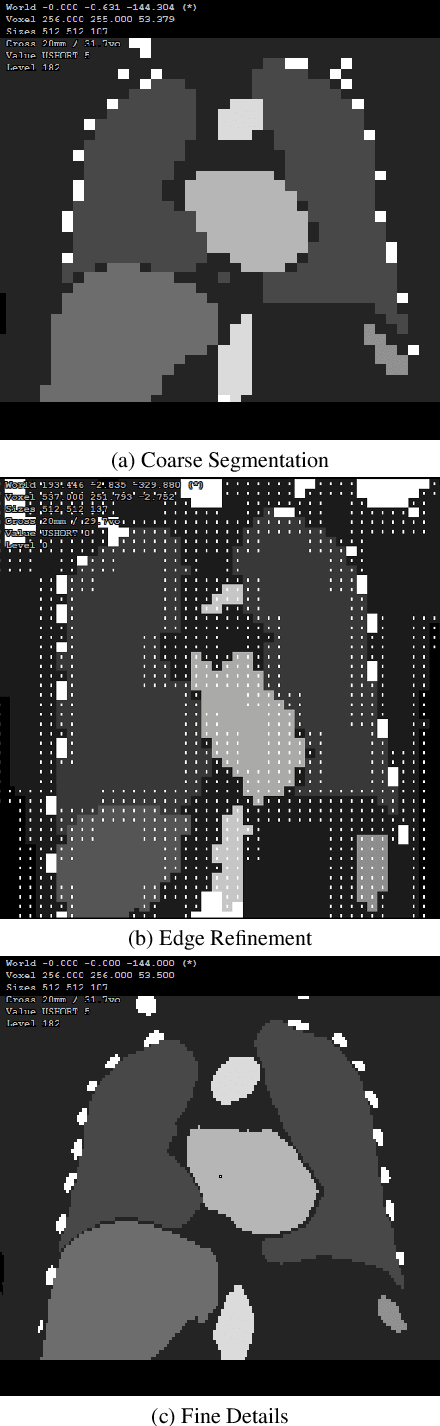

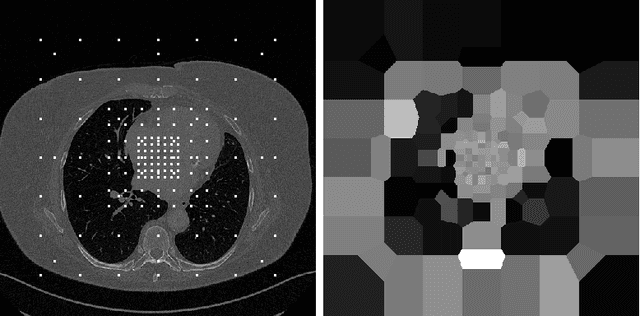

Nov 11, 2025

Abstract:Multi-organ segmentation of 3D medical images is a fundamental task with applications in many clinical automation pipelines. Although deep learning has achieved superior performance, segmenting an entire 3D volume voxel by voxel with neural networks can consume substantial time and memory. Classifiers have been developed as an alternative for cases with specific points of interest, but the trade-off between speed and accuracy remains an issue. We therefore propose a fast multi-organ segmentation framework that uses hierarchical sparse sampling and a Residual Transformer. Compared with whole-volume analysis, the hierarchical sparse sampling strategy reduces computation time while preserving hierarchical context across multiple resolution levels. The Residual Transformer segmentation network extracts and combines information from the different levels of the sparse descriptor while maintaining a low computational cost. On an internal dataset containing 10,253 CT images and the public TotalSegmentator dataset, the proposed method improved qualitative and quantitative segmentation performance over the current fast organ classifier, running in about 2.24 seconds on CPU hardware. These results suggest the potential for real-time fine organ segmentation.

Consistent Point Matching

Jul 31, 2025

Abstract:This study demonstrates that incorporating a consistency heuristic into the point-matching algorithm \cite{yerebakan2023hierarchical} improves robustness in matching anatomical locations across pairs of medical images. We validated our approach on diverse longitudinal internal and public datasets spanning CT and MRI modalities. Notably, it surpasses state-of-the-art results on the Deep Lesion Tracking dataset. Additionally, we show that the method effectively addresses landmark localization. The algorithm operates efficiently on standard CPU hardware and allows configurable trade-offs between speed and robustness. The method enables high-precision navigation between medical images without requiring a machine learning model or training data.
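The abstract does not say which consistency heuristic is used. As an illustration only, the sketch below shows one common form of consistency check, a forward-backward round trip: a point is matched from image A to image B, the result is matched back to A, and the match is accepted only if it returns close to where it started. The functions `match_ab` and `match_ba` and the `tolerance` parameter are hypothetical placeholders, not part of the published method.

```python
import numpy as np

def consistent_match(point, match_ab, match_ba, tolerance=2.0):
    """Forward-backward check (an assumed heuristic, not the paper's exact one).

    match_ab / match_ba are hypothetical callables mapping a 3D point in one
    image to its estimated counterpart in the other image.
    Returns the forward match, the round-trip error, and an accept flag.
    """
    start = np.asarray(point, dtype=float)
    forward = np.asarray(match_ab(start), dtype=float)   # A -> B
    back = np.asarray(match_ba(forward), dtype=float)    # B -> A
    error = float(np.linalg.norm(back - start))          # round-trip distance
    return forward, error, error <= tolerance
```

A larger `tolerance` accepts more matches at the cost of robustness, which is one way a speed and robustness trade-off of the kind the abstract mentions could be exposed.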

BodyGPS: Anatomical Positioning System

May 12, 2025

Abstract:We introduce a new type of foundational model for parsing human anatomy in medical images that works for different modalities. It supports supervised or unsupervised training and can perform matching, registration, classification, or segmentation with or without user interaction. We achieve this by training a neural network estimator that maps query locations to atlas coordinates via regression. Efficiency is improved by sparsely sampling the input, enabling response times of less than 1 ms without additional accelerator hardware. We demonstrate the utility of the algorithm in both CT and MRI modalities.

Automatic Mapping of Anatomical Landmarks from Free-Text Using Large Language Models: Insights from Llama-2

Oct 17, 2024

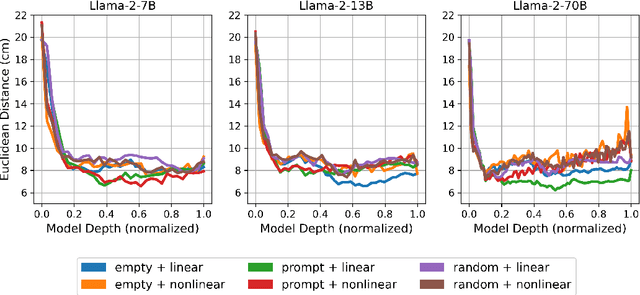

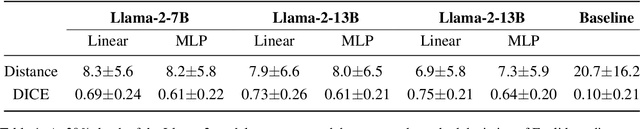

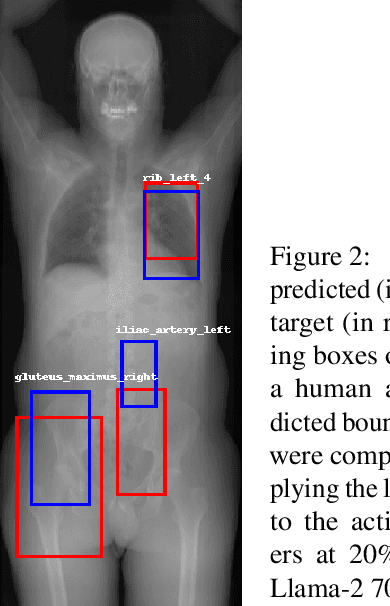

Abstract:Anatomical landmarks are vital in medical imaging for navigation and anomaly detection. Modern large language models (LLMs), like Llama-2, offer promise for automating the mapping of these landmarks in free-text radiology reports to corresponding positions in image data. Recent studies propose LLMs may develop coherent representations of generative processes. Motivated by these insights, we investigated whether LLMs accurately represent the spatial positions of anatomical landmarks. Through experiments with Llama-2 models, we found that they can linearly represent anatomical landmarks in space with considerable robustness to different prompts. These results underscore the potential of LLMs to enhance the efficiency and accuracy of medical imaging workflows.
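To make "linearly represent" concrete, the sketch below fits a linear probe, that is, a least-squares map from embedding vectors to 3D coordinates. It is a minimal illustration under stated assumptions: the embeddings here would be synthetic arrays standing in for LLM hidden states, no Llama-2 model is called, and the names `fit_linear_probe` and `predict_coords` are hypothetical.

```python
import numpy as np

def fit_linear_probe(embeddings, coords):
    """Least-squares fit of coords ~ embeddings @ W[:-1] + W[-1].

    embeddings: (n_samples, dim) array (assumed stand-in for hidden states).
    coords:     (n_samples, 3) array of landmark positions.
    """
    # Append a column of ones so the last row of W acts as a bias term.
    design = np.hstack([embeddings, np.ones((len(embeddings), 1))])
    weights, *_ = np.linalg.lstsq(design, coords, rcond=None)
    return weights

def predict_coords(weights, embeddings):
    """Apply the fitted probe to new embeddings."""
    design = np.hstack([embeddings, np.ones((len(embeddings), 1))])
    return design @ weights
```

If a probe like this predicts held-out landmark positions well, the positions are linearly decodable from the representation. In practice the fit would be evaluated on landmarks not used for fitting.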

Real Time Multi Organ Classification on Computed Tomography Images

Apr 29, 2024

Abstract:Organ segmentation is a fundamental task in medical imaging, and it is useful for many clinical automation pipelines. Typically, the process involves segmenting the entire volume, which can be unnecessary when the points of interest are limited. In those cases, a classifier could be used instead of segmentation. However, there is an inherent trade-off between the context size and the speed of classifiers. To address this issue, we propose a new method that employs a data selection strategy with sparse sampling across a wide field of view without image resampling. This sparse sampling strategy makes it possible to classify voxels into multiple organs in real time without using accelerators. Although our method is an independent classifier, it can generate full segmentation by querying grid locations at any resolution. We have compared our method with existing segmentation techniques, demonstrating its potential for superior runtime in practical applications in medical imaging.
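The idea of producing a full segmentation by querying grid locations can be sketched as follows. This is an assumption-laden illustration: `classify_point` is a hypothetical callable standing in for the point classifier, and the plain Python loop is written for clarity rather than speed.

```python
import numpy as np

def segment_by_grid(classify_point, shape, step):
    """Build a label map by querying a point classifier on a regular grid.

    classify_point: hypothetical callable taking (z, y, x) and returning an
                    integer organ label.
    shape:          (depth, height, width) of the image volume in voxels.
    step:           grid spacing in voxels; 1 gives a full-resolution map.
    """
    zs, ys, xs = (np.arange(0, n, step) for n in shape)
    labels = np.zeros((len(zs), len(ys), len(xs)), dtype=np.int32)
    for i, z in enumerate(zs):
        for j, y in enumerate(ys):
            for k, x in enumerate(xs):
                labels[i, j, k] = classify_point((z, y, x))
    return labels
```

Because each query is independent, the output resolution is set by `step` alone, and the image itself never needs to be resampled.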

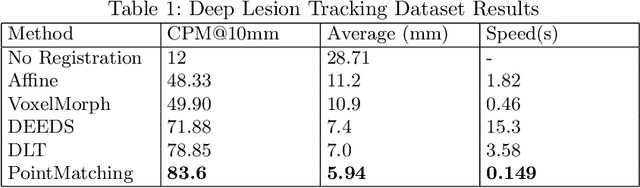

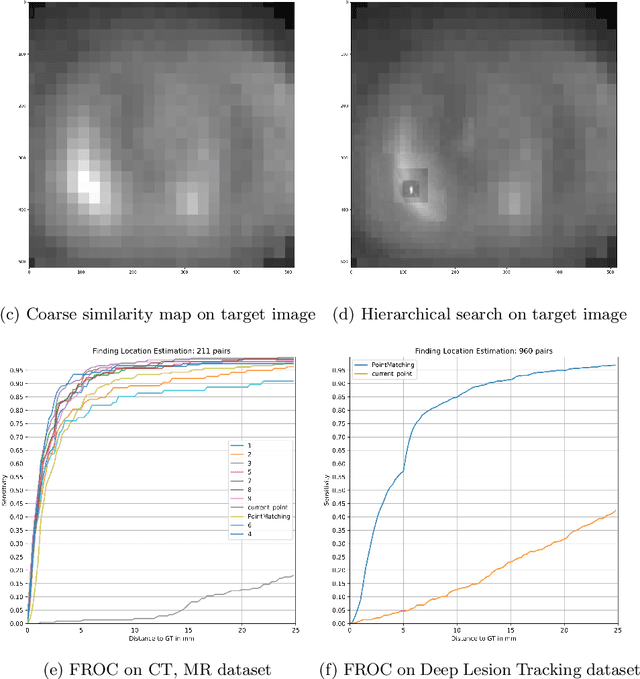

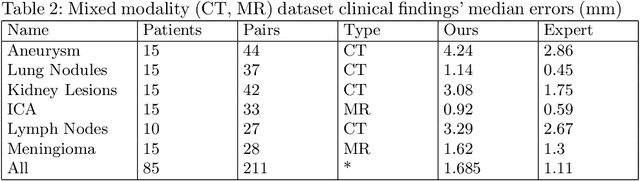

A Hierarchical Descriptor Framework for On-the-Fly Anatomical Location Matching between Longitudinal Studies

Aug 11, 2023

Abstract:We propose a method to match anatomical locations between pairs of medical images in longitudinal comparisons. The matching is made possible by computing a descriptor of the query point in a source image based on a hierarchical sparse sampling of image intensities that encode the location information. Then, a hierarchical search operation finds the corresponding point with the most similar descriptor in the target image. This simple yet powerful strategy reduces the computational time of mapping points to a millisecond scale on a single CPU. Thus, radiologists can compare similar anatomical locations in near real-time without requiring extra architectural costs for precomputing or storing deformation fields from registrations. Our algorithm does not require prior training, resampling, segmentation, or affine transformation steps. We have tested our algorithm on the recently published Deep Lesion Tracking dataset annotations. We observed more accurate matching compared to Deep Lesion Tracker while being 24 times faster than the most precise algorithm reported therein. We also investigated the matching accuracy on CT and MR modalities and compared the proposed algorithm's accuracy against ground truth consolidated from multiple radiologists.
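The descriptor and search described above can be sketched in a few lines. This is a simplified illustration, not the published implementation: the random offset pattern, the doubling radius per level, the Euclidean descriptor distance, and all function and parameter names are assumptions made for the example.

```python
import numpy as np

def make_offsets(num_levels=3, points_per_level=32, base_radius=4.0, seed=0):
    """Fixed random 3D offsets; each level doubles the sampling radius (assumed)."""
    rng = np.random.default_rng(seed)
    levels = []
    for level in range(num_levels):
        radius = base_radius * (2 ** level)
        levels.append(rng.uniform(-radius, radius, size=(points_per_level, 3)))
    return np.concatenate(levels, axis=0)

def descriptor(volume, point, offsets):
    """Intensities sampled at point + offsets (nearest voxel, clamped to bounds)."""
    coords = np.rint(np.asarray(point) + offsets).astype(int)
    coords = np.clip(coords, 0, np.array(volume.shape) - 1)
    return volume[coords[:, 0], coords[:, 1], coords[:, 2]].astype(np.float64)

def hierarchical_search(target, query_desc, offsets, start,
                        strides=(8, 4, 2, 1), half_width=2):
    """Coarse-to-fine search for the point whose descriptor is closest to query_desc."""
    best = np.asarray(start, dtype=int)
    upper = np.array(target.shape) - 1
    for stride in strides:
        # Candidate grid centered on the current best estimate.
        steps = np.arange(-half_width, half_width + 1) * stride
        grid = np.stack(np.meshgrid(steps, steps, steps, indexing="ij"),
                        axis=-1).reshape(-1, 3)
        candidates = np.clip(best + grid, 0, upper)
        dists = [np.linalg.norm(descriptor(target, c, offsets) - query_desc)
                 for c in candidates]
        best = candidates[int(np.argmin(dists))]
    return best
```

A typical use would compute `descriptor(source, query_point, offsets)` in the source image and pass it to `hierarchical_search` on the target image. Each level evaluates only a small candidate grid, which is why the cost stays low compared with a dense search.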

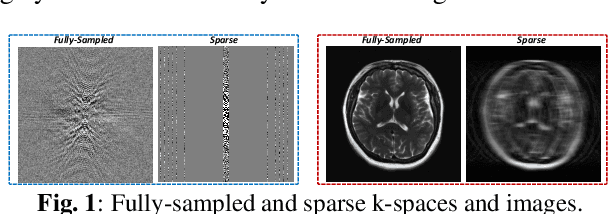

DD-CISENet: Dual-Domain Cross-Iteration Squeeze and Excitation Network for Accelerated MRI Reconstruction

Apr 28, 2023

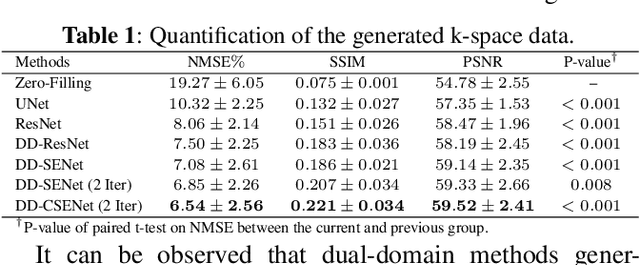

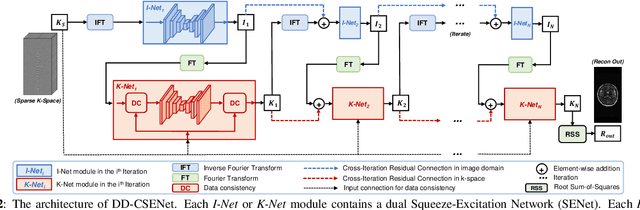

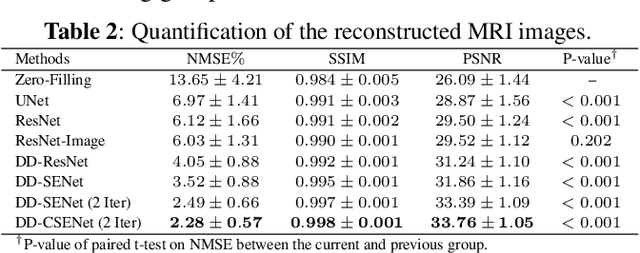

Abstract:Magnetic resonance imaging (MRI) is widely employed for diagnostic tests in neurology. However, the utility of MRI is largely limited by its long acquisition time. Acquiring less k-space data in a sparse manner is a potential solution to reducing the acquisition time, but it can lead to severe aliasing reconstruction artifacts. In this paper, we present a novel Dual-Domain Cross-Iteration Squeeze and Excitation Network (DD-CISENet) for accelerated sparse MRI reconstruction. The information of k-spaces and MRI images can be iteratively fused and maintained using the Cross-Iteration Residual connection (CIR) structures. This study included 720 multi-coil brain MRI cases adopted from the open-source fastMRI Dataset. Results showed that the average reconstruction error by DD-CISENet was 2.28 $\pm$ 0.57%, which outperformed existing deep learning methods including image-domain prediction (6.03 $\pm$ 1.31%, p < 0.001), k-space synthesis (6.12 $\pm$ 1.66%, p < 0.001), and dual-domain feature fusion approaches (4.05 $\pm$ 0.88%, p < 0.001).
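For readers unfamiliar with squeeze-and-excitation, the sketch below shows the standard channel-attention block in NumPy: global average pooling per channel, a two-layer bottleneck, and a sigmoid gate that rescales each channel. How DD-CISENet arranges such blocks across the two domains is not described in the abstract, so this is the generic block only; the weight matrices `w1` and `w2` are assumed inputs and biases are omitted for brevity.

```python
import numpy as np

def squeeze_excite(features, w1, w2):
    """Standard squeeze-and-excitation gate on a (channels, height, width) array.

    w1: (hidden, channels) weights of the reduction layer (assumed given).
    w2: (channels, hidden) weights of the expansion layer (assumed given).
    """
    squeezed = features.mean(axis=(1, 2))            # squeeze: one value per channel
    hidden = np.maximum(0.0, w1 @ squeezed)          # bottleneck with ReLU
    scale = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))     # sigmoid gate in (0, 1)
    return features * scale[:, None, None]           # excite: rescale each channel
```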

Dual-Domain Cross-Iteration Squeeze-Excitation Network for Sparse Reconstruction of Brain MRI

Oct 05, 2022

Abstract:Magnetic resonance imaging (MRI) is one of the most commonly applied tests in neurology and neurosurgery. However, the utility of MRI is largely limited by its long acquisition time, which might induce many problems including patient discomfort and motion artifacts. Acquiring fewer k-space samples is a potential solution to reducing the total scanning time. However, it can lead to severe aliasing reconstruction artifacts and thus affect the clinical diagnosis. Nowadays, deep learning has provided new insights into the sparse reconstruction of MRI. In this paper, we present a new approach to this problem that iteratively fuses the information of k-space and MRI images using novel dual Squeeze-Excitation Networks and Cross-Iteration Residual Connections. This study included 720 clinical multi-coil brain MRI cases adopted from the open-source deidentified fastMRI Dataset. An 8-fold downsampling rate was applied to generate the sparse k-space. Results showed that the average reconstruction error over 120 testing cases by our proposed method was 2.28%, which outperformed the existing image-domain prediction (6.03%, p<0.001), k-space synthesis (6.12%, p<0.001), and dual-domain feature fusion (4.05%, p<0.001).
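The effect of 8-fold k-space downsampling can be illustrated with a zero-filled baseline. The sketch below is not the paper's pipeline: the equispaced line mask with a small fully sampled center, the single-coil 2D setting, and the relative L2 error are all assumptions chosen for the example, since the abstract specifies neither the mask pattern nor the error metric.

```python
import numpy as np

def undersample_kspace(image, acceleration=8, center_lines=8):
    """Keep every `acceleration`-th k-space row plus a fully sampled center band."""
    kspace = np.fft.fftshift(np.fft.fft2(image))
    mask = np.zeros(image.shape[0], dtype=bool)
    mask[::acceleration] = True
    mid = image.shape[0] // 2
    mask[mid - center_lines // 2: mid + center_lines // 2] = True
    return kspace * mask[:, None], mask

def zero_filled_recon(kspace):
    """Inverse FFT of the masked k-space; missing rows are treated as zeros."""
    return np.abs(np.fft.ifft2(np.fft.ifftshift(kspace)))

def relative_error(recon, reference):
    """Relative L2 error (an assumed metric for illustration)."""
    return np.linalg.norm(recon - reference) / np.linalg.norm(reference)
```

The zero-filled reconstruction exhibits the aliasing artifacts the abstract refers to, and learned methods aim to lower the error relative to this kind of baseline.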

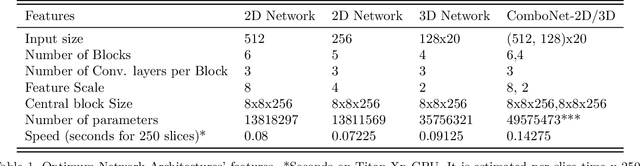

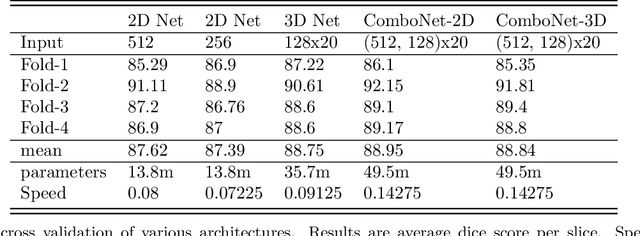

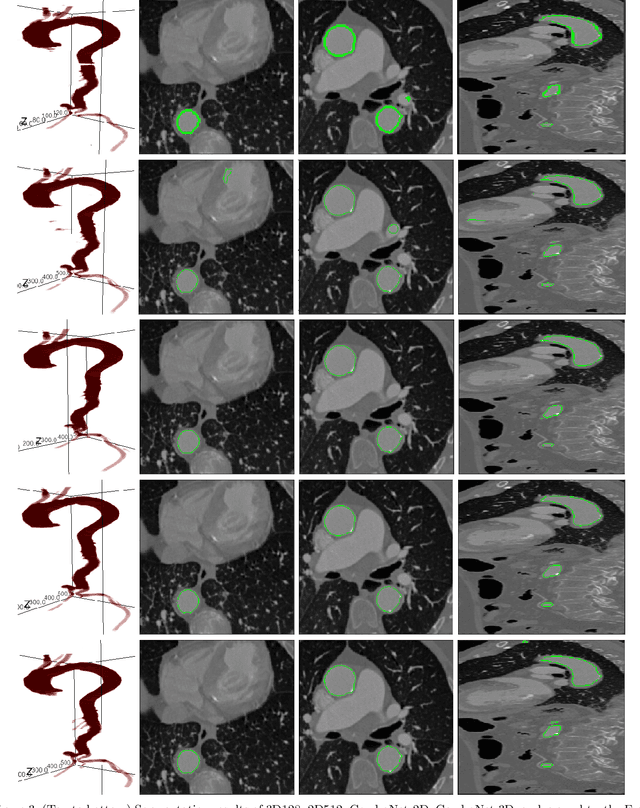

ComboNet: Combined 2D & 3D Architecture for Aorta Segmentation

Jun 09, 2020

Abstract:3D segmentation with deep learning, if trained at full resolution, is the ideal way to achieve the best accuracy. Unlike 2D segmentation, 3D segmentation generally does not produce sparse outliers and prevents leakage to surrounding soft tissues; at the very least, it is generally more consistent than 2D segmentation. However, GPU memory is generally the bottleneck for such an application. Thus, most 3D segmentation applications handle sub-sampled input instead of full resolution, which comes at the cost of losing precision at the boundary. In order to maintain precision at the boundary and prevent sparse outliers and leakage, we designed ComboNet. ComboNet is designed in an end-to-end fashion with three sub-network structures. The first two are parallel: a 2D UNet with full resolution and a 3D UNet with four times sub-sampled input. The last stage concatenates the 2D and 3D outputs with the full-resolution input image, followed by two convolution layers with either 2D or 3D convolutions. With ComboNet, we have achieved $92.1\%$ Dice accuracy for aorta segmentation and observed up to $2.3\%$ improvement in Dice accuracy over a 2D UNet with the full-resolution input image.
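The Dice accuracy reported above is the standard overlap measure between a predicted mask $P$ and a ground-truth mask $T$, $2|P \cap T| / (|P| + |T|)$. A minimal sketch for binary masks follows; the convention of returning 1.0 when both masks are empty is an assumption, as the abstract does not state how that case is handled.

```python
import numpy as np

def dice(pred, truth):
    """Dice coefficient between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total
```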
