Gerard Kennedy

Iterative Optimisation with an Innovation CNN for Pose Refinement

Jan 22, 2021

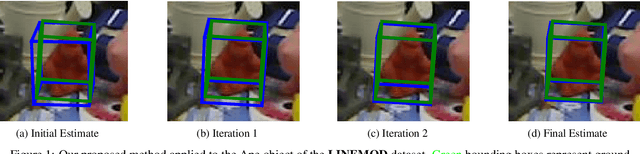

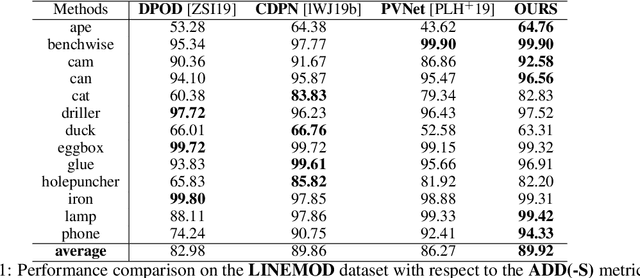

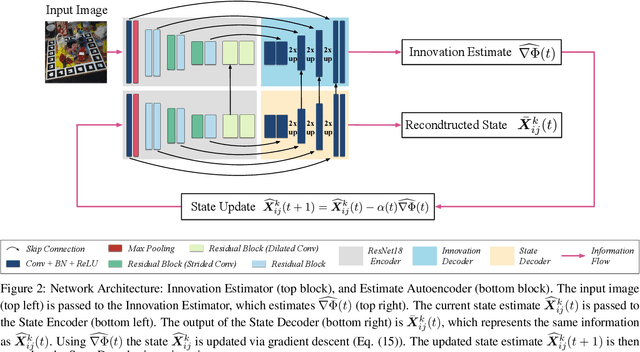

Abstract: Object pose estimation from a single RGB image is a challenging problem due to variable lighting conditions and viewpoint changes. The most accurate pose estimation networks implement pose refinement via reprojection of a known, textured 3D model; however, such methods cannot be applied without high-quality 3D models of the observed objects. In this work we propose an approach to object pose refinement, namely an Innovation CNN, that overcomes the requirement for reprojecting a textured 3D model. Our approach progressively improves the initial pose estimate by applying the Innovation CNN iteratively in a stochastic gradient descent (SGD) framework. We evaluate our method on the popular LINEMOD and Occlusion LINEMOD datasets and obtain state-of-the-art performance on both datasets.
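The iterative refinement idea in this abstract can be illustrated with a toy loop. This is only a minimal sketch under strong assumptions, not the paper's method: `toy_innovation` is a hypothetical stand-in for the Innovation CNN that returns a noisy estimate of the pose error (a real network would infer this from the image, not from a known `target`), the pose is treated as a plain vector that ignores the rotation manifold, and `step` and `iters` are illustrative values.

```python
import random


def toy_innovation(pose, target, rng, noise=0.01):
    """Hypothetical stand-in for the Innovation CNN.

    Returns a noisy estimate of the pose error. `target` plays the role of
    the information a real network would extract from the image.
    """
    return [t - p + rng.gauss(0.0, noise) for p, t in zip(pose, target)]


def refine(pose, target, step=0.5, iters=30, seed=0):
    """Repeatedly apply the predicted correction, scaled by a step size."""
    rng = random.Random(seed)
    pose = list(pose)
    for _ in range(iters):
        delta = toy_innovation(pose, target, rng)
        # SGD-style update: move a fraction of the way along the correction.
        pose = [p + step * d for p, d in zip(pose, delta)]
    return pose


initial = [0.3, -0.2, 0.1, 0.05, -0.4, 1.2]  # rough initial pose (toy 6-vector)
true_pose = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]
refined = refine(initial, true_pose)
```

Because each correction is noisy, the step size below 1 averages out the noise over iterations, which is the general motivation for framing the refinement as a stochastic gradient-style update.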

Simultaneous Localization and Mapping with Dynamic Rigid Objects

May 10, 2018

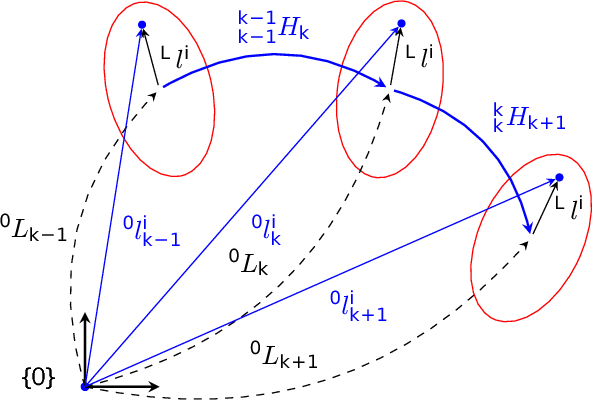

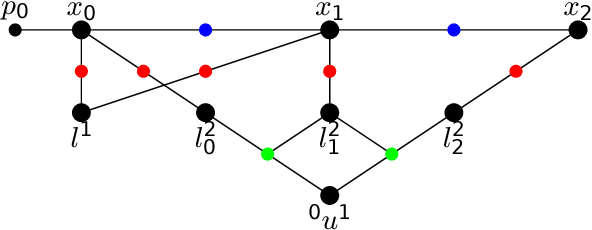

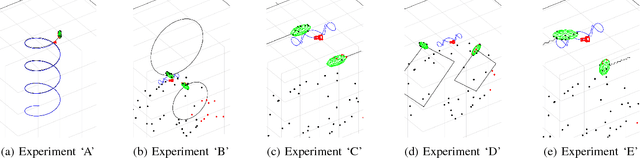

Abstract: Accurate estimation of the environment structure simultaneously with the robot pose is a key capability of autonomous robotic vehicles. Classical simultaneous localization and mapping (SLAM) algorithms rely on the static world assumption to formulate the estimation problem; however, the real world has a significant amount of dynamics that can be exploited for more accurate localization and a more versatile representation of the environment. In this paper we propose a technique to integrate the motion of dynamic objects into the SLAM estimation problem, without the necessity of estimating the pose or the geometry of the objects. To this end, we introduce a novel representation of the pose change of rigid bodies in motion and show the benefits of integrating such information when performing SLAM in dynamic environments. Our experiments show consistent improvement in robot localization and mapping accuracy when using a simple constant motion assumption, even for objects whose motion slightly violates this assumption.
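One way to picture a constant motion assumption is as a constraint on tracked points: if a rigid body undergoes the same pose change `H` at every time step, each point on it should satisfy `p_next = H * p_prev`, without the object's own pose or shape ever being estimated. The sketch below is an assumption-laden illustration in 2D, not the formulation from the paper; the helper names `se2`, `apply_transform`, and `constant_motion_residual` are hypothetical.

```python
import math


def se2(theta, tx, ty):
    """Build a 2D rigid transform as the top two rows of a homogeneous matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, tx), (s, c, ty))


def apply_transform(H, p):
    """Apply the rigid transform H to the 2D point p."""
    (a, b, tx), (c, d, ty) = H
    return (a * p[0] + b * p[1] + tx, c * p[0] + d * p[1] + ty)


def constant_motion_residual(H, p_prev, p_next):
    """Error of the constraint p_next = H * p_prev for one tracked point."""
    q = apply_transform(H, p_prev)
    return (p_next[0] - q[0], p_next[1] - q[1])


# Simulate a point on a rigid body that moves with the same H at every step.
H = se2(0.1, 0.5, 0.0)
track = [(1.0, 2.0)]
for _ in range(3):
    track.append(apply_transform(H, track[-1]))

# Every consecutive pair satisfies the constraint, so each residual is ~0.
residuals = [constant_motion_residual(H, a, b) for a, b in zip(track, track[1:])]
```

In an estimator, residuals of this kind would be minimised jointly with the usual odometry and landmark terms; an object whose motion only slightly violates the assumption would produce small nonzero residuals rather than breaking the problem.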
