Iterative Optimisation with an Innovation CNN for Pose Refinement

Paper and Code

Jan 22, 2021

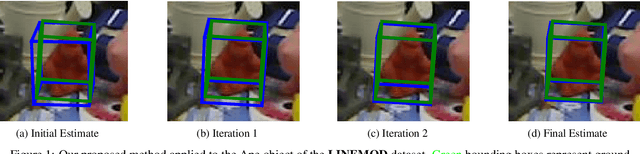

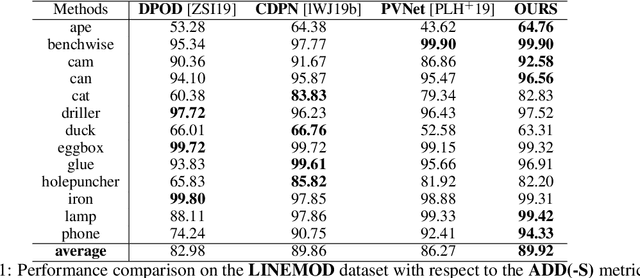

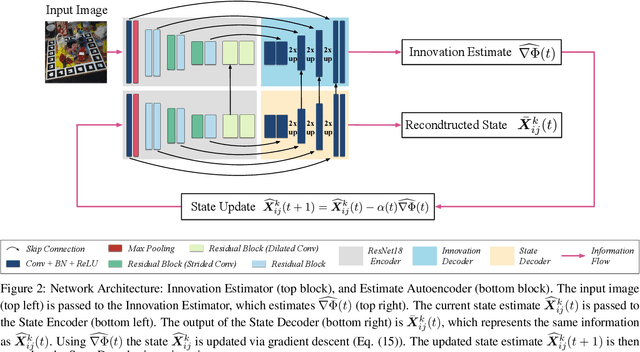

Object pose estimation from a single RGB image is a challenging problem due to variable lighting conditions and viewpoint changes. The most accurate pose estimation networks implement pose refinement via reprojection of a known, textured 3D model, however, such methods cannot be applied without high quality 3D models of the observed objects. In this work we propose an approach, namely an Innovation CNN, to object pose estimation refinement that overcomes the requirement for reprojecting a textured 3D model. Our approach improves initial pose estimation progressively by applying the Innovation CNN iteratively in a stochastic gradient descent (SGD) framework. We evaluate our method on the popular LINEMOD and Occlusion LINEMOD datasets and obtain state-of-the-art performance on both datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge