Gerald S. Buller

A Plug-and-Play Algorithm for 3D Video Super-Resolution of Single-Photon LiDAR data

Dec 12, 2024

Abstract: Single-photon avalanche diodes (SPADs) are advanced sensors capable of detecting individual photons and recording their arrival times with picosecond resolution using time-correlated single-photon counting techniques. They are used in various applications, such as LiDAR, and can capture high-speed sequences of binary single-photon images, offering great potential for reconstructing 3D environments with high motion dynamics. To complement single-photon data, they are often paired with conventional passive cameras, which capture high-resolution (HR) intensity images at a lower frame rate. However, 3D reconstruction from SPAD data faces challenges. Aggregating multiple binary measurements improves precision and reduces noise but can cause motion blur in dynamic scenes. Additionally, SPAD arrays often have lower resolution than passive cameras. To address these issues, we propose a novel computational imaging algorithm that improves the 3D reconstruction of moving scenes from SPAD data by correcting motion blur and increasing the native spatial resolution. We adopt a plug-and-play approach within an optimization scheme that alternates between guided video super-resolution of the 3D scene and precise image realignment using optical flow. Experiments on synthetic data show significantly improved image resolutions across various signal-to-noise ratios and photon levels. We validate our method using real-world SPAD measurements in three practical situations with dynamic objects: first, fast-moving scenes in laboratory conditions at short range; second, very low-resolution imaging of people with a consumer-grade SPAD sensor from STMicroelectronics; and finally, HR imaging of people walking outdoors in daylight at a range of 325 meters under eye-safe illumination conditions using a short-wave infrared SPAD camera. These results demonstrate the robustness and versatility of our approach.
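The trade-off between aggregation and motion blur mentioned in the abstract can be illustrated with a minimal single-pixel sketch. This is not the paper's algorithm: the function name `depth_from_frames`, the input layout (one arrival time in picoseconds per binary frame, or `None` when no photon was detected) and the simple peak-bin estimator are all assumptions made for illustration. It only shows how a window of binary frames is pooled into a timing histogram and converted to depth with the standard time-of-flight relation d = c t / 2.

```python
from collections import Counter

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_frames(frames, bin_width_ps):
    """Estimate one pixel's depth from a window of binary SPAD frames.

    frames: hypothetical input layout; each entry is a photon arrival
            time in picoseconds, or None if that frame detected nothing.
    bin_width_ps: width of one timing-histogram bin in picoseconds.
    Returns the depth in metres, or None if the window holds no photons.
    """
    # Pool every detection in the window into a timing histogram.
    histogram = Counter(
        int(t // bin_width_ps) for t in frames if t is not None
    )
    if not histogram:
        return None

    # Take the most populated bin; ties go to the earliest bin.
    peak_bin, _ = max(histogram.items(), key=lambda kv: (kv[1], -kv[0]))

    # Convert the bin centre to a round-trip time, then halve the path.
    time_of_flight_s = (peak_bin + 0.5) * bin_width_ps * 1e-12
    return SPEED_OF_LIGHT_M_PER_S * time_of_flight_s / 2.0
```

A longer window puts more photons in the histogram, which stabilises the peak against background counts, but in a moving scene it also mixes returns from different depths into the same histogram, which is the blur the abstract refers to.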

3D Target Detection and Spectral Classification for Single-photon LiDAR Data

Feb 20, 2023

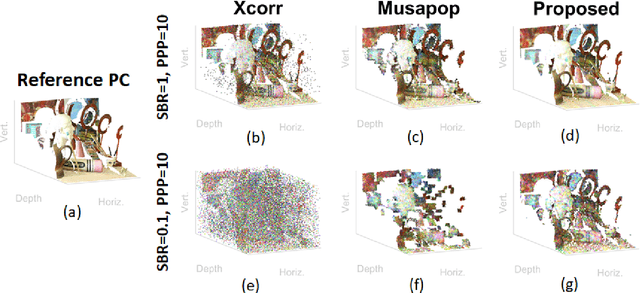

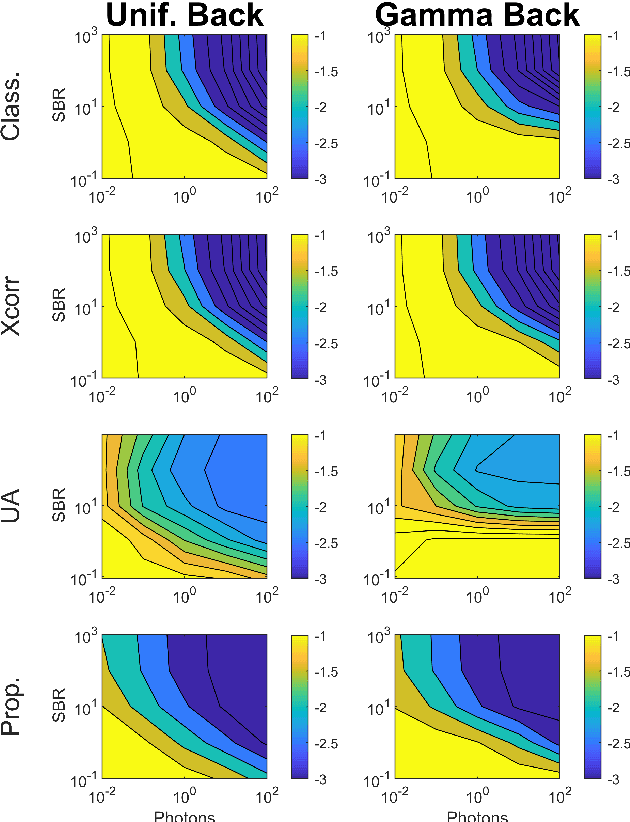

Abstract: 3D single-photon LiDAR imaging has an important role in many applications. However, full deployment of this modality will require the analysis of target returns with a low signal-to-noise ratio and of a very high volume of data. This is particularly evident when imaging through obscurants or in high ambient background light conditions. This paper proposes a multiscale approach for 3D surface detection from the photon timing histogram to permit a significant reduction in data volume. The resulting surfaces are background-free and can be used to infer depth and reflectivity information about the target. We demonstrate this by proposing a hierarchical Bayesian model for 3D reconstruction and spectral classification of multispectral single-photon LiDAR data. The reconstruction method promotes spatial correlation between point-cloud estimates and uses a coordinate gradient descent algorithm for parameter estimation. Results on simulated and real data show the benefits of the proposed target detection and reconstruction approaches when compared to state-of-the-art processing algorithms.

Fast Task-Based Adaptive Sampling for 3D Single-Photon Multispectral Lidar Data

Sep 03, 2021

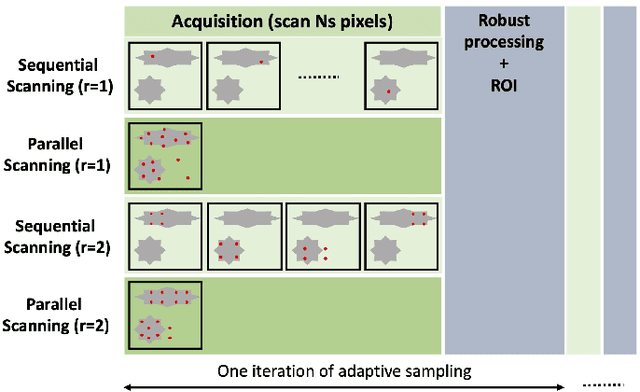

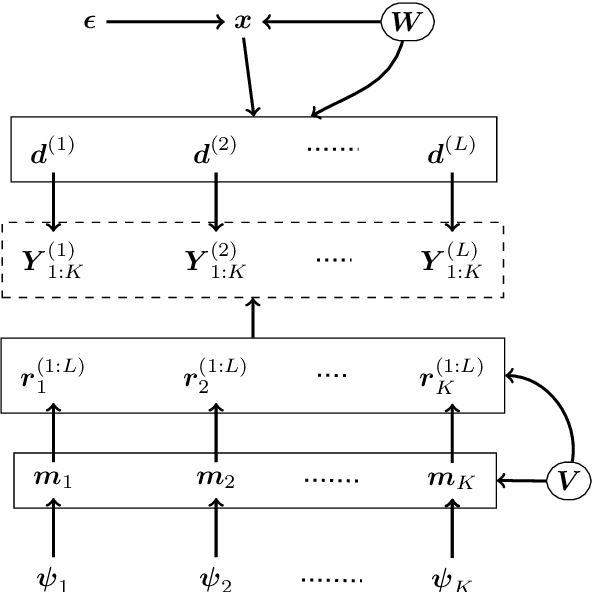

Abstract: 3D single-photon LiDAR imaging plays an important role in numerous applications. However, long acquisition times and significant data volumes present a challenge to LiDAR imaging. This paper proposes a task-optimized adaptive sampling framework that enables fast acquisition and processing of high-dimensional single-photon LiDAR data. Given a task of interest, the iterative sampling strategy targets the most informative regions of a scene, defined as those that minimize parameter uncertainties. The task is performed using a Bayesian model that is carefully built to allow fast per-pixel computations while delivering parameter estimates with quantified uncertainties. The framework is demonstrated on multispectral 3D single-photon LiDAR imaging with object classification and/or target detection as tasks. It is also analysed for both sequential and parallel scanning modes and for different detector array sizes. Results on simulated and real data show the benefit of the proposed optimized sampling strategy when compared to fixed sampling strategies.
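The idea of steering acquisition toward uncertain pixels can be sketched in a few lines. This is a simplified stand-in, not the model from the paper: it assumes Poisson photon counts per pixel with a conjugate Gamma(alpha, beta) prior on the photon rate (beta being a rate parameter), and it ranks pixels by posterior variance alpha / beta^2. The function names `posterior_variance`, `select_pixels` and `update_pixel` are hypothetical.

```python
import heapq


def posterior_variance(alpha, beta):
    """Variance of a Gamma(alpha, beta) distribution with rate beta."""
    return alpha / (beta * beta)


def select_pixels(alphas, betas, k):
    """Return the indices of the k pixels with the largest posterior variance.

    alphas, betas: per-pixel Gamma posterior parameters (assumed model).
    """
    variances = [posterior_variance(a, b) for a, b in zip(alphas, betas)]
    return heapq.nlargest(k, range(len(variances)), key=variances.__getitem__)


def update_pixel(alphas, betas, index, photon_count, n_pulses=1):
    """Conjugate Gamma-Poisson update after measuring one pixel in place.

    photon_count: photons detected at this pixel over n_pulses laser pulses.
    """
    alphas[index] += photon_count
    betas[index] += n_pulses
```

An acquisition loop under these assumptions would alternate `select_pixels` and `update_pixel` until the largest remaining variance falls below a chosen threshold. A fixed sampling strategy would instead spend the same number of pulses on every pixel regardless of how well it is already estimated.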

Robust and Guided Bayesian Reconstruction of Single-Photon 3D Lidar Data: Application to Multispectral and Underwater Imaging

Mar 18, 2021

Abstract: 3D Lidar imaging can be a challenging modality when using multiple wavelengths, or when imaging in high-noise environments (e.g., imaging through obscurants). This paper presents a hierarchical Bayesian algorithm for the robust reconstruction of multispectral single-photon Lidar data in such environments. The algorithm exploits multi-scale information to provide robust depth and reflectivity estimates together with their uncertainties to help with decision making. The proposed weight-based strategy allows the use of available guide information that can be obtained by using state-of-the-art learning-based algorithms. The proposed Bayesian model and its estimation algorithm are validated on both synthetic and real images, showing competitive results in terms of inference quality and computational complexity when compared to state-of-the-art algorithms.
