Gautam Bathla

High-Throughput, High-Performance Deep Learning-Driven Light Guide Plate Surface Visual Quality Inspection Tailored for Real-World Manufacturing Environments

Dec 20, 2022

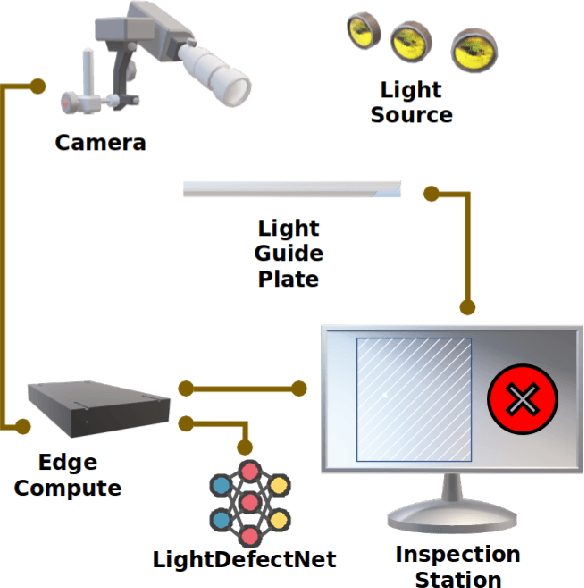

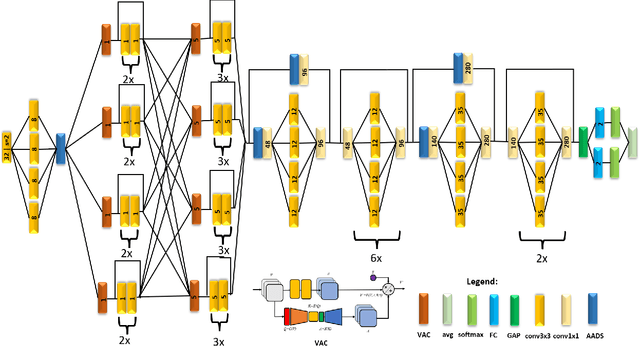

Abstract: Light guide plates are essential optical components widely used in applications ranging from medical lighting fixtures to back-lit TV displays. In this work, we introduce a fully-integrated, high-throughput, high-performance deep learning-driven workflow for light guide plate surface visual quality inspection (VQI) tailored for real-world manufacturing environments. To enable automated VQI via edge computing within the fully-integrated VQI system, a highly compact deep anti-aliased attention condenser neural network (which we name LightDefectNet) tailored specifically for light guide plate surface defect detection in resource-constrained scenarios was created via machine-driven design exploration with computational and "best-practices" constraints as well as L$_1$ paired classification discrepancy loss. Experiments show that LightDefectNet achieves a detection accuracy of ~98.2% on the LGPSDD benchmark while having just 770K parameters (~33X and ~6.9X lower than ResNet-50 and EfficientNet-B0, respectively) and ~93M FLOPs (~88X and ~8.4X lower than ResNet-50 and EfficientNet-B0, respectively), along with ~8.8X faster inference than EfficientNet-B0 on an embedded ARM processor. As such, the proposed deep learning-driven workflow, integrated with the aforementioned LightDefectNet neural network, is highly suited for high-throughput, high-performance light guide plate surface VQI within real-world manufacturing environments.

CellDefectNet: A Machine-designed Attention Condenser Network for Electroluminescence-based Photovoltaic Cell Defect Inspection

Apr 25, 2022

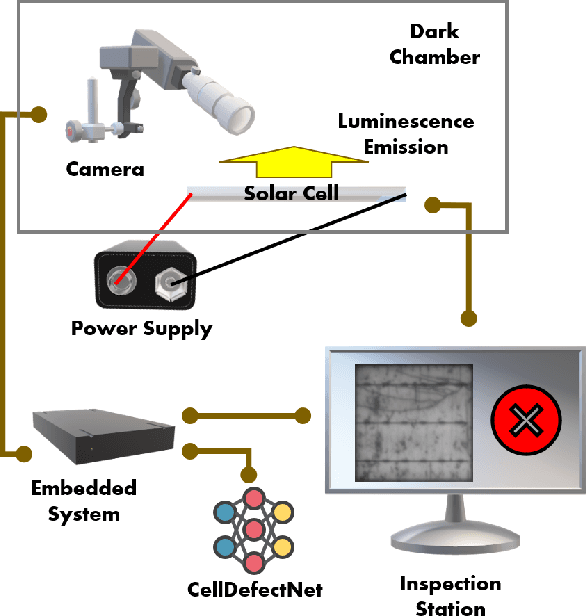

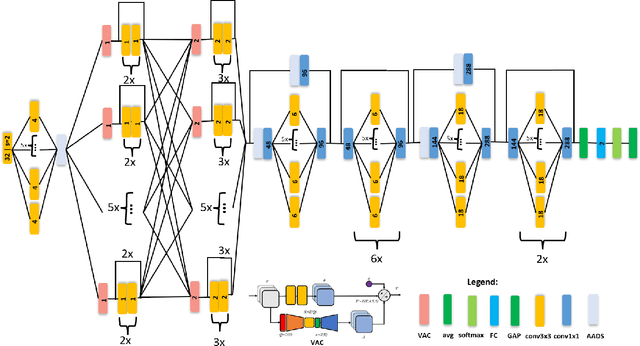

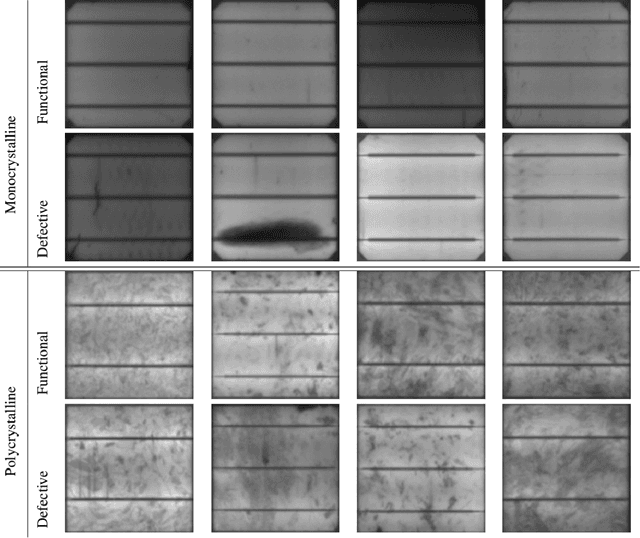

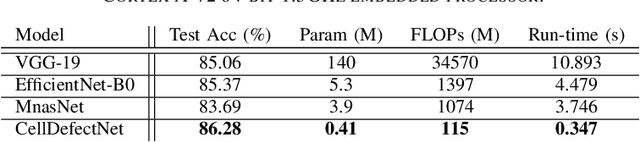

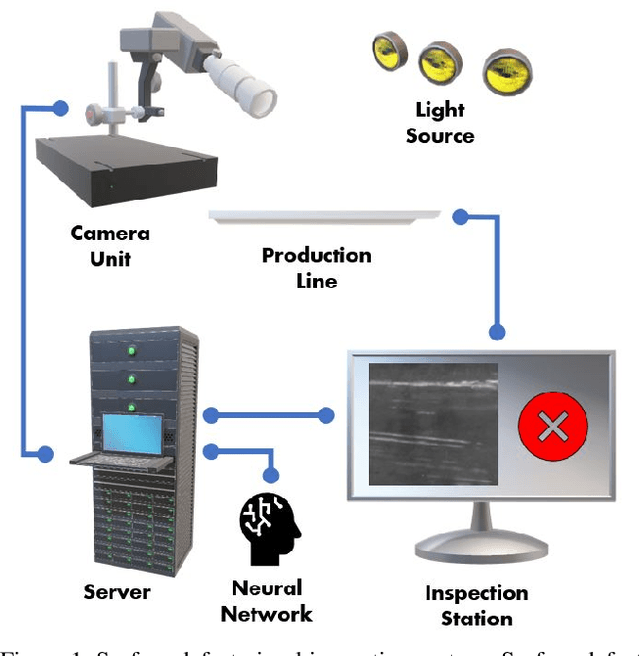

Abstract: Photovoltaic cells are electronic devices that convert light energy to electricity, forming the backbone of solar energy harvesting systems. An essential step in the manufacturing process for photovoltaic cells is visual quality inspection using electroluminescence imaging to identify defects such as cracks, finger interruptions, and broken cells. A major challenge faced by industry in photovoltaic cell visual inspection is that it is currently performed manually by human inspectors, which is extremely time-consuming, laborious, and prone to human error. While deep learning approaches hold great potential for automating this inspection, the hardware resource-constrained manufacturing scenario makes it challenging to deploy complex deep neural network architectures. In this work, we introduce CellDefectNet, a highly efficient attention condenser network designed via machine-driven design exploration specifically for electroluminescence-based photovoltaic cell defect detection on the edge. We demonstrate the efficacy of CellDefectNet on a benchmark dataset comprising a diverse set of photovoltaic cells captured using electroluminescence imaging, achieving an accuracy of ~86.3% while possessing just 410K parameters (~13$\times$ lower than EfficientNet-B0) and ~115M FLOPs (~12$\times$ lower than EfficientNet-B0), and running ~13$\times$ faster than EfficientNet-B0 on an ARM Cortex-A72 embedded processor.

LightDefectNet: A Highly Compact Deep Anti-Aliased Attention Condenser Neural Network Architecture for Light Guide Plate Surface Defect Detection

Apr 25, 2022

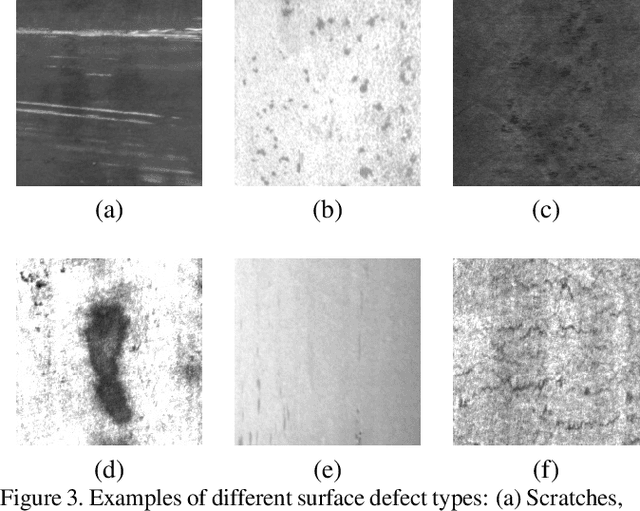

Abstract: Light guide plates are essential optical components widely used in applications ranging from medical lighting fixtures to back-lit TV displays. An essential step in the manufacturing of light guide plates is quality inspection for defects such as scratches, bright/dark spots, and impurities. In industry, this is mainly done through manual visual inspection for plate pattern irregularities, which is time-consuming and prone to human error and thus acts as a significant barrier to high-throughput production. Advances in deep learning-driven computer vision have led to the exploration of automated visual quality inspection of light guide plates to improve inspection consistency, accuracy, and efficiency. However, given the cost constraints in visual inspection scenarios, the widespread adoption of deep learning-driven computer vision methods for inspecting light guide plates has been greatly limited by their high computational requirements. In this study, we explore the utilization of machine-driven design exploration with computational and "best-practices" constraints as well as L$_1$ paired classification discrepancy loss to create LightDefectNet, a highly compact deep anti-aliased attention condenser neural network architecture tailored specifically for light guide plate surface defect detection in resource-constrained scenarios. Experiments show that LightDefectNet achieves a detection accuracy of $\sim$98.2% on the LGPSDD benchmark while having just 770K parameters ($\sim$33$\times$ and $\sim$6.9$\times$ lower than ResNet-50 and EfficientNet-B0, respectively) and $\sim$93M FLOPs ($\sim$88$\times$ and $\sim$8.4$\times$ lower than ResNet-50 and EfficientNet-B0, respectively), along with $\sim$8.8$\times$ faster inference than EfficientNet-B0 on an embedded ARM processor.
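The reduction factors quoted in this abstract can be read backwards to see roughly how large the baselines are under the authors' counting. The short Python sketch below is only an illustration: it multiplies the reported LightDefectNet figures by the reported factors. The function and variable names are hypothetical, and because the factors are rounded in the abstract, the results are approximate rather than the exact baseline sizes.

```python
# Figures reported in the abstract for LightDefectNet.
LIGHTDEFECTNET_PARAMS = 770_000      # "just 770K parameters"
LIGHTDEFECTNET_FLOPS = 93_000_000    # "~93M FLOPs"

# Reduction factors reported in the abstract (rounded, so outputs are approximate).
REPORTED_FACTORS = {
    "ResNet-50": {"params": 33.0, "flops": 88.0},
    "EfficientNet-B0": {"params": 6.9, "flops": 8.4},
}


def implied_baseline(compact_value: float, reduction_factor: float) -> float:
    """Return the baseline size implied by an 'N times lower' claim."""
    return compact_value * reduction_factor


def implied_baselines() -> dict:
    """Compute the implied parameter and FLOP counts for each baseline."""
    results = {}
    for name, factors in REPORTED_FACTORS.items():
        results[name] = {
            "params": implied_baseline(LIGHTDEFECTNET_PARAMS, factors["params"]),
            "flops": implied_baseline(LIGHTDEFECTNET_FLOPS, factors["flops"]),
        }
    return results


if __name__ == "__main__":
    for name, sizes in implied_baselines().items():
        print(
            f"{name}: ~{sizes['params'] / 1e6:.1f}M parameters, "
            f"~{sizes['flops'] / 1e9:.2f}G FLOPs (implied)"
        )
```

Running the script prints one line per baseline. The implied values are only as precise as the rounded factors in the abstract.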

TinyDefectNet: Highly Compact Deep Neural Network Architecture for High-Throughput Manufacturing Visual Quality Inspection

Nov 29, 2021

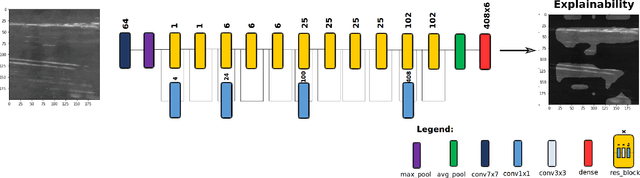

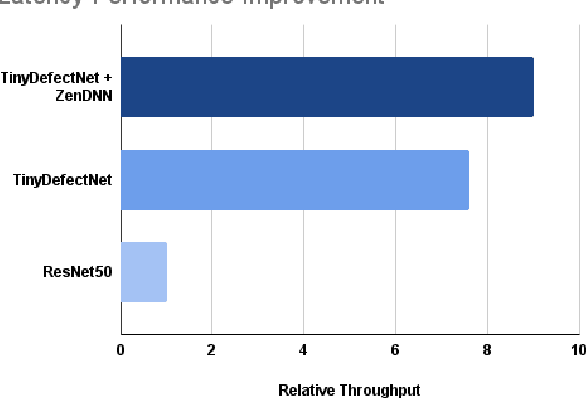

Abstract: A critical aspect of the manufacturing process is the visual quality inspection of manufactured components for defects and flaws. Human-only visual inspection can be very time-consuming and laborious, and is a significant bottleneck, especially in high-throughput manufacturing scenarios. Given significant advances in the field of deep learning, automated visual quality inspection can lead to highly efficient and reliable detection of defects and flaws during the manufacturing process. However, deep learning-driven visual inspection methods often necessitate significant computational resources, thus limiting throughput and acting as a bottleneck to widespread adoption for enabling smart factories. In this study, we investigated the utilization of a machine-driven design exploration approach to create TinyDefectNet, a highly compact deep convolutional network architecture tailored for high-throughput manufacturing visual quality inspection. TinyDefectNet comprises just ~427K parameters and has a computational complexity of ~97M FLOPs, yet achieves the detection accuracy of a state-of-the-art architecture for the task of surface defect detection on the NEU defect benchmark dataset. As such, TinyDefectNet can achieve the same level of detection performance at 52$\times$ lower architectural complexity and 11$\times$ lower computational complexity. Furthermore, TinyDefectNet was deployed on an AMD EPYC 7R32, and achieved 7.6$\times$ faster throughput using the native TensorFlow environment and 9$\times$ faster throughput using the AMD ZenDNN accelerator library. Finally, an explainability-driven performance validation strategy was conducted to ensure correct decision-making behaviour was exhibited by TinyDefectNet to improve trust in its usage by operators and inspectors.
