Garrett B. Goh

Multiple-objective Reinforcement Learning for Inverse Design and Identification

Oct 09, 2019

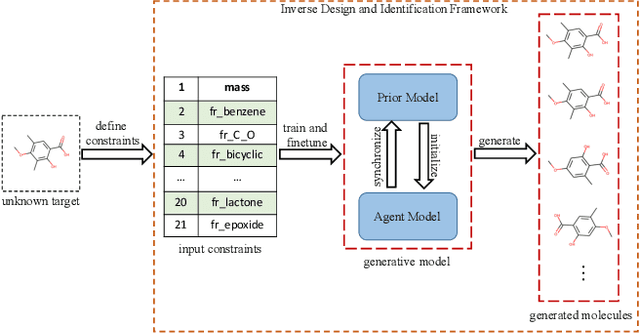

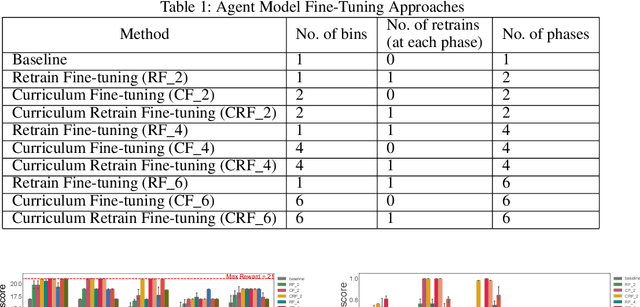

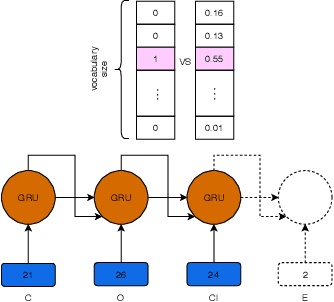

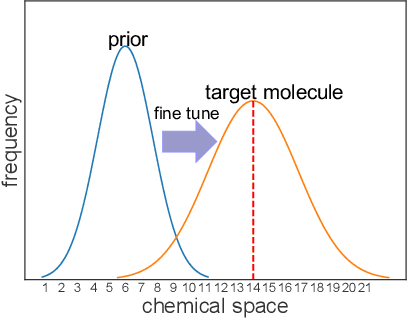

Abstract: The aim of inverse chemical design is to develop new molecules with given optimized molecular properties or objectives. Recently, generative deep learning (DL) networks have come to be considered the state of the art in inverse chemical design and have achieved early success in generating molecular structures with desired properties in the pharmaceutical and materials chemistry fields. However, satisfying a large number of molecular objectives (more than 10) is a limitation of current generative models. To improve the model's ability to handle a large number of molecular design objectives, we developed a reinforcement learning (RL) based generative framework to optimize molecule generation. Our use of curriculum learning (CL) to fine-tune the pre-trained generative network allowed the model to satisfy up to 21 objectives and increased the generative network's robustness. The experiments show that the proposed multiple-objective RL-based generative model can correctly identify unknown molecules with an 83 to 100 percent success rate, compared to 0 percent for the baseline approach. Additionally, the proposed generative model is not limited to chemistry research challenges; we anticipate that problems that use RL with multiple objectives will benefit from this framework.
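
As a rough illustration of the idea in this abstract, the sketch below scores a candidate molecule against several property objectives and exposes a curriculum that activates the objectives in growing batches. It is not the paper's implementation: the reward definition (fraction of objectives met within a tolerance), the property names, and the helpers `multi_objective_reward` and `curriculum_stages` are all assumptions made for illustration.

```python
from typing import Dict, Iterator, List, Tuple

# Hypothetical objective format: (property name, target value, tolerance).
Objective = Tuple[str, float, float]


def multi_objective_reward(properties: Dict[str, float],
                           objectives: List[Objective]) -> float:
    """Return the fraction of objectives whose property is within tolerance.

    This scalarization is an assumption for illustration; the abstract does
    not specify how the objectives are combined into a reward.
    """
    if not objectives:
        return 0.0
    hits = 0
    for name, target, tolerance in objectives:
        value = properties.get(name)
        # A missing property counts as an unmet objective.
        if value is not None and abs(value - target) <= tolerance:
            hits += 1
    return hits / len(objectives)


def curriculum_stages(objectives: List[Objective],
                      step: int) -> Iterator[List[Objective]]:
    """Yield growing prefixes of the objective list, `step` objectives at a time.

    The final stage always contains every objective.
    """
    if step < 1:
        raise ValueError("step must be at least 1")
    for end in range(step, len(objectives) + step, step):
        yield objectives[:min(end, len(objectives))]
```

In a fine-tuning loop, each stage from `curriculum_stages` would define the reward used for that phase of training, so the generator first learns to satisfy a few objectives before the full set is enforced.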

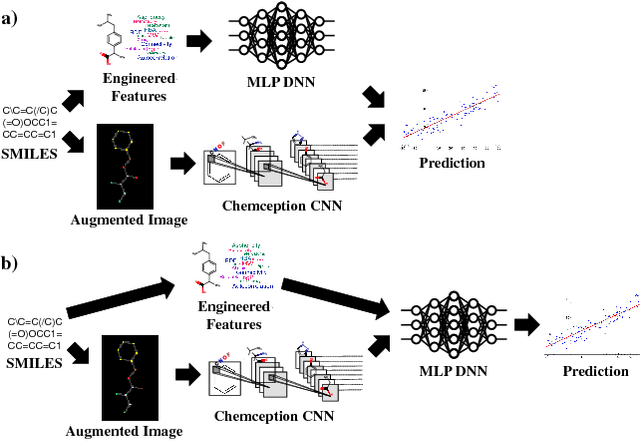

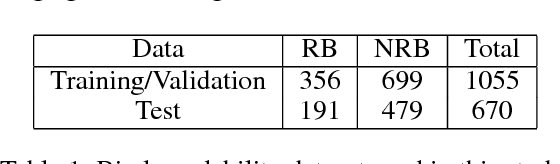

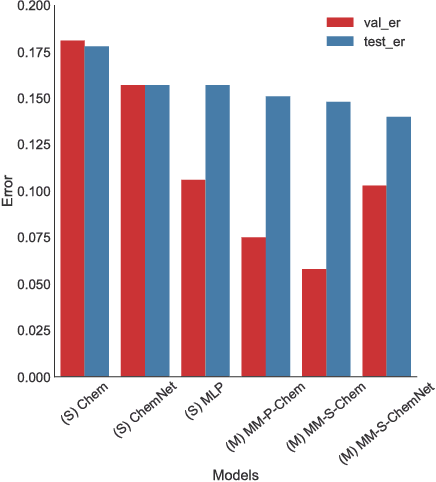

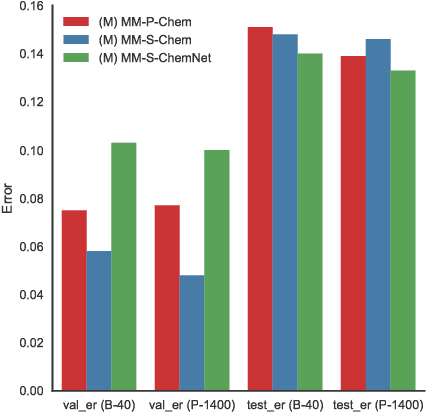

Multimodal Deep Neural Networks using Both Engineered and Learned Representations for Biodegradability Prediction

Sep 13, 2018

Abstract: Deep learning algorithms excel at extracting patterns from raw data, and with large datasets, they have been very successful in computer vision and natural language applications. However, in other domains, large datasets from which to learn representations may not exist. In this work, we develop a novel multimodal CNN-MLP neural network architecture that uses both domain-specific feature engineering and learned representations from raw data. We illustrate the effectiveness of such network designs in the chemical sciences by predicting biodegradability. DeepBioD, a multimodal CNN-MLP network, is more accurate than either standalone network design and achieves a classification error rate of 0.125, which is 27% lower than the current state of the art. Thus, our work indicates that combining traditional feature engineering with representation learning can be effective, particularly in situations where labeled data is limited.

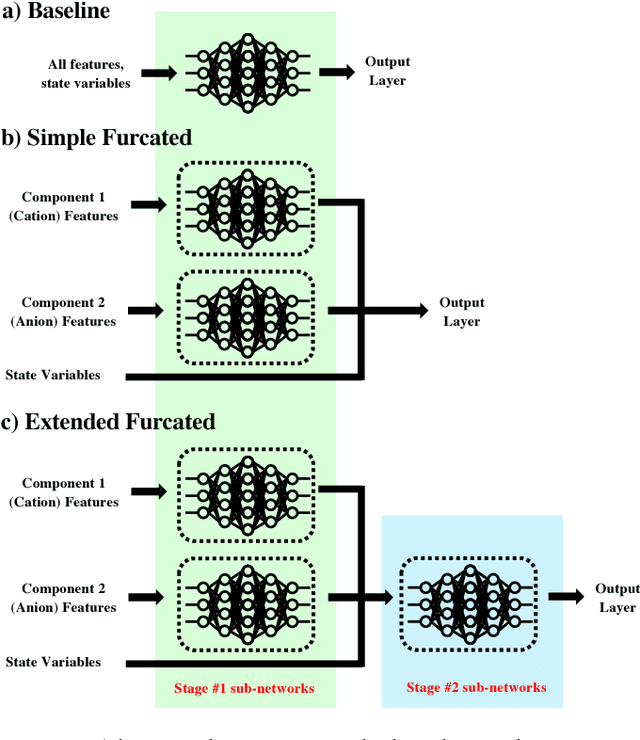

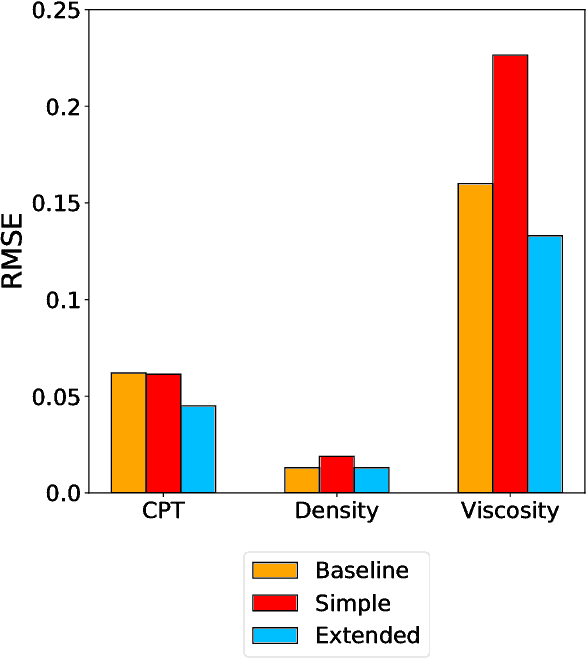

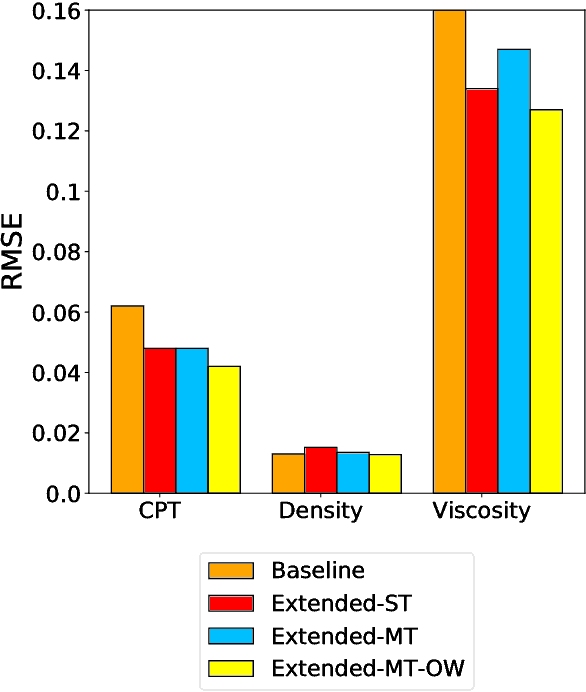

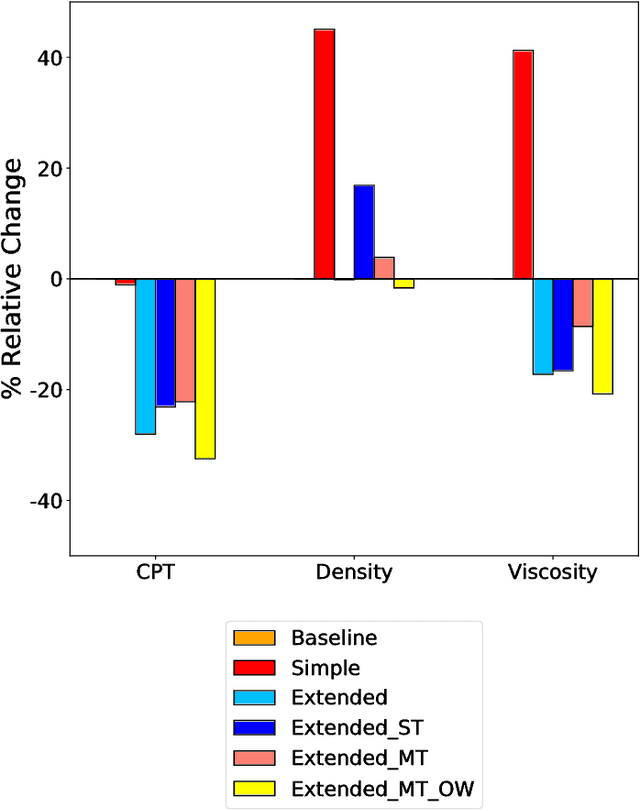

IL-Net: Using Expert Knowledge to Guide the Design of Furcated Neural Networks

Sep 13, 2018

Abstract: Deep neural networks (DNN) excel at extracting patterns. Through representation learning and automated feature engineering on large datasets, such models have been highly successful in computer vision and natural language applications. Designing optimal network architectures from a principled or rational approach, however, has been less successful, with the best approaches using an additional machine learning algorithm to tune the network hyperparameters. Yet in many technical fields, there exists established domain knowledge and understanding about the subject matter. In this work, we develop a novel furcated neural network architecture that uses domain knowledge as high-level design principles of the network. We demonstrate proof of concept by developing IL-Net, a furcated network for predicting the properties of ionic liquids, which are a class of complex multi-chemical entities. Compared to existing state-of-the-art approaches, we show that furcated networks can improve model accuracy by approximately 20-35%, without using additional labeled data. Lastly, we distill two key design principles for furcated networks that can be adapted to other domains.

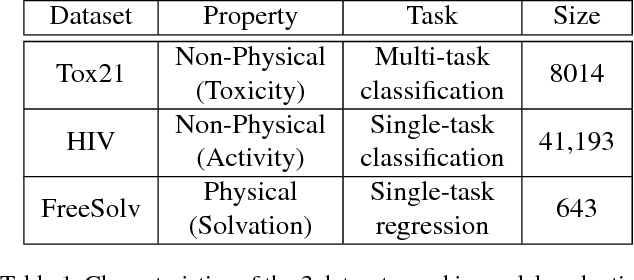

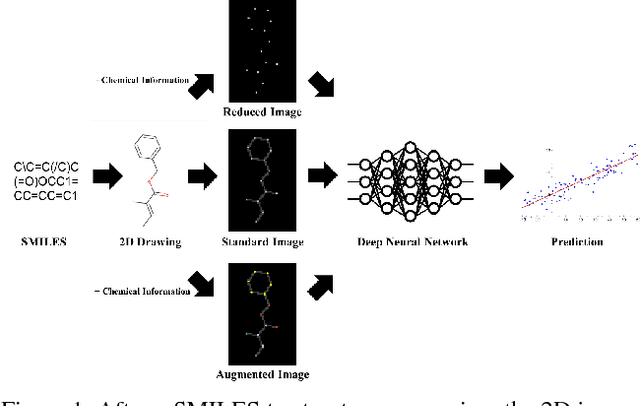

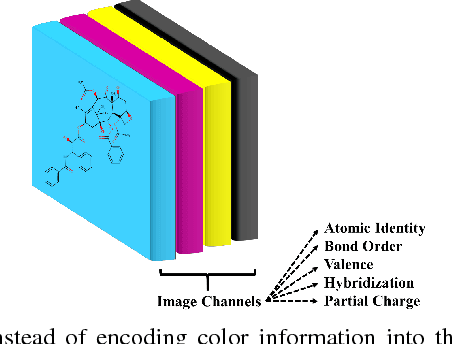

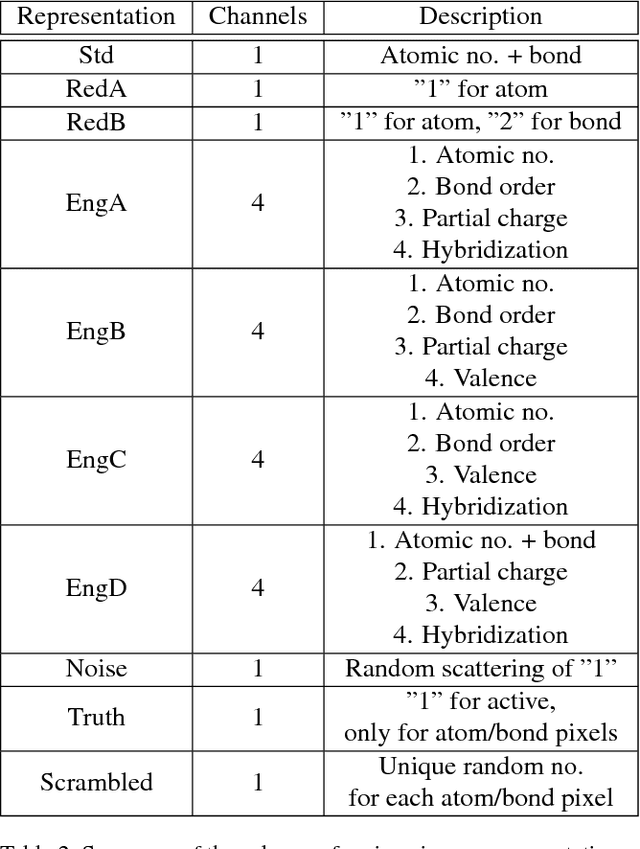

How Much Chemistry Does a Deep Neural Network Need to Know to Make Accurate Predictions?

Mar 18, 2018

Abstract: The meteoric rise of deep learning models in computer vision research, which have achieved human-level accuracy in image recognition tasks, is firm evidence of the impact of representation learning in deep neural networks. In the chemistry domain, recent advances have also led to the development of similar CNN models, such as Chemception, that are trained to predict chemical properties using images of molecular drawings. In this work, we investigate the effects of systematically removing and adding localized domain-specific information to the image channels of the training data. By augmenting images with only three additional basic pieces of information, and without introducing any architectural changes, we demonstrate that an augmented Chemception (AugChemception) outperforms the original model in the prediction of toxicity, activity, and solvation free energy. Then, by altering the information content in the images and examining the resulting model's performance, we also identify two distinct learning patterns in predicting toxicity/activity as compared to solvation free energy. These patterns suggest that Chemception is learning about its tasks in a manner that is consistent with established knowledge. Thus, our work demonstrates that advanced chemical knowledge is not a prerequisite for deep learning models to accurately predict complex chemical properties.

SMILES2Vec: An Interpretable General-Purpose Deep Neural Network for Predicting Chemical Properties

Mar 18, 2018

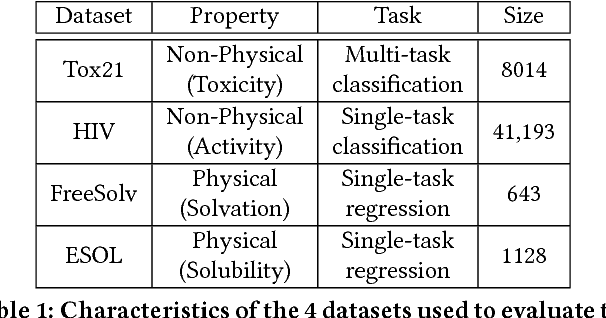

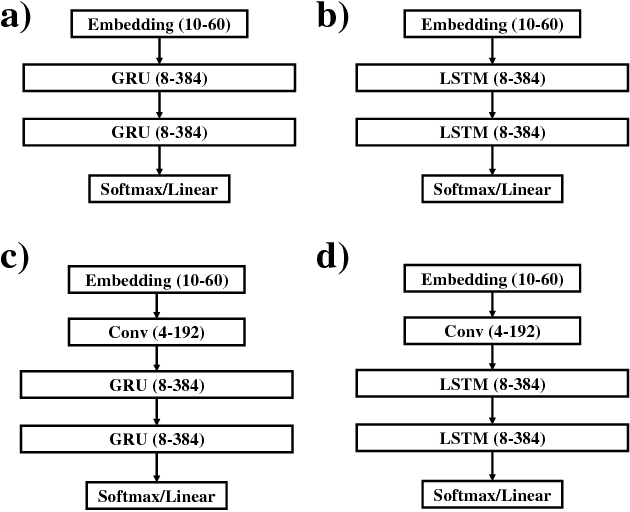

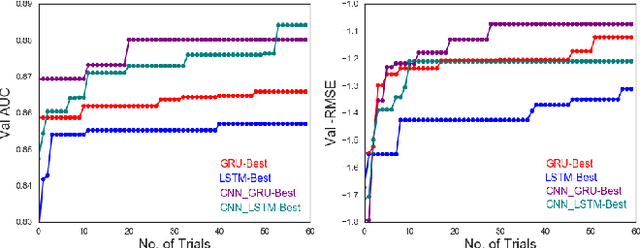

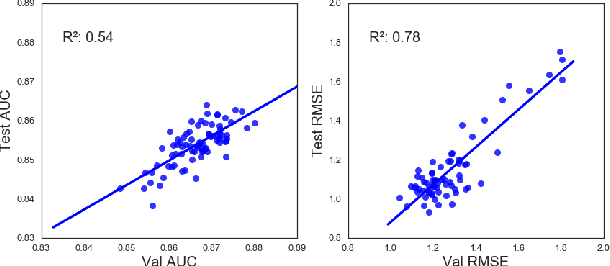

Abstract: Chemical databases store information in text representations, and the SMILES format is a universal standard used in many cheminformatics software packages. Encoded in each SMILES string is structural information that can be used to predict complex chemical properties. In this work, we develop SMILES2vec, a deep RNN that automatically learns features from SMILES to predict chemical properties, without the need for additional explicit feature engineering. Using Bayesian optimization methods to tune the network architecture, we show that an optimized SMILES2vec model can serve as a general-purpose neural network for predicting distinct chemical properties, including toxicity, activity, solubility, and solvation energy, while also outperforming contemporary MLP neural networks that use engineered features. Furthermore, we demonstrate proof of concept of interpretability by developing an explanation mask that localizes on the most important characters used in making a prediction. When tested on the solubility dataset, it identified specific parts of a chemical that are consistent with established first-principles knowledge, with an accuracy of 88%. Our work demonstrates that neural networks can learn technically accurate chemical concepts and provide state-of-the-art accuracy, making interpretable deep neural networks a useful tool of relevance to the chemical industry.
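
To make the "learns features from SMILES" step concrete, the sketch below shows one common way to turn SMILES strings into fixed-length integer sequences that an RNN embedding layer could consume. It is a minimal illustration, not the SMILES2vec preprocessing itself: the token pattern (bracket atoms and the two-letter halogens `Cl` and `Br` kept whole, everything else split per character), the reserved ids 0 (padding) and 1 (unknown), and the helper names are assumptions.

```python
import re
from typing import Dict, List

# Assumed token pattern: bracket atoms, then two-letter halogens, then any
# single character. Real SMILES grammars are richer than this.
_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|.")

PAD_ID = 0  # reserved for padding
UNK_ID = 1  # reserved for tokens not seen when the vocabulary was built


def tokenize(smiles: str) -> List[str]:
    """Split a SMILES string into tokens using the simplified pattern above."""
    return _TOKEN.findall(smiles)


def build_vocab(corpus: List[str]) -> Dict[str, int]:
    """Map each distinct token in the corpus to an integer id starting at 2."""
    tokens = sorted({tok for smiles in corpus for tok in tokenize(smiles)})
    return {tok: idx + 2 for idx, tok in enumerate(tokens)}


def encode(smiles: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Encode a SMILES string as ids, truncated or zero-padded to max_len."""
    ids = [vocab.get(tok, UNK_ID) for tok in tokenize(smiles)][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))
```

Because each position in the encoded sequence corresponds to one token of the input string, a per-position importance score, such as the explanation mask described in the abstract, can be mapped back onto the original SMILES characters.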

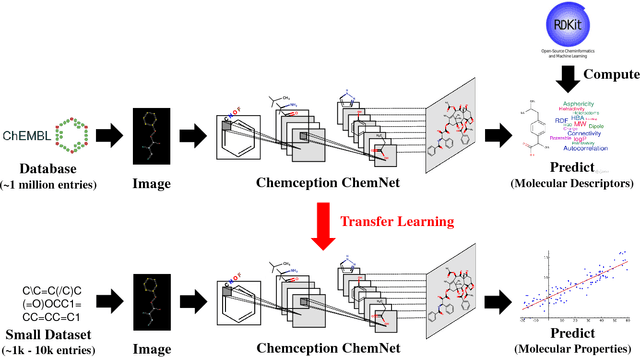

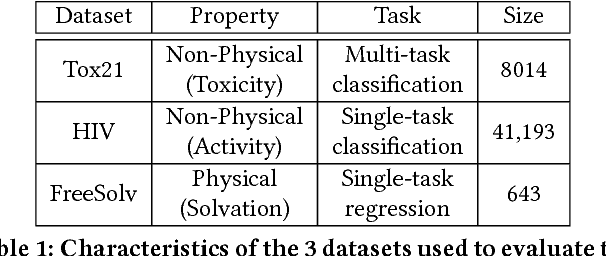

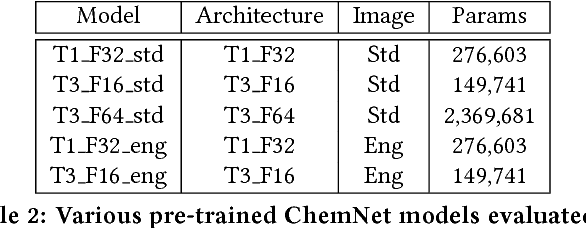

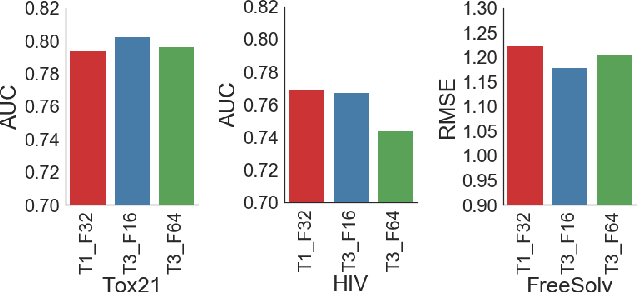

Using Rule-Based Labels for Weak Supervised Learning: A ChemNet for Transferable Chemical Property Prediction

Mar 18, 2018

Abstract: With access to large datasets, deep neural networks (DNN) have achieved human-level accuracy in image and speech recognition tasks. However, in chemistry, data is inherently small and fragmented. In this work, we develop an approach that uses rule-based knowledge to train ChemNet, a transferable and generalizable deep neural network for chemical property prediction that learns in a weakly supervised manner from large unlabeled chemical databases. When coupled with transfer learning approaches to make predictions on other, smaller datasets for chemical properties that it was not originally trained on, we show that ChemNet outperforms contemporary DNN models that were trained using conventional supervised learning. Furthermore, we demonstrate that the ChemNet pre-training approach is equally effective on both CNN (Chemception) and RNN (SMILES2vec) models, indicating that this approach is network architecture agnostic and is effective across multiple data modalities. Our results indicate that a pre-trained ChemNet that incorporates chemistry domain knowledge enables the development of generalizable neural networks for more accurate prediction of novel chemical properties.

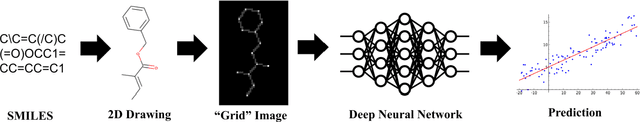

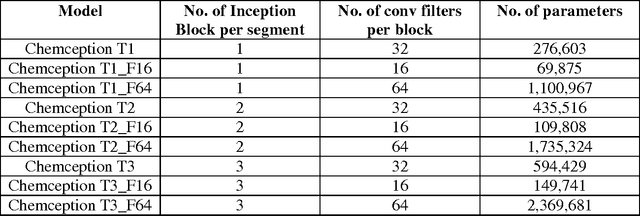

Chemception: A Deep Neural Network with Minimal Chemistry Knowledge Matches the Performance of Expert-developed QSAR/QSPR Models

Jun 20, 2017

Abstract: In the last few years, we have seen the transformative impact of deep learning in many applications, particularly in speech recognition and computer vision. Inspired by Google's Inception-ResNet deep convolutional neural network (CNN) for image classification, we have developed "Chemception", a deep CNN for the prediction of chemical properties, using just the images of 2D drawings of molecules. We develop Chemception without providing any additional explicit chemistry knowledge, such as basic concepts like periodicity, or advanced features like molecular descriptors and fingerprints. We then show how Chemception can serve as a general-purpose neural network architecture for predicting toxicity, activity, and solvation properties when trained on a modest database of 600 to 40,000 compounds. When compared to multi-layer perceptron (MLP) deep neural networks trained with ECFP fingerprints, Chemception slightly outperforms them in activity and solvation prediction and slightly underperforms them in toxicity prediction. Having matched the performance of expert-developed QSAR/QSPR deep learning models, our work demonstrates the plausibility of using deep neural networks to assist in computational chemistry research, where the feature engineering process is performed primarily by a deep learning algorithm.

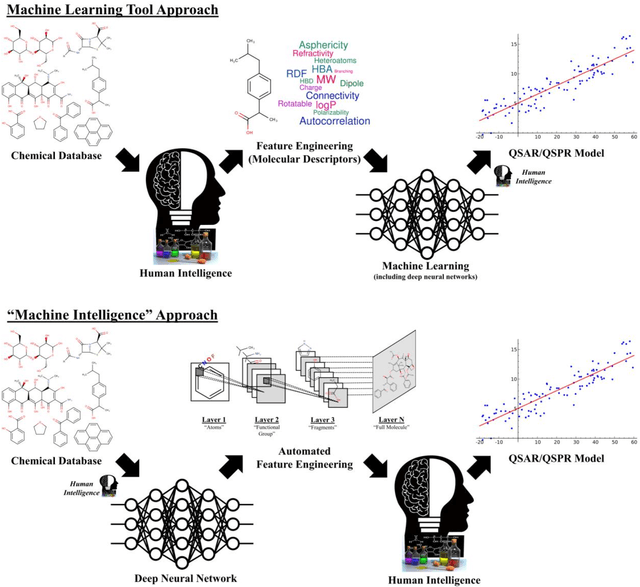

Deep Learning for Computational Chemistry

Jan 17, 2017

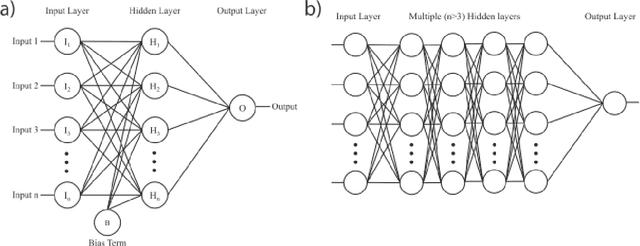

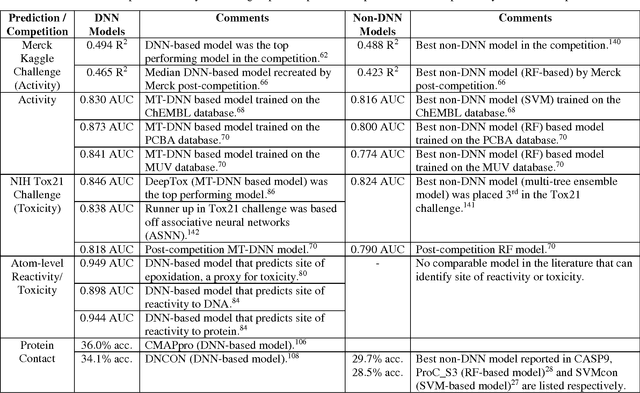

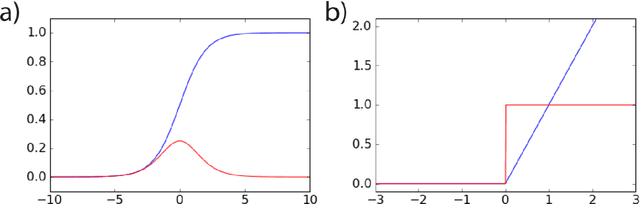

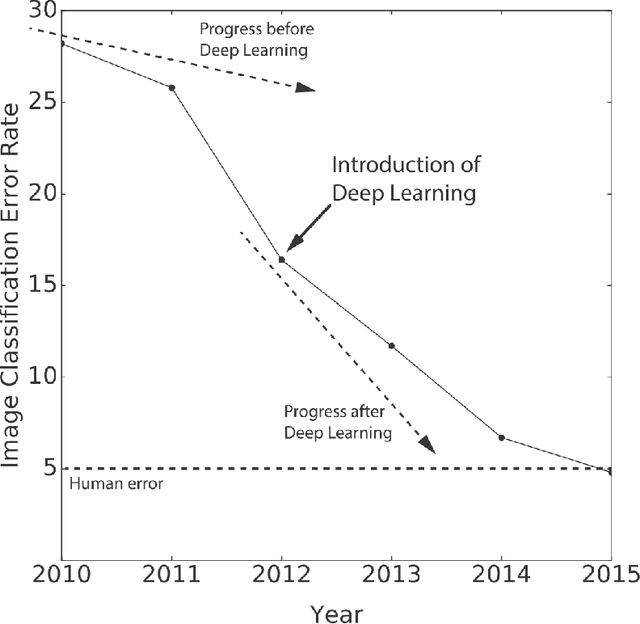

Abstract: The rise and fall of artificial neural networks is well documented in the scientific literature of both computer science and computational chemistry. Yet almost two decades later, we are now seeing a resurgence of interest in deep learning, a machine learning algorithm based on multilayer neural networks. Within the last few years, we have seen the transformative impact of deep learning in many domains, particularly in speech recognition and computer vision, to the extent that the majority of expert practitioners in those fields are now regularly eschewing prior established models in favor of deep learning models. In this review, we provide an introductory overview of the theory of deep neural networks and the unique properties that distinguish them from traditional machine learning algorithms used in cheminformatics. By providing an overview of the variety of emerging applications of deep neural networks, we highlight their ubiquity and broad applicability to a wide range of challenges in the field, including QSAR, virtual screening, protein structure prediction, quantum chemistry, materials design, and property prediction. In reviewing the performance of deep neural networks, we observed that they consistently outperformed state-of-the-art non-neural-network models across disparate research topics, and deep neural network based models often exceeded the "glass ceiling" expectations of their respective tasks. Coupled with the maturity of GPU-accelerated computing for training deep neural networks and the exponential growth of chemical data on which to train these networks, we anticipate that deep learning algorithms will be a valuable tool for computational chemistry.
