How Much Chemistry Does a Deep Neural Network Need to Know to Make Accurate Predictions?

Mar 18, 2018

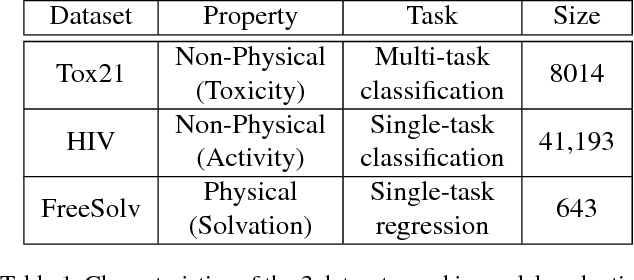

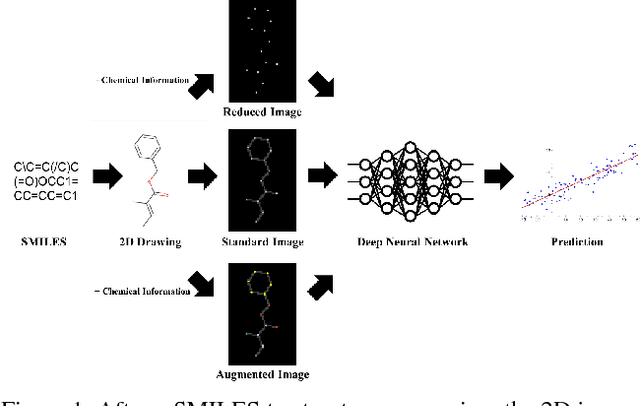

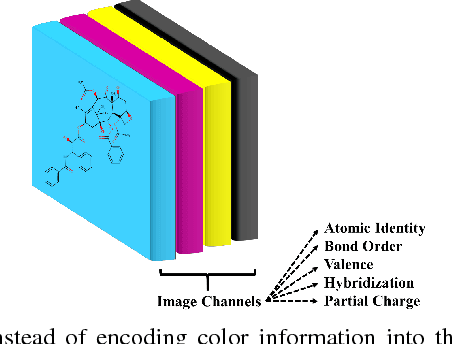

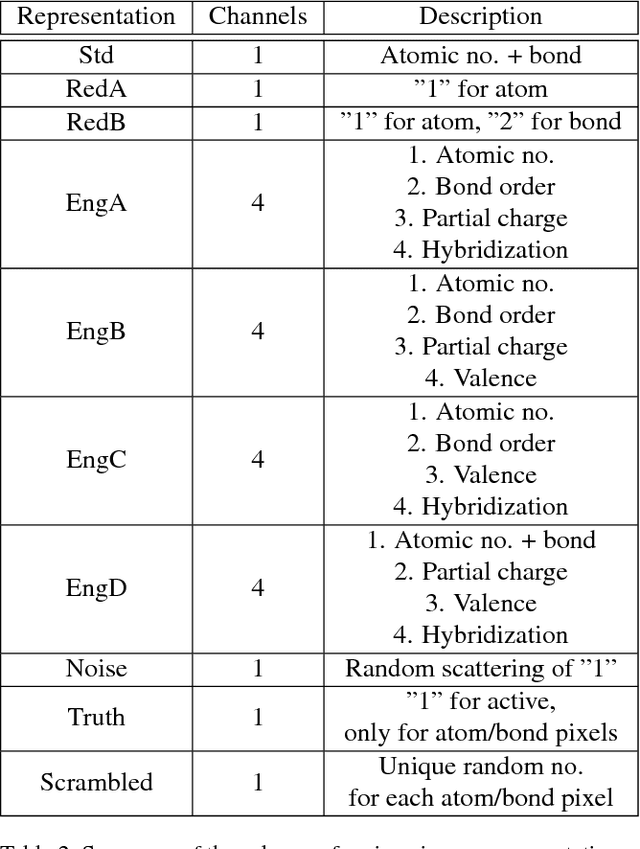

The meteoric rise of deep learning models in computer vision research, which have achieved human-level accuracy in image recognition tasks, is firm evidence of the impact of representation learning in deep neural networks. In the chemistry domain, recent advances have led to the development of similar CNN models, such as Chemception, which is trained to predict chemical properties using images of molecular drawings. In this work, we investigate the effects of systematically removing and adding localized domain-specific information to the image channels of the training data. By augmenting the images with only three additional pieces of basic information, and without introducing any architectural changes, we demonstrate that an augmented Chemception (AugChemception) outperforms the original model in the prediction of toxicity, activity, and solvation free energy. Then, by altering the information content of the images and examining the resulting model's performance, we identify two distinct learning patterns: one for predicting toxicity and activity, and another for predicting solvation free energy. These patterns suggest that Chemception learns its tasks in a manner that is consistent with established knowledge. Thus, our work demonstrates that advanced chemical knowledge is not a prerequisite for deep learning models to accurately predict complex chemical properties.
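To make the idea of adding localized information to image channels concrete, the following is a minimal sketch and not the authors' implementation. It assumes a molecule is given as 2D drawing coordinates plus per-atom values, and it writes each atom's values into the pixel where that atom is drawn. The function name `atoms_to_image`, the grid size, the coordinate range, and all numeric values are hypothetical. The abstract does not say which three properties were added, so the extra channels here are placeholders.

```python
import numpy as np


def atoms_to_image(coords, channel_values, size=17, extent=4.0):
    """Rasterize per-atom values into a (size, size, n_channels) image.

    coords: (n_atoms, 2) x/y drawing coordinates within [-extent, extent].
    channel_values: (n_atoms, n_channels) per-atom values, one column per channel.
    All names and defaults are illustrative assumptions.
    """
    coords = np.asarray(coords, dtype=float)
    channel_values = np.asarray(channel_values, dtype=float)
    image = np.zeros((size, size, channel_values.shape[1]))

    # Map drawing coordinates onto integer pixel indices.
    scaled = (coords + extent) / (2 * extent) * (size - 1)
    pixels = np.clip(np.rint(scaled).astype(int), 0, size - 1)

    # The information is localized: only the pixel under each atom is written.
    for (col, row), values in zip(pixels, channel_values):
        image[row, col, :] = values
    return image


# Hypothetical three-atom fragment; every number below is a placeholder.
coords = [[0.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
base = np.array([[8.0], [1.0], [1.0]])   # one base channel (e.g. atomic number)
extra = np.array([[-0.8, 2.0, 2.0],      # three extra placeholder channels
                  [0.4, 1.0, 1.0],
                  [0.4, 1.0, 1.0]])

base_image = atoms_to_image(coords, base)
augmented_image = atoms_to_image(coords, np.hstack([base, extra]))
```

Because the extra information lives only in additional channels, the same network architecture can consume either image once its first layer accepts the new channel count. Zeroing out a channel is one simple way to study the effect of removing that information.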
